1. Article purpose

This article gives general information about stai_mpu, the unified AI API for STM32MPUs.

2. Description

2.1. What is the stai_mpu API?

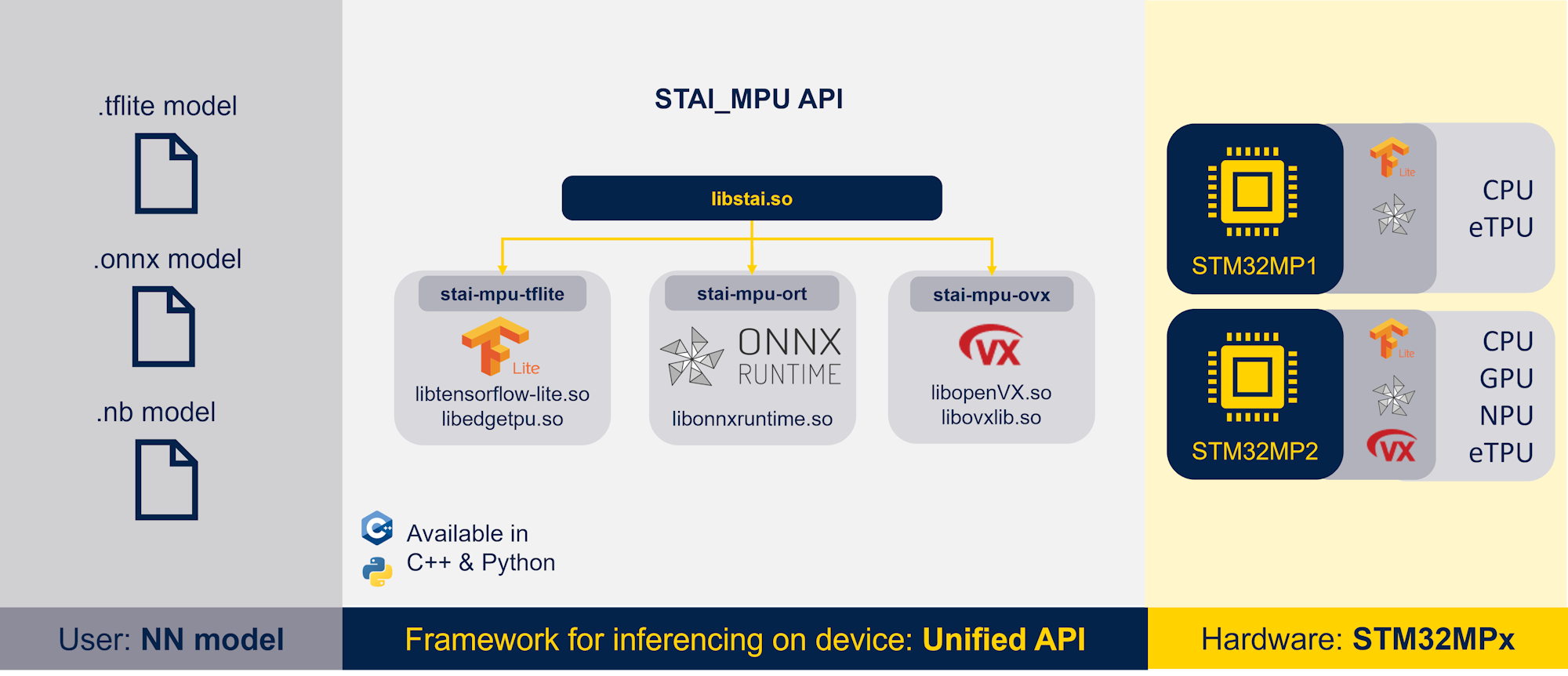

The stai_mpu API is a neural network inference API that works across STM32MPU platforms. Its flexible interface runs deep learning models in several formats: TensorFlow™ Lite[1], ONNX[2], and Network Binary Graph (NBG).

The API runs neural network models on all STM32MPU series and offers a single interface, so machine learning applications can be ported from one series to another with the same API calls.

It supports the C++ and Python languages and is integrated into the X-LINUX-AI package.

The functionalities of the API are summarized in the picture below:

2.2. How does it work?

This API provides a high-level interface for loading a model, getting input and output tensor metadata, setting input tensors to feed the network with the data to infer, and getting output tensors by reading raw data from the output layers.

The working principle is simple. First, choose a neural network model in one of the supported formats: ONNX, TFLite™, or Network Binary Graph (NBG).

Then use the stai_mpu API in an application to load the model and run its inference. In the background, the API automatically loads the framework that matches the model given by the user, so the same stai_mpu functions can be used for a model from any of the supported frameworks.

Model loading, input and output retrieval, and inference launch are unified in a single stai_mpu API, which abstracts away the framework the model originates from.
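The automatic framework selection can be pictured as a lookup from the model file to a backend. The following Python sketch is only an illustration of that principle, not the library's internal code: the function name detect_framework and the table FRAMEWORK_BY_EXTENSION are hypothetical, and the ".nb" extension for NBG models is an assumption.

```python
from pathlib import Path

# Hypothetical mapping from model file extension to the framework that runs it.
# Assumption: NBG models use the ".nb" extension.
FRAMEWORK_BY_EXTENSION = {
    ".tflite": "TFLite",
    ".onnx": "ONNX",
    ".nb": "NBG",
}


def detect_framework(model_path: str) -> str:
    """Return the framework name associated with the model file extension."""
    suffix = Path(model_path).suffix.lower()
    try:
        return FRAMEWORK_BY_EXTENSION[suffix]
    except KeyError:
        raise ValueError(f"unsupported model format: {suffix or '<none>'}") from None


if __name__ == "__main__":
    for path in ["mobilenet.tflite", "yolo.onnx", "model.nb"]:
        print(path, "->", detect_framework(path))
```

Keying on the extension keeps the application code identical for every format: only the file path changes.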

3. In detail

This part describes the most important functions of the API. These functions let the user set the inputs of the neural network model, run the inference, and get the outputs.

3.1. Main functions

First, a stai_mpu_network must be declared. It takes as argument the path to a TFLite™, ONNX, or NBG neural network model. Once it is declared, the functions below can be called.

The functions available on a declared stai_mpu_network are as follows:

| Function | Description |
|---|---|
| get_num_inputs() | Returns the number of inputs of the NN model. |
| get_num_outputs() | Returns the number of outputs of the NN model. |
| get_input_infos() | Returns a vector of stai_mpu_tensor describing the NN model's input layers. |
| get_output_infos() | Returns a vector of stai_mpu_tensor describing the NN model's output layers. |
| set_input(index, input_data) | Sets input_data as the input at the specified index. |
| get_output(index) | Returns the data of the output tensor at the specified index as a void* pointer. |
| run() | Runs an inference on the stai_mpu_network with the framework associated with the model extension. |

To run an inference with your NN model, set up the input with the “set_input” function, launch the inference with the “run” function, and finally retrieve the outputs with the “get_output” function. The outputs then need to be post-processed.
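The call order can be sketched in Python with a stand-in object. FakeNetwork below is hypothetical: it only mirrors the method names listed in the table so that the sequence set_input, run, get_output, then post-processing can be run without a board. It is not the real stai_mpu_network class, and its fixed scores replace a real inference. The top-1 post-processing step is an assumed example for a classification model.

```python
from typing import List, Sequence


class FakeNetwork:
    """Hypothetical stand-in for stai_mpu_network, used only to show the call order.

    It mirrors method names from the table above, but its "inference"
    simply produces three fixed class scores.
    """

    def __init__(self, model_path: str) -> None:
        self.model_path = model_path
        self._inputs: List[Sequence[float]] = [[]]
        self._outputs: List[List[float]] = [[]]

    def get_num_inputs(self) -> int:
        return len(self._inputs)

    def get_num_outputs(self) -> int:
        return len(self._outputs)

    def set_input(self, index: int, input_data: Sequence[float]) -> None:
        self._inputs[index] = input_data

    def run(self) -> None:
        # Placeholder for a real inference: fixed scores for three classes.
        self._outputs[0] = [0.1, 0.7, 0.2]

    def get_output(self, index: int) -> List[float]:
        return self._outputs[index]


def classify(net: FakeNetwork, input_data: Sequence[float]) -> int:
    """Feed the input, run the inference, read the output, then post-process."""
    net.set_input(0, input_data)
    net.run()
    scores = net.get_output(0)
    # Post-processing (assumed classifier): index of the highest score.
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    net = FakeNetwork("model.tflite")
    print(classify(net, [0.0] * 4))  # prints 1
```

With the real API, the post-processing step depends on the model (classification, detection, segmentation) and on the output tensor metadata returned by get_output_infos().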

3.2. Package split

The stai_mpu API is split into multiple packages. To run a model with a specific framework, the stai-mpu package corresponding to that framework must be installed. If the package is not installed, the API detects it automatically and suggests installing it with an apt-get install command.

Here is the list of stai_mpu API packages that can be installed:

- The OpenVX™ part is available in the stai-mpu-ovx package and allows the user to run NBG models with the API.

- The TFLite™ part is available in the stai-mpu-tflite package and allows the user to run TFLite™ models with the API.

- The ONNX part is available in the stai-mpu-onnx package and allows the user to run ONNX models with the API.
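The relationship between frameworks and packages listed above can be expressed as a small lookup. This Python sketch is illustrative only: required_package and install_hint are hypothetical helpers, the set of installed packages is passed in rather than queried from the system, and the exact message printed by the real API is not reproduced here.

```python
from typing import Iterable, Optional

# Package names taken from the list above.
PACKAGE_BY_FRAMEWORK = {
    "NBG": "stai-mpu-ovx",
    "TFLite": "stai-mpu-tflite",
    "ONNX": "stai-mpu-onnx",
}


def required_package(framework: str) -> str:
    """Return the stai-mpu package needed to run models of this framework."""
    return PACKAGE_BY_FRAMEWORK[framework]


def install_hint(framework: str, installed: Iterable[str]) -> Optional[str]:
    """Return an apt-get suggestion if the package is missing, else None.

    `installed` is a hypothetical list of already installed package names.
    """
    package = required_package(framework)
    if package in set(installed):
        return None
    return f"apt-get install {package}"


if __name__ == "__main__":
    print(install_hint("NBG", ["stai-mpu-tflite"]))  # prints apt-get install stai-mpu-ovx
```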

3.3. Usage on STM32MPUs

This API is available in Python and C++. The Python API is built on top of the C++ API, using pybind11[3] to generate Python bindings from the C++ code.

The API is forward and backward compatible with all STM32MPUs. When the API is used in a C++ or Python application, it automatically detects the AI framework that must be used to run the NN model.

- For STM32MP2x with an AI hardware accelerator:

The API handles GPU and NPU hardware acceleration when an NBG model is used. The NBG model can be generated from your quantized TFLite™ or ONNX model using ST Edge AI; follow this article to convert your model to NBG format.

TFLite™ and ONNX models run on the CPU.

To install the OpenVX™ part of the API, which runs NBG models, use the following command:

x-linux-ai -i stai-mpu-ovx

To install the TFLite™ and ONNX parts of the API, use these commands:

x-linux-ai -i stai-mpu-tflite
x-linux-ai -i stai-mpu-onnx

- For STM32MPUs without an AI hardware accelerator:

The API does not support NBG models on STM32MP1x boards, and the stai-mpu-ovx package is not available for them.

The API supports TFLite™ and ONNX models, which run on the CPU.

To install the TFLite™ and ONNX parts of the API and run the corresponding models, use these commands:

x-linux-ai -i stai-mpu-tflite
x-linux-ai -i stai-mpu-onnx
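The platform rules described in this section can be summarized as a small decision function. This Python sketch is a hypothetical summary of the text above, not part of the API: execution_target and its has_ai_accelerator flag are illustrative names, and the "GPU/NPU" label simply restates that the API handles GPU and NPU acceleration for NBG models.

```python
def execution_target(has_ai_accelerator: bool, framework: str) -> str:
    """Return where a model runs, following the platform rules above.

    `has_ai_accelerator` is a hypothetical flag: True for an STM32MP2x
    with an AI hardware accelerator, False otherwise (for example STM32MP1x).
    """
    if framework == "NBG":
        if not has_ai_accelerator:
            # NBG models are not supported without an AI hardware accelerator.
            raise ValueError("NBG models are not supported on this platform")
        return "GPU/NPU"
    if framework in ("TFLite", "ONNX"):
        # TFLite and ONNX models run on the CPU on every STM32MPU.
        return "CPU"
    raise ValueError(f"unknown framework: {framework}")


if __name__ == "__main__":
    print(execution_target(True, "NBG"))    # prints GPU/NPU
    print(execution_target(False, "ONNX"))  # prints CPU
```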

4. References