1. Install from the OpenSTLinux AI package repository

All the generated X-LINUX-AI packages are available from the OpenSTLinux AI package repository service hosted at the non-browsable URL http://extra.packages.openstlinux.st.com/AI.

This repository contains AI packages that can be installed with the apt-* utilities, the same ones used on a Debian system. The packages are split into two groups:

- the main group contains the selection of AI packages whose installation is automatically tested by STMicroelectronics

- the updates group is reserved for future use, such as package revision updates.

You can install them individually or by package group.

1.1. Prerequisites

- Flash the Starter Package onto your SD card

- For OpenSTLinux ecosystem release unknown revision 5.0.2.BETA

- Make sure the board has an internet connection, either through a network cable or over Wi-Fi.

1.2. Configure the AI OpenSTLinux package repository

Once the board has booted, run the following commands in the console to configure the AI OpenSTLinux package repository:

For ecosystem release unknown revision 5.0.2.BETA:

- Move to the apt archives directory:

cd /var/cache/apt/archives

- Retrieve the specific package apt-openstlinux-ai_1.0_arm64.debTBD:

wget http://extra.packages.openstlinux.st.com/alpha/stm32mp2/AI/5.0/pool/config/a/apt-openstlinux-ai/TBDapt-openstlinux-ai_1.0_arm64.debTBD

- Install this package:

apt-get install ./apt-openstlinux-ai_1.0_arm64.debTBD

- Then synchronize the AI OpenSTLinux package repository:

apt-get update
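The four steps above can be chained in a small shell function so that a failing step stops the sequence. This is only a sketch: the function name `configure_ai_repo`, the helper `run`, and the `DRY_RUN` switch are hypothetical and not part of X-LINUX-AI, and the package URL is passed in as an argument rather than hard-coded.

```shell
#!/bin/sh
# Sketch: chain the repository configuration steps described above.
# configure_ai_repo, run and DRY_RUN are illustrative names (assumptions).

configure_ai_repo() {
    pkg_url="${1:-}"
    if [ -z "$pkg_url" ]; then
        echo "usage: configure_ai_repo PACKAGE_URL" >&2
        return 1
    fi

    # The downloaded file name is the last path component of the URL.
    pkg_file="${pkg_url##*/}"

    # Helper: print the command when DRY_RUN=1, execute it otherwise.
    run() {
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "$*"
        else
            "$@"
        fi
    }

    # Each step runs only if the previous one succeeded.
    run cd /var/cache/apt/archives &&
    run wget "$pkg_url" &&
    run apt-get install "./$pkg_file" &&
    run apt-get update
}
```

Calling the function with `DRY_RUN=1` and the package URL from the wget step prints the four commands without executing them, which is a convenient way to check the derived file name before touching the board.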

1.3. Install AI packages

1.3.1. Install all X-LINUX-AI packages

| Command | Description |
|---|---|
| apt-get install packagegroup-x-linux-ai | Install all the X-LINUX-AI packages (TensorFlow™ Lite, Edge TPU™, application samples and tools) |

1.3.2. Install X-LINUX-AI packages by framework

| Command | Description |
|---|---|
| apt-get install packagegroup-x-linux-ai-tflite | Install X-LINUX-AI packages related to the TensorFlow™ Lite framework (including application samples) |
| apt-get install packagegroup-x-linux-ai-coral | Install X-LINUX-AI packages related to the Coral Edge TPU™ framework (including application samples) |
| apt-get install packagegroup-x-linux-ai-onnxruntime | Install X-LINUX-AI packages related to ONNX Runtime™ (including application samples) |
| apt-get install packagegroup-x-linux-ai-npu | Install the minimal X-LINUX-AI packages needed to use the OpenVX stack for NPU hardware acceleration (including the NBG benchmark) |

1.3.3. Install individual packages

| Command | Description |
|---|---|
| apt-get install x-linux-ai-tool | Install X-LINUX-AI binary tool |
| apt-get install libedgetpu2 | Install libedgetpu for Coral Edge TPU™ |
| apt-get install libcoral2 | Install libcoral API for Coral Edge TPU™ |
| apt-get install libtensorflow-lite-tools | Install TensorFlow™ Lite utilities |
| apt-get install libtensorflow-lite2 | Install TensorFlow™ Lite runtime |
| apt-get install tflite-vx-delegate | Install TensorFlow™ Lite OpenVX Delegate to enable inference hardware acceleration |
| apt-get install python3-libtensorflow-lite | Install Python TensorFlow™ Lite inference engine |
| apt-get install python3-pycoral | Install Python PyCoral API for Coral Edge TPU™ |
| apt-get install coral-image-classification-cpp | Install C++ image classification example using Coral Edge TPU™ TensorFlow™ Lite API |
| apt-get install coral-image-classification-python | Install Python image classification example using Coral Edge TPU™ TensorFlow™ Lite API |
| apt-get install coral-object-detection-cpp | Install C++ object detection example using Coral Edge TPU™ TensorFlow™ Lite API |
| apt-get install coral-object-detection-python | Install Python object detection example using Coral Edge TPU™ TensorFlow™ Lite API |
| apt-get install tflite-image-classification-cpp | Install C++ image classification example using TensorFlow™ Lite |
| apt-get install tflite-image-classification-python | Install Python image classification example using TensorFlow™ Lite |
| apt-get install tflite-object-detection-cpp | Install C++ object detection example using TensorFlow™ Lite |
| apt-get install tflite-object-detection-python | Install Python object detection example using TensorFlow™ Lite |
| apt-get install tflite-pose-estimation-python | Install Python human pose estimation example using TensorFlow™ Lite |
| apt-get install tflite-semantic-segmentation-python | Install Python semantic segmentation example using TensorFlow™ Lite |
| apt-get install coral-edgetpu-benchmark | Install benchmark application for Coral Edge TPU™ models |
| apt-get install tflite-models-coco-ssd-mobilenetv1 | Install TensorFlow™ Lite COCO SSD Mobilenetv1 model |
| apt-get install coral-models-coco-ssd-mobilenetv1 | Install TensorFlow™ Lite COCO SSD Mobilenetv1 model for Coral Edge TPU™ |
| apt-get install tflite-models-mobilenetv1 | Install TensorFlow™ Lite Mobilenetv1 model |
| apt-get install coral-models-mobilenetv1 | Install TensorFlow™ Lite Mobilenetv1 model for Coral Edge TPU™ |
| apt-get install tflite-models-mobilenetv3 | Install TensorFlow™ Lite Mobilenetv3 model |
| apt-get install tflite-models-movenet-singlepose-lightning | Install TensorFlow™ Lite Movenet Singlepose Lightning model |
| apt-get install tflite-models-deeplabv3 | Install TensorFlow™ Lite Deeplabv3 model |
| apt-get install onnxruntime | Install ONNX Runtime™ |
| apt-get install onnxruntime-tools | Install ONNX Runtime™ utilities |
| apt-get install python3-onnxruntime | Install ONNX Runtime™ Python API |
| apt-get install onnx-image-classification-python | Install Python image classification example using ONNX Runtime™ |
| apt-get install onnx-object-detection-python | Install Python object detection example using ONNX Runtime™ |
| apt-get install onnx-object-detection-cpp | Install C++ object detection example using ONNX Runtime™ |
| apt-get install onnx-models-coco-ssd-mobilenetv1 | Install ONNX Runtime™ COCO SSD Mobilenetv1 model |
| apt-get install onnx-models-mobilenetv1 | Install ONNX Runtime™ Mobilenetv1 model |
| apt-get install onnx-models-mobilenetv3 | Install ONNX Runtime™ Mobilenetv3 model |
| apt-get install tim-vx | Install TIM-VX VeriSilicon™ Tensor Interface Module |
| apt-get install tim-vx-tools | Install TIM-VX VeriSilicon™ Tensor Interface Module utilities |
| apt-get install nbg-benchmark | Install benchmark application for Network Binary Graph (NBG) representation |
| apt-get install nbg-image-classification-cpp | Install C++ image classification example using Network Binary Graph (NBG) |
| apt-get install nbg-models-mobilenetv3 | Install Mobilenetv3 Network Binary Graph (NBG) model |
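Because apt-get accepts several package names in one call, a hand-picked selection of the individual packages listed above can be installed in a single step. The sketch below wraps that in a function. The names `install_ai_packages` and `DRY_RUN` are hypothetical helpers introduced only for illustration, and the package selection in the usage note is an arbitrary example.

```shell
#!/bin/sh
# Sketch: install several individual packages with a single apt-get call.
# install_ai_packages and DRY_RUN are illustrative names (assumptions).

install_ai_packages() {
    if [ "$#" -eq 0 ]; then
        echo "usage: install_ai_packages PACKAGE..." >&2
        return 1
    fi

    if [ "${DRY_RUN:-0}" = "1" ]; then
        # Only show the command that would be executed.
        echo "apt-get install $*"
    else
        apt-get install "$@"
    fi
}
```

For instance, `install_ai_packages libtensorflow-lite2 tflite-models-mobilenetv1` would request the TensorFlow™ Lite runtime and the Mobilenetv1 model together; apt resolves any dependencies the packages declare.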

2. How to use the X-LINUX-AI Expansion Package

2.1. Material needed

To use the X-LINUX-AI OpenSTLinux Expansion Package, choose one of the following materials:

Optional:

- Coral USB Edge TPU™[1] accelerator

2.2. Boot the OpenSTLinux Starter Package

At the end of the boot sequence, the demo launcher application appears on the screen.

2.3. Install X-LINUX-AI

After configuring the AI OpenSTLinux package repository, you can install the X-LINUX-AI components:

apt-get install packagegroup-x-linux-ai

Then restart the demo launcher:

systemctl restart weston-graphical-session.service

Check that X-LINUX-AI is properly installed:

x-linux-ai -v

X-LINUX-AI version: 5.1.0
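The version check can also be scripted, for example in a provisioning step that should fail when the installed version differs from the one you expect. The sketch below assumes the tool prints a single line in exactly the format shown above (`X-LINUX-AI version: <number>`). The function names are hypothetical.

```shell
#!/bin/sh
# Sketch: compare the installed X-LINUX-AI version with an expected one.
# Assumes "x-linux-ai -v" prints a line like "X-LINUX-AI version: 5.1.0".
# Both function names are illustrative (assumptions).

# Read the tool output on stdin and print only the version number.
parse_x_linux_ai_version() {
    sed -n 's/^X-LINUX-AI version:[[:space:]]*//p'
}

# Return 0 when the installed version equals the expected one.
check_x_linux_ai_version() {
    expected="$1"
    actual="$(x-linux-ai -v 2>/dev/null | parse_x_linux_ai_version)"
    if [ "$actual" = "$expected" ]; then
        echo "OK: X-LINUX-AI $actual"
    else
        echo "Expected X-LINUX-AI $expected, found '${actual:-nothing}'" >&2
        return 1
    fi
}
```

On the board, `check_x_linux_ai_version 5.1.0` would then report success or return a non-zero status that a calling script can act on.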

2.4. Launch an AI application sample

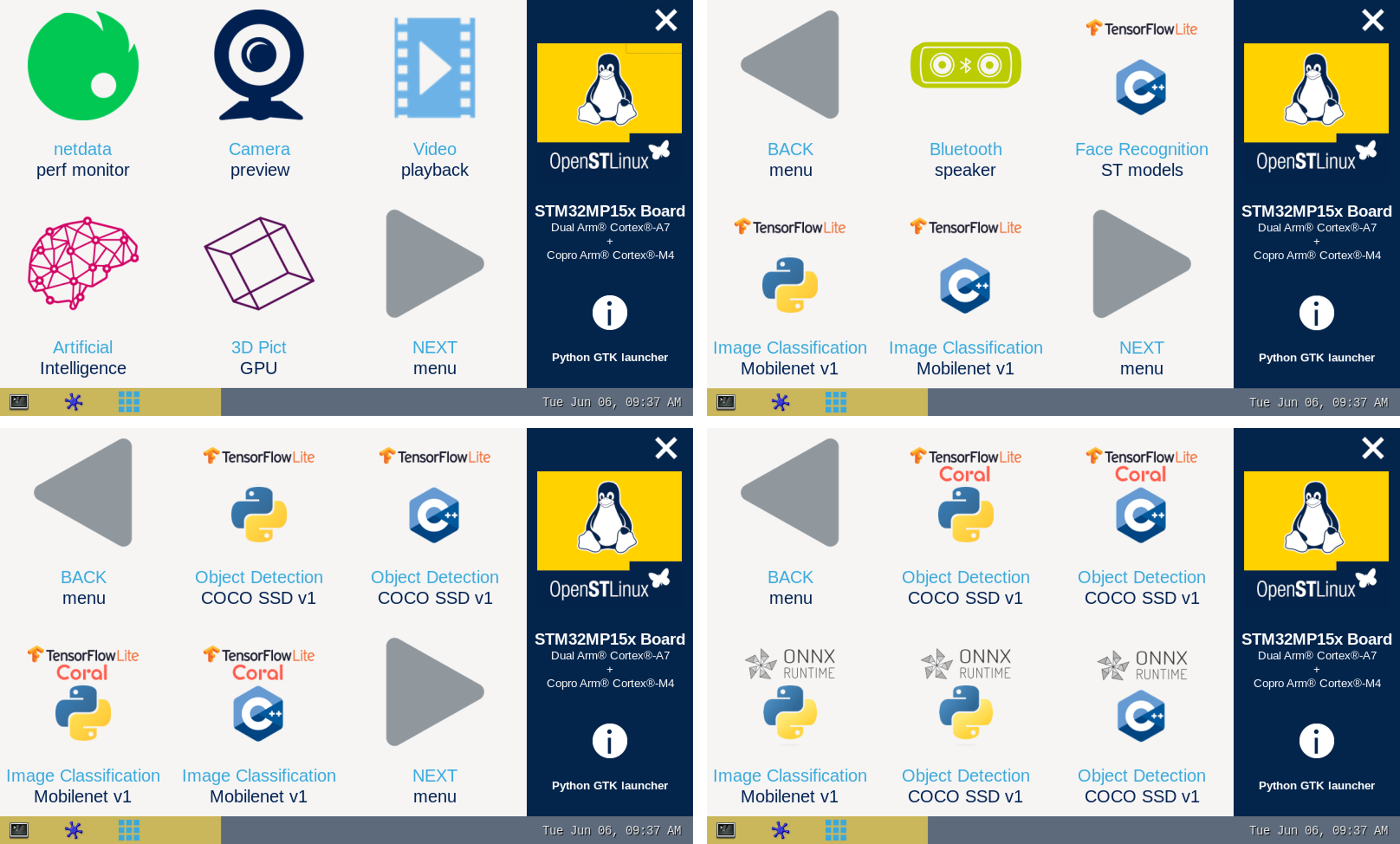

Once the demo launcher has restarted, it looks slightly different because new AI application samples have been installed.

The demo launcher has the following appearance, and you can move between its screens with the NEXT and BACK buttons.

The demo launcher now contains AI application samples, each described in a dedicated article available from the X-LINUX-AI application samples zoo page.

2.5. Enjoy running your own NN models

The application samples above demonstrate how to execute models easily on the STM32MPUs.

You are free to update the C/C++ application or Python scripts for your own purposes, using your own NN models.

Source code locations are provided in the application sample pages.

2.6. References