1. Article purpose

This article gives the main steps and advice for deploying neural network (NN) models on STM32MPU boards using the X-LINUX-AI expansion package. X-LINUX-AI is designed to be user-friendly and to make NN model deployment easier on all STM32MPU targets through a common, coherent ecosystem.

3. Deploy NN model on STM32MP1x board

This part details the steps to follow to deploy the NN model on the STM32MP1x board.

3.1. Which type of NN model is used

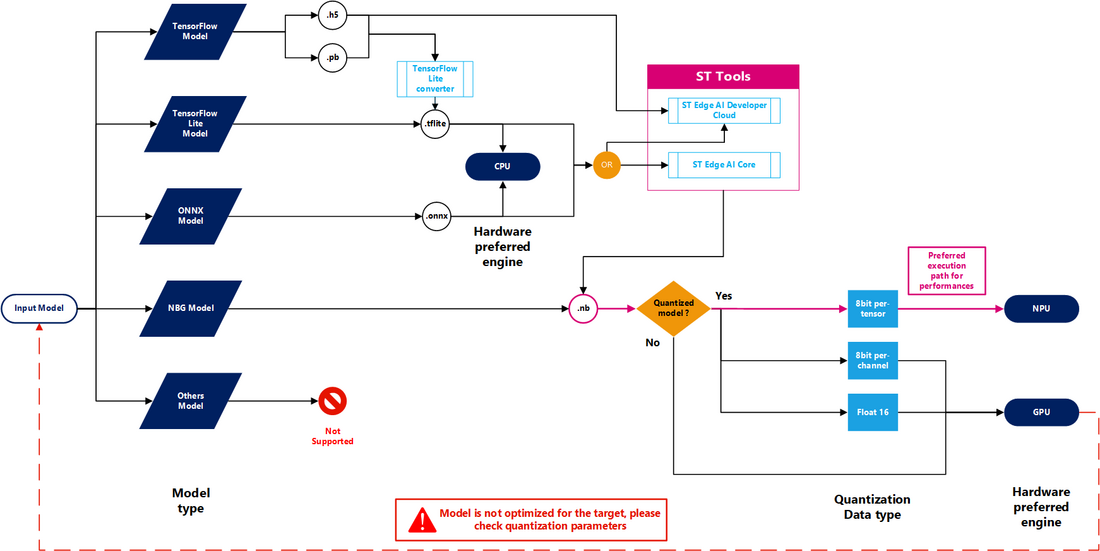

On STM32MP1x, the X-LINUX-AI ecosystem supports only three types of NN models:

- TensorFlow Lite model: the common extension for this type of model is .tflite

- Coral Edge TPU model: this type of model is derived from a classic TensorFlow Lite model. The extension remains .tflite, but the model is pre-compiled for the Edge TPU using a specific compiler. To go further with Coral models, please refer to the dedicated wiki article: How to compile model and run inference on Coral Edge TPU

- ONNX model: the common extension for this type of model is .onnx

If the model that you want to deploy is not in the above list, the model type is not supported as is and may need conversion. Here are the conversion paths from common AI framework formats to TensorFlow Lite or ONNX:

- TensorFlow: a TensorFlow saved model with the .pb extension can easily be converted to a TensorFlow Lite model using the TensorFlow Lite converter

- Keras: a Keras .h5 file can also be converted to a TensorFlow Lite model using the TensorFlow Lite converter, since Keras has been part of TensorFlow since 2017

- PyTorch: a typical PyTorch .pt model can be exported directly to an ONNX model using the PyTorch built-in function torch.onnx.export. It is not possible to export a TensorFlow Lite model directly, but an ONNX model can be converted to a TensorFlow Lite model using packages like onnx-tf or onnx2tf

- Ultralytics YOLO: Ultralytics provides a built-in function to export YOLOvX models to several formats, such as ONNX and TensorFlow Lite
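The lists above can be summarized as a lookup from file extension to the next step. The following is only a minimal sketch: the `DEPLOYMENT_PATHS` table and the `deployment_path` helper are hypothetical names introduced for illustration and are not part of X-LINUX-AI. Note that a Coral Edge TPU model cannot be told apart from a classic TensorFlow Lite model by its extension alone.

```python
from pathlib import Path

# Extension -> next step, summarizing the lists above.
# Hypothetical helper for illustration only, not an X-LINUX-AI API.
DEPLOYMENT_PATHS = {
    ".tflite": "run with TensorFlow Lite (or on Coral Edge TPU if compiled for it)",
    ".onnx": "run with ONNX Runtime",
    ".pb": "convert to .tflite with the TensorFlow Lite converter",
    ".h5": "convert to .tflite with the TensorFlow Lite converter",
    ".pt": "export to .onnx with torch.onnx.export",
}

UNSUPPORTED = "unsupported as is: convert to TensorFlow Lite or ONNX first"


def deployment_path(model_file: str) -> str:
    """Return the suggested next step for a model file, based on its extension."""
    suffix = Path(model_file).suffix.lower()  # case-insensitive match
    return DEPLOYMENT_PATHS.get(suffix, UNSUPPORTED)


if __name__ == "__main__":
    for name in ("mobilenet_v2.tflite", "resnet18.pt", "net.caffemodel"):
        print(f"{name}: {deployment_path(name)}")
```

The extension is only a first hint; the actual content of the file still determines whether the model loads on the target.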

3.2. Which quantization type is used

The most important point is to determine whether the model to run on the target is quantized. Common AI frameworks such as TensorFlow, ONNX, and PyTorch generally use a 32-bit floating-point representation during the training phase, which is optimized for modern GPUs and CPUs but not for embedded devices.

To determine whether a model is quantized, the most convenient way is to use a tool like Netron, a visualizer for neural network models. For each layer of the NN, Netron shows the data type (float32, int8, uint8, and so on) as well as the quantization type and the quantization parameters. If the data type of the internal layers (excluding the input and output layers) is 8 bits or lower, the model is quantized.

Float32 models can run on the STM32MP1x CPU using TensorFlow Lite or ONNX Runtime, but inference will be very slow. It is highly recommended to perform 8-bit quantization. An 8-bit quantized model runs faster with, in most cases, an acceptable accuracy loss.
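To give an intuition for where the accuracy loss comes from, here is a minimal sketch of the affine int8 mapping commonly used for 8-bit quantization, where a real value is approximated as `(q - zero_point) * scale`. The `scale` and `zero_point` values below are arbitrary assumptions chosen for illustration; in a real model they are computed per tensor (or per channel) by the quantization tool.

```python
def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a float to int8: round to the nearest step, then clamp to [-128, 127]."""
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Map an int8 value back to the float it represents."""
    return (q - zero_point) * scale


# Illustrative parameters (assumed, not taken from a real model):
# steps of 1/128 centered on zero cover roughly [-1.0, 0.992].
SCALE = 1 / 128
ZERO_POINT = 0

if __name__ == "__main__":
    x = 0.3
    q = quantize(x, SCALE, ZERO_POINT)
    x_hat = dequantize(q, SCALE, ZERO_POINT)
    # Inside the representable range, the rounding error is at most scale / 2.
    print(f"x={x} -> q={q} -> x_hat={x_hat} (error {abs(x - x_hat):.6f})")
    # Values outside the range saturate at the int8 limits.
    print(f"x=5.0 -> q={quantize(5.0, SCALE, ZERO_POINT)}")
```

Each value is stored in one byte instead of four, and the error per value is bounded by half a quantization step as long as the value lies inside the representable range.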

To quantize a model with post-training quantization, the TensorFlow Lite converter and ONNX Runtime provide everything needed to perform the quantization directly on the host PC. The documentation can be found on their respective websites.
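As an illustration of the TensorFlow Lite path, a post-training quantization on the host PC could look like the sketch below. It assumes TensorFlow is installed on the host and that a few calibration samples matching the model input are available. The helper names `representative_dataset` and `quantize_saved_model` and their arguments are hypothetical; refer to the TensorFlow Lite converter documentation for the authoritative options.

```python
def representative_dataset(samples):
    """Yield calibration samples one at a time.

    Each item is a list with one entry per model input. For a real
    conversion, each sample is expected to be a float32 array with the
    model input shape, including the batch dimension.
    """
    for sample in samples:
        yield [sample]


def quantize_saved_model(saved_model_dir, samples, output_path):
    """Convert a TensorFlow saved model to an 8-bit quantized .tflite file."""
    import tensorflow as tf  # imported lazily: only needed on the host PC

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    # Enable quantization and provide data to calibrate activation ranges.
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.representative_dataset = lambda: representative_dataset(samples)
    # Require int8 kernels for all operators; conversion fails otherwise.
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]

    tflite_model = converter.convert()
    with open(output_path, "wb") as f:
        f.write(tflite_model)
    return output_path
```

In this sketch the model inputs and outputs stay in float32 while the internal layers are quantized, which matches the check described above with Netron. A call such as `quantize_saved_model("my_saved_model", calibration_samples, "model_quant.tflite")` uses placeholder paths and data.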

3.3. Deploy the model on target

Once the model is in TensorFlow Lite or ONNX format and optimized for embedded deployment, the next step is to benchmark it on the target using the X-LINUX-AI unified benchmark to validate that the model works correctly. To do so, please refer to the dedicated article: How to benchmark your NN model on STM32MPU

To go further and develop an AI application based on this model using the TensorFlow Lite runtime or ONNX Runtime, please refer to the application example wiki articles: AI - Application examples