This document is a comprehensive guide on collecting good data, an essential aspect of any successful project. We will explore the key elements and best practices crucial for ensuring the accuracy, reliability, and relevance of the data you collect.

In short, a model can only be as good as the data it was trained on. So bad data equals bad models.

Below are listed simple but very important rules to collect relevant data and help you succeed in the realization of your NanoEdge AI Studio project.

1. Definitions

Here are some clarifications regarding important terms that are used in this document and in NanoEdge:

Axis/Axes:

In NanoEdge AI Studio, the axis/axes are the total number of variables outputted by the sensor used for a project. For example, a 3-axes accelerometer outputs a 3-variables sample (x,y,z) corresponding to the instantaneous acceleration measured in the 3 spatial directions.

In case of using multiple sensors, the number of axes is the total number of axes of all sensors. For example, if using a 3-axes accelerometer and a 3-axes gyroscope, the number of axes is 6.

Signal:

A signal is the continuous representation in time of a physical phenomenon. We are sampling a signal with a sensor to collect samples (discrete values). Example: vibration, current, sound.

Sample:

This refers to the instantaneous output of a sensor, and contains as many numerical values as the sensor has axes.

For example, a 3-axis accelerometer outputs 3 numerical values per sample, while a current sensor (1-axis) outputs only 1 numerical value per sample.

Data rate:

The data rate is the frequency at which we capture signal values (samples). The data rate must be chosen according to the phenomenon studied.

You must also pay attention to having a consistent data rate and number of samples to have buffers that represent a meaningful time frame.

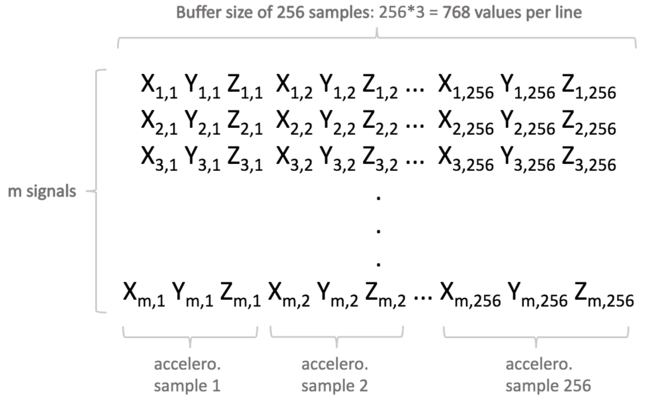

Buffer:

A buffer is a concatenation of consecutive samples collected by a sensor. A dataset contains multiples buffers, the term Line is also used when talking about buffers of a dataset.

Buffers are the input for NanoEdge, being in the Studio or in the microcontroller.

For optimization reasons, it must be a power of 2. For example, a 3-axis signal with a buffer length 256 is represented by 768 (256*3) numerical values.

Library:

NanoEdge AI Studio is a tool to generate machine learning (ML) models in the format of a C code library. We use the term libraries here because we not only provide the model, but also the required preprocessing and functions to easily integrate the model in an embedded C code.

To summarize, NanoEdge is using buffers (or lines) to generate ML models (or libraries). The buffers are a concatenation of signal's samples collected with a sensor. The signal being the physical phenomenon studied.

1.1. Data format

Here is the link to the documentation with all the information: https://wiki.st.com/stm32mcu/wiki/AI:NanoEdge_AI_Studio#General_rules

In short, NanoEdge needs datasets to train models. These datasets, generally .csv files, contains buffers of data (or lines) of a determined size, which is the number of samples collect at a certain frequency (or data rate Hz). Working with buffers instead of samples gives the model a temporal representation of the signals studied, which contains much more information than singular values.

Example of 3-axes accelerometer's buffers for Anomaly detection, N-class classification or 1-class classification:

The accelerometer collect samples based on its sampling rate (frequency), signals are a concatenation of samples (always the same amount based on the signal size) and datasets contains multiples buffers.

2. Key Considerations Prior to Data Collection

2.1. Study the right physical phenomenon

Vibration, current, humidity, pressure, or sound are examples of physical phenomena measurable with sensors. The choice of which phenomenon to use can lead to considerably different outcomes.

Here are possible ways to go in an example of motor predictive maintenance:

Sound: One might be inclined to consider sound as a diagnostic parameter, based on a technician's ability to discern anomalies through sound. However, in a factory environment with numerous ambient noises, sound-based analysis can be greatly influenced by the surrounding conditions, making it , in general, less reliable.

Vibration: In the cases described above, opting for vibration analysis is often a more viable choice compared to sound-based methods. Nevertheless, additional considerations come into play when the motor is situated in distinct contexts, like on a vibrating truck. Under such circumstances, vibration analysis might be impossible.

Current: Current-based analysis might yield more accurate results due to the inherent vibrations in the truck affecting the motor's behavior. Yet, it is worth noting that working with current data may involve a more intrusive data acquisition process compared to vibration-based analysis.

Selecting the most appropriate physical phenomenon is a nuanced decision, but it holds paramount importance. As a general guideline, vibration and current analysis typically prove to be the more reliable approaches in the majority of cases. Properly determining the ideal diagnostic parameter can significantly enhance the effectiveness of predictive maintenance endeavors.

2.2. Define the environment

Machine learning models will learn to treat the data that they were given. So if the final use case environment and the environment where the data collection took place are not exactly the same (same machine, same position of the sensor, same parameters ...), then it will not work as expected.

If you need the machine learning to work in different environment, you can, but you will need to adapt you data collection strategy. You will need to collect data for each environment you want the model to work in.

In NanoEdge AI Studio, the anomaly detection kind of project can help you if you have an environment that is changing thanks to the ability of models to be retrain on microcontrollers directly. As long as the situation is not to different from the one used for the training of the model, Anomaly detection model should be able to adapt to the new environment.

2.3. Work with buffers, not samples

A single sample provides only a snapshot of the machine's behavior at a specific point in time. It lacks the temporal context required to understand the machine's behavior over time.

By working with buffers of samples (time series data), you can capture the temporal context, which allows the NanoEdge AI models to identify trends and patterns over time, making the predictions more accurate.

For example, if we had an increasing signal and a decreasing signal, with exactly the same values, but inversed, we could not classify them using samples. Indeed, all the sample would be in both classes, but to see if the phenomenon is an increase, or a decrease, we would need buffer containing multiple consecutive samples, where we could see if the values were increasing or decreasing.

2.4. Work with raw data

NanoEdge can apply choose to apply an FFT to the data during a benchmark. So it can both look for model working with time domain analysis or frequency domain analysis.

NanoEdge can also apply a lot of other combinations of preprocessing. You can try to do your own, but it is suggested to stick with raw data, so NanoEdge can test more combinations.

2.5. Choose a relevant sampling rate and buffer size

Sampling rate:

To create buffers that captures the information contained in a signal, you need to choose how much samples you will get (buffer size) and chosse the frequency with which you collect the samples (sampling rate). Choosing them wrongly can lead to the following situation:

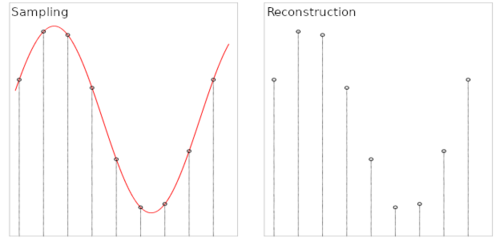

- Case 1: the sampling frequency and the number of samples make it possible to reproduce the variations of the signal.

- Case 2: the sampling frequency is not sufficient to reproduce the variations of the signal.

- Case 3: the sampling frequency is sufficient but the number of samples is not sufficient to reproduce the entire signal (meaning that only part of the input signal is reproduced).

Buffer size:

Buffer size must be a power of 2 for optimization reasons. Suggested buffer size are from 16 values per axis, up to 4096 values per axis. When choosing the buffer size, make sure that it make sense with the sampling rate: if the buffer size is 16 and the frequency is 26kHz, the signal was collected in 6x10^-4 second period. Is this short period enough to contains relevant data ? Probably not.

2.5.1. Sampling finder

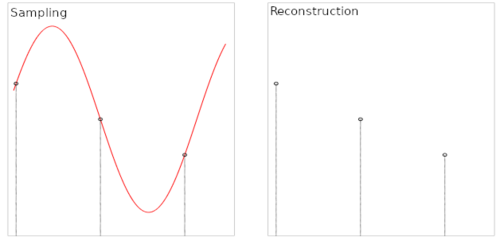

To find the best sampling rate and buffer size, you can either study the signal, test multiple combinations empirically or use the sampling finder.

The sampling finder is a tool provided by NanoEdge AI Studio to help find the best sampling rate and buffer size for a project.

It needs continuous data of the highest frequency possible, to then create buffers of multiples size at multiples data rate (subdivided from the original data rate). Using fast machine learning technics, it will then estimate the score that you would get using the different combinations of sampling rate and buffer size.

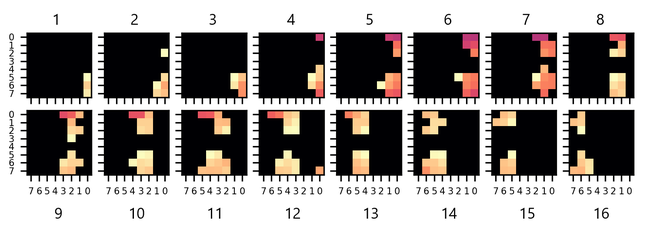

Example of sampling finder's result:

Find more about the sampling finder here: https://wiki.st.com/stm32mcu/wiki/AI:NanoEdge_AI_Studio#Sampling_finder

2.6. Use a temporal window that makes sense

When choosing the signal size and data rate, make sure that it makes sense in term of the temporal window the signal cover. For example, if working with signals of size 2048 and a frequency of 1,6kHz, the signal contains value collected during 1.22 seconds. If you were working with a signal of size 256 and a frequency of 26kHz, the signal would contain value collected during 0.009 seconds.

Looking at the behavior of a machine for 1.22 seconds and trying to classify it seems much more easier than trying to do the same by looking at the motor for only 0.009 seconds.

2.7. Start small and iterate

An AI project is not straightforward or linear; rather, it is an iterative process that involves starting small and progressively improving the results.

In the initial stages, you can begin with a simplified version of the problem that you are trying to solve. The results obtained from this preliminary model might not be optimal or accurate, but they serve as a starting point to understand the project's challenges and limitations.

The key is to view the AI project as a continuous loop of experimentation, data collection, and model refinement.

For example, you could start a project by trying to classify only two classes out of the ten that are planned for the final project. By doing so, you could see that even with two classes, the results are not good. You may have to change the sensor because the data that you are using do not contains information useful for the project, change the sensor placement, or the data collection process... Once achieving good result with two classes, you could iteratively add classes until you reach the ten initially planned.

2.8. Collect enough data

When there is not enough data in Machine Learning, the model may end up overfitting. Overfitting occurs when the model becomes too specialized to the limited data it has seen during training, and it fails to generalize well to new, unseen data.

Imagine you are trying to teach a model to identify cats from pictures. If you only show the model a few pictures of cats, it may memorize the specific details of those images and become too tailored to them. As a result, when you give the model new images of cats that it has never seen before, it might struggle to correctly recognize them because it lacks the ability to generalize beyond the few examples it was trained on.

In essence, not having enough diverse data can lead to overfitting because the model has not learned enough about the underlying patterns and variations in the data. To build a more robust and generalizable model, it is crucial to have a sufficient amount of diverse data for the model to learn from and avoid overfitting.

3. Workflow

Once you have considered the points mentioned above, here is a project workflow that can serve as inspiration:

Define the phenomenon to study:

Select the phenomenon which should contain the most useful information in its signal to be able to realize the project (current, vibration ...). Change it if you don't succeed in getting go results.

Make sure that your experimental setup make sense:

Create an experimental close to the real one. Think about the way you are collecting data. Avoid collecting useless noise or flat signals.

Find a sensor and a board:

Knowing the kind of phenomenon to study and the experimental setup, select a development board that suits your needs.

Use the datalogger generator in NEAI:

In NanoEdge is a tool to help you generate C code for data logging. If you can, use continuous datalogging at the maximum frequency possible to use the sampling finder and find which sampling rate and buffer size works the best.

If you cannot use continuous data, you can try multiple combination of sampling rate and buffer size iteratively, based on what worked, and what didn't worked.

Datalogger documentation: https://wiki.st.com/stm32mcu/wiki/AI:NanoEdge_AI_Studio#Datalogger

Start small:

If your problem is very complex, try to work with a simplified version of it first to see what results you can get.

Collect multiple datasets:

Machines are not flawless, and data variations can occur, even within the same class. For instance, when logging data for a motor's first speed, then the second speed, and returning to the first speed, slight changes may occur in the new collected data for the first class.

Logging multiple times every class while going through all of them helps getting more varied data.

Train a model, test it and deploy it:

Launch a benchmark with the collected data. If the results seems good enough, use the emulator and validation step to test the libraries on new data and see how it behave. If something is wrong, go back to the data collection and iterate.

Once satisfied by a library, you can compile it and deploy it on the microcontroller to test it in the real environment.

Think about the embedded part:

When deploying the model, NanoEdge provide few function for the user to use the library, but you have a lot or room to improve your application with just the way of using the model, here are some example of deployment strategies for you to use.

4. Bad results caused by data

A model can only be as good as the data it was trained on, here are some situation that you might encounter:

The benchmark's results are good, but when testing with new data, the results are bad :

The model is probably overfitting on the data, try using more data in the benchmark or select a different library than the best found. Previous library might have overfit less, because they learn the data less.

In the same idea, maybe the data from the test datasets were slightly different than the data used for the training. Try log more data, in multiple session to cover try to capture all possible cases and merge all the dataset to make the model learn more varied data.

The model confuses two classes:

Look at the confusion matrix to see if there is indeed two classes that the model doesn't recognize and confuse. This might indicate that the classes are two close from each other. A solution is to merge them, or to get more data for those classes in particular.

I get very bad result with the classification of one of my classes:

It might happens that a model is not able to classify a class in particular. Compared to the previous situation, the model is not just confusing this class with another, but randomly classifying it. It might come from the fact that this class is more difficult to classify. Adding data only for this class might help the benchmark focus more on this class.

5. Deployment strategies

Designing an embedded project taking in account the specific use case can improve the accuracy and robustness of the prediction.

By default, Nanoedge provides few functions for the user to use the model: an initialization function, the learn function if in anomaly detection and the detect function.

the simplest approach is to initiate the model at the beginning of the program, do some learning and put the detect in a loop but the example listed below can help design a better solution according to the use case:

- If detecting an anomaly or a specific class, wait to have x repetition in the next y detections to confirm the result

- In N-class classification, work with the probability vector to be of each class to create a lot more cases instead of working only with the maximum probability predicted.

- Wait for the machine to stabilize before doing learnings or detections in the case of a machine starting

- Make sure to have vibration before doing a detect in the case of a vibration use case

NanoEdge is just the model that will do the prediction, it is up to the user, which know its use case, its variables and needs to design a solution that works for him

6. Resources

7. Use case studies

This part presents several use case studies where NanoEdge AI Studio has been used successfully to create smart devices with machine learning features using a NanoEdge AI library.

The aim is to explain the methodology and thought process behind the choice of crucial parameters during the initial datalogging phase (that is, even before starting to use NanoEdge AI Studio) that can make or break a project.

7.1. Vibration patterns on an electric motor

7.1.1. Context and objective

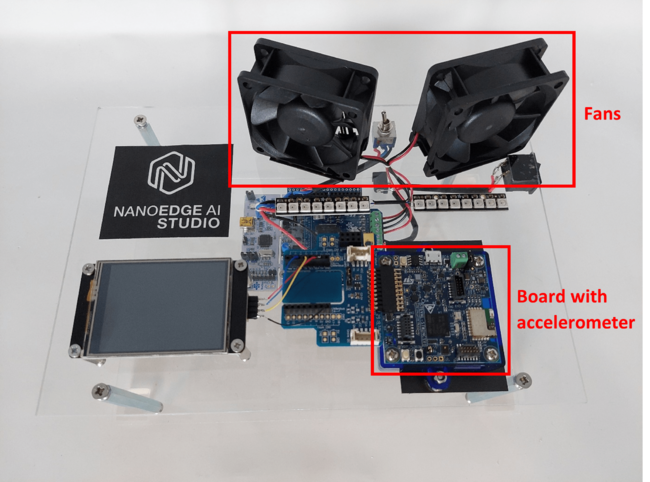

In this project, the goal is to perform an anomaly detection of an electric motor by capturing the vibrations it creates.

This is achieved using a 3-axis accelerometer. The sensor should be placed on the motor itself or as close to it as possible, to maximize the amplitude of the detected vibrations, and the accuracy of the analysis of the motor's behavior.

In this application, the signals used are temporal. Motors are modeled using 12V DC fans.

7.1.2. Initial thoughts

Even before starting data collection, it is necessary to analyze the use case, and think about the characteristics of the signal we are about to sample. Indeed, we want to make sure that we capture the essence of the physical phenomenon that takes place, in a way that it is mathematically meaningful for the Studio (that is, making sure the signal captured contains as much useful information as possible). This is particularly true with vibration monitoring, as the vibrations measured can originate from various sources and do not necessarily translate the motor's behavior directly. Hence the need for the user to be careful about the datalogging environment, and think about the conditions in which inferences are carried out.

Some important characteristics of the vibration produced can be noted:

- To consider only useful vibration patterns, accelerometer values must be logged when the motor is running. This is helpful to determine when to log signals.

- The vibration may be continuous on a short, medium or long-time scale. This characteristic must be considered to choose a proper buffer size (how long the signals should be, or how long the signal capture should take).

- Vibration frequencies of the different motor patterns must be considered to determine the target sampling frequency (output data rate) of the accelerometer. More precisely, the maximum frequency of the vibration impacts the minimum data rate allowing to reproduce the variations of the signal.

- The intensity of the vibration depends on the sensor's environment and motor regime. To record the maximum amplitude of the vibration and the most accurate patterns, the accelerometer should be placed as closed to the motor as possible. The maximum vibration amplitude must be considered to choose the accelerometer’s sensitivity.

7.1.3. Sampling frequency

While it may easy to determine the fundamental frequencies of sounds produced by a musical instrument (see 2.1.3), it is much more complex to guess which are the frequencies of the mechanical vibrations produced by a motor.

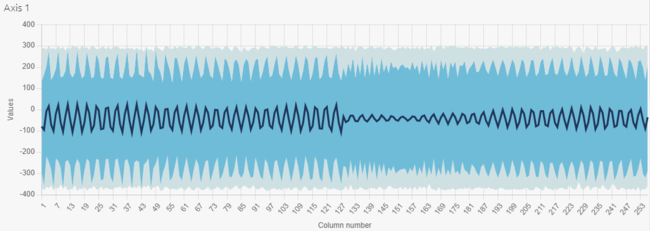

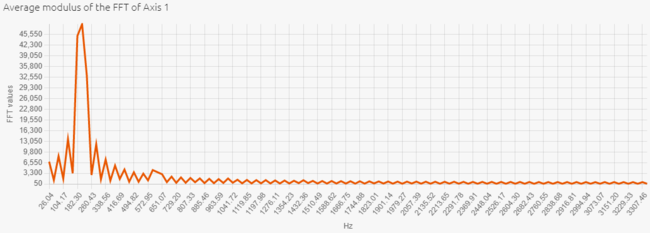

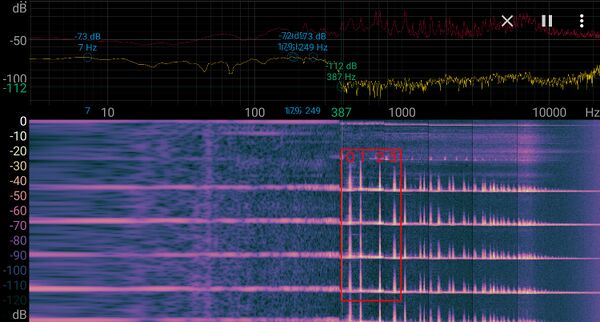

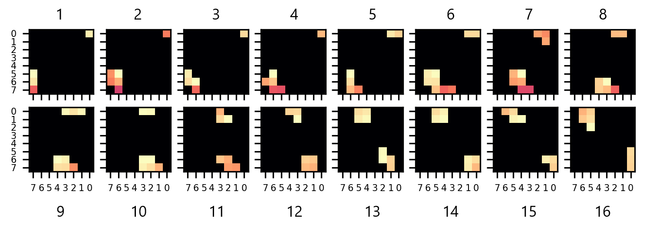

Therefore, to determine the best sampling frequency, we proceed by iterations. First, we set a medium frequency (among those available for our accelerometer): 833 Hz. We have chosen a signal length of 256 values. These settings allow to record patterns of 256/833 = 307 ms each. We can visualize the dataset in both temporal and frequential spaces in NanoEdgeAI Studio:

The recorded vibrations have one fundamental frequency. According to the FFT, this frequency is equal to 200 Hz.

The temporal view shows that we have logged numerous periods of vibrations in each signal (200 * 0,307 ~ 61 periods). So, the accuracy of the datalog is low (256 / 61 ~ 4 values per period).

Five or ten periods per signal are enough to have patterns representative of the motor behavior. The accuracy is the key point of an efficient datalog.

So we can begin the second iteration. We are going to increase the output data rate of the accelerometer to record a few periods per signal with a high accuracy.

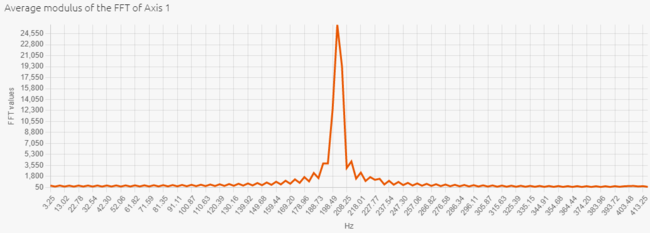

The maximum output date rate we can set is 6667 Hz. This new setting allows to record patterns of 256/6667 = 38,4 ms each. Fundamental frequency of vibrations being equal to 200 Hz, we now record 200 * 0,0384 ~ 8 periods per signal.

Here are the temporal and frequential views of this new dataset:

The vibrations logged here have a sinewave shape: the sampling frequency is sufficient to reproduce the entire signal. On the FFT graph, we note that the amplitude of the fundamental frequency is higher, and the harmonics are more visible. The signal is clearly more accurate. So a 6667 Hz sampling frequency is the most appropriate value in this example.

7.1.4. Buffer size

The buffer size is the number of samples composing our vibration signal. It should be a power of two for a proper data processing.

We have seen in the previous part that we record 8 periods of vibrations in a 256-sample buffer. We consider that signals containing 5 to 10 periods allow the most efficient processing by AI algorithms. So, our current length is optimal to have patterns representative of the motor behavior.

7.1.5. Sensitivity

The accelerometer used supports accelerations ranging from 2g up to 16g. Motors do not generate very ample vibrations, so a 2g sensitivity is enough in most cases. Sensitivity may be increased in case a saturation is observed in the temporal signals.

7.1.6. Signal capture

In order to capture every relevant vibration pattern produced by a running motor, two rules may be followed:

- Implement a trigger mechanism, to avoid logging when the motor is not powered. A simple solution is to define a threshold of vibration amplitude which is reached only when the motor is running.

- Set a random delay between logging 2 signals. This feature allows to begin datalogging at different signal phases, and therefore avoid recording the same periodic patterns continuously, which may in fact vary during the motors lifetime on the field.

The resulting 256-sample buffer is used as input signal in the Studio, and later on in the final device, as argument to the NanoEdge AI learning and inference functions.

Note: Each sample is composed of 3 values (accelerometer x-axis, y-axis and z-axis outputs), so each buffer contains 256*3 = 768 numerical values.

7.1.7. From signals to input files

Now that we managed to get a seemingly robust method to gather clean signals, we need to start logging some data, to constitute the datasets that are used as inputs in the Studio.

The goal is to be able to detect anomalies in the motor behavior. For this, we record 300 signal examples of vibrations when the motor is running normally, and 300 signal examples when the motor has an anomaly. We consider as an anomaly, a friction (applied to our prototype, a piece of paper rubbing fan blades) and an imbalance. For completeness, we record 150 signals for each anomaly. Therefore, we obtain the same amount of nominal and abnormal signals (300 each), which helps the NanoEdge AI search engine to find the most adapted anomaly detection library.

300 is an arbitrary number, but fits well within the guidelines: a bare minimum of 100 signal examples and a maximum of 10000 (there is usually no point in using more than a few hundreds to a thousand learning examples).

When recording abnormal signals, we make sure to introduce some amount of variety: for example, slightly different frictions on the blades. This increases the chances that the NanoEdge AI library obtained generalizes / performs well in different operating conditions.

Depending on the results obtained with the Studio, we adjust the number of signals used for input, if needed.

In summary:

- there are 3 input files in total, one nominal and one for each anomaly

- the nominal input file contains 300 lines, each abnormal input file contains 150 lines

- each line in each file represents a single, independent "signal example"

- there is no relation whatsoever between one line and the next (they could be shuffled), other than the fact that they testify to the same motor behavior

- each line is composed of 768 numerical values (3*256) (3 axes, buffer size of 256)

- each signal example represents a 38.4-millisecond temporal slice of the vibration pattern of the fans

7.1.8. Results

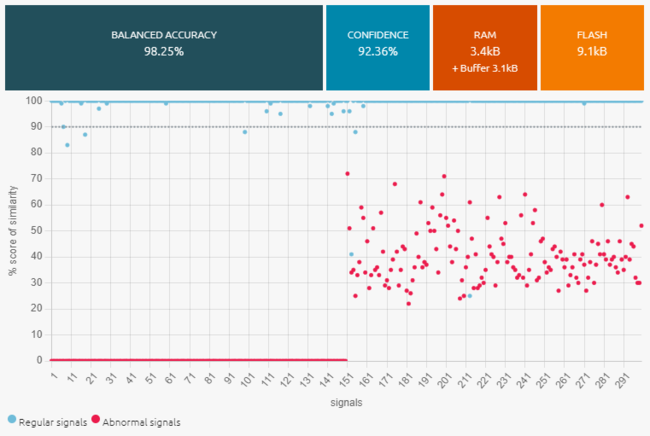

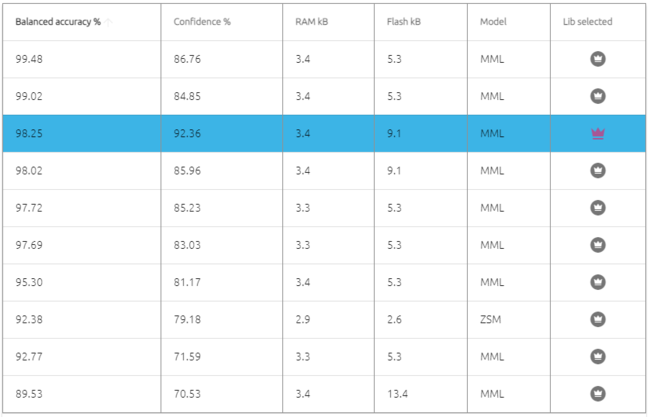

Here are the benchmark results obtained in the Studio with all our nominal and abnormal signals.

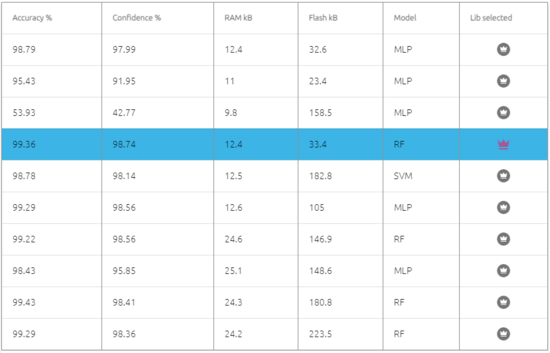

Here are some other candidate libraries that were found during benchmark, with their associated performances, memory footprint, and ML model info.

Observations:

- The best library found has very good performances.

- There is no need to adjust the datalogging settings.

- We could consider adjusting the size of the signal (taking 512 samples instead of 256) to increase the vibration patterns relevance.

- Before deploying the library, we could do additional testing, by collecting more signal examples, and running them through the NanoEdge AI Emulator, to check that our library indeed performs as expected.

- If collecting more signal examples is impossible, we could restart a new benchmark using only 60% of the data, and keep the remaining 40% exclusively for testing using the Emulator.

7.2. Vibration patterns on a ukulele

7.2.1. Context and objective

In this project, the goal is to capture the vibrations produced when the ukulele is strummed, in order to classify them, based on which individual musical note, or which chord (superposition of up to 4 notes), is played.

This is achieved using vibration (3-axis accelerometer) instead of sound (microphone). The 3-axis accelerometer is placed directly onto the ukulele, or in the sounding box, wherever the vibrations are expected to be most intense.

In this application, the signals used are temporal.

7.2.2. Initial thoughts

Even before starting data collection, it is necessary to analyze the use case, and think about the characteristics of the signal we are about to sample. Indeed, we want to make sure that we capture the essence of the physical phenomenon that takes place, in a way that it is mathematically meaningful for the Studio (that is, making sure the signal captured contains as much useful information as possible).

When strumming the ukulele, some important characteristics of the vibration produced can be noted:

- The action of strumming is sudden. Therefore the signal created is a burst of vibration, and dies off after a couple of seconds. The vibration may be continuous on a short time scale, but it does not last long, and is not periodic on a medium or long time scale. This is helpful to determine when to start signal acquisition.

- The previous observation is also useful to choose a proper buffer size (how long the signals should be, or how long the signal capture should take).

- The frequencies involved in the vibration produced are clearly defined, and can be identified through the pitch of the sound produced. This is helpful to determine the target sampling frequency (output data rate) of the accelerometer.

- The intensity of the vibrations are somewhat low, therefore we have to be careful about where to place the accelerometer on the ukulele's body, and how to choose the accelerometer's sensitivity.

7.2.3. Sampling frequency

When strumming the ukulele, all the strings vibrate at the same time, which makes the ukulele itself vibrate. This produces the sound that we hear, so we can assume that the sound frequencies, and the ukulele vibration frequencies, are closely related.

Each chord contains a superposition of four musical notes, or pitches. This means we have four fundamental frequencies (or base notes) plus all the harmonics (which give the instrument its particular sound). These notes are quite high-pitched, compared to a piano for example. For reference, we humans can usually hear frequencies between 20 Hz and 20000 Hz, and that the note A (or la) usually has a fundamental frequency of 440 Hz. Thus, we can estimate that the chords played on the ukulele contain notes between 1000 Hertz and 10000 Hertz maximum.

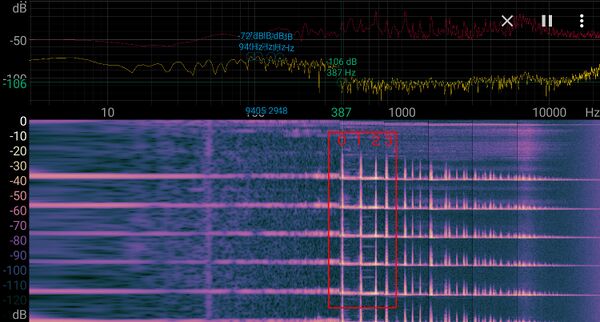

To be more precise, and learn more about our signal, we can use a simple real-time frequency analyzer. In this example, we are using Spectroid, a free Android application.

Here is the spectrogram obtained when playing the C major chord (or do) several times.

Here's the spectrogram on a F major chord (fa).

In both cases, the fundamental frequency (0) and the 3 first harmonics (1, 2, 3) are between around 380 Hz and 1000 Hz. There are many more harmonics up to 10 kHz, but they rapidly decrease in intensity, so we can assume for the moment that 1000 Hz is the maximum frequency to sample.

To perform this, we need to set the sampling frequency to at least double that frequency; 2000 Hz minimum.

In this example, the accelerometer used only supports a discrete range of sampling frequencies, among which: 1666 Hz, 3333 Hz and 6666 Hz.

We start testing with a sampling frequency of 3333 Hz. We adapt later, depending on the results we get from the Studio, for instance by doubling the frequency to 6666 Hz for more precision.

7.2.4. Buffer size

The buffer size is the number of samples that compose our vibration signal.

When strumming the ukulele, the sound produced lasts for one second or so. The vibration on the ukulele body quickly dies off. Therefor we can assume that capturing a signal of maximum 0.5 seconds is enough. We adapt later, depending on the results we get from the Studio.

With a target length of approximately 0.5 seconds, and a sampling frequency of 3333 Hz, we get a target buffer size of 3333*0.5 = 1666.

The chosen buffer size must be **a power of two**, let's go with 1024 instead of 1665. We can have picked 2048, but 1024 samples is enough for a first test. We can always increase it later if required.

As a result, the sampling frequency of 3333 Hz with the buffer size of 1024 (samples per signal per axis) make a recorded signal length of 1024/3333 = 0.3 seconds approximately.

So each signal example that is used for data logging, or passed to a NanoEdge AI function for learning or inference, represent a 300-millisecond slice of vibration pattern. During these 300 ms, all 1024 samples are recorded continuously. But since all signal examples are independent from one another, whatever happens between two consecutive signals does not matter (signal examples do not have to be recorded at precise time intervals, or continuously).

7.2.5. Sensitivity

The accelerometer used supports accelerations ranging from 2g up to 16g. For gentle ukulele strumming, 2g or 4g are enough (we can always adjust it later).

We choose a sensitivity of 4g.

7.2.6. Signal capture

In order to create our signals, we can imagine continually recording accelerometer data. However, we chose an actual signal length of 0.3 seconds. So if we started strumming the ukulele randomly, using continuous signal acquisition, we then end up with 0.3-second signal slices that do not necessarily contain the useful part of the strumming, and have no guarantee that the relevant signals are captured. Also, a lot of "silence" would be recorded, between strums.

Instead, we implement a trigger mechanism; so that only the vibration signature that directly follows a strum is recorded.

Here are a few ideas on how to implement such a trigger mechanism that detects a strum:

- We want to the capture of a single signal buffer (1024 samples) whenever the intensity of the ukulele's vibrations cross a threshold.

- We need to monitor the vibrations continuously, and check whether or not the intensity has suddenly risen.

- To measure the average vibration intensity at any time, we collect very small data buffers from the accelerometer (example: 4 consecutive samples), and compute the average vibration intensity across all sensor axes for each such "small buffer".

- We continuously compare the average vibration intensities obtained from one "small buffer" (let's call it I1) to the next (call it I2).

- We choose a threshold (example: 50%) to decide when to trigger (here, for instance, whenever I2 is at least 50% higher than I1).

- We trigger the recording of a full 1024 sample signal buffer whenever I2 >= 1.5*I1 (here using 50%).

The resulting 1024-sample buffer is used as input signal in the Studio, and later on in the final device, as argument to the NanoEdge AI learning and inference functions.

Of course, different approaches (equivalent, or more elaborate) might be used in order to get a clean signal.

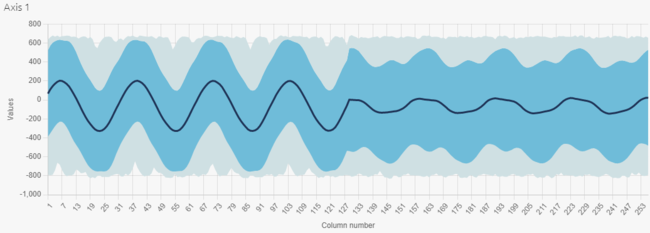

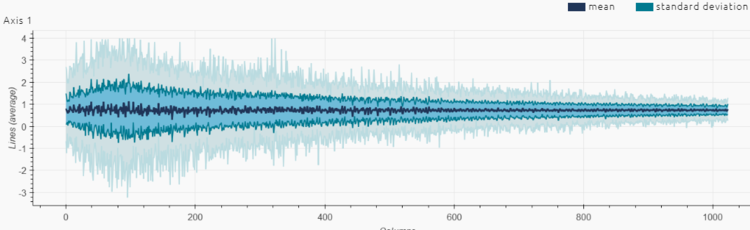

Here is what the signal obtained after strumming one chord on the ukulele looks like (plot of intensity (g) vs. time (sample number), representing the accelerometer's x-axis only):

Observations:

- There is no saturation/clipping on the signal (y-axis of the plot above), so the sensitivity chosen for the accelerometer seems right.

- The length seems appropriate because it is much longer than a few periods of all the frequency components contained in our vibration, and therefore contains enough information.

- The length also seems appropriate because it represents well the vibration burst associated to the strumming, including the quick rise and the slower decay.

- If the signal needs to be shortened (for instance, if we need to limit the amount of RAM consumed in the final application), we can consider cutting it in half (truncating it after the first 512 samples), and the resulting signal would probably still be very usable.

- The number of numerical values in the signal shown here is 1024 because we're only showing the x-axis of the accelerometer. In reality, the input signal is composed of 1024*3 = 3072 numerical values per signal.

7.2.7. From signals to input files

Now that we managed to get a seemingly robust method to gather clean signals, we need to start logging some data, to constitute the datasets that are used as inputs in the Studio.

The goal is to be able to distinguish all main diatonic notes and chords of the C major scale (C, D, E, F, G, A, B, Cmaj, Dm, Em, Fmaj, G7, Am, Bdim) for a total of 14 individual classes.

For each class, we record 100 signal examples (100 individual strums). This represents a total of 1400 signal examples across all classes.

100 is an arbitrary number, but fits well within the guidelines: a bare minimum of 20 signal examples per sensor axis (here 20*3 = 60 minimum) and a maximum of 10000 (there is usually no point in using more than a few hundreds to a thousand learning examples).

When recording data for each class (example, strumming a Fmaj chord 100 times), we make sure to introduce some amount of variety: slightly different finger pressures, positions, strumming strength, strumming speed, strumming position on the neck, overall orientation of the ukulele, and so on. This increases the chances that the NanoEdge AI library obtained generalizes/performs well in different operating conditions.

Depending on the results obtained with the Studio, we adjust the number of signals used for input, if needed.

In summary:

- there are 14 input files in total, one for each class

- the input files contain 100 lines each

- each line in each file represents a single, independent "signal example"

- there is no relation whatsoever between one line and the next (they could be shuffled), other than the fact that they pertain to the same class

- each line is composed of 3072 numerical values (3*1024) (3 axes, buffer size of 1024)

- each signal example represents a 300-millisecond temporal slice of the vibration pattern on the ukulele

7.2.8. Results

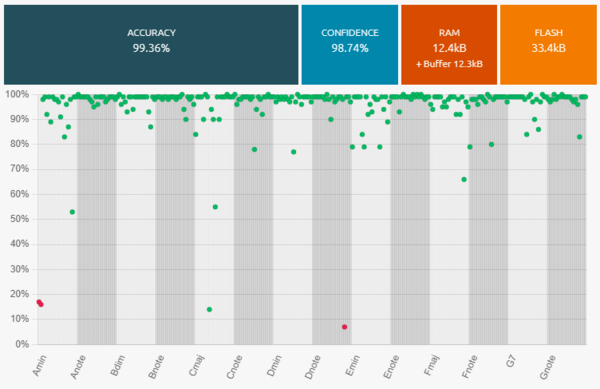

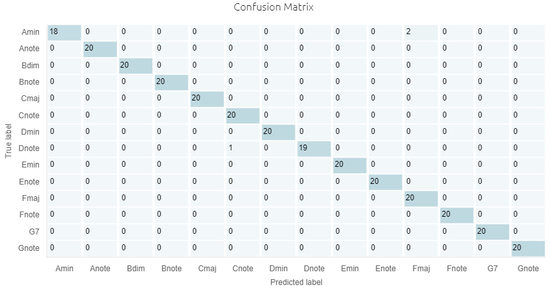

Here are the benchmark results obtained in the Studio, with 14 classes, representing all main diatonic notes and chords of the C major scale.

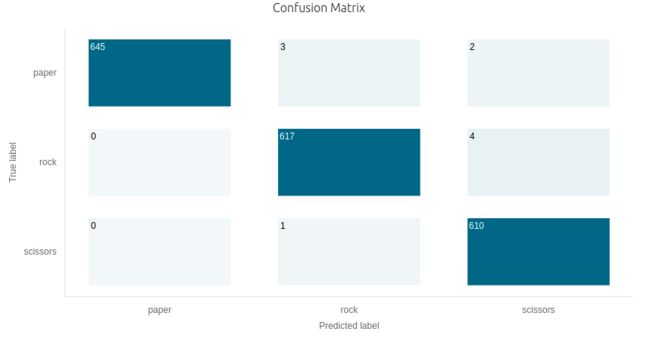

Here are the performances of the best classification library, summarized by its confusion matrix:

Here are some other candidate libraries that were found during benchmark, with their associated performances, memory footprint, and ML model info.

Observations:

- We get excellent results with very few misclassifications.

- There is no need to adjust the datalogging settings.

- We can consider adjusting the size of the signal (taking 512 samples instead of 1024) to reduce the memory footprint (RAM) of the libraries.

- Before deploying the library, we can do additional testing, by collecting more signal examples, and running them through the NanoEdge AI Emulator, to check that our library indeed performs as expected.

- If collecting more signal examples is impossible, we can restart a new benchmark using only 60% of the data, and keep the remaining 40% exclusively for testing using the Emulator.

7.3. Static pattern recognition (rock, paper, scissors) using time-of-flight

7.3.1. Context and objective

In this project, the goal is to use a time-of-flight sensor to capture static hand patterns, and classify them depending on which category they belong to.

The three patterns that are recognized here are: "Rock, Paper, Scissors", which correspond to 3 distinct hand positions, typically used during a "Rock paper scissors" game.

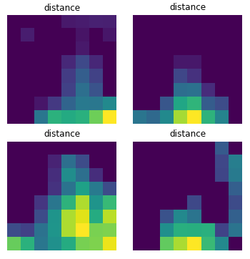

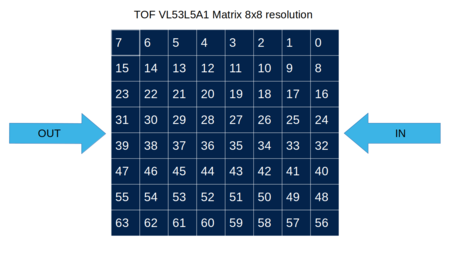

This is achieved using a time-of-flight sensor, by measuring the distances between the sensor and the hand, using a square array of size 8x8.

7.3.2. Initial thoughts

In this application, the signals used are not temporal.

Only the shape/distance of the hand is measured, not its movement.

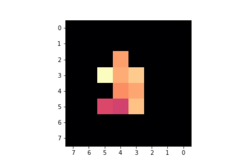

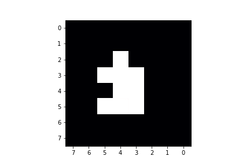

Here is an illustration of four independent examples of outputs from the time-of flight sensor. The coordinates of each measuring pixel is translated by its position on the 2D array, and the color of each pixel translates how close to the sensor the object is (the brighter the pixel, the closer the hand).

7.3.3. Sampling frequency

Because each signal is time-independent, the choice of a sampling frequency is irrelevant to the structure of our signals. Indeed, what we here consider as a full "signal" is nothing more than a single sample; a 8x8 array of data, in the form of 64 numerical values.

However, we choose a sampling frequency of 15 Hz, meaning that a full signal is to be outputted every 1/15th of a second.

7.3.4. Buffer size

The size of the signal is already constrained by the size of the sensor's array.

As mentioned above, each "signal example" used as input in the Studio is a single 8x8 array, as shown above. In practice, each array is represented as a line composed of 64 numerical values representing distances in millimeters. For example:

96.0 93.0 96.0 90.0 105.0 98.0 101.0 0.0 93.0 96.0 98.0 94.0 105.0 98.0 96.0 0.0 97.0 98.0 98.0 96.0 104.0 98.0 96.0 0.0 97.0 99.0 97.0 94.0 104.0 100.0 96.0 0.0 100. 0 98.0 96.0 96.0 103.0 98.0 98.0 0.0 99.0 97.0 98.0 97.0 102.0 98.0 101.0 0.0 102.0 99.0 100.0 100.0 102.0 98.0 102.0 0.0 102.0 95.0 99.0 96.0 96.0 96.0 102.0 102.0

7.3.5. Signal capture

In order to create our signals, we can imagine continually recording time-of-flight arrays. However, it is be preferable to do so only when needed (that is, only when some movement is detected under the sensor). This is also useful after the datalogging phase is over, when we implement the NanoEdge AI Library; inferences (classifications) only happen after some movement has been detected.

So instead of continuous monitoring, we use a trigger mechanism that only starts recording signals (arrays, buffers) when movement is detected. Here, it is built-in to the time-of-flight sensor, but it could easily be programmed manually. For instance:

- Choose threshold values for the minimum maximum detection distances

- Continuously monitor the distances contained in the 8x8 time-of-flight buffer

- Only keep a given buffer if at least 1 (or a predefined percentage) or the pixels returns a distance value comprised between the two predefined thresholds

During the datalogging, this approach prevents the collection of "useless" empty buffers (no hand). After the datalogging procedure (after the library is implemented), this approach is also useful to limit the number of inferences, effectively decreasing the computational load on the microcontroller, and its power consumption.

7.3.6. From signals to input files

The input signal is well defined, and needs to be captured in a relevant manner for what needs to be achieved.

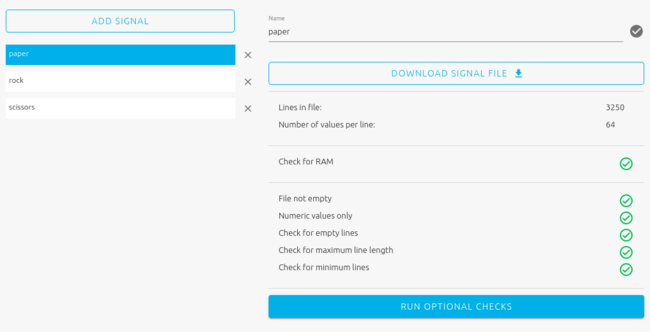

The goal is to distinguish among three possible patterns, or classes. Therefore, we create three input files, each corresponding to one of the classes: rock, paper, scissors.

Each input file to contain a significant number of signal examples (~100 minimum), covering the whole range of "behaviors" or "variety" that pertain to a given class. For instance, for "stone", we need to provide examples of many different versions of "stone" hand positions, that describe the range of everything that could happen and rightfully be called "stone". In practice, this means capturing slightly different hand positions (offset along any of the two directions of the time-of-flight array, but also, at slightly different distances from the sensor), orientations (hand tilt), and shapes (variations in the way the gesture is performed).

This data collection process is greatly helped by the fact that we sample sensor arrays at 15 Hz, which means that we get 900 usable signals per minute.

For each class, we collect data in this way for approximately three minutes. During these three minutes, slight variations are made:

- to the hand's position in all 3 directions (up/down left/right, top/bottom)

- to the hand's orientation (tilt in three directions)

- to the gesture itself (example, for the "scissors" gesture, wiggle the two fingers slightly)

We get a total of 3250 signal examples for each class.

7.3.7. Results

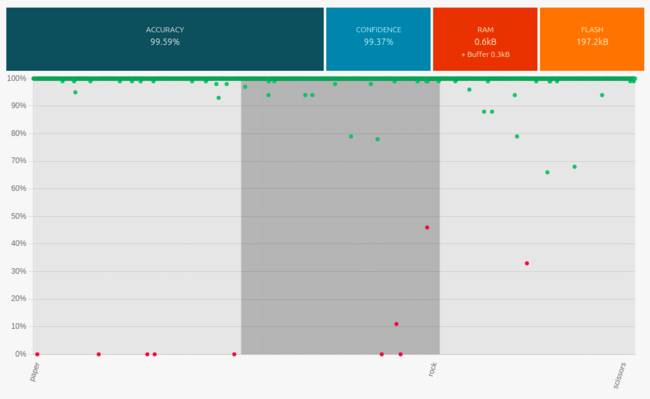

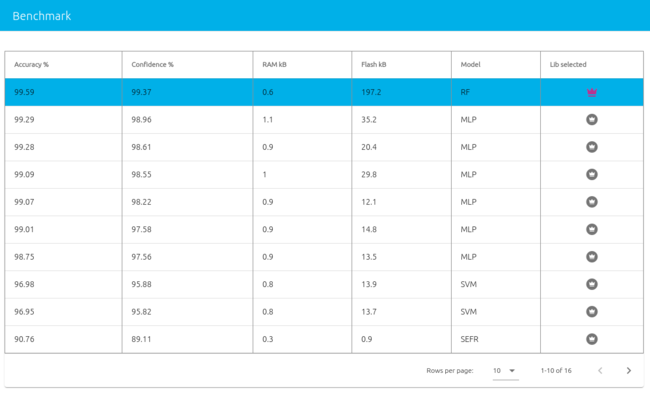

Below are the classification benchmark results obtained in the Studio with these three classes.

Here are the performances of the best classification library, summarized by its confusion matrix.

Below are some other candidate libraries that were found during benchmark, with their associated performances, memory footprint, and ML model info.

Observations:

- We get excellent results with very few misclassifications.

- Before deploying the library, it is good practice to test it by collecting more signal examples, and running them through the NanoEdge AI Emulator, to check that our library indeed performs as expected.

- Considering two libraries with similar performances (accuracy, confidence), experience shows that libraries requiring more flash memory often generalize better to unseen data (more reliable / adaptable when testing in slightly different conditions).

7.4. Dynamic people counting using Time-of-Flight

7.4.1. Context and objective

In this project, the goal is to use a time-of-flight sensor to capture people's movement while crossing a gate, and classify these movements into distinct categories. Here are some examples of behaviors to distinguish:

- One person coming in (IN_1)

- One person going out (OUT_1)

- Two people side-by-side coming in (IN_2)

- Two people side-by-side going out (OUT_2)

- Two people crossing the gate simultaneously in opposite directions (CROSS)

This is achieved using a time-of-flight sensor, by measuring the distances between the sensor (for example, mounted on the ceiling, or on top of the gate, looking down to the ground) and the people passing under it.

The time-of-flight arrays outputted by the sensor are 8x8 in resolution:

This device, placed at the entrance of a room, can also be used to determine the number of people present in the room.

7.4.2. Initial thoughts

In this application, because we want to capture movements and identify them, the signals considered have to be temporal.

This means that each signal example that are collected during datalogging, and that use later on to run inference (classification) using the NanoEdge AI library, have to contain several 8x8 arrays in a row. Putting several 8x8 sensor frames in a row enables us to capture the full length of person's movement.

Note: in order to prevent false detections (for example, a bug flying in front of the sensor, or pets / luggage crossing the sensor frame), we set on the sensor a minimum and maximum threshold distance.

#define MIN_THRESHOLD 100 /* Minimum distance between sensor & object to detect */ #define MAX_THRESHOLD 1400 /* Maximum distance between sensor & object to detect */

7.4.3. Sampling frequency and buffer size

For the sampling frequency, we choose the maximum rate available on the sensor: 15 Hz.

The duration of a person's movement crossing the sensor frame is estimated to be about 2 seconds. To get a two-second signal buffer, we need to get 15*2 = 30 successive frames, as we sample at 15 Hz.

It is good practice to choose a power of two for buffer lengths, in case the NanoEdge AI library requires FFT pre-processing (this is determined automatically by the Studio during benchmarking), so we choose 32 instead of 30.

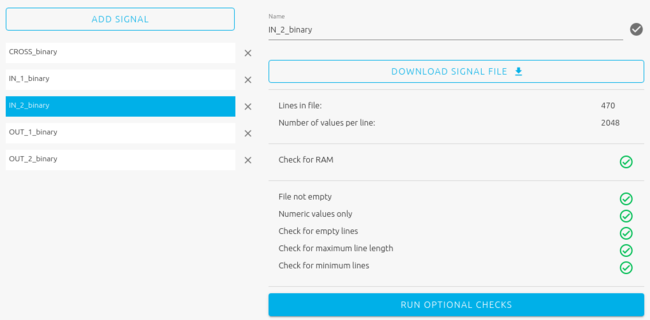

With 32 frames sampled at 15 Hz, it means each signal represents a temporal length of 32/15 = 2.13 seconds, and contains 32*64 = 2048 numerical values.

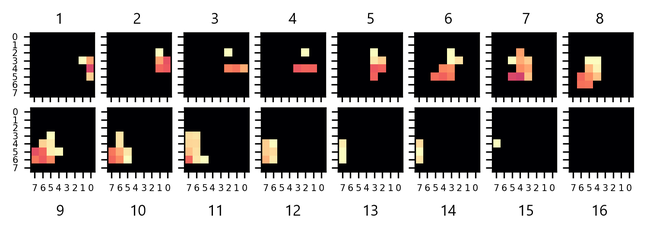

Below is a visual representation of a signal example, corresponding to one person coming in (moving from the right to the left of the frame).

Note: only half of the movement is represented, from frame 1 to frame 16 (the complete buffer has 32 frames). Red/purple pixels correspond to a presence detected at a short distance, yellowish pixels at a longer distance, and black pixels mean no presence detected (outside of the MIN_THRESHOLD and MAX_THRESHOLD).

Here are two people coming in (moving from the right to the left of the frame):

And two people crossing (person at the top moving from right to left, person at the bottom moving from left to right):

Note that some movements are faster than others: for some buffers, the last portion of the signal may be blank (empty / black frames). This is not necessarily a problem, instead it increases signal variety (as long as we also get other sorts of signals, some shorter, some longer, some offset, and so on), which can result in a more robust, more generalizable NanoEdge AI library.

7.4.4. Signal capture

Here, we won't collect ~2-second buffers continuously, but only when a presence is detected under the sensor. This presence detection is built-in to the time-of-flight sensor (but it could be easily re-coded, for example by monitoring each pixel, and watching for sudden changes in distances).

So as soon as some movement is detected under the sensor, a full 32-frame buffer is collected (2048 values).

We also want to prevent buffer collection whenever a static presence is detected under the sensor for an extended time period. To do so, a condition is implemented just after buffer collection, see below, in pseudo-code:

while (1) { if (presence) { collect_buffer(); do { wait_ms(1000); } while (presence); } }

7.4.5. From signals to input files

For this application, the goal is to distinguish among 5 classes:

- One person coming in (IN_1)

- One person going out (OUT_1)

- Two people side-by-side coming in (IN_2)

- Two people side-by-side going out (OUT_2)

- Two people crossing the gate simultaneously in opposite directions (CROSS)

We therefore create fives files, one for each class. Each input file is to contain a significant number of signal examples (~100 minimum), covering the whole range of "behaviors" or "variety" that pertain to a given class. This means that approximately 100 movements for each class must be logged (total of ~500 across all classes), which may be tedious, bus is nonetheless necessary.

With this base of 100 signal examples per class, a couple of additional steps can be carried out for better efficiency and performances: data binarization, and data augmentation.

Data binarization:

In this application, the goal is to detect a movement and characterize it, not to measure distances. Therefore, in order to simplify the input data buffers as much as possible, we replace the distance values by binary values. In other words, whenever a pixel detects some amount of presence, the distance value is replaced with 1, otherwise, it is replaced with 0.

Data augmentation:

To enrich the data, and cover some corner cases (for example, a person passing not in the middle of the frame, but near the edge), we may artificially expand the data, for example using a Python script. Here are some examples of operation that could be made:

- shifting the pixel lines on each frame up or down incrementally, one pixel at a time: by doing this, we simulate datalogs where each movement is slightly offset spatially, up or down, on the sensor's frame. This is done, on the flat 2048-value buffer, by shifting 8-value pixel lines to the right, or to the left (by 8 places) for each 64-value frame, and repeating the operation on all 32 frames. The 8 blank spaces created in this way are filled with zeros.

- shifting the frames contained on each buffer: by doing this, we simulate datalogs where each movement is slightly offset temporally (each movement starts one frame sooner, or one frame later). This is done, on the flat 2048-value buffer, by shifting all 32 64-value frames, to the right, or to the left (by 64 places). The 64 blank spaces created in this way are filled with zeros.

- inverting the frames, so that one type of movement becomes its opposite (for example, "IN_1" would become "OUT_1"). The same can be done to create "OUT_2" by inverting the buffers logged from "IN_2". This would be achieved on the 2048-value buffer by reversing each 8-value pixel line (inverting the order of the 8 numerical values corresponding to a pixel line) for each 64-value frame, and repeating the operation for all 32 frames.

After data binarization and augmentation, we end up with approximately 500-1500 signal examples per class (number of signals per class don't have to be perfectly balanced). For example, we have 470 signals for the class "IN_2":

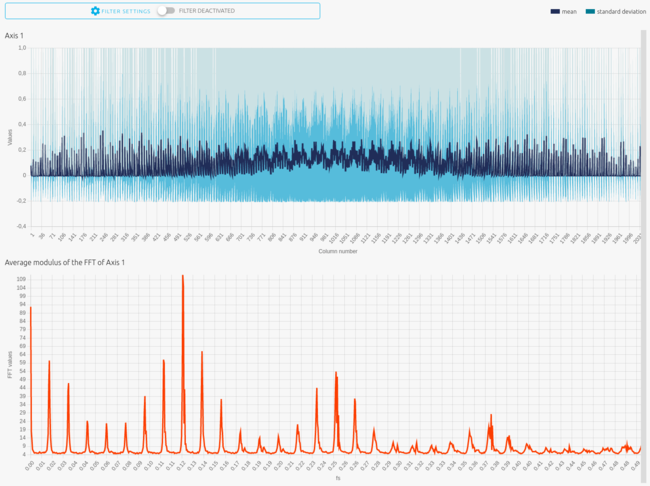

Here is the 2048-value signal preview for "IN_2":

7.4.6. Results

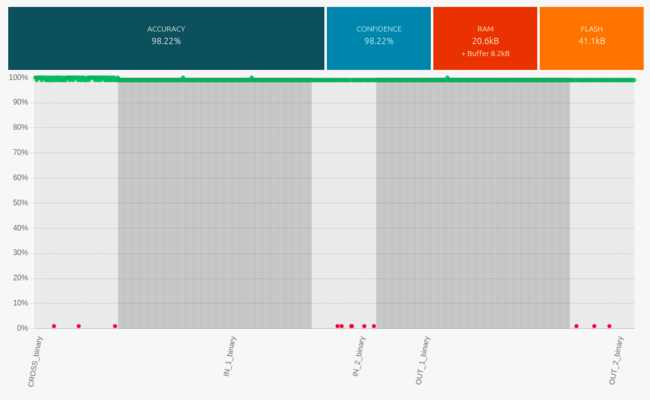

Here are the classification benchmark results obtained in the Studio with these 5 classes.

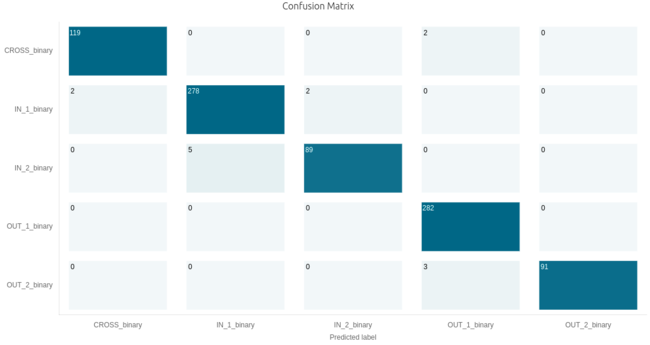

Here are the performances of the best classification library, summarized by its confusion matrix.

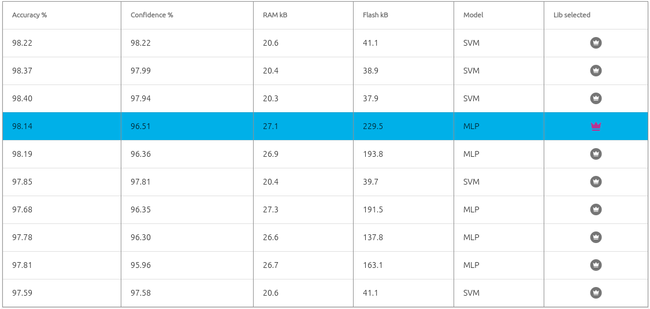

Here are some other candidate libraries that were found during benchmark, with their associated performances, memory footprint, and ML model info.

Note: on the picture above, the best library automatically selected by the Studio is displayed on the 1st line (SVM model, 41.1 KB flash). Here instead, we manually selected a different library (4th line, MLP model, 229.5 KB flash). Indeed, on this occasion the tests (using the Emulator) showed that this second library (MLP, taking the most flash memory), led on average to better results / better generalization than the SVM library.

7.5. Smart Italian coffee watcher

7.5.1. Context and objective

This project’s goal is to create a device that can be placed directly on an Italian coffee maker, that will learn its vibration patterns, and detect when the coffee is ready.

The device will be able to:

- Learn the coffee maker’s vibration patterns.

- Alert the user when an “anomaly” is detected, which corresponds to a perfect Italian coffee.

For this project we used a NUCLEO-L432KC and a LIS3DH.

7.5.2. Sampling frequency and Buffer size

As always, we first need to choose some crucial parameters for the accelerometer, namely:

* the sampling frequency

- the buffer size

- the sensitivity

It needs to be chosen so that the most important features of our signal are captured.

If we were able to log data continuously, we would have used the Sampling finder in NanoEdge AI Studio to select the best parameters. But here, we will start by selecting a sampling rate and buffer size based on experience and test multiple ones if the results are not good enough.

We chose:

- A sampling frequency of 1600 Hz

- 256 samples per signal

- which makes a recorded signal length of L = n / f = 256 / 1600, or 0.16 seconds approximately.

7.5.3. Sensitivity

The LIS3DH supports accelerations ranging from 2g up to 16g. For the coffee burbling, 2g should be enough (we can always adjust it later). We choose a sensitivity of 2g.

7.5.4. Signal capture

To prepare your data logger:

- Locate the spot on your coffee machine where vibrations are most intense when it starts heating up or boiling.

- Fix the accelerometer to the chosen spot, using glue or good double sided tape. Here, we fixed it directly on top of the coffee machine, so that it is not directly in contact with the hottest parts of the metal.

To log regular signals (coffee heating):

- Connect the NUCLEO-L432KC to your computer via USB.

- In the Studio, click Add Signals

- A window pops up. Click the second tab, From serial (USB).

- Select the serial / COM port associated to your NUCLEO-L432KC. Refresh if needed.

- Choose a baudrate of 115200.

- Tick the Max lines box, and choose 100 at least (or more, depending on your patience).

- In the delimiter section, select Space.

- When the coffee maker is starting to heat up, click the red Record button.

- Keep recording until you have at least 100 lines.

- Click the grey Stop button, and check in the preview that the data looks OK.

- Validate import.

To log abnormal signals (coffee burbling), move on to Step 3 in the Studio. Then, repeat the steps described previously, with modifications on the following steps:

- 2. Click Choose signals, under the Step 3 icon.

- 8. When the coffee is starting to burble, click the red Record button.

7.5.5. Using your NanoEdge AI to build your final product

Once you logged data, proceed as usual in NanoEdge AI Studio to get your library. In this section we will use the NanoEdge AI Library functions to build the desired features on our device, and test it out.

Our device acts like a data logger, with a smart twist:

- First, we create a new project in Cube IDE

- We first use the NanoEdgeAI_init and if the response is NEAI_OK we continue,

- It passes the recorded accelerometer buffers to the NanoEdgeAI_learn function to learn the first 50 signals captured (feel free to increase this value).

- Blue LEDs indicate that the learning is in progress.

- The end of the learning phase is indicated by three long LED blinks.

- It then passes all subsequent signals to the NanoEdgeAI_detect function which returns a similarity percentage.

- If similarity < 50%, it increments an alarm counter.

- If similarity >= 50%, it resets the alarm counter.

- If the alarm counter >= 3, it displays the Italian flag and plays a sound.

Now you are ready to play with your new smart sensor!

8. Resources