Sensing is a major part of smart objects and equipment. For example, condition monitoring for predictive maintenance enables context awareness and production performance improvements, and drastically reduces downtime thanks to preventive maintenance.

FP-AI-MONITOR1 (multi-sensor AI data monitoring framework on wireless industrial node, function pack for STM32Cube) helps to jump-start the implementation and development of sensor-monitoring-based applications designed with the X-CUBE-AI Expansion Package for STM32Cube or with NanoEdge™ AI Studio. It covers the entire machine learning design cycle, from data set acquisition to integration on a physical node.

FP-AI-MONITOR1 runs learning and inference sessions in real time on the SensorTile Wireless Industrial Node development kit (STEVAL-STWINKT1B), taking data from onboard sensors as input. FP-AI-MONITOR1 implements a wired interactive CLI to configure the node and manage the learning, detection, and classification phases using NanoEdge™ AI libraries. It also supports an advanced dual mode, which combines anomaly detection from a NanoEdge™ AI library with classification using a CNN model. In addition, for simple in-the-field operation, a standalone battery-operated mode allows basic control through the user button, without using the console.

The STEVAL-STWINKT1B has an STM32L4R9ZI ultra-low-power microcontroller (Arm® Cortex®‑M4 at 120 MHz with 2 Mbytes of Flash memory and 640 Kbytes of SRAM). In addition, the STEVAL-STWINKT1B embeds industrial-grade sensors, including a 6-axis IMU, a 3-axis accelerometer and vibrometer, and an analog microphone, to record inertial, vibrational, and acoustic data with high accuracy at high sampling frequencies.

The rest of the article discusses the following topics:

- The required hardware and software,

- Pre-requisites and setup,

- FP-AI-MONITOR1 console application,

- Running a human activity recognition (HAR) application for sensing on the device,

- Running an anomaly detection application using NanoEdge™ AI libraries on the device,

- Running an n-class classification application using NanoEdge™ AI libraries on the device,

- Running an advanced dual-mode application on the device, which combines anomaly detection on vibration data using NanoEdge™ AI libraries with CNN-based n-class classification on ultrasound data,

- Performing the vibration and ultrasound sensor data collection using a prebuilt binary of FP-SNS-DATALOG1,

- Button operated modes, and

- Some links to useful online resources to help the user better understand and customize the project for their own needs.

This article is intended only as a quick-start guide. For complete FP-AI-MONITOR1 user instructions, refer to the FP-AI-MONITOR1 user manual.

1. Hardware and software overview

1.1. SensorTile Wireless Industrial Node evaluation kit STEVAL-STWINKT1B

The SensorTile Wireless Industrial Node (STEVAL-STWINKT1B) is a development kit and reference design that simplifies the prototyping and testing of advanced industrial IoT applications such as condition monitoring and predictive maintenance. It is powered by an ultra-low-power Arm® Cortex®-M4 MCU at 120 MHz with FPU and 2048 Kbytes of Flash memory (STM32L4R9). STEVAL-STWINKT1B is equipped with a microSD™ card slot for standalone data logging applications. It also features a wide range of industrial IoT sensors, including but not limited to:

- an ultra-wide bandwidth (up to 6 kHz), low-noise, 3-axis digital vibration sensor (IIS3DWB),

- a 6-axis digital accelerometer and gyroscope iNEMO inertial measurement unit (IMU) with machine learning core (ISM330DHCX), and

- an analog MEMS microphone with a frequency response up to 80 kHz (IMP23ABSU).

Refer to the STEVAL-STWINKT1B documentation for complete information about the sensors and features supported by the board.

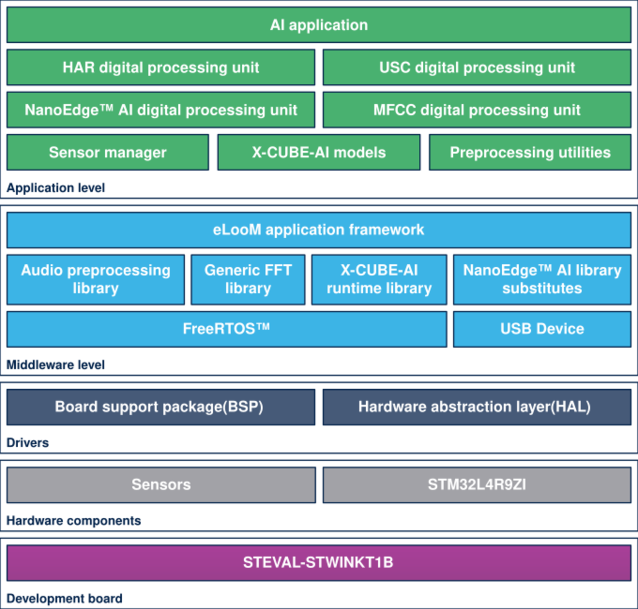

1.2. FP-AI-MONITOR1 software description

The top-level architecture of the FP-AI-MONITOR1 function pack is shown in the following figure.

2. Prerequisites and setup

2.1. Hardware prerequisites and setup

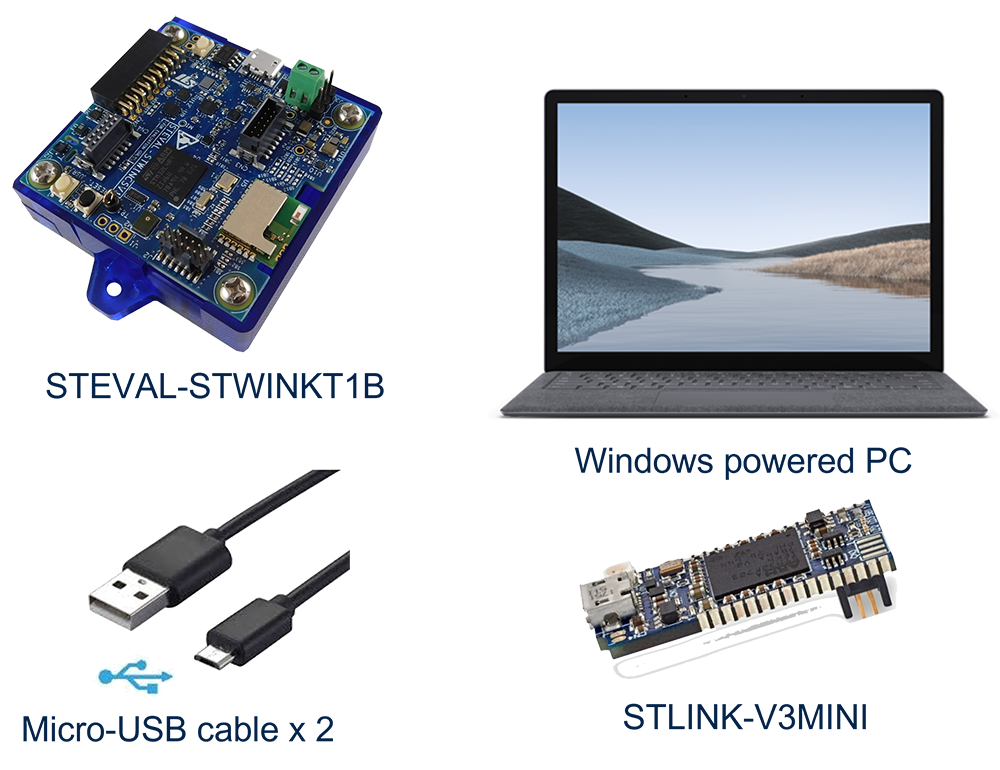

To use the FP-AI-MONITOR1 function pack on STEVAL-STWINKT1B, the following hardware items are required:

- STEVAL-STWINKT1B development kit board,

- Windows® powered laptop/PC (Windows® 7, 8, or 10),

- Two Micro-USB cables, one to connect the sensor board to the PC and another one for the STLINK-V3MINI, and

- an STLINK-V3MINI.

2.2. Software requirements

2.2.1. FP-AI-MONITOR1

- Download the FP-AI-MONITOR1 package from the ST website, then extract and copy the contents of the .zip file into a folder on your PC. The package contains binaries and source code for the sensor board STEVAL-STWINKT1B.

2.2.2. IDE

- Install one of the following IDEs:

  - STMicroelectronics STM32CubeIDE version 1.10.0 or later (tested on 1.10.0),

  - IAR Embedded Workbench for Arm (EWARM) toolchain version 9.20.1 or later (tested on 9.20.1), or

  - Keil® Microcontroller Development Kit (MDK-ARM) toolchain version 5.32 or later (tested on 5.32).

2.2.3. STM32CubeProgrammer

- STM32CubeProgrammer (STM32CubeProg) is an all-in-one multi-OS software tool for programming STM32 products. It provides an easy-to-use and efficient environment for reading, writing, and verifying device memory through both the debug interface (JTAG and SWD) and the bootloader interface (UART, USB DFU, I2C, SPI, and CAN). STM32CubeProgrammer offers a wide range of features to program STM32 internal memories (such as Flash memory, RAM, and OTP) as well as external memories. FP-AI-MONITOR1 is tested with STM32CubeProgrammer version 2.10.0.

- This software can be downloaded from STM32CubeProg.

2.2.4. TeraTerm

- TeraTerm is an open-source and freely available software terminal emulator, which is used to host the CLI of the FP-AI-MONITOR1 through a serial connection.

- Download and install the latest version available from TeraTerm.

2.2.5. STM32CubeMX

STM32CubeMX is a graphical tool that makes it easy to configure STM32 microcontrollers and microprocessors and to generate the corresponding initialization C code for the Arm® Cortex®-M core (or a partial Linux® Device Tree for the Arm® Cortex®-A core) through a step-by-step process. Its salient features include:

- Intuitive STM32 microcontroller and microprocessor selection,

- Generation of initialization C code project, compliant with IAR Systems®, Keil®, and STM32CubeIDE for Arm® Cortex®-M core,

- Development of enhanced STM32Cube Expansion Packages thanks to STM32PackCreator, and

- Integration of STM32Cube Expansion Packages into the project.

FP-AI-MONITOR1 requires STM32CubeMX version 6.5.0 or later (tested with 6.6.0). To download STM32CubeMX and obtain details of all its features, visit st.com.

2.2.6. X-CUBE-AI

X-CUBE-AI is an STM32Cube Expansion Package that is part of the STM32Cube.AI ecosystem. It extends STM32CubeMX capabilities with automatic conversion of pre-trained artificial intelligence models and integration of the generated optimized library into the user's project. The easiest way to use it is to download it from within the STM32CubeMX tool. FP-AI-MONITOR1 requires X-CUBE-AI version 7.2.0 or later (tested with 7.2.0). It can be downloaded by following the instructions in the user manual "Getting started with X-CUBE-AI Expansion Package for Artificial Intelligence (AI)" (UM2526). The X-CUBE-AI Expansion Package also offers several means to validate AI models (neural network and scikit-learn models in .h5, .onnx, and .tflite formats) both on a desktop PC and on STM32, as well as to measure performance on STM32 devices (computational and memory footprints) without handwritten ad-hoc C code.

2.2.7. Python 3.7.3

Python is an interpreted, high-level, general-purpose programming language. Its design philosophy emphasizes code readability through significant indentation, and its language constructs and object-oriented approach aim to help programmers write clear, logical code for small and large-scale projects. To build and export AI models, the reader must set up a Python environment with a list of packages. The required packages and their versions are listed in the text file /FP-AI-MONITOR1_V2.1.0/Utilities/requirements.txt. To install all the packages specified in requirements.txt, run the following command from that directory in an Anaconda prompt or an Ubuntu terminal:

pip install -r requirements.txt

2.2.8. NanoEdge™ AI Studio

NanoEdge™ AI Studio is a new machine learning (ML) technology designed to bring innovation easily to end users. In just a few steps, developers can create optimal ML libraries from a minimal amount of data, for:

- Anomaly Detection,

- Classification, and

- Extrapolation.

In addition, users can program and configure the STEVAL-STWINKT1B for data logging directly from the Studio, using a high-speed data logger.

This function pack supports only the Anomaly Detection and n-Class Classification libraries generated by NanoEdge™ AI Studio for STEVAL-STWINKT1B.

FP-AI-MONITOR1 is tested using NanoEdge™ AI Studio V3.1.0.


2.3. Installing the function pack to STEVAL-STWINKT1B

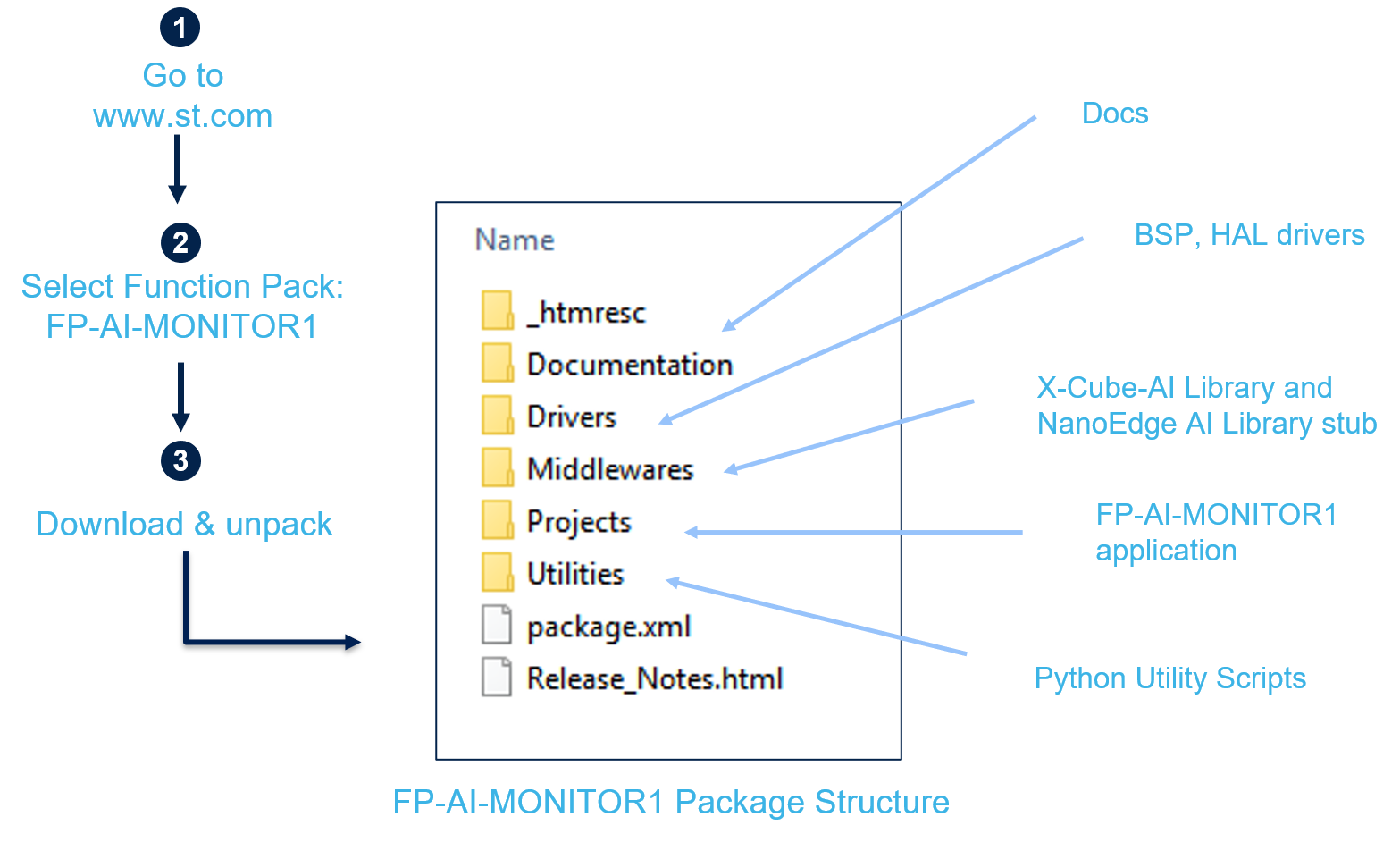

2.3.1. Getting the function pack

The first step is to get the FP-AI-MONITOR1 function pack from the ST website. Once the pack is downloaded, unzip it and copy its contents to a folder on the PC. The steps of the process, along with the content of the folder, are shown in the following image.

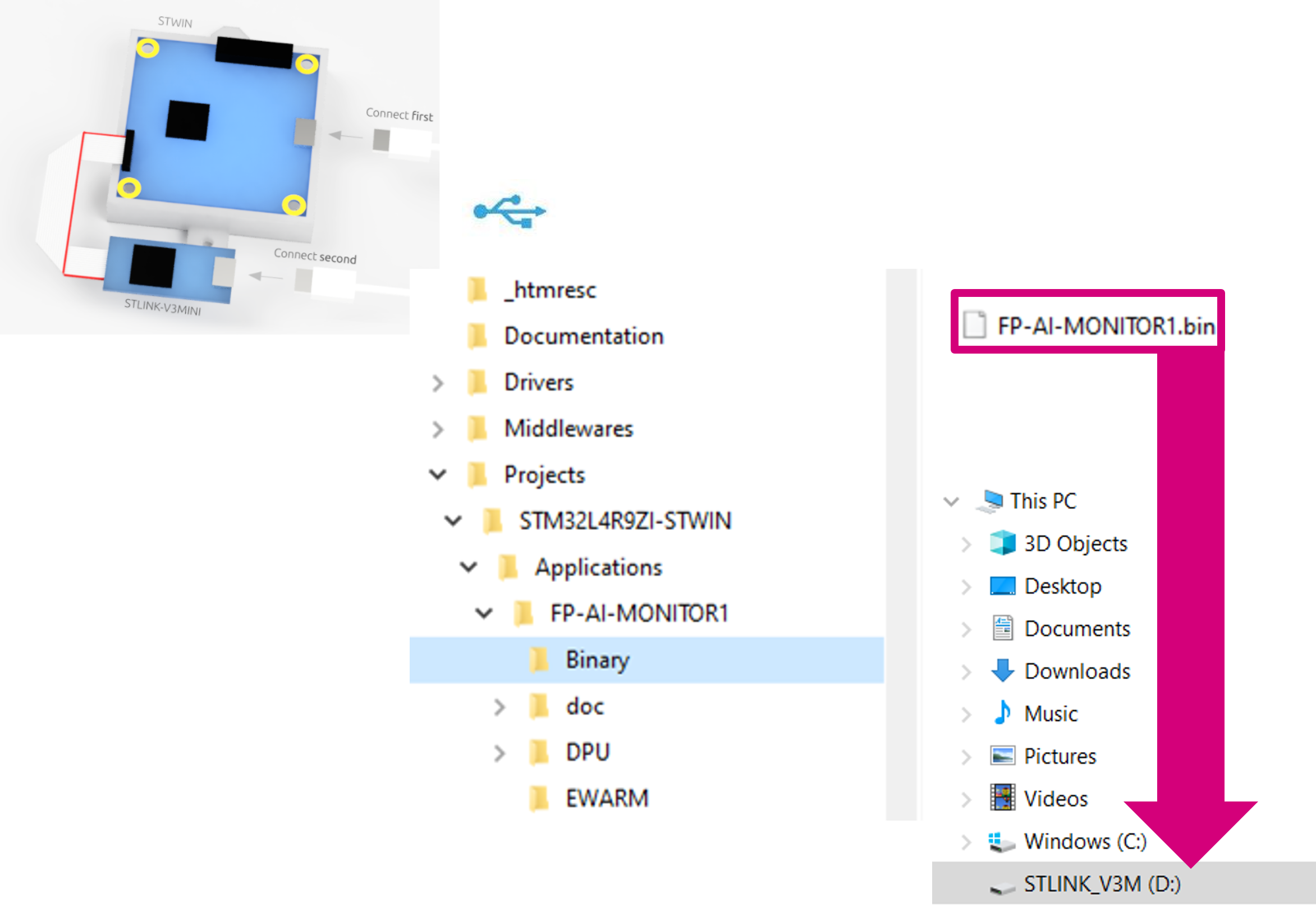

2.3.2. Flashing the application on the sensor board STEVAL-STWINKT1B

Once the package has been downloaded and unpacked, the next step is to program the sensor node with the binary of the function pack. For convenience, the function pack includes a prebuilt binary file of the project, located at /FP-AI-MONITOR1_V2.1.0/Projects/STM32L4R9ZI-STWIN/Applications/FP-AI-MONITOR1/Binary/FP-AI-MONITOR1.bin. The sensor board can easily be programmed with this binary by dragging and dropping it onto the drive that appears on the PC when the STLINK-V3MINI is connected, as shown in the figure below.
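Because drag-and-drop programming amounts to copying the .bin file onto the probe's drive, this step can also be scripted. The following minimal sketch assumes the drive is already mounted and that its mount point or drive letter is known (it depends on the PC); the helper name flash_by_copy is illustrative and not part of the function pack.

```python
import shutil
from pathlib import Path

# Location of the prebuilt binary relative to the unpacked package root
# (the FP-AI-MONITOR1_V2.1.0 folder), as given in this article.
BINARY_REL_PATH = Path(
    "Projects/STM32L4R9ZI-STWIN/Applications/FP-AI-MONITOR1/Binary/FP-AI-MONITOR1.bin"
)


def flash_by_copy(package_root, probe_drive):
    """Copy the prebuilt binary to the probe's mass-storage drive.

    This mirrors the drag-and-drop action. `probe_drive` (a mount point or
    drive letter) is an assumption that depends on the PC.
    Returns the path of the copied file.
    """
    src = Path(package_root) / BINARY_REL_PATH
    if not src.is_file():
        raise FileNotFoundError(f"prebuilt binary not found: {src}")
    dst_dir = Path(probe_drive)
    if not dst_dir.is_dir():
        raise NotADirectoryError(f"probe drive not mounted: {dst_dir}")
    # shutil.copy keeps the file name when the destination is a directory.
    return Path(shutil.copy(str(src), str(dst_dir)))
```

A call would look like `flash_by_copy(r"C:\ST\FP-AI-MONITOR1_V2.1.0", "E:\\")`, where both paths are hypothetical examples.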

3. FP-AI-MONITOR1 console application

FP-AI-MONITOR1 provides an interactive command-line interface (CLI) application. This CLI application allows the user to configure and control the sensor node and to perform different AI operations on the edge, including learning and anomaly detection (NanoEdge™ AI libraries), n-class classification (NanoEdge™ AI libraries), dual mode (a combination of NanoEdge™ AI detection and CNN-based classification), and human activity recognition using a CNN. The following sections provide a short guide on how to install this CLI application on a sensor board and control it through a serial connection from TeraTerm.

3.1. Setting up the console

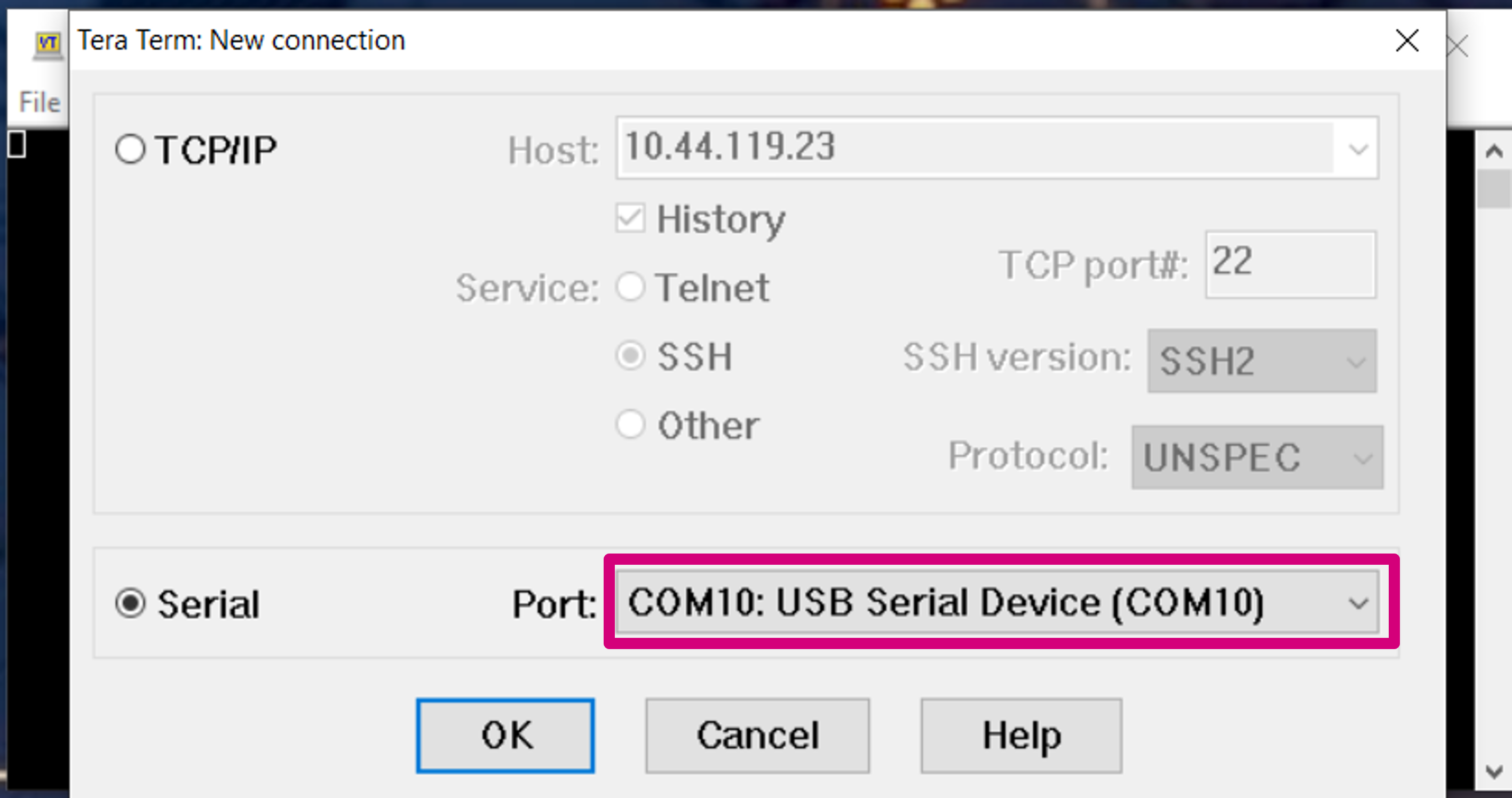

Once the sensor board is programmed with the binary of the project (as shown in section 2.3), the next step is to set up the serial connection between the board and the PC through TeraTerm. To do so, start TeraTerm, create a new serial connection (for example from the toolbar), and select the port through which the board communicates. In the figure below, this is COM10 - USB Serial Device (COM10), but the port number can differ from one PC to another.

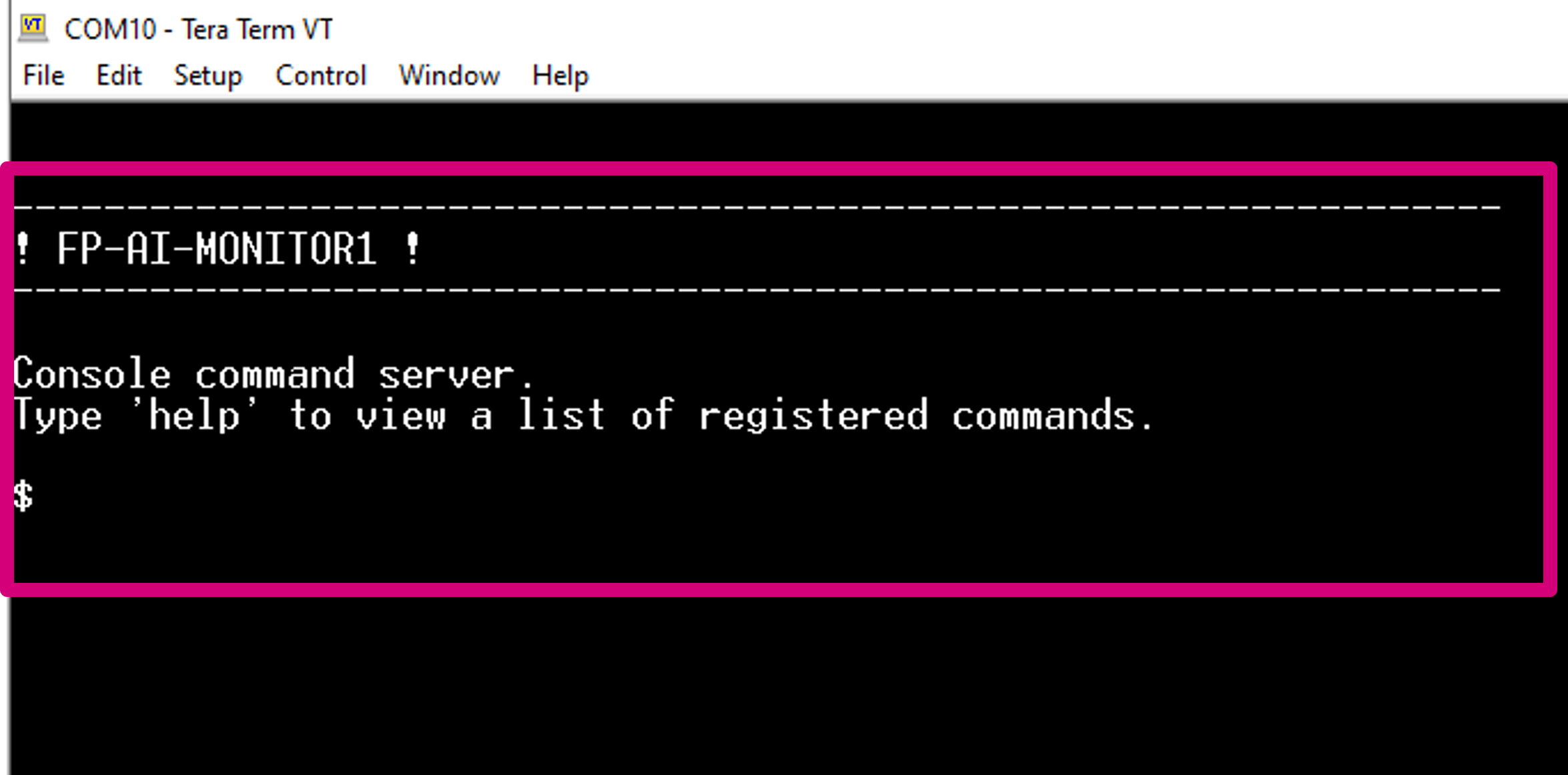

Once the connection is established, the message below is displayed. If it is not, reset the board by pressing its [RESET] button.

Typing help displays the list of all available commands along with their usage instructions.
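TeraTerm is the tool used in this article, but the same exchange can be scripted from the PC, for example to log results. The minimal sketch below sends one command and collects the reply lines. It assumes the CLI accepts a CR+LF line terminator and that the caller opens the port with a read timeout; run_command is a hypothetical helper, not part of FP-AI-MONITOR1.

```python
from typing import List


def run_command(port, command: str, eol: str = "\r\n", max_lines: int = 200) -> List[str]:
    """Send one CLI command and collect the non-empty reply lines.

    `port` is any object with write(bytes) and readline() -> bytes, such as
    a pyserial Serial opened with a read timeout. Reading stops when
    readline() returns b"" (timeout: the board stopped printing) or after
    max_lines lines. The CR+LF terminator is an assumption.
    """
    port.write((command + eol).encode("ascii"))
    lines = []
    for _ in range(max_lines):
        raw = port.readline()
        if not raw:  # timed out: no more output for now
            break
        text = raw.decode("ascii", errors="replace").strip()
        if text:  # skip blank lines
            lines.append(text)
    return lines
```

With the pyserial package installed, the port can be opened with `serial.Serial("COM10", 115200, timeout=1)` (COM10 being the example port from the figure) and passed to `run_command(port, "help")`. On a USB virtual COM port, the baud-rate value is usually ignored by the device.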

3.2. Configuring the sensors

Through the CLI, the user can configure the supported sensors for sensing and condition monitoring applications. The list of all supported sensors is displayed on the CLI console by entering the command sensor_info. This command prints the supported sensors along with their IDs, as shown in the image below; these IDs are used to address each sensor when configuring it. The configurable options for these sensors include:

- enable: set to '1' to activate or '0' to deactivate the sensor,

- ODR: to set the output data rate of the sensor from the list of available options, and

- FS: to set the full-scale range from the list of available options.

The current value of any parameter of a given sensor can be printed using the command:

$ sensor_get <sensor_id> <param>

or all the information about the sensor can be printed using the command:

$ sensor_get <sensor_id> all

Similarly, the values for any of the available configurable parameters can be set through the command:

$ sensor_set <sensor_id> <param> <val>

The figure below shows a complete example of getting and setting these values, with the values before and after executing the sensor_set command.
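When scripting the configuration, it can help to build and sanity-check these command strings before sending them. This is a minimal sketch: the parameter names are those listed above, and whether the CLI is case-sensitive about them should be checked against the help output. The legal ODR and FS values come from the board (sensor_get <sensor_id> all), so they are not validated here. The function names are hypothetical.

```python
from typing import Union

# Configurable parameters as listed in this article.
SENSOR_PARAMS = ("enable", "ODR", "FS")


def sensor_get_cmd(sensor_id: int, param: str = "all") -> str:
    """Build a sensor_get command; 'all' prints every parameter."""
    if param != "all" and param not in SENSOR_PARAMS:
        raise ValueError("unknown parameter: " + param)
    return f"sensor_get {sensor_id} {param}"


def sensor_set_cmd(sensor_id: int, param: str, value: Union[int, float]) -> str:
    """Build a sensor_set command after basic local checks."""
    if param not in SENSOR_PARAMS:
        raise ValueError("unknown parameter: " + param)
    if param == "enable" and value not in (0, 1):
        raise ValueError("enable must be 0 or 1")
    return f"sensor_set {sensor_id} {param} {value}"
```

For example, `sensor_set_cmd(2, "ODR", 26)` yields `"sensor_set 2 ODR 26"`; the sensor ID 2 is a hypothetical placeholder for an ID reported by sensor_info.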

4. Inertial data classification with STM32Cube.AI

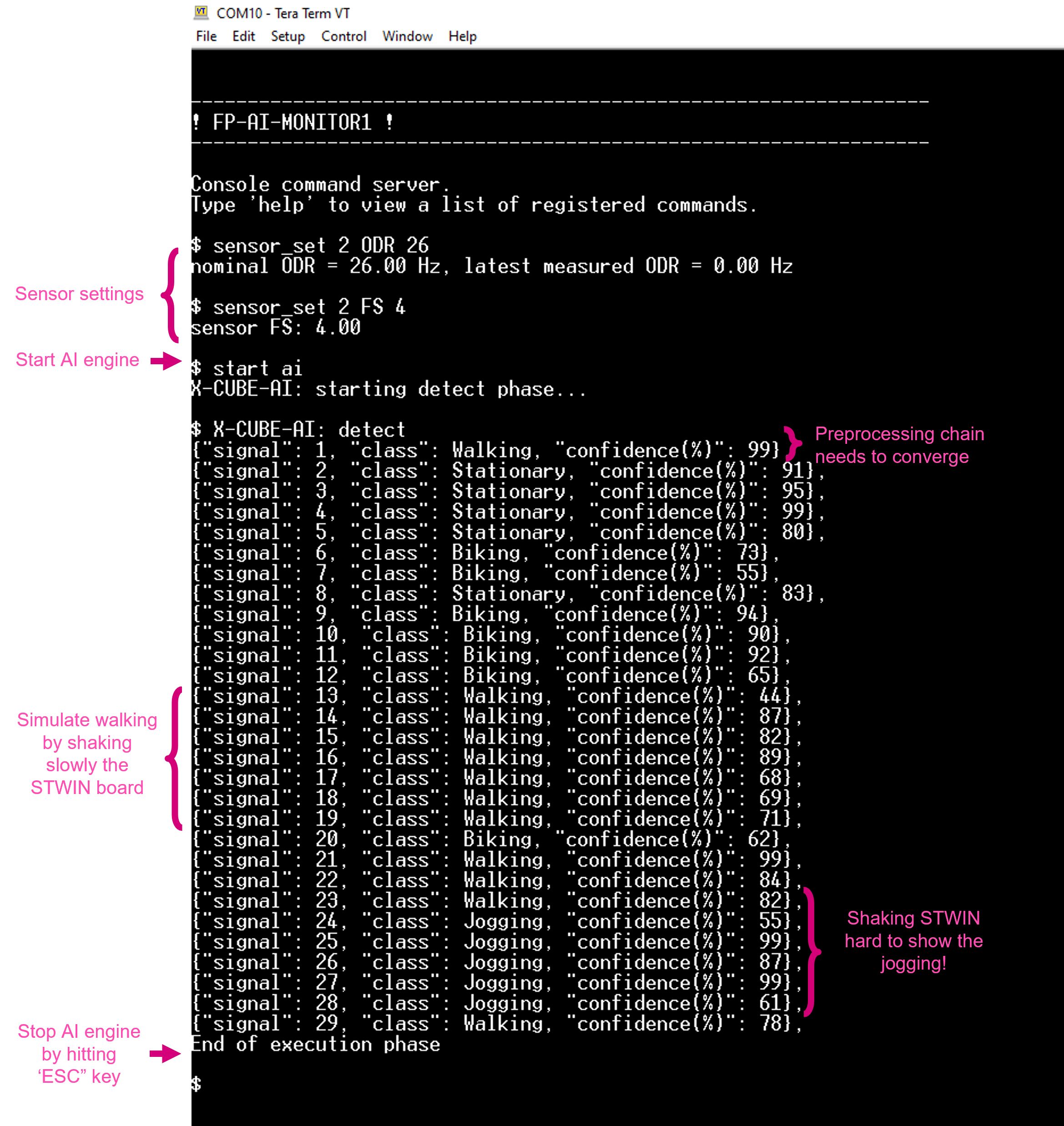

The CLI application comes with a prebuilt Human Activity Recognition (HAR) model. This functionality can be started by typing the command:

$ start ai

Note that the provided HAR model is built with a dataset created using the ISM330DHCX_ACC sensor with ODR = 26 Hz and FS = 4 g. To achieve good performance, the user must apply these values to the sensor configuration, following the instructions in section 3.2 Configuring the sensors.

The $ start ai command runs inference on the accelerometer data and predicts the activity being performed, along with its confidence. The supported activities are:

- Stationary,

- Walking,

- Jogging, and

- Biking.
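The predicted activity and its confidence can be understood as the arg-max of the model's class probabilities. The sketch below assumes that the model's output order matches the list above and that raw scores may need a softmax to become probabilities; both points are assumptions, not details taken from the function pack sources.

```python
import math
from typing import List, Sequence, Tuple

# Assumed output order of the model; it follows the list in this article.
HAR_CLASSES = ("Stationary", "Walking", "Jogging", "Biking")


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: turns raw scores into probabilities."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def har_decision(probs: Sequence[float]) -> Tuple[str, float]:
    """Return (activity, confidence): the most probable class and its probability."""
    if len(probs) != len(HAR_CLASSES):
        raise ValueError("expected one probability per activity")
    best = max(range(len(probs)), key=lambda i: probs[i])
    return HAR_CLASSES[best], probs[best]
```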

The following screenshot shows the normal working session of the AI command in the CLI application.

5. Anomaly detection with NanoEdge™ AI Studio

FP-AI-MONITOR1 includes a pre-integrated stub that can easily be replaced by an AI condition monitoring library generated by NanoEdge™ AI Studio. This stub simulates the NanoEdge™ AI-related functionality, such as running the learning and detection phases on the edge.

The learning phase is started by issuing the command $ start neai_learn from the CLI console or by long-pressing the [USR] button. The learning progress is reported by the slowly blinking green LED on STEVAL-STWINKT1B and, when the console is connected, in the CLI as shown below:

NanoEdge AI: learn

$ CTRL:! This is a stubbed version, please install the NanoEdge AI library!

{"signal": 1, "status": "need more signals"},

{"signal": 2, "status": "need more signals"},

:

:

{"signal": 10, "status": success}

{"signal": 11, "status": success}

:

:

End of execution phase

The CLI reports the learning progress after every learned signal. The NanoEdge AI library needs to learn at least ten signals, so for signals 1 to 9 a 'need more signals' status is printed along with the signal ID. Once ten signals have been learned, the 'success' status is printed. The learning can be stopped by pressing the ESC key on the keyboard or the [USR] button.
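The reporting rule can be summarized in a few lines. This is an illustrative sketch of the behavior described above (learn_status is a hypothetical name), not code from the NanoEdge™ AI library.

```python
MIN_LEARN_SIGNALS = 10  # minimum number of learned signals, per this article


def learn_status(signal_index: int) -> str:
    """Status printed after learning the signal with this 1-based index."""
    if signal_index < 1:
        raise ValueError("signal indices start at 1")
    if signal_index < MIN_LEARN_SIGNALS:
        return "need more signals"
    return "success"
```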

Similarly, the user starts the condition monitoring process by issuing the command $ start neai_detect or by double-pressing the [USR] button. This starts the inference phase, which checks the similarity of each presented signal with the learned normal signals. If the similarity is less than the set threshold (default: 90%), a message is printed in the CLI reporting the anomaly along with the similarity of the anomalous signal.

The process is stopped by pressing the ESC key on the keyboard or pressing the [USR] button.

This behavior is shown in the snippet below:

$ start neai_detect

NanoEdge AI: starting detect phase.

NanoEdge AI: detect

$ CTRL:! This is a stubbed version, please install the NanoEdge AI library!

{"signal": 1, "similarity": 0, "status": anomaly},

{"signal": 2, "similarity": 1, "status": anomaly},

{"signal": 3, "similarity": 2, "status": anomaly},

:

:

{"signal": 90, "similarity": 89, "status": anomaly},

{"signal": 91, "similarity": 90, "status": anomaly},

{"signal": 102, "similarity": 0, "status": anomaly},

{"signal": 103, "similarity": 1, "status": anomaly},

{"signal": 104, "similarity": 2, "status": anomaly},

End of execution phase
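To post-process a captured console log on the PC, the result lines can be parsed. They look like JSON but are not strict JSON (bare words such as anomaly, trailing commas), so json.loads would reject them; the regex-based sketch below is a tolerant alternative. parse_neai_line is a hypothetical helper, and the line format is inferred only from the logs shown in this article.

```python
import re
from typing import Dict, Union

# One "key": value pair; the value is either a quoted string or a bare token
# running up to the next comma or closing brace.
_PAIR = re.compile(r'"(\w+)"\s*:\s*("([^"]*)"|[^,}]+)')


def parse_neai_line(line: str) -> Dict[str, Union[int, str]]:
    """Parse one JSON-like result line printed by the CLI.

    Integers are converted; other values are kept as strings.
    Lines without key/value pairs return an empty dict.
    """
    fields: Dict[str, Union[int, str]] = {}
    for key, raw, quoted in _PAIR.findall(line):
        if raw.startswith('"'):
            fields[key] = quoted  # quoted value, without the quotes
        else:
            token = raw.strip()
            fields[key] = int(token) if token.lstrip("-").isdigit() else token
    return fields
```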

In addition to the CLI, the status is also shown by the LEDs on the STEVAL-STWINKT1B. A fast-blinking green LED shows that detection is in progress. Whenever an anomaly is detected, the orange LED blinks twice to report it. If not enough signals (at least 10) have been learned, a "need more signals" message with a similarity value equal to 0 appears.

NOTE: This behavior is simulated using a stub library in which the similarity starts from 0 when the detection phase starts and increments with the signal count. Once the similarity reaches 100, it resets to 0. As a result, no anomalies are reported while the similarity is between 90 and 100.
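The stub's similarity sequence and the threshold test can be reproduced as follows, for example to check a captured log. This is a sketch with hypothetical function names: the wrap-around after 100 matches the log above (signal 102 shows similarity 0), and the anomaly test uses the strict "less than the threshold" rule from the text. The log above also lists similarity 90 as an anomaly, so this article does not settle the behavior at the exact boundary.

```python
DEFAULT_THRESHOLD = 90  # default threshold (%) as stated in this article


def stub_similarity(signal_index: int) -> int:
    """Similarity the stub reports for the n-th detected signal (1-based).

    Starts at 0, increments by 1 per signal, and wraps to 0 after 100.
    """
    if signal_index < 1:
        raise ValueError("signal indices start at 1")
    return (signal_index - 1) % 101


def is_anomaly(similarity: int, threshold: int = DEFAULT_THRESHOLD) -> bool:
    """An anomaly is reported when the similarity is below the threshold."""
    return similarity < threshold
```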

To find the detailed process of library generation and integration refer to the user manual of FP-AI-MONITOR1.

5.1. Additional parameters in condition monitoring

For user convenience, the CLI application also provides handy options to easily fine-tune the inference and learning processes. The list of all the configurable variables is available by issuing the following command:

$ neai_get all

NanoEdgeAI: signals = 0

NanoEdgeAI: sensitivity = 1.000000

NanoEdgeAI: threshold = 95

NanoEdgeAI: timer = 0

NanoEdge AI: sensor = 2

Each of these parameters can be configured using the neai_set <param> <val> command.

This section explains how to use these parameters to control the learning and detection phases. By setting the "signals" and "timer" parameters, the user can control how many signals are processed, or for how long the learning and detection phases run. If both parameters are set, the phase stops as soon as the first condition is met. For example, to learn 10 signals, the user issues the following command before starting the learning phase:

$ neai_set signals 10

NanoEdge AI: signals set to 10

$ start neai_learn

NanoEdge AI: starting

$ {"signal": 1, "status": "need more signals"},

{"signal": 2, "status": "need more signals"}

{"signal": 3, "status": "need more signals"}

:

:

{"signal": 10, "status": success}

NanoEdge AI: stopped

If both of these parameters are set to "0" (default value), the learning and detection phases run indefinitely.
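The stopping rule for the signals and timer parameters can be written as a small predicate. In this sketch, the elapsed time and the timer limit are expressed in the same unit as the timer parameter (the unit is not stated in this article), and should_stop is a hypothetical helper, not a function-pack API.

```python
def should_stop(signals_done: int, elapsed: float,
                max_signals: int = 0, max_time: float = 0) -> bool:
    """Decide whether a learning or detection phase should end.

    max_signals and max_time mirror the "signals" and "timer" parameters;
    a value of 0 disables that limit. If both limits are set, whichever is
    reached first ends the phase. If both are 0, the phase runs until the
    user stops it (ESC key or [USR] button).
    """
    if max_signals > 0 and signals_done >= max_signals:
        return True
    if max_time > 0 and elapsed >= max_time:
        return True
    return False
```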

The threshold parameter is used to report anomalies: for any signal whose similarity is below the threshold value, an anomaly is reported. The default threshold value used in the CLI application is 90. Users can change this value using the neai_set threshold <val> command.

Finally, the sensitivity parameter is used as an emphasis parameter, with a default value of 1. Increasing the sensitivity makes signal matching stricter, while reducing it relaxes the similarity calculation, resulting in higher similarity values.

For further details on how NanoEdge™ AI libraries work users are invited to read the detailed documentation of NanoEdge™ AI Studio.

6. n-class classification with NanoEdge™ AI

This section provides an overview of the classification application provided in FP-AI-MONITOR1, based on the NanoEdge™ AI classification library. FP-AI-MONITOR1 includes a pre-integrated stub that can easily be replaced by an AI classification library generated using NanoEdge™ AI Studio. This stub simulates the NanoEdge™ AI classification functionality by simply alternating between two classes every ten consecutive signals.

Unlike the anomaly detection library, the classification library from NanoEdge™ AI Studio comes with static knowledge of the data and does not require any learning on the device. Built from the provided sample data, the library contains the functions needed to best distinguish each class from the others and to assign the correct label when performing detection on the edge. The classification application powered by NanoEdge™ AI is started by issuing the command $ start neai_class, as shown in the snippet below.

$ start neai_class

NanoEdgeAI: starting classification phase...

$ CTRL:! This is a stubbed version, please install the NanoEdge AI library!

NanoEdge AI: classification

{"signal": 1, "class": Class1}

{"signal": 2, "class": Class1}

:

:

{"signal": 10, "class": Class1}

{"signal": 11, "class": Class2}

{"signal": 12, "class": Class2}

:

:

{"signal": 20, "class": Class2}

{"signal": 21, "class": Class1}

:

:

End of execution phase

The CLI shows that the first ten signals are classified as "Class1" and the next ten as "Class2". The classification phase can be stopped by pressing the ESC key on the keyboard or the [USR] button.

NOTE: This behavior is simulated using a stub library that iterates over the classes, displaying ten consecutive labels for one class, then ten labels for the next class, and so on.
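The stub's labeling pattern reduces to integer division of the signal index. This sketch (stub_class is a hypothetical name) reproduces the log above and is only meant for checking captured output, not as the stub's actual source.

```python
STUB_CLASSES = ("Class1", "Class2")  # labels shown in the log above
SIGNALS_PER_CLASS = 10               # consecutive signals per label


def stub_class(signal_index: int) -> str:
    """Label the stub prints for the n-th signal (1-based)."""
    if signal_index < 1:
        raise ValueError("signal indices start at 1")
    block = (signal_index - 1) // SIGNALS_PER_CLASS  # 0 for 1-10, 1 for 11-20, ...
    return STUB_CLASSES[block % len(STUB_CLASSES)]
```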

For information on how to generate an actual library to replace the stub, readers are invited to refer to the FP-AI-MONITOR1 user manual.

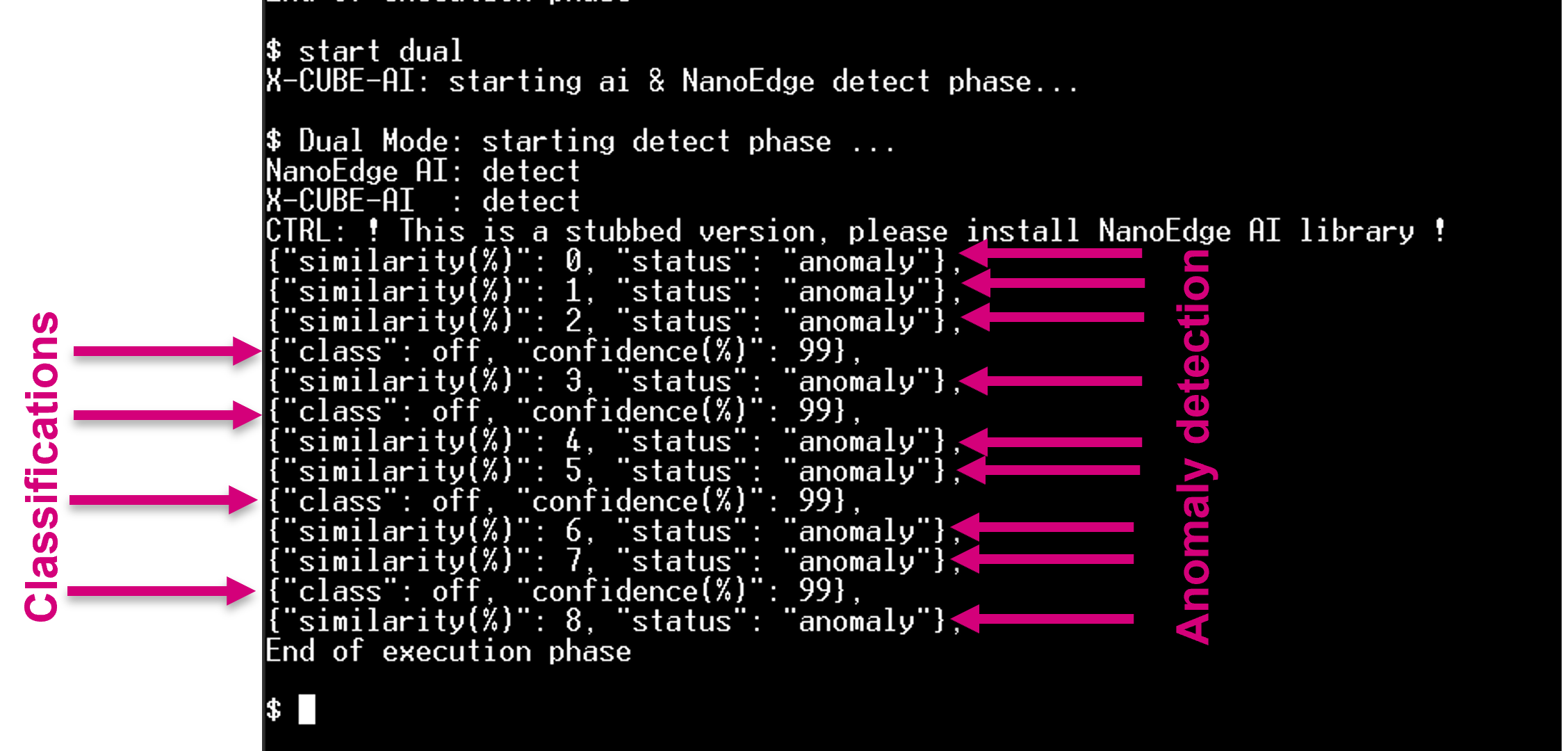

7. Anomaly detection and classification using dual-mode application

In addition to the three applications described in the sections above, FP-AI-MONITOR1 provides an advanced execution phase called the dual application mode. This mode uses anomaly detection based on a NanoEdge™ AI library and performs classification with a prebuilt ANN model on ultrasound data acquired with an analog microphone. Dual mode works in a power-saving configuration: a low-power anomaly detection algorithm based on the NanoEdge™ AI library runs continuously on vibration data, and the ANN classification, fed by a high-frequency analog microphone pipeline, is triggered only when an anomaly is detected. Apart from this trigger, the two applications are independent of each other. Note also that dual mode is designed for a USB fan running at maximum speed, and does not work well when the fan runs at other speeds.

To test the dual application execution phase, the user first needs to train the anomaly detection library using the $ start neai_learn command, with the fan running at its highest speed, for at least ten signals. If fewer than 10 signals have been learned, a "need more signals" message with a similarity value equal to 0 appears and dual mode does not run. Once the normal conditions have been learned, the user can start the dual application by issuing the following command:

$ start dual

Whenever an anomaly is detected, meaning a signal with a similarity of less than 90% (or the threshold value set by the user), the ultrasound-based classifier is started. Both applications run asynchronously. The ultrasound-based classification model takes about one second of data, preprocesses it using mel-frequency cepstral coefficients (MFCC), and feeds the result to a pretrained neural network. It then prints the class label along with its confidence. The network is trained for four classes, [ 'Off', 'Normal', 'Clogging', 'Friction' ]: 'Off' when the fan is off, 'Normal' when it runs normally at maximum speed, 'Clogging' when it is clogged while running at maximum speed, and 'Friction' when friction is applied to the rotating axis. As soon as the anomaly detection reports a normal signal again, the ultrasound-based ANN is suspended.
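The trigger logic between the two pipelines can be sketched as a small state machine. This sketch deliberately simplifies the asynchronous firmware into a synchronous step function; the classify callback stands in for the MFCC preprocessing plus ANN inference and, like the class name DualModeSketch, is hypothetical.

```python
from typing import Callable, Optional

# Classes of the prebuilt ultrasound network, as listed in this article.
FAN_CLASSES = ("Off", "Normal", "Clogging", "Friction")
DEFAULT_THRESHOLD = 90  # similarity threshold (%) that triggers the classifier


class DualModeSketch:
    """Dual-mode control flow, simplified to a synchronous step function.

    The vibration-based anomaly detector always runs. The ultrasound
    classifier runs only while anomalies are reported and is suspended as
    soon as a signal is normal again.
    """

    def __init__(self, classify: Callable[[], str],
                 threshold: int = DEFAULT_THRESHOLD) -> None:
        self.classify = classify  # hypothetical hook running the ultrasound ANN
        self.threshold = threshold
        self.classifier_active = False

    def on_similarity(self, similarity: int) -> Optional[str]:
        """Handle one anomaly-detection result.

        Returns the class label if the classifier ran for this step, else None.
        """
        if similarity < self.threshold:
            self.classifier_active = True
            label = self.classify()
            if label not in FAN_CLASSES:
                raise ValueError(f"unexpected class label: {label}")
            return label
        self.classifier_active = False  # normal signal: suspend the classifier
        return None
```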

8. Button operated modes

To make FP-AI-MONITOR1 usable when no CLI connection is available, the function pack provides a button-operated mode. All the (default) values required to run the different functionalities are provided in the firmware, so in button-operated mode the user can start and stop the different execution phases using only the user button. The following sections describe how the button and LEDs are allocated to control and display the currently running context.

8.1. LED Allocation

The function pack has six execution phases:

- idle: the system waits for user input.

- ai detect: all data coming from the sensors are passed to the X-CUBE-AI library to recognize the activity being performed (HAR).

- neai learn: all data coming from the sensors are passed to the NanoEdge™ AI library to train the model.

- neai detect: all data coming from the sensors are passed to the NanoEdge™ AI library to detect anomalies.

- neai class: all data coming from the sensors are passed to the NanoEdge™ AI library to perform classification.

- dual mode: vibration data are passed to the NanoEdge™ AI library to detect anomalies, and ultrasound data are passed to the X-CUBE-AI library to classify them.

At any given time, the user needs to know which execution phase is active. The outcome of the phase must also be reported: whether an anomaly has been detected when a detection phase is active, or which activity the user is performing when the HAR ("ai") context is running.

The onboard LEDs indicate the status of the current execution phase by showing which context is running and also by showing the output of the context (anomaly or one of the four activities in the HAR case).

The green LED is used to show the user which execution context is being run.

| Pattern | Task |
|---|---|
| OFF | - |
| ON | IDLE |
| BLINK_SHORT | X-CUBE-AI running |
| BLINK_NORMAL | NanoEdge™ AI learn |
| BLINK_LONG | NanoEdge™ AI detection, classification, or dual mode |

The orange LED indicates the output of the running context, as shown in the table below:

| Pattern | Reporting |
|---|---|
| OFF | Stationary (HAR) in X-CUBE-AI mode; normal behavior in NanoEdge™ AI mode |
| ON | Biking (HAR) |
| BLINK_SHORT | Jogging (HAR) |
| BLINK_LONG | Walking (HAR), or anomaly detected (NanoEdge™ AI detection or dual mode) |

From these LED patterns, the user can tell the state of the sensor node even when the CLI is not connected.
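For host-side tooling or documentation, the two tables can be expressed as lookup tables. In this sketch, the phase keys reuse the CLI names (ai, neai_learn, neai_detect, neai_class, dual) plus idle, which is a naming assumption, and the orange LED is assumed OFF for phases the table does not cover (learning and classification).

```python
# Green LED: which execution phase is running (first table above).
GREEN_LED = {
    "idle": "ON",
    "ai": "BLINK_SHORT",
    "neai_learn": "BLINK_NORMAL",
    "neai_detect": "BLINK_LONG",
    "neai_class": "BLINK_LONG",
    "dual": "BLINK_LONG",
}

# Orange LED in X-CUBE-AI (HAR) mode: one pattern per activity (second table).
ORANGE_LED_HAR = {
    "Stationary": "OFF",
    "Biking": "ON",
    "Jogging": "BLINK_SHORT",
    "Walking": "BLINK_LONG",
}


def orange_led(phase: str, outcome: str) -> str:
    """Orange LED pattern for the outcome of a running phase.

    For "ai", outcome is the HAR activity; for NanoEdge AI detection and
    dual mode, outcome is "anomaly" or "normal". Other phases: assumed OFF.
    """
    if phase == "ai":
        return ORANGE_LED_HAR[outcome]
    if phase in ("neai_detect", "dual"):
        return "BLINK_LONG" if outcome == "anomaly" else "OFF"
    return "OFF"
```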

8.2. Button Allocation

In button-operated mode, the user can trigger only four of the available execution phases:

- idle: the system is waiting for a command.

- ai: run the X-CUBE-AI library and print the results of the live inference on the CLI (if CLI is available).

- neai_learn: all data coming from the sensor is passed to the NanoEdge™ AI library to train the model.

- neai_detect: all data coming from the sensor is passed to the NanoEdge™ AI library to detect anomalies.

These phases are triggered using the user button. The STEVAL-STWINKT1B sensor node has two buttons relevant to the software:

- The user button: This button is fully programmable and is under the control of the application developer.

- The reset button: This button is connected to the hardware reset pin and resets the sensor node. A reset clears the knowledge learned by the NanoEdge™ AI libraries and restores all context variables and sensor configurations to their default values.

To control the execution phases, at least three different press types of the user button must be defined and detected.

The following are the types of the press available for the user button and their assignments to perform different operations:

| Button press | Description | Action |
|---|---|---|
| SHORT_PRESS | The button is pressed for less than 200 ms and released | Starts AI inference for the X-CUBE-AI model. |
| LONG_PRESS | The button is pressed for more than 200 ms and released | Starts learning for the NanoEdge™ AI library. |
| DOUBLE_PRESS | A succession of two SHORT_PRESS in less than 500 ms | Starts inference for the NanoEdge™ AI library. |
| ANY_PRESS | The button is pressed and released (overlaps with the three other modes) | Stops the currently running execution phase. |
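Distinguishing the press types requires timing both the press duration and the gap between presses. The following sketch classifies recorded (press, release) timestamps in milliseconds. It assumes that a press of exactly 200 ms counts as long (the table leaves this case open) and that the 500 ms double-press window runs from the start of the first press to the release of the second. Function names are hypothetical, and the firmware's actual implementation may differ, for example by deciding in real time rather than on a recorded list.

```python
from typing import List, Tuple

SHORT_MAX_MS = 200      # SHORT_PRESS / LONG_PRESS boundary (table above)
DOUBLE_WINDOW_MS = 500  # max span of two successive short presses (table above)

# Phase started from idle by each press type (table above).
ACTIONS = {"SHORT_PRESS": "ai", "LONG_PRESS": "neai_learn", "DOUBLE_PRESS": "neai_detect"}


def classify_presses(events: List[Tuple[int, int]]) -> List[str]:
    """Turn time-ordered (press_ms, release_ms) pairs into press types."""
    result = []
    i = 0
    while i < len(events):
        down, up = events[i]
        if up - down >= SHORT_MAX_MS:
            result.append("LONG_PRESS")
            i += 1
            continue
        if i + 1 < len(events):
            down2, up2 = events[i + 1]
            # Two short presses within the window form one DOUBLE_PRESS.
            if up2 - down2 < SHORT_MAX_MS and up2 - down < DOUBLE_WINDOW_MS:
                result.append("DOUBLE_PRESS")
                i += 2
                continue
        result.append("SHORT_PRESS")
        i += 1
    return result


def action_for(press: str, phase: str) -> str:
    """Next phase: any press stops a running phase; from idle, the type selects one."""
    if phase != "idle":
        return "idle"
    return ACTIONS[press]
```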

9. Resources

- DB4494: Data brief for FP-AI-MONITOR1

- User Manual: User Manual for FP-AI-MONITOR1

- STEVAL-STWINKT1B: SensorTile Wireless Industrial Node STEVAL-STWINKT1B development kit and reference design for industrial IoT applications

- STM32CubeMX: STM32Cube initialization code generator

- X-CUBE-AI: AI expansion pack for STM32CubeMX

- NanoEdge™ AI Studio: the first machine learning software specifically developed to run entirely on microcontrollers.

- DB4345: Data brief for STEVAL-STWINKT1B.

- UM2777: How to use the STEVAL-STWINKT1B SensorTile Wireless Industrial Node for condition monitoring and predictive maintenance applications.