NanoEdge AI Studio is free software provided by ST that makes it easy to add AI to any embedded project running on any Arm® Cortex®-M MCU.

It empowers embedded engineers, even those unfamiliar with AI, to almost effortlessly find the optimal AI model for their requirements through straightforward processes.

Operated locally on a PC, the software takes input data and generates a NanoEdge AI library that incorporates the model, its preprocessing, and functions for easy integration into new or existing embedded projects.

The main strength of NanoEdge AI Studio is its benchmark, which will explore thousands of combinations of preprocessing, models, and parameters. This iterative process identifies the most suitable algorithm tailored to the user's needs based on their data.

1. NanoEdge AI Studio v5.1 Release Notes

1.1. Overview

NanoEdge AI Studio v5.1 delivers significant performance improvements to embedded ML solutions and expanded hardware support with dedicated acceleration capabilities. This release includes a minor API breaking change due to architectural improvements in the library structure.

1.2. Performance & Core Improvements

Embedded Code Execution Optimization

- Substantial performance gains in embedded code execution

- Knowledge base now fully integrated within the library, eliminating external dependencies

- Streamlined runtime architecture for reduced overhead

Note: The knowledge integration necessitates API modifications. See Breaking Changes section below.

1.3. New Hardware Support

STM32U3 Target

- Full support for the STM32U3 microcontroller and its dedicated hardware accelerator (HSP); see the dedicated documentation page.

- Generated libraries automatically leverage hardware acceleration capabilities

- Optimized inference performance on accelerated targets

Cortex-R52 Series

- Support added for Cortex-R52 series processors

- Extends NanoEdge AI Studio capabilities to real-time processing applications

1.4. New Features

Customizable Search Space

- Users can now manually select specific models and preprocessing functions

- Ability to constrain or expand search space based on application requirements

Benchmark Queue Management

- New queueing system for benchmark operations

- Schedule multiple benchmark runs for sequential execution

1.5. Breaking Changes

Library API Update

With the knowledge base now fully integrated within the library, the initialization API has been simplified for both classification and anomaly detection workflows.

Classification, Regression & Outlier API Changes

Initialization

    // Old API
    neai_classification_init(knowledge)

    // New API (v5.1)
    neai_classification_init(void)

The knowledge parameter is no longer required. Knowledge is now embedded directly in the library at build time, eliminating the need to manage and pass knowledge data structures.

Anomaly Detection API Changes

Initialization

    // Old API
    neai_anomalydetection_init()
    neai_anomalydetection_knowledge(knowledge)  // Separate call for pretrained models

    // New API (v5.1)
    neai_anomalydetection_init(bool use_pretrained)

The separate neai_anomalydetection_knowledge() function has been removed. Instead, specify at initialization whether to use a pretrained model or perform on-device learning:

- neai_anomalydetection_init(true) - Use embedded pretrained model (no learning phase required)

- neai_anomalydetection_init(false) - Perform on-device learning using neai_anomalydetection_learn()

2. Getting started

2.1. What is NanoEdge AI Studio?

NanoEdge AI Studio is software designed for embedded machine learning. It acts like a search engine, finding the best AI libraries for your project. It needs data from you to figure out the right mix of data processing, model structure, and settings.

Once it finds the best setup for your data, it creates AI libraries. These libraries make it easy to use the data processing and model in your C code.

2.1.1. NanoEdge AI Library

NanoEdge AI Libraries are the output of NanoEdge AI Studio. They are static libraries for embedded C software on Arm® Cortex®-M microcontrollers (MCUs). Packaged as a precompiled .a file, each library provides the building blocks to integrate smart features into C code without requiring expertise in mathematics, machine learning, or data science.

When embedded on microcontrollers, the NanoEdge AI Library enables them to automatically "understand" sensor patterns. Each library contains an AI model with easily implementable functions for tasks like learning signal patterns, detecting anomalies, classifying signals, and extrapolating data.

Each kind of project in NanoEdge has its own kind of AI library, with its own functions, but all libraries share the same characteristics:

- Highly optimized: Designed for MCUs (any Arm® Cortex®-M).

- Memory efficient: Requires only 1-20 Kbytes of RAM/flash memory.

- Fast inference: Executes in 1-20 ms on Cortex®-M4 at 80 MHz.

- Cloud independent: Runs directly within the microcontroller.

- Easy integration: Can be embedded into existing code/hardware.

- Energy efficient: Minimal power consumption.

- Static allocation: Preserves the stack.

- Data privacy: No data transmission or saving.

- User-friendly: No machine learning expertise required for deployment.

All NanoEdge AI Libraries are created using NanoEdge AI Studio.

2.1.2. NanoEdge AI Studio capabilities

NanoEdge AI Studio can:

- Search for optimal AI libraries (preprocessing + model) given user data.

- Simplify the development of machine learning features for embedded developers.

- Minimize the need for extensive machine learning and data science knowledge.

- Utilize minimal input data compared to traditional approaches.

Using project parameters (MCU type, RAM, sensor type) and signal examples, the Studio outputs the most relevant NanoEdge AI Library. This library can be either untrained (it learns after being embedded) or pretrained. The library performs inference directly on the microcontroller, involving:

- Signal preprocessing algorithms (for example, FFT, PCA, normalization).

- Machine learning models (for example, kNN, SVM, neural networks such as MLP, proprietary algorithms).

- Optimal hyperparameter settings.

The process is iterative: import signals, run a benchmark, test the library, adjust data, and repeat to improve results.

2.1.3. NanoEdge AI Studio limitations

NanoEdge AI Studio:

- Requires user-provided input data (sensor signals) for satisfactory results.

- Produces libraries whose performance depends heavily on the quality of the imported data.

- Does not offer ready-to-use C code for final implementation. Users must write, compile, and integrate the code with the AI library.

In summary, NanoEdge AI Studio outputs a static library (.a file) based on user data, which must be linked and compiled with user-written C code for the target microcontroller.

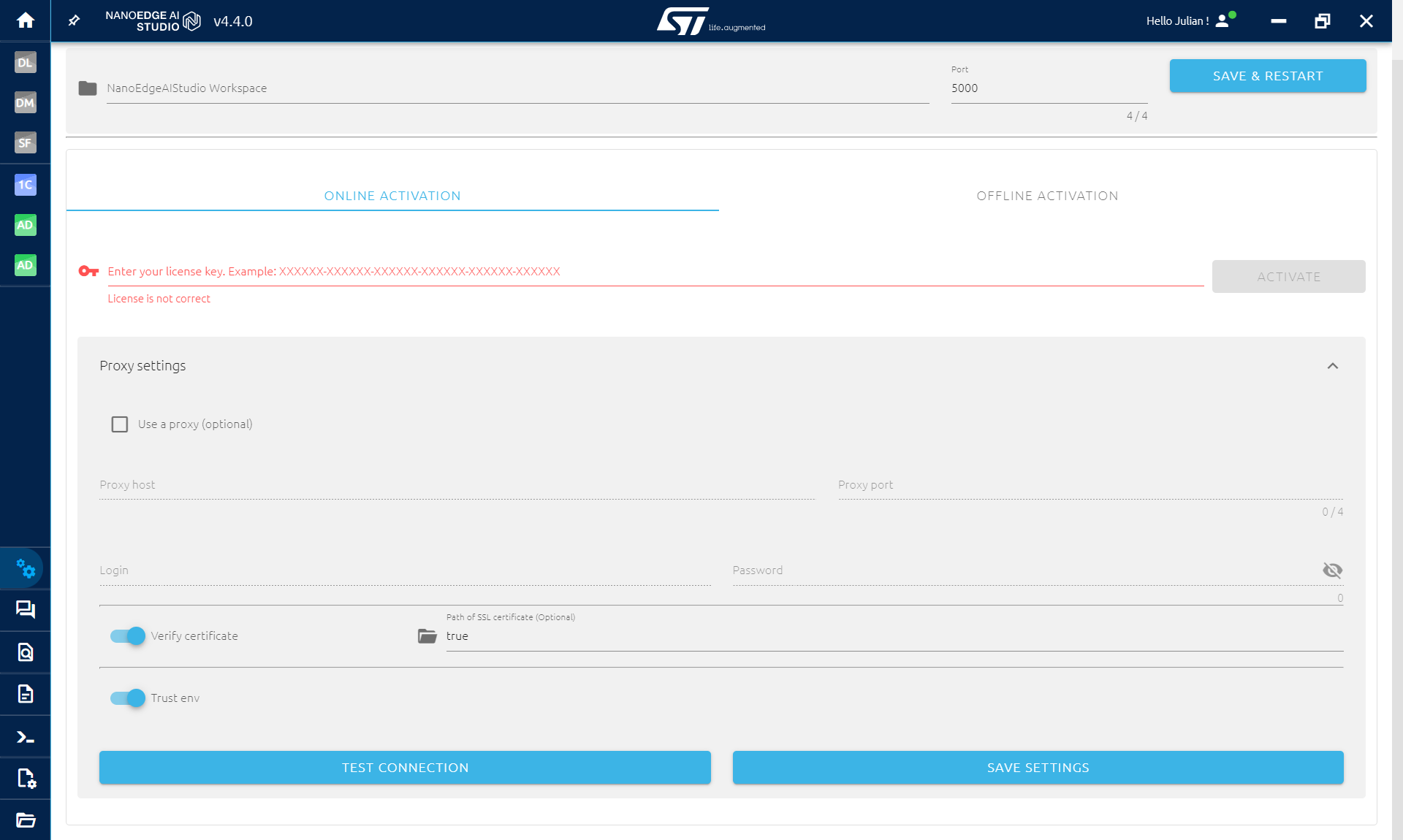

2.2. License activation

To use NanoEdge AI Studio, you need a license key, which is sent in the email you receive after downloading the software at stm32ai.st.com.

Initial setup:

- Enter your license key.

- Configure your proxy settings if needed.

- Save your settings.

IP authorization

You may need to authorize the following IP addresses:

| Service | IP address | URL |
|---|---|---|
| ST API for library compilation | 52.178.13.227 | https://api.nanoedgeaistudio.net |

Port configuration

By default, NanoEdge AI Studio uses port 5000. If this port is unavailable, the Studio will automatically search for an available port. To manually configure the port:

- Press the Windows key + R, type %appdata%, and press Enter.

- Navigate to the nanoedgeaistudio folder and open config.json.

- Locate the line where the port is set, modify it, and save the file.

Project storage location

You can change the location where projects are saved. Avoid selecting cloud-synced or shared folders for project storage.

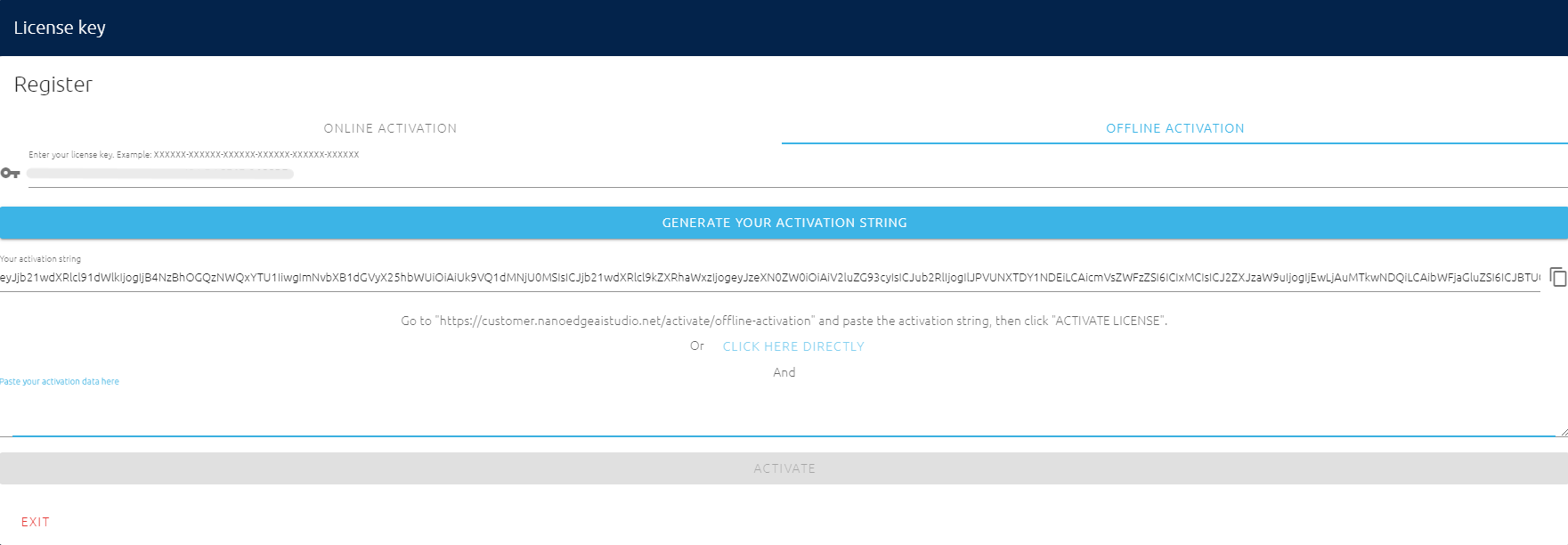

2.2.1. Offline license activation

If you lack an internet connection, you can activate your NanoEdge AI Studio license offline:

- Offline activation: Click on "Offline Activation" and enter your license key.

- Copy the activation string: A long string of characters appears. Copy this string or click the provided link.

- Activate license: Paste the string if needed and click "Activate License".

- Receive response string: You will receive a new string as a response. Copy this string.

- Finalize activation: Return to NanoEdge AI Studio, paste the response string, and click "Activate".

2.3. Type of projects

The four different types of projects that can be created using the Studio, along with their characteristics, outputs, and possible use cases, are outlined below:

Anomaly detection differentiates normal behavior signals from abnormal ones.

- User input: Datasets containing signals for both normal and abnormal situations.

- Studio output: The optimal anomaly detection AI library, including preprocessing and the model identified during benchmarking.

- Library output: The library provides a similarity score (0-100%) indicating the resemblance between the training data and the new signal.

- Retraining: This project type supports model retraining directly on the microcontroller.

- C library functions: Includes initialization, learning, and detection functions. See AI:NanoEdge AI Library for anomaly detection (AD).

- Use case example: For preventive maintenance of multiple machines, collect nominal data and simulate possible anomalies. Use NanoEdge AI Studio to create a library that can distinguish normal and abnormal behaviors. Deploy the same model on all machines and retrain it for each specific machine to enhance specialization for their environments.

2.3.1. Synthetic data

Version 5.0.0 introduced a way to generate abnormal data for anomaly detection projects that use an accelerometer (and only under these conditions). In most anomaly detection projects, the most difficult part is collecting abnormal data. To help users facing this problem, the Studio can generate plausible abnormal data based on the regular data imported by the user.

To use this feature, create an anomaly detection project and select the accelerometer as the sensor. Then import only nominal data and go to the benchmark step. When creating a new benchmark, you will see the option to use synthetic data (see the benchmark part of the documentation for more details).

Classification assigns a signal to one of several predefined classes based on training data.

- User input: A dataset for each class to be detected.

- Studio output: The optimal Classification library, including preprocessing and the model identified during benchmarking.

- Library output: The library produces a probability vector corresponding to the number of classes, indicating the likelihood of the signal belonging to each class. It also directly identifies the class with the highest probability.

- C library functions: Includes initialization and classification functions. See AI:NanoEdge AI Library for n-class classification (nCC).

- Use case example: For machines prone to various errors, use Classification to precisely identify the type of error occurring, rather than just detecting the presence of an issue.
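
To illustrate how the reported class relates to the probability vector, here is a minimal sketch in C. The library itself already identifies the most likely class, so this code is not needed in practice; the helper name `most_likely_class` is hypothetical and the 0-based class index is an assumption made for the example.

```c
#include <stddef.h>

/* Returns the index of the largest value in probs[0..n_classes-1].
 * Ties resolve to the lowest index; returns 0 when n_classes is 0.
 * Hypothetical helper, not part of the NanoEdge AI Library. */
static size_t most_likely_class(const float *probs, size_t n_classes)
{
    size_t best = 0;
    for (size_t i = 1; i < n_classes; i++) {
        if (probs[i] > probs[best]) {
            best = i;
        }
    }
    return best;
}
```

For a three-class probability vector such as {0.10, 0.75, 0.15}, this helper returns index 1.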

Outlier detection distinguishes normal behavior from abnormalities without needing abnormal examples.

- User input: A dataset containing only nominal (normal) examples.

- Studio output: The optimal outlier detection library, including preprocessing and the model identified during benchmarking.

- Library output: The library returns 0 for nominal signals and 1 for outliers.

- C library functions: Includes initialization and detection functions. See AI:NanoEdge AI Library for 1-class classification (1CC).

- Use case example: For predictive maintenance when abnormal data is unavailable, outlier detection can identify outliers. However, for better performance, using anomaly detection is recommended.

Extrapolation predicts numerical values, a task commonly known as regression.

- User input: A dataset containing pairs of target values to predict and corresponding signals. The data format differs from other projects in NanoEdge (see section 2.4.3.4 for details).

- Studio output: The optimal extrapolation library, including preprocessing and the model identified during benchmarking.

- Library output: The library predicts the target value based on the input signal.

- C library functions: Includes initialization and prediction functions. See AI:NanoEdge AI Library for extrapolation (E).

- Use case example: When monitoring a machine with multiple sensors, use extrapolation to predict the value of one sensor using data from other sensors. Create a dataset with signals representing the data evolution from the sensor to be kept and associate it with the values from the sensor to be replaced.

2.4. Data format

NanoEdge AI Studio and its output NanoEdge AI Libraries are compatible with any sensor type: NanoEdge is sensor-agnostic. NanoEdge tries to extract the information in the data, whether it represents vibration, current, sound, or anything else.

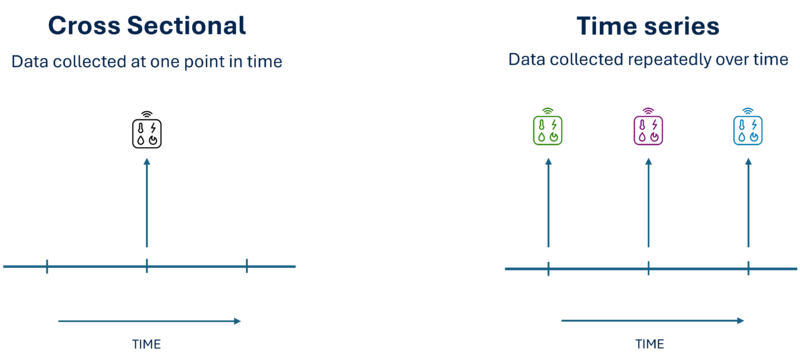

In NanoEdge AI Studio, sensors are divided into two distinct groups based on the format of the data that you use: time series sensors and cross sectional sensors.

2.4.1. Time series versus cross sectional

Time series refers to data that contains information of multiple points in time.

Cross sectional refers to data that contains information at a single point in time.

In an embedded environment, you generally want to work with time series data. Information about a motor at a single point in time is, most of the time, useless. Most of the useful information is contained in the evolution of the data over time, not in a single observation. Conversely, if you want to predict the price of a house based on many of its characteristics (size, number of rooms, etc.), you do not necessarily need the evolution of these parameters, as most of them are fixed.

Here is another example:

Multizone Time-of-Flight sensors can be used as either time series or cross sectional sensors to solve different use cases:

- Time series: You want to recognize a gesture. You use consecutive matrices from the Time-of-Flight sensor to solve the use case.

- Cross sectional: You want to recognize a sign. You do not need temporal information, a single matrix contains the sign.

2.4.2. Defining important concepts

Here are some clarifications regarding the important terms that are used in this document and in NanoEdge:

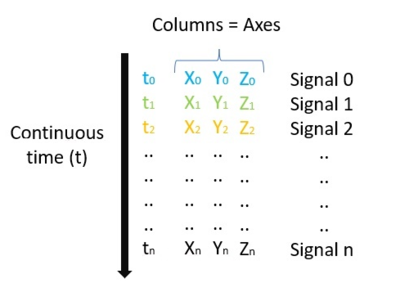

Axis/Axes:

In NanoEdge AI Studio, the number of axes is the total number of variables output by the sensor used for a project. For example, a 3-axis accelerometer outputs a 3-variable sample (x,y,z) corresponding to the instantaneous acceleration measured in the 3 spatial directions.

When using multiple sensors, the number of axes is the total number of axes of all sensors. For example, if using a 3-axis accelerometer and a 3-axis gyroscope, the number of axes is 6.

Signal:

A signal is the continuous representation in time of a physical phenomenon. A sensor samples the signal to collect discrete values (samples). Examples: vibration, current, sound.

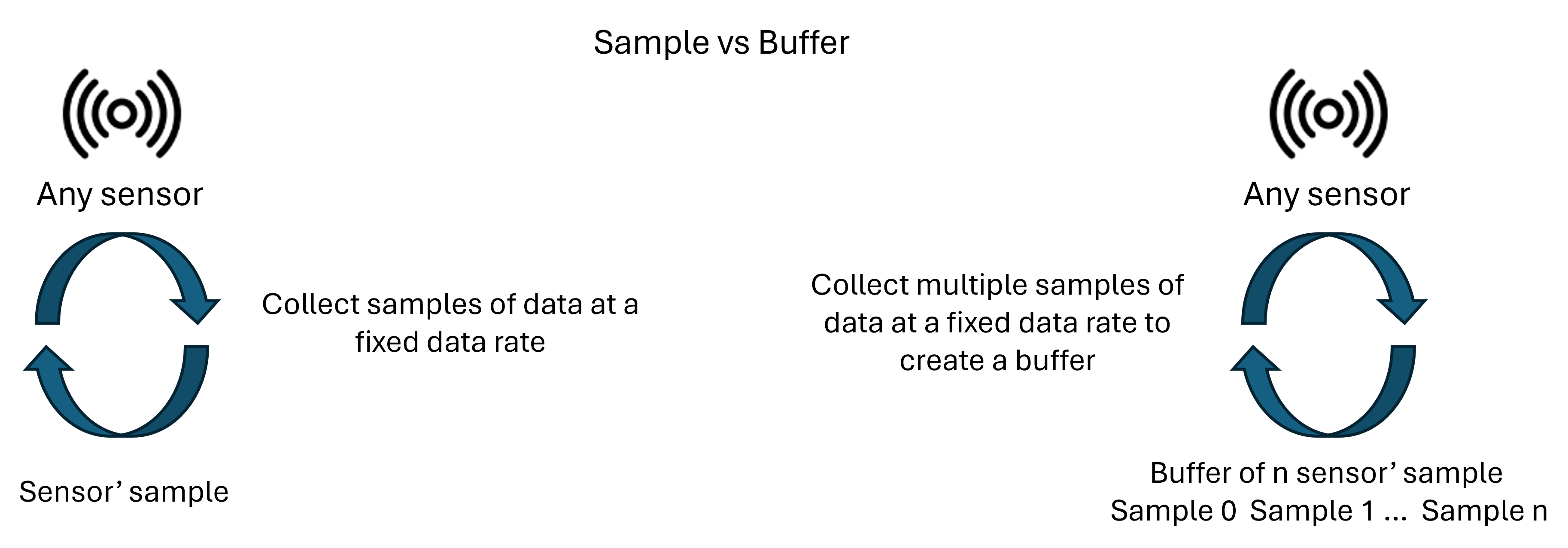

Sample:

This refers to the instantaneous output of a sensor, and contains as many numerical values as the sensor has axes.

For example, a 3-axis accelerometer outputs 3 numerical values per sample, while a current sensor (1-axis) outputs only 1 numerical value per sample.

Data rate:

The data rate is the frequency at which we capture signal values (samples). The data rate must be chosen according to the phenomenon studied.

Also keep the data rate and the number of samples consistent, so that each buffer represents a meaningful time frame.

Buffer:

A buffer is a concatenation of consecutive samples collected by a sensor. A dataset contains multiple buffers. The term "line" is also used when talking about the buffers of a dataset.

Buffers are the input for NanoEdge, whether in the Studio or on the microcontroller.

2.4.3. Time series

When selecting time series in the first step of a project in NanoEdge AI Studio, you have multiple sensors as options:

- Accelerometer: 1, 2, or 3 axes

- Current: 1 axis

- Hall effect: 1, 2, or 3 axes

- Microphone: 1 axis

- Time-of-Flight: 4x4 or 8x8 matrix

- Generic: As many axes as you are using (example: a 1-axis current sensor and a 3-axis accelerometer = 4 axes)

Because time series involves multiple samples in time, the data imported are expected to contain multiple samples of the number of axes selected.

2.4.3.1. Work with temporal buffers

NanoEdge AI Studio expects buffers and not just samples of data.

Buffer size = number of sensor axes * number of samples, with the samples taken at a fixed data rate (Hz)

It is crucial to maintain a consistent sampling frequency and buffer size.

Why are buffers more useful than samples?

In machine learning, you want to work on buffers as they contain information on the evolution of the phenomenon studied. Samples are just a single point in time representing the phenomenon. It is easier to distinguish patterns in buffers than in simple values.

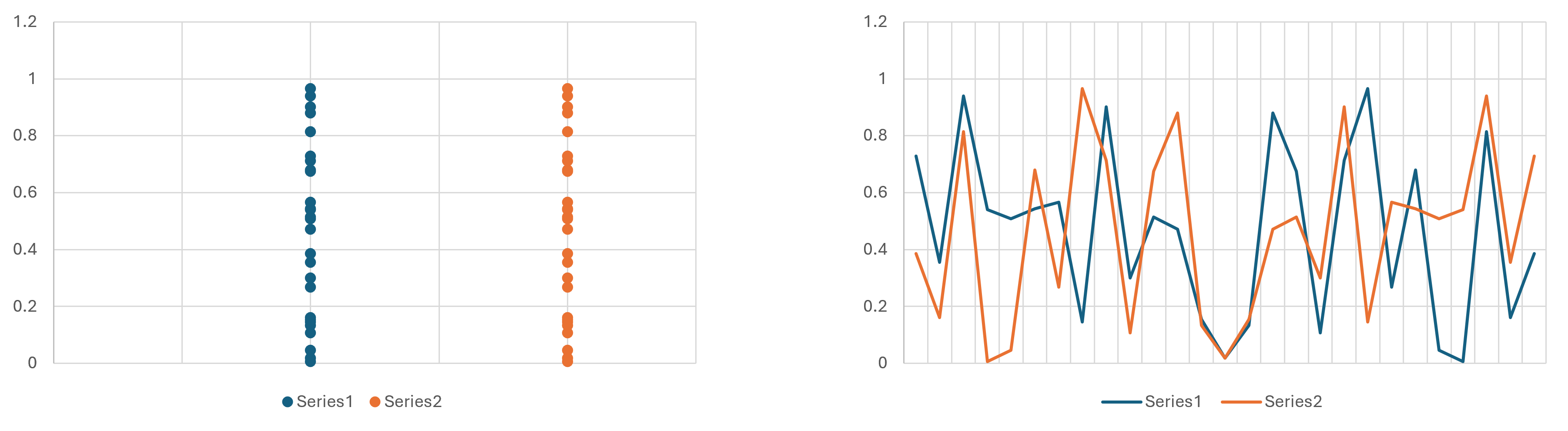

Consider a simple example with two signals that contain the same values: one in temporal order (t0 to tn) and the other reversed (tn to t0). We treat these two signals as two classes, and we want to assign a new signal to one of them (here, by checking which one it is equal to).

- Left plot: Samples independently

- Right plot: Samples as temporal buffers

We collect a new signal that is with certainty from one of the two classes and want to know which one:

- If we look at the samples independently (left approach), it is impossible, because every value appears in both classes. A single sample does not contain enough information to be classified.

- On the other hand, if we use the temporal buffer, we can directly see which one it is equal to.
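
The same idea can be sketched in C. This is only an illustration under simple assumptions (integer samples, at most 64 samples per signal); the helper names `same_values_any_order` and `same_buffer` are hypothetical. A signal and its time-reversed copy contain exactly the same values, so they cannot be told apart sample by sample, but they differ as ordered buffers.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

#define MAX_SAMPLES 64  /* illustrative limit for the local copies */

/* Ascending comparison for qsort. */
static int cmp_int(const void *a, const void *b)
{
    int x = *(const int *)a;
    int y = *(const int *)b;
    return (x > y) - (x < y);
}

/* True when both signals hold the same values, ignoring their order
 * (the "independent samples" view). */
static bool same_values_any_order(const int *a, const int *b, size_t n)
{
    int sa[MAX_SAMPLES];
    int sb[MAX_SAMPLES];
    if (n > MAX_SAMPLES) {
        return false;
    }
    memcpy(sa, a, n * sizeof *a);
    memcpy(sb, b, n * sizeof *b);
    qsort(sa, n, sizeof *sa, cmp_int);
    qsort(sb, n, sizeof *sb, cmp_int);
    return memcmp(sa, sb, n * sizeof *sa) == 0;
}

/* True when both signals match sample by sample, in temporal order
 * (the "temporal buffer" view). */
static bool same_buffer(const int *a, const int *b, size_t n)
{
    return memcmp(a, b, n * sizeof *a) == 0;
}
```

With a rising signal {1, 2, 3, 4} and its reversed copy {4, 3, 2, 1}, the first check cannot separate the two classes, while the second one can.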

2.4.3.2. General rules

The Studio requires:

- Each line in a dataset to represent a single, independent signal example composed of multiple samples.

- The buffer size to be a power of two and remain constant throughout the project.

- The sampling frequency to remain constant throughout the project.

- All signal examples for a specific "class" to be grouped in the same input file (for example, all "nominal" regimes in one file and all "abnormal" regimes in another for anomaly detection).

General considerations for input file format:

- .txt / .csv files

- Numerical values only, no headers

- Uniform separators (single space, tab, comma, or semicolon)

- Decimal values formatted with a period (.)

- Fewer than 16,384 values per line

- Consistent number of numerical values on each line

- Minimum of 20 lines per sensor axis

- Fewer than ~100,000 total lines

- File size less than ~1 Gbit
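
Some of these rules can be checked before importing a file. The following C sketch is an illustration only, not a Studio tool: the helper names are hypothetical, and it assumes that the power-of-two rule applies to the number of samples per axis (consistent with the 256-sample example used in this document). It checks a single text line for numeric-only content, a uniform separator, and the expected number of values.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

#define MAX_VALUES_PER_LINE 16384  /* rule: fewer than 16,384 values per line */

/* Power-of-two check (assumed here to apply to samples per axis). */
static bool is_power_of_two(size_t n)
{
    return n != 0 && (n & (n - 1)) == 0;
}

/* Counts the numeric values on one text line whose fields are separated
 * by the character `sep`. Returns -1 if a field is not a number or if a
 * different separator is found. Note: strtod skips leading whitespace,
 * so this check is lenient about extra spaces before a value. */
static long count_values(const char *line, char sep)
{
    long count = 0;
    const char *p = line;
    for (;;) {
        char *end;
        strtod(p, &end);
        if (end == p) {
            return -1;                 /* no number could be parsed */
        }
        count++;
        if (*end == '\0' || *end == '\n' || *end == '\r') {
            return count;              /* end of line reached */
        }
        if (*end != sep) {
            return -1;                 /* unexpected character */
        }
        p = end + 1;
    }
}

/* Checks one line for a project with `axes` axes and `samples` samples
 * per axis. */
static bool line_is_valid(const char *line, char sep,
                          size_t axes, size_t samples)
{
    long n = count_values(line, sep);
    return n > 0
        && (size_t)n == axes * samples
        && n < MAX_VALUES_PER_LINE
        && is_power_of_two(samples);
}
```

Running such a check on every line of a file also confirms that the number of values per line is consistent, since each line is compared against the same expected count.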

Specific formatting rules:

- Anomaly detection, classification and outlier detection projects share general rules.

- Extrapolation projects require target values for extrapolation.

2.4.3.3. Basic format

Following the rules from the previous part, the format for anomaly detection, classification or outlier detection is the same and is described in the example below:

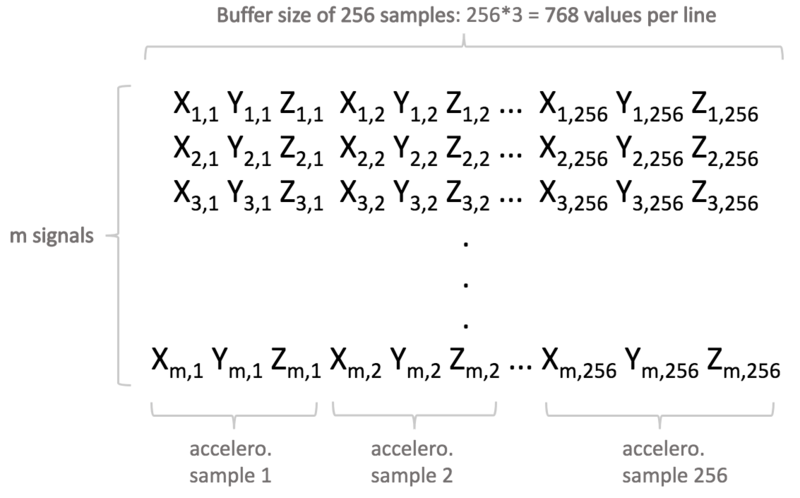

You want to collect 3-axis accelerometer data to monitor a motor. You estimate the highest-frequency component of this vibration to be below 500 Hz, and therefore choose a sampling frequency of 1000 Hz for the sensor. You want signals that represent about 250 ms, so you collect buffers of 256 samples (the closest power of two), which last 256 / 1000 (number of samples / data rate) = 0.256 s, that is, 256 ms.

In this example all the parameters considered are:

- Number of axes: 3 axes

- Number of samples: 256 samples

- Buffer size: 3*256 = 768

- Data rate: 1000 Hz

- Temporal length: 256 ms
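
The arithmetic behind these parameters can be written as a short C sketch. The function names are hypothetical and chosen for illustration; the factor of two between the highest signal frequency and the data rate is the usual Nyquist criterion, which the 500 Hz / 1000 Hz choice in this example follows.

```c
#include <stddef.h>

/* Lowest data rate (Hz) that satisfies the Nyquist criterion for a
 * signal whose highest frequency component is max_signal_freq_hz. */
static double min_data_rate_hz(double max_signal_freq_hz)
{
    return 2.0 * max_signal_freq_hz;
}

/* Number of values in one buffer: axes * samples. */
static size_t buffer_size(size_t axes, size_t samples)
{
    return axes * samples;
}

/* Time span covered by one buffer, in milliseconds. */
static double buffer_duration_ms(size_t samples, double data_rate_hz)
{
    return 1000.0 * (double)samples / data_rate_hz;
}
```

For the motor example, `min_data_rate_hz(500.0)` gives 1000 Hz, `buffer_size(3, 256)` gives 768 values, and `buffer_duration_ms(256, 1000.0)` gives 256 ms.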

All these parameters have a major impact on the results that you get in NanoEdge AI Studio. See section 2.4.5 for guidance on how to select them.

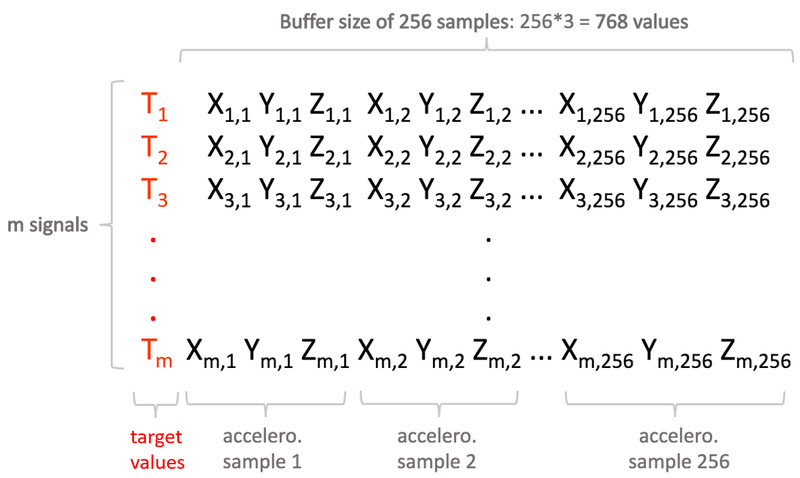

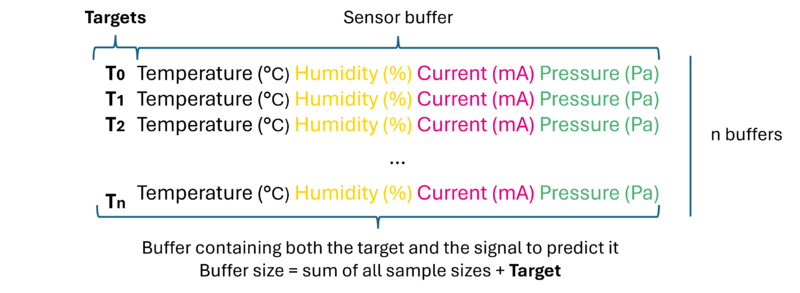

2.4.3.4. Variant format: Extrapolation projects

In extrapolation (or regression) the file format is slightly different.

The goal of extrapolation models is to predict a numerical value, called the target in NanoEdge, based on an input buffer. To train such models, you need a dataset with a large variety of targets and their corresponding buffers.

Once the model is trained, you give it a buffer as input, and it outputs the target value that you are trying to predict.

Let us say that you want to predict the speed of a motor based on its vibration. Here, your target is a speed corresponding to vibration, which is 3-axis accelerometer data. In this case, you collect buffers of accelerometer data and for each buffer, associate the corresponding speed (the speed at the start of the acquisition, the end, or an average depending on your use case):

The format is the same as in other projects, but with one additional column for the target, which must be the first one.

This time, the buffer size in the dataset used by NanoEdge is:

number of axes * number of samples + 1 (the target)
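
As an illustration of this layout, the following C sketch writes one dataset line with the target in the first column, followed by the buffer values. The function name `format_extrapolation_line`, the space separator, and the three-decimal formatting are assumptions made for the example (the space is one of the separators accepted for input files).

```c
#include <stddef.h>
#include <stdio.h>

/* Writes "target v0 v1 ... vN-1" into `out` (capacity `cap` bytes).
 * Returns the number of characters written, excluding the terminating
 * NUL, or -1 if `out` is too small. Hypothetical helper. */
static int format_extrapolation_line(char *out, size_t cap, float target,
                                     const float *buffer, size_t n_values)
{
    /* The target must be the first column of the line. */
    int len = snprintf(out, cap, "%.3f", target);
    if (len < 0 || (size_t)len >= cap) {
        return -1;
    }
    size_t used = (size_t)len;

    /* Then the axes * samples values of the buffer, in order. */
    for (size_t i = 0; i < n_values; i++) {
        len = snprintf(out + used, cap - used, " %.3f", buffer[i]);
        if (len < 0 || (size_t)len >= cap - used) {
            return -1;
        }
        used += (size_t)len;
    }
    return (int)used;
}
```

A line produced this way contains number of axes * number of samples + 1 values, which matches the size given above.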

2.4.4. Cross sectional

When selecting cross sectional in the first step of a project in NanoEdge AI Studio, you have two sensors as options: Time-of-Flight (8x8 or 4x4) or multi-sensor.

Time-of-Flight

If you select Time-of-Flight, each line in the dataset represents an 8x8 or 4x4 matrix. This means that each buffer contains a single sample of either 16 or 64 values.

Multi-sensor:

If you select multi-sensor, you can choose the number of sensors and create buffers with any combination of sensor data. You need to enter the total number of 'dimensions', which is the sum of axes of all your sensors.

In cross sectional projects, there is no temporal link within or between buffers. Each buffer represents the state of the environment at a single point in time. For example, the second buffer might be collected 2 seconds after the first, and the third buffer might be collected 10 minutes after the second. This timing does not affect the project.

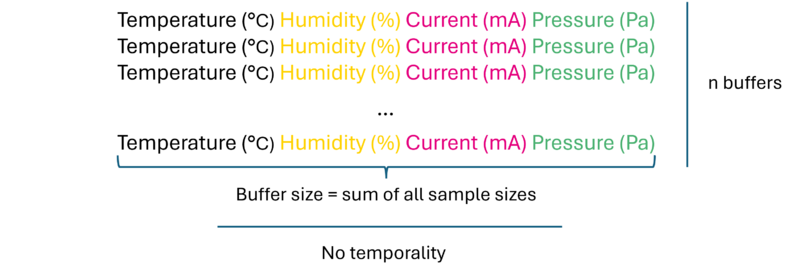

2.4.4.1. Basic format

For anomaly detection, classification, or outlier detection in cross sectional projects, the formats are as follows:

Time-of-Flight:

As described above, each buffer has either size 16 (4x4 matrix) or size 64 (8x8 matrix).

Multi-sensor:

Each buffer can include data from various sensors. For instance, a buffer might contain:

- Temperature

- Humidity

- Current

- Pressure

Each buffer captures the state of the studied environment at a specific point in time and is independent of other buffers. There is no temporal link between them.

If you want to include more data in each buffer, such as additional accelerometer measurements (first, average, max), you can do so along with other sensor data.
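
A cross sectional multi-sensor buffer can be pictured as one flat array filled from a single snapshot of all sensors. The C sketch below is illustrative only: the structure `env_reading`, the function name, and the column order are assumptions. Whatever order is chosen, it has to stay the same for every buffer in the dataset and on the microcontroller.

```c
#include <stddef.h>

#define N_DIMENSIONS 4  /* temperature, humidity, current, pressure */

/* One snapshot of the environment at a single point in time. */
struct env_reading {
    float temperature;
    float humidity;
    float current;
    float pressure;
};

/* Copies one snapshot into a flat buffer of N_DIMENSIONS values.
 * The column order used here is an arbitrary choice for the example. */
static void fill_cross_sectional_buffer(const struct env_reading *r,
                                        float out[N_DIMENSIONS])
{
    out[0] = r->temperature;
    out[1] = r->humidity;
    out[2] = r->current;
    out[3] = r->pressure;
}
```

Here the number of dimensions entered in the Studio would be 4, the sum of the axes of all sensors.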

2.4.4.2. Extrapolation projects

For extrapolation projects, the format is similar to that of time series projects but with a key difference: each buffer contains a single sample associated with a target value. Using the previous example:

2.4.5. Designing a relevant sampling methodology

Compared to traditional machine learning approaches that might need hundreds of thousands of signal examples, NanoEdge AI Studio requires significantly less data—typically around 100-1000 buffers per class, depending on the use case.

However, the data must be qualified, containing relevant information about the physical phenomena to be monitored. Designing a proper sampling methodology is crucial to ensure that all desired characteristics of the physical phenomena are accurately captured and translated into meaningful data.

Key considerations:

- Study the right physical phenomenon: Ensure that the data captures the correct physical events.

- Define the environment: Match the data collection environment to the real deployment environment.

- Work with buffers, not samples: Buffers provide more context and patterns than individual samples.

- Work with raw data: Use unprocessed data for better accuracy.

- Choose a relevant sampling rate and buffer size: Ensure these settings capture the necessary details.

- Use a temporal window that makes sense: Select a time frame that covers the relevant events.

- Start small and iterate: Begin with a small dataset and refine as needed.

These aspects are essential and greatly impact the results.

For more details, refer to the AI:Datalogging guidelines for a successful NanoEdge AI project.

3. Using NanoEdge AI Studio

3.1. Studio home screen

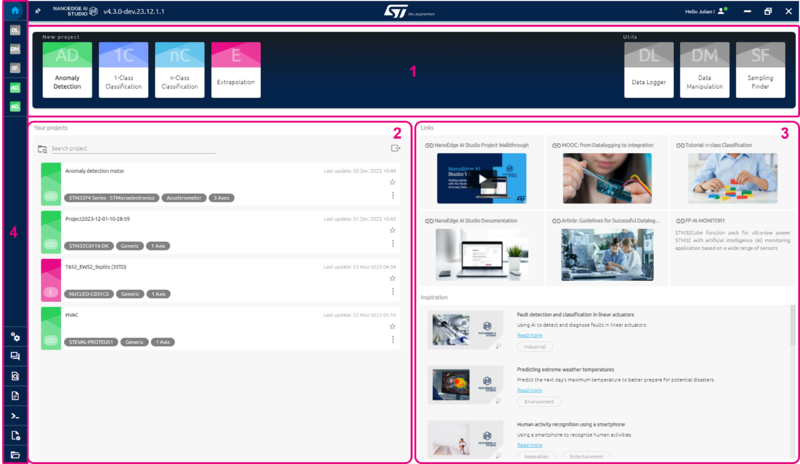

The Studio main (home) screen has four main elements:

Project creation bar (top):

Create new projects

Access Tools:

- Data Logger Generator

- Data Manipulation

- Sampling Finder

- Feature Importance

Existing projects list (left side):

Load, import/export, or search existing NanoEdge AI projects.

Useful links and inspiration (right side):

Access resources like MOOC, documentation, and links to the use case explorer data portal for downloadable datasets and performance summaries.

Toolbar (left extremity) provides quick access to:

- Datalogger

- Data manipulation

- Sampling finder

- Studio settings (port, workspace folder path, license information, and proxy settings)

- NanoEdge AI documentation

- NanoEdge AI license agreement

- CLI (command-line interface client) download

- Studio log files (for troubleshooting)

- Studio workspace folder

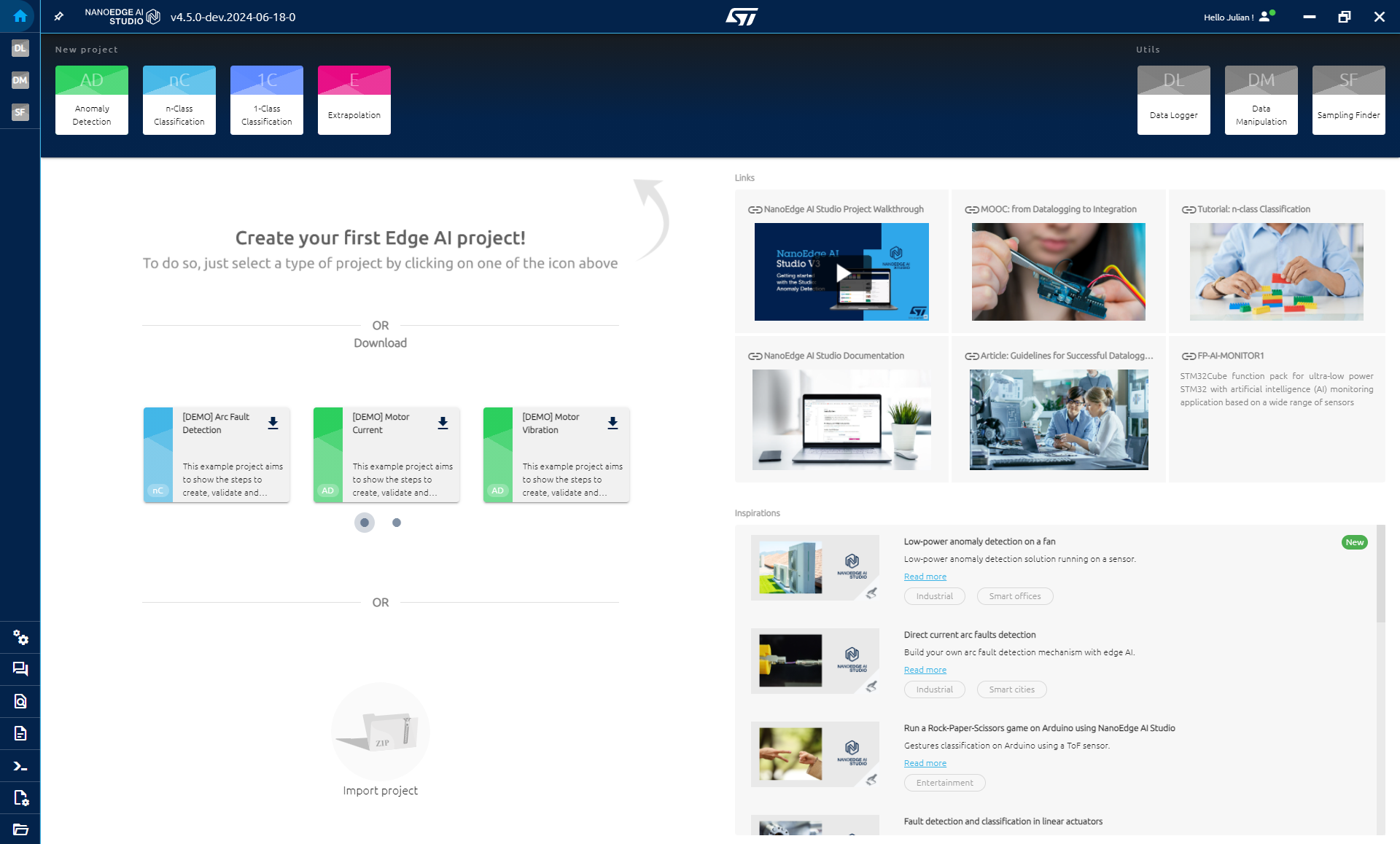

3.1.1. Project creation

To get started with NanoEdge AI Studio, you need to either create a project or download one of our example projects.

Create project

On the Home page, click NEW PROJECT, then select one of the four kinds of projects available:

- Anomaly Detection

- Classification

- Outlier Detection

- Extrapolation

Then click CREATE.

Demo project

Five demo projects are available in NanoEdge AI Studio:

- Arc fault classification

- Rock paper scissors classification

- Motor vibration anomaly detection

- Motor current anomaly detection

- Pump vibration anomaly detection

All projects contain a description about the use case and the collected data.

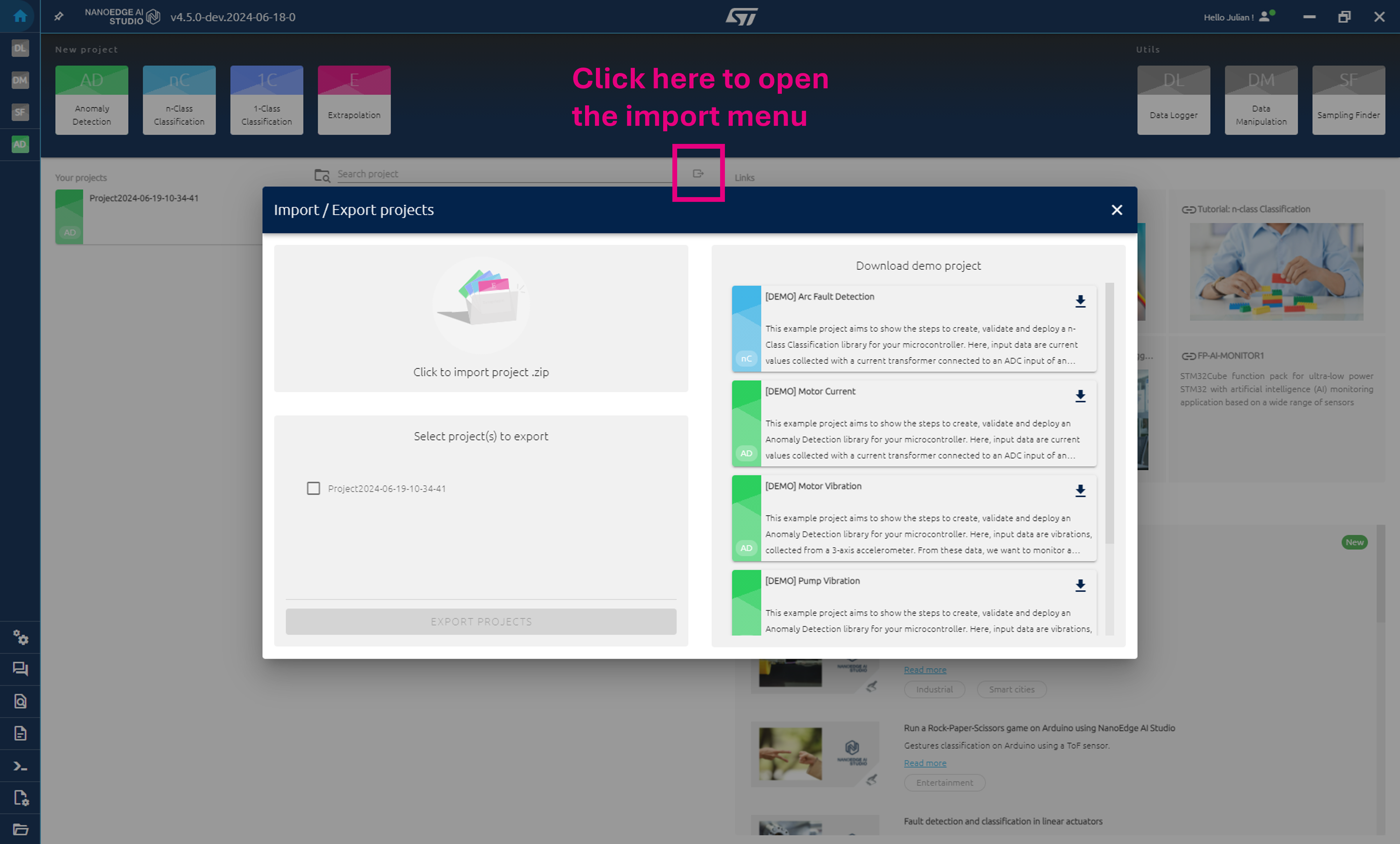

If you do not have a project yet, you can download these example projects directly from the Home page (see previous screenshot). Otherwise, open the import menu:

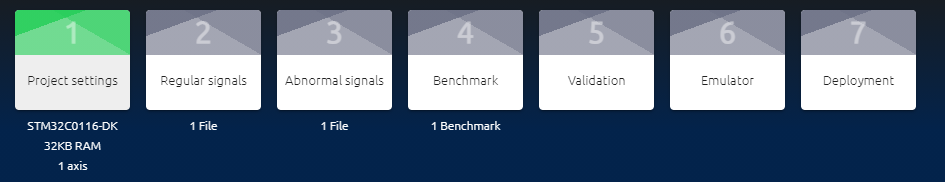

3.1.2. Project steps

Each project is divided into five successive steps:

- Project settings, to set the global project parameters.

- Signals, to import signal examples that are used for library selection.

Note: this step is divided in two substeps in anomaly detection projects (regular signals / abnormal signals).

- Optimize & benchmark, where the best NanoEdge AI Library is automatically selected and optimized.

- Validation, to validate the selected library, or compare several libraries, by testing them with files or serial data.

- Deployment, to compile and download the best library and its associated header files, ready to be linked to any C code.

A helper tool providing tips is available in the bottom right corner of the screen when in a project. We highly recommend you complete the tasks given and read the documentation highlighted by it!

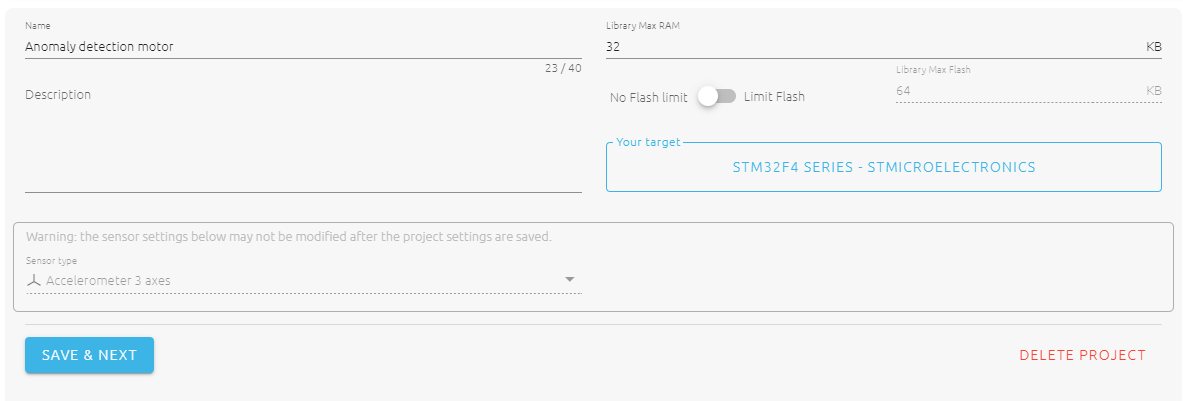

3.2. Project settings

The first step in any project is project settings.

Here, the following parameters are set:

- Project name

- Description (optional)

- Max RAM: this is the maximum amount of RAM memory to be allocated to the AI library. It does not consider the space taken by the sensor buffers.

- Limit flash / no flash limit: this is the maximum amount of flash memory to be allocated to the AI library.

- Sensor type: the type of sensor used to gather data in the project, the type of data and their dimension (number of axes).

- Target: this is the type of board or microcontroller on which the final NanoEdge AI Library is deployed.
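
Because Max RAM does not include the sensor buffers, it can help to budget for them separately. Here is a minimal sketch in C, assuming that samples are stored as `float` values (an assumption; the storage type is not specified here) and using a hypothetical helper name:

```c
#include <stddef.h>

/* RAM needed to hold one input buffer, assuming float samples.
 * Hypothetical helper for budgeting; not part of the library. */
static size_t sensor_buffer_bytes(size_t axes, size_t samples)
{
    return axes * samples * sizeof(float);
}
```

For a 3-axis accelerometer with 256 samples per buffer, this gives 768 values, or 3072 bytes on platforms where `float` is 4 bytes, to be added on top of the Max RAM setting.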

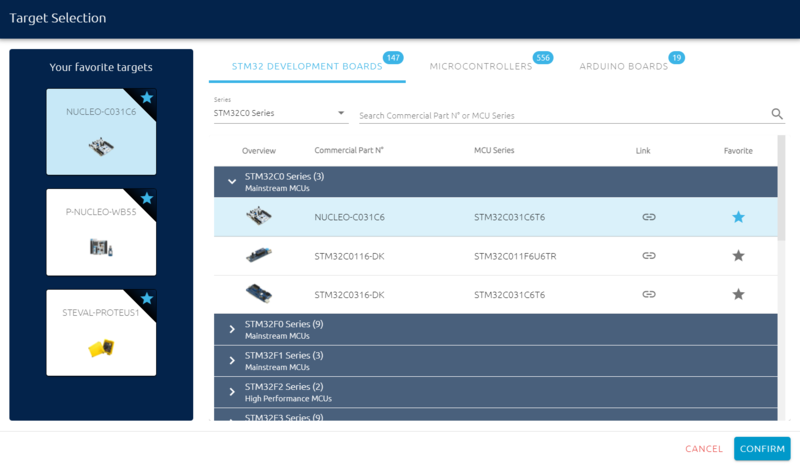

3.2.1. Target selection

NanoEdge AI Studio allows compilation on a large variety of targets. These targets are separated in three tabs:

Development boards: 140+ targets

Great for education or proofs of concept, as development boards generally embed sensors.

Microcontrollers: 550+ targets

- Any STM32 Arm® Cortex®-M MCU: All STM32 families with an Arm® Cortex®-M core can be selected as target.

- A large variety of non-ST Arm® Cortex®-M MCUs for development purposes only.

Arduino boards: 19 targets

Arduino boards with Arm® Cortex®-M MCUs for development purposes only.

To use a non-ST target for production purposes, contact ST.

You can mark a board as a favorite by clicking on the star. The board is then always displayed on the left.

3.2.2. Types of sensors in NanoEdge AI Studio

NanoEdge AI Studio and its output NanoEdge AI Libraries are compatible with any sensor type: NanoEdge is sensor-agnostic. NanoEdge tries to extract the information in the data, whether it represents vibration, current, sound, or anything else. As explained in the data format section, sensors are divided into two distinct groups based on the format of the data:

Time series refers to data that contains information of multiple points in time. The available sensors are:

- Accelerometer (possibility to generate synthetic data for anomaly detection project)

- Current

- Hall effect

- Microphone

- Time-of-Flight

- Generic

Cross sectional refers to data that contains information at a single point in time. The available sensors are:

- Time-of-Flight

- Multi-sensor

For more information on time series or cross sectional, check the part of the documentation about data format.

3.2.2.1. Generic and multi-sensor

Generic and multi-sensor are useful if you want to use data from multiple sensors. Generic is for the time series case and multi-sensor is for the cross sectional case.

Generic (time series):

The generic sensor is useful if you want to work with data representing the evolution in time of a sensor that is not listed in NanoEdge, or of a combination of multiple sensors.

If you work with a 3-axis accelerometer and a current sensor, for example, you can tell NanoEdge to use the data as a "4-axis generic sensor", made of the 3 axes from the accelerometer and the single axis from the current sensor. You need to set the same data rate on both sensors so that every sample in your temporal buffers contains four values.
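
As a sketch of how such a 4-axis buffer could be assembled on the microcontroller, the C function below merges accelerometer and current readings taken at the same data rate. The function name and the order of the values within a sample (x, y, z, current) are assumptions for illustration; the order only needs to be identical in the training data and in the embedded code.

```c
#include <stddef.h>

#define ACCEL_AXES   3
#define GENERIC_AXES 4  /* 3 accelerometer axes + 1 current axis */

/* accel:   n_samples * 3 values, stored sample by sample (x, y, z).
 * current: n_samples values, one per sample.
 * out:     n_samples * 4 values, stored sample by sample
 *          (x, y, z, current). */
static void build_generic_buffer(const float *accel, const float *current,
                                 size_t n_samples, float *out)
{
    for (size_t i = 0; i < n_samples; i++) {
        for (size_t a = 0; a < ACCEL_AXES; a++) {
            out[i * GENERIC_AXES + a] = accel[i * ACCEL_AXES + a];
        }
        out[i * GENERIC_AXES + ACCEL_AXES] = current[i];
    }
}
```

Each group of four consecutive values in `out` is one sample of the generic sensor, so the buffer keeps the "concatenation of consecutive samples" structure described in the data format section.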

Multi-sensor (cross sectional):

Multi-sensor is the equivalent of generic for cross sectional data. At a single point in time, you want to get the values from all the sensors. You are not interested in the evolution of the values of each sensor.

Let us say that you have a lot of sensors in a car and you want to predict the temperature of the motor. You found that the evolution of the parameters is irrelevant to you. Multi-sensor is then the way to go: at a given point in time, you collect the data from all the sensors and predict the temperature.

The house price example also fits this case if you consider each characteristic of the house as a different sensor value.

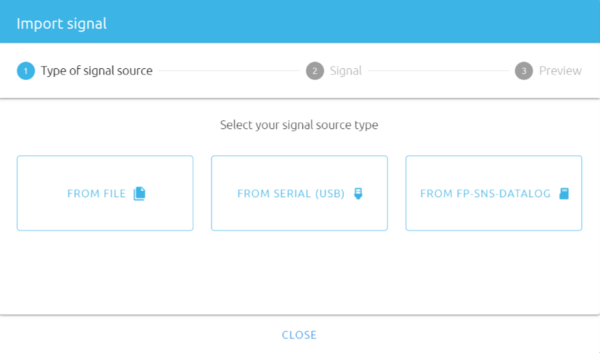

3.3. Signals

3.3.1. How to import signals

There are two ways to import datasets in NanoEdge AI Studio:

- From a file (in .txt / .csv format)

- From the serial port (USB) of a live datalogger

1. From a file:

- Click IMPORT SIGNAL FROM FILE, and select the input file to import.

- Or drag and drop any file directly.

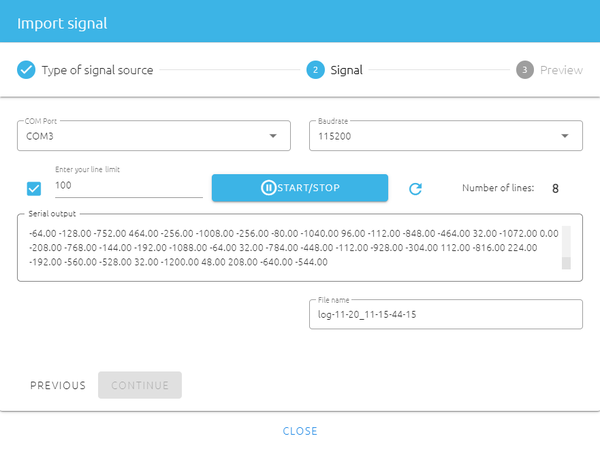

2. From the serial port:

- Click LOG SIGNAL FROM SERIAL USB.

- Select the COM port where your datalogger is connected and the correct baud rate.

- If needed, tick the checkbox and enter a maximum number of lines to be imported.

- Click START/STOP to record the desired number of signal examples from your datalogger.

- Click IMPORT.

3.3.2. Which signals to import

1. Anomaly detection:

In anomaly detection, you need to collect two types of data: regular (nominal) data and abnormal data:

- Regular data: Represents the normal states of a machine (for example, different operating regimes of a normally functioning motor).

- Abnormal data: Represents the abnormal states of a machine (for example, motor misalignment, pump clogging).

- Synthetic data: If you are not able to log abnormal data, you can skip importing it and run a benchmark with regular data and generated abnormal data (only if an accelerometer is selected as the sensor in the project settings).

When logging data, ensure that the setup closely matches the real deployment environment. Collect a wide variety of data. If the library performs poorly under specific conditions, log new data, add it to the training set, and rerun the benchmark. This is an iterative process.

Example:

To detect anomalies in a 3-speed fan using an accelerometer to monitor vibration patterns, I recorded signals for various behaviors:

Nominal examples:

- 30 for "speed 1"

- 25 for "speed 2"

- 35 for "speed 3"

- 30 for "fan turned off"

Some signals include "transients" like fan speeding up or slowing down.

Abnormal examples:

- 30 for "fan airflow obstructed at speed 1"

- 35 for "fan orientation tilted by 90 degrees"

- 25 for "tapping on the fan with my finger"

- 25 for "touching the rotating fan with my finger"

2. Classification:

For classification, since version 5.0.0, it is possible to import multiple files for the same class, whereas previously one file corresponded to one class. You can create as many classes as you want and import as many files per class as you want.

To run a benchmark, manually select the classes or specific files you want to use.

Example: For the identification of types of failures on a motor, five classes can be considered, each corresponding to a behavior, such as:

- Normal behavior

- Misalignment

- Imbalance

- Bearing failure

- Excessive vibration

This results in the creation of five distinct classes (import one .txt / .csv file for each), each containing a minimum of 20-50 signal examples of said behavior.

3. Outlier detection:

For outlier detection, all the imported data must be regular (nominal) data representing all the situations considered normal. The benchmark then uses these data to try to define what normality is and where its limits lie.

4. Extrapolation:

For extrapolation, all signal examples must be gathered into the same input file.

This file contains all target values to be used for learning, along with their associated buffers of data (representing the known parameters).

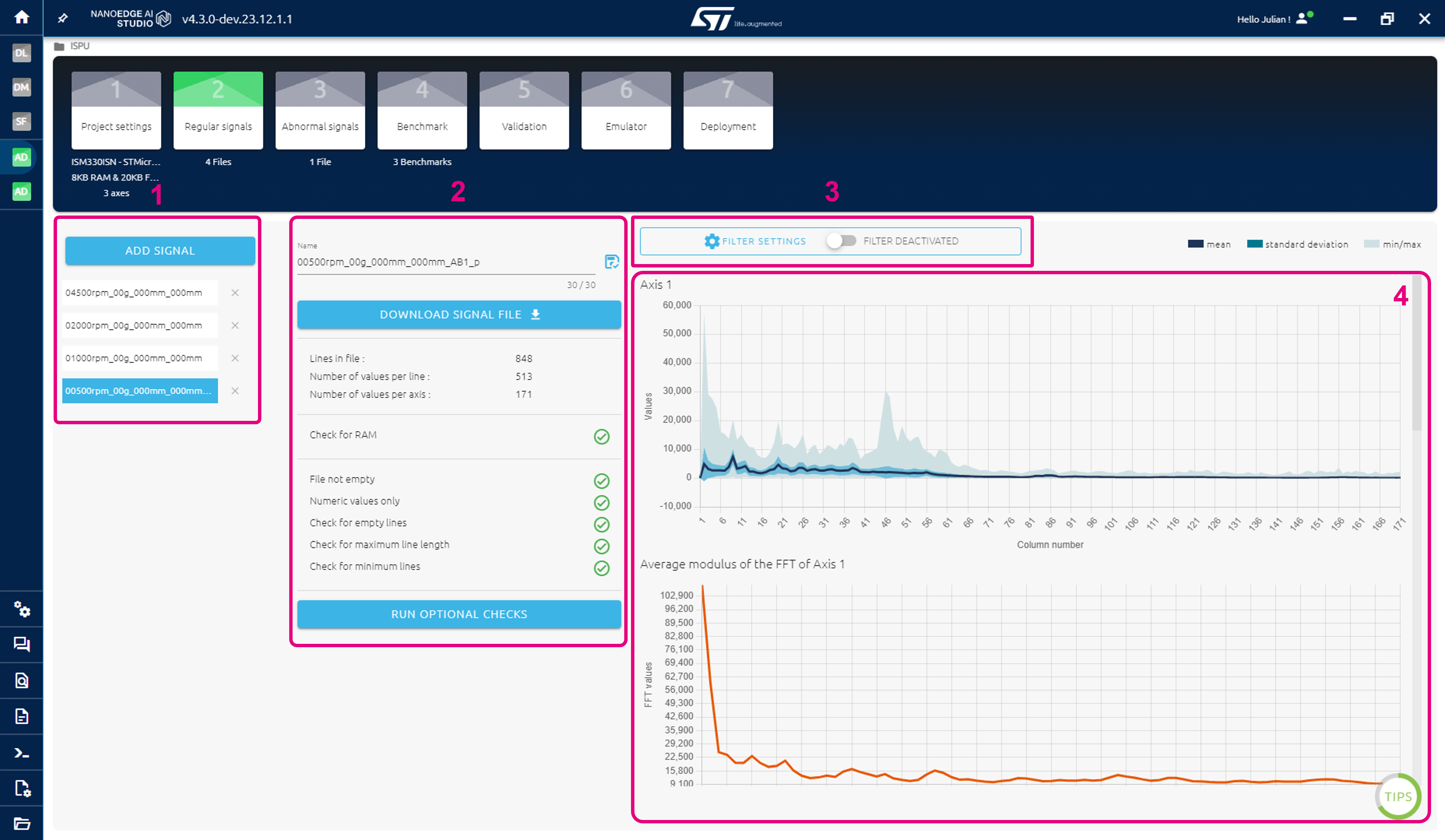

3.3.3. Signal summary screen

The signals step contains a summary of all information related to the imported data:

- List of classes and imported datasets

- A variety of plots for all the imported datasets.

For anomaly detection, outlier detection and extrapolation, the groups, or classes, are predetermined and correspond to the data needed to train models:

- Regular and Abnormal signals for anomaly detection

- Regular signals only for outlier detection

- Signals for extrapolation

On the other hand, for classification, you can create as many classes as you like and rename them to keep your project organized.

This step also lets you visualize your data, thanks to a few features of the displayed plots:

- Drag and drop any plot to organize the page as you would like

- Choose the plots to display or not and their organization

- Add new plots

To add a new plot, click the plus icon at the middle of the right edge of the NanoEdge AI Studio window. A new window opens with all the kinds of plots that NanoEdge lets you create. We plan to add more kinds of plots in future updates to help you better analyze your dataset.

You can find another clickable icon next to the previous one to organize the way plots are displayed. You can select which kind of plot to display and how to organize them.

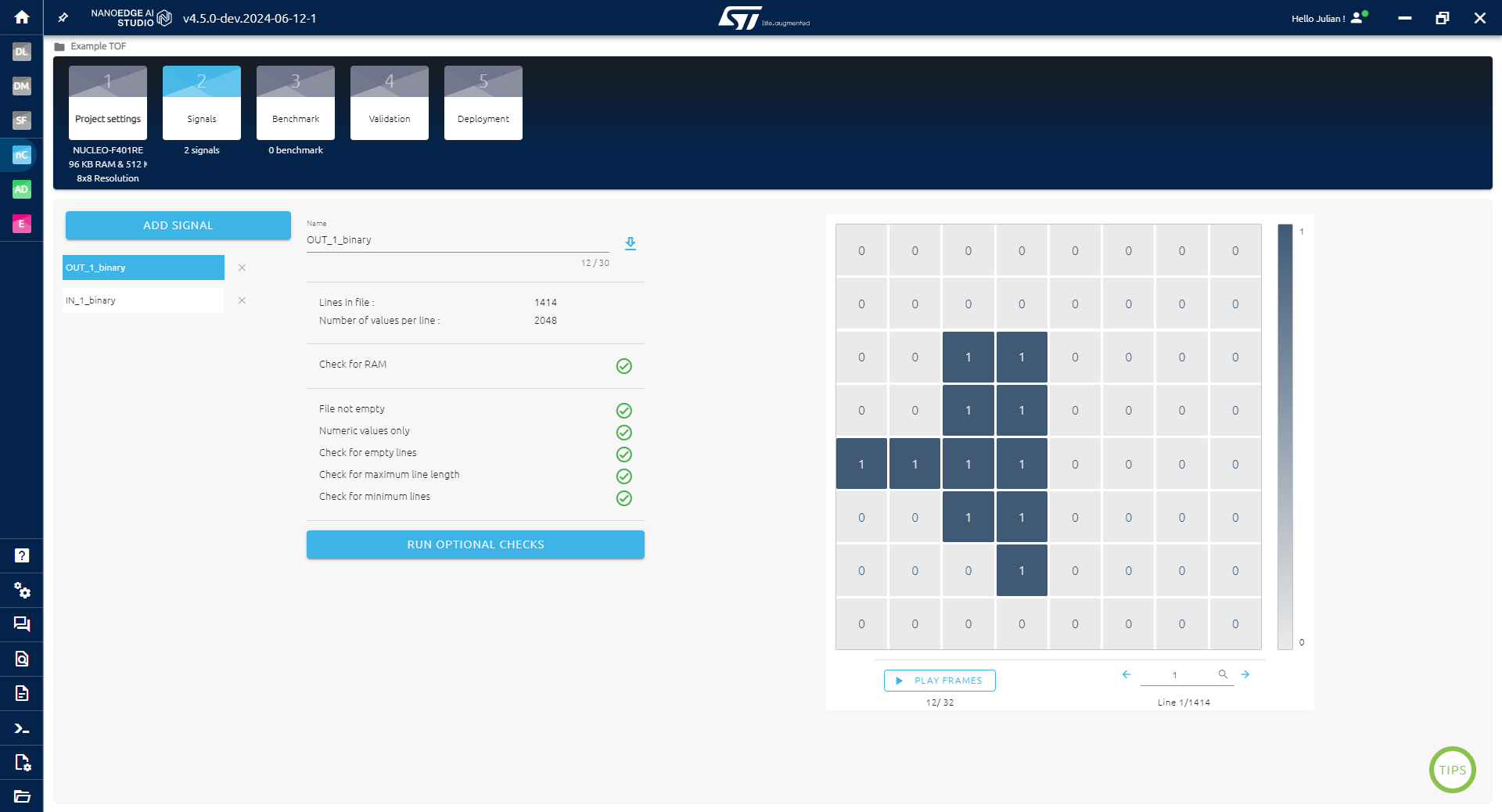

3.3.3.1. Signal step for Time-of-Flight

If Time-of-Flight was selected as the sensor for the project, the signal step display changes. Instead of plotting a signal and FFT for each axis, it displays the Time-of-Flight result matrix.

It is possible to display the matrix corresponding to any line (buffer) in the dataset.

Additionally, if the Time-of-Flight sensor was selected as time series (not cross sectional) in the project settings, it is possible to play an animation displaying the consecutive matrices collected.

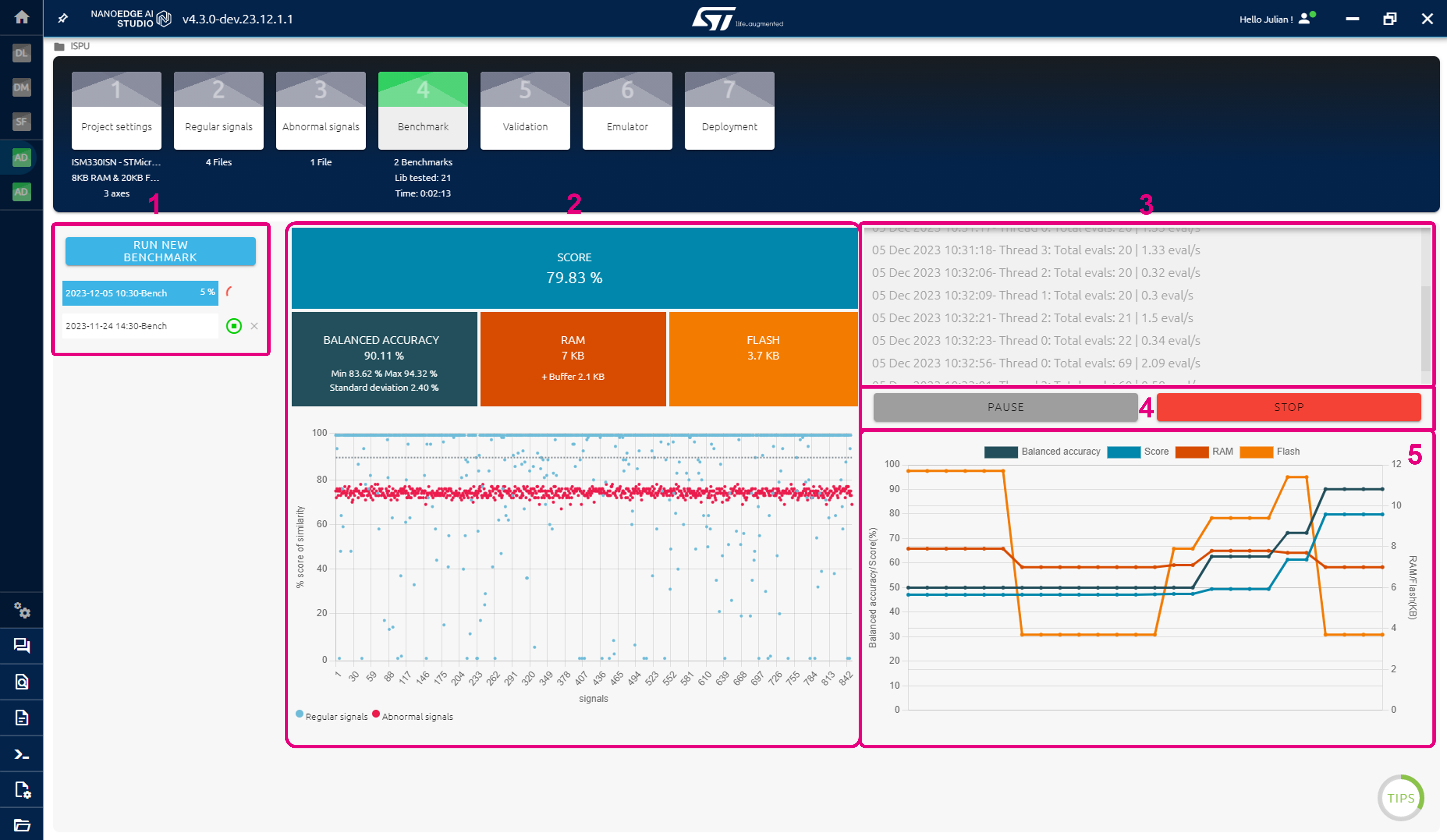

3.4. Benchmark

The benchmark is the heart of NanoEdge AI Studio. It iteratively looks for the best combination of data preprocessing, model architecture, and model parameters to output the best possible library.

Run a benchmark:

On the left side, you will find a New benchmark button to launch a new search, and the list of all previously run benchmarks. While a benchmark is running, you can pause and resume it as you like.

It is also possible to run benchmarks one after another. They are placed in a queue and launched automatically when the previous one finishes.

To run a new benchmark, click the New benchmark button and select the classes or datasets to import:

If doing anomaly detection and using an accelerometer (only in this specific case), it is possible to run a benchmark without importing abnormal data. The Studio can generate abnormal vibration data based on the imported regular data to help you create a library.

To do so, do not import abnormal data and click New benchmark. An option to use synthetic data appears:

3.4.1. Benchmarking process

Each candidate library is composed of a signal preprocessing algorithm, a machine learning model, and some hyperparameters. Each of these three elements can come in many different forms, and use different methods or mathematical tools, depending on the use case. This results in a very large number of possible libraries (many hundreds of thousands), which need to be tested, to find the most relevant one (the one that gives the best results) given the signal examples provided by the user.

In a nutshell, the Studio automatically:

- Divides all the imported signals into random subsets (same data, cut in different ways),

- Uses these smaller random datasets to train, cross-validate, and test one single candidate library many times,

- Takes the worst results obtained in the previous step to rank this candidate library, then moves on to the next one,

- Repeats the whole process until convergence (when no better candidate library can be found).

Therefore, at any point during benchmark, only the performance of the best candidate library found so far is displayed (and for a given library, the score shown is the worst result obtained on the randomized input data).
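The ranking rule described above (keep the worst result over several random splits) can be sketched as follows. This is a simplified illustration, not the Studio's actual implementation: the number of splits, the test ratio, the plain accuracy metric, and the toy nearest-mean candidate are all assumptions made for the example.

```python
import random


def worst_case_score(signals, labels, train_fn, n_splits=5, test_ratio=0.3, seed=0):
    """Score one candidate by its worst accuracy over several random splits."""
    rng = random.Random(seed)
    indices = list(range(len(signals)))
    n_test = max(1, int(len(indices) * test_ratio))
    scores = []
    for _ in range(n_splits):
        rng.shuffle(indices)  # same data, cut in a different way each time
        test_idx, train_idx = indices[:n_test], indices[n_test:]
        predict = train_fn([signals[i] for i in train_idx],
                           [labels[i] for i in train_idx])
        correct = sum(predict(signals[i]) == labels[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return min(scores)  # pessimistic ranking: keep the worst result


def train_nearest_mean(train_signals, train_labels):
    """Toy candidate: assign a signal to the class whose mean level is closest."""
    class_means = {}
    for label in set(train_labels):
        levels = [sum(s) / len(s)
                  for s, l in zip(train_signals, train_labels) if l == label]
        class_means[label] = sum(levels) / len(levels)

    def predict(signal):
        level = sum(signal) / len(signal)
        return min(class_means, key=lambda label: abs(class_means[label] - level))

    return predict


# Two well-separated groups of signals, used only to exercise the sketch.
signals = [[0.0, 0.1, 0.0, 0.1]] * 10 + [[1.0, 1.1, 1.0, 1.1]] * 10
labels = ["nominal"] * 10 + ["anomaly"] * 10
print(worst_case_score(signals, labels, train_nearest_mean))
```

Ranking on the minimum rather than the mean penalizes candidates that only perform well on some cuts of the data, which is why the score displayed for a library can look pessimistic.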

3.4.2. Performance indicators

During benchmark, all libraries are ranked based on one primary performance indicator called "score", which is itself based on several secondary indicators. Which secondary indicators are used depends on the type of project created. Below is the list of secondary indicators involved in the calculation of the "score" (more information about these indicators is available in the dedicated documentation).

Anomaly detection:

- Balanced accuracy (BA)

- Functional margin

- RAM & flash memory requirements

Classification:

- Balanced accuracy (BA)

- Accuracy

- F1-score

- Matthews correlation coefficient (MCC)

- A custom measurement that estimates the degree of certainty of a classification inference

- RAM & flash memory requirements

Outlier detection:

- Recall

- A custom measurement that considers the radius of the hypersphere containing nominal signals, and the recall obtained on the training dataset

- RAM & flash memory requirements

Extrapolation:

- R² (R-squared)

- SMAPE (Symmetric mean absolute percentage error)

- RAM & flash memory requirements

The main secondary indicators are "balanced accuracy" for anomaly detection and Classification, "recall" for outlier detection, and "R²" for extrapolation. Like the score, these metrics are being constantly optimized during benchmark and are displayed for information.

- Balanced accuracy (anomaly detection, classification) is the library's ability to correctly attribute each input signal to the correct class. It is the percentage of correctly identified signals computed for each class separately, then averaged over all classes, so that a class with few signals weighs as much as a class with many.

- Recall (outlier detection) quantifies the number of correct positive predictions made, out of all possible positive predictions.

- R² (extrapolation) is the coefficient of determination, which provides a measurement of how well the observed outcomes are replicated by the model, based on the proportion of total variation of outcomes explained by the model.
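The three main indicators above follow standard definitions, which the short sketch below spells out in plain Python. It is given for illustration only; the function names are hypothetical and the Studio may compute these metrics differently (for example on several cross-validation sets).

```python
def recall(y_true, y_pred, positive):
    """Share of the signals that really are `positive` that were predicted as such."""
    predictions_for_positive = [p for t, p in zip(y_true, y_pred) if t == positive]
    if not predictions_for_positive:
        raise ValueError(f"no signal of class {positive!r} in y_true")
    hits = sum(p == positive for p in predictions_for_positive)
    return hits / len(predictions_for_positive)


def balanced_accuracy(y_true, y_pred):
    """Mean of the per-class recalls, so every class has the same weight."""
    classes = sorted(set(y_true))
    return sum(recall(y_true, y_pred, c) for c in classes) / len(classes)


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - residual variation / total variation.

    Raises ZeroDivisionError if all true values are identical.
    """
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# A library that always answers "nominal" on an imbalanced test set:
# 4 out of 5 signals are correct, yet the anomaly class is never detected.
y_true = ["nominal"] * 4 + ["anomaly"]
y_pred = ["nominal"] * 5
print(balanced_accuracy(y_true, y_pred))
print(r_squared([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))
```

The example shows why balanced accuracy is preferred over plain accuracy when classes are imbalanced: missing every anomaly is clearly visible in the result.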

Additional metrics related to memory footprints:

- RAM (all projects) is the maximum amount of RAM memory used by the library when it is integrated into the target microcontroller.

- Flash (all projects) is the maximum amount of flash memory used by the library when it is integrated into the target microcontroller.

3.4.3. Benchmark plots

In version 5.0.0, the benchmark was overhauled to display much more information about the running benchmark through a variety of plots. These plots are freely movable widgets. You can also delete them or add new ones using the plus icon at the middle of the right edge of the screen.

- Benchmark Progression: Displays the number of tested libraries in real time, the time elapsed since launch, and the CPU usage.

- Best Library Performance: Displays a set of metrics for the best library found in the current benchmark, such as the balanced accuracy, the RAM and flash memory footprints, and a Quality index that combines all the previous ones.

- Benchmark Results Evolution: Displays in real time all the metrics for the libraries found.

- Signals: A simple widget that displays which files were used for the selected benchmark.

- Best Library Results: Differs depending on the kind of project selected in NanoEdge, but the goal is to showcase the result of every signal inferred by the best library found. See below for more information.

- Best Library Confusion Matrix: The classical confusion matrix found in any data science application.

- Search space: Shows in real time which combinations of preprocessing and model are being tested. The blue line is the current best combination.

- Best Library Learning Curve (anomaly detection only): Displays the theoretical performance of a retrained model for each number of learning iterations.

- Libraries Tested by Model: Summarizes the total number of libraries found, filtered by kind of model.

- Cross Validation Results: Displays multiple standard data science metrics obtained on the cross-validation datasets (the number of cross-validations is set per kind of project).

- Real-Time Performance Evolution By Model: Displays in real time the balanced accuracy of every library, filtered by model.

Best Library Results variations:

The Best Library Results plot is different for each kind of project in NanoEdge AI Studio:

- The anomaly detection plot (top left) shows the similarity score (%) vs. the signal number. The threshold (decision boundary between the two classes, "nominal" and "anomaly"), set at 90% similarity, is shown as a gray dashed line.

- The outlier detection plot (top right) shows a 2D projection of the decision boundary separating regular signals from outliers. The outliers are the few (~3-5%) signal examples, among all the signals imported as "regular", which appear to be most different from the rest (~95-97%).

- The extrapolation plot (bottom left) shows the extrapolated value (estimated target) vs. the real value, which was provided in the input files.

- The Classification plot (bottom right) shows probability percentage of the signal (the % certainty associated with the class detected) vs. the signal number.

3.4.4. Possible cause for poor benchmark results

If you keep getting poor benchmark results, try the following:

- Increase the "max RAM" or "max flash" parameters (such as 32 Kbytes or more).

- Adjust your sampling frequency; make sure it is coherent with the phenomenon you want to capture.

- Change your buffer size (and hence, signal length); make sure it is coherent with the phenomenon to sample.

- Make sure your buffer size (number of values per line) is a power of two (exception: Multi-sensor).

- If using a multi-axis sensor, treat each axis individually by running several benchmarks with a single-axis sensor.

- Increase (or decrease, more is not always better) the number of signal examples (lines) in your input files.

- Check the quality of your signal examples; make sure they contain the relevant features / characteristics.

- Check that your input files do not contain (too many) parasite signals (for instance no abnormal signals in the nominal file, for anomaly detection, and no signals belonging to another class, for classification).

- Increase (or decrease) the variety of signal examples in your input files (number of different regimes, classes, signal variations, or others).

- Check that the sampling methodology and sensor parameters are kept constant throughout the project for all signal examples recorded.

- Check that your signals are not too noisy, too low intensity, too similar, or lack repeatability.

- Remember that microcontrollers are resource-constrained (audio/video, image, and voice recognition might be problematic).
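Some of the checks in this list, such as a constant line length and a power-of-two buffer size, are easy to automate before importing a file. The sketch below is one possible way to do it. It assumes that the buffer size is counted per axis and that values are separated by whitespace (pass sep="," for comma-separated files); the helper names are hypothetical.

```python
def is_power_of_two(n):
    """True for 1, 2, 4, 8, ... (bit trick: a power of two has a single set bit)."""
    return n > 0 and (n & (n - 1)) == 0


def check_signal_lines(lines, n_axes, sep=None):
    """Return (line_number, message) pairs for the lines that fail a check."""
    problems = []
    for number, line in enumerate(lines, start=1):
        values = line.split(sep)
        if len(values) % n_axes != 0:
            problems.append(
                (number, f"{len(values)} values is not a multiple of {n_axes} axes"))
            continue
        per_axis = len(values) // n_axes
        if not is_power_of_two(per_axis):
            problems.append(
                (number, f"{per_axis} values per axis is not a power of two"))
    return problems


# Single-axis example: only the first line has a power-of-two length.
example_lines = ["1 2 3 4", "1 2 3", "1 2 3 4 5 6"]
for number, message in check_signal_lines(example_lines, n_axes=1):
    print(f"line {number}: {message}")
```

For Multi-sensor projects, which are the stated exception to the power-of-two rule, only the first check (a consistent number of values per line) remains relevant.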

You may also look at the guidelines for a successful NanoEdge AI project.

Low confidence scores are not necessarily an indication of poor benchmark performance, if the main benchmark metric (accuracy, recall, R²) is sufficiently high (> 80-90%).

Always use the associated emulator to determine the performance of a library, preferably using data that has not been used before (for the benchmark).

3.5. Validation

In the validation step, you can compare the behavior of all the libraries saved during the benchmark on a new dataset. The goal of this step is to make sure that the best library given by NanoEdge is indeed the best among all the others, but also to see if the libraries behave properly.

The most important features of this step are:

- Experiment: Select multiple libraries to compare them using the same test files.

- Serial emulator: Test a specific library using serial.

- Execution time: Get an estimation of the library's execution time.

- Report: Get a report containing all the information of the selected library.

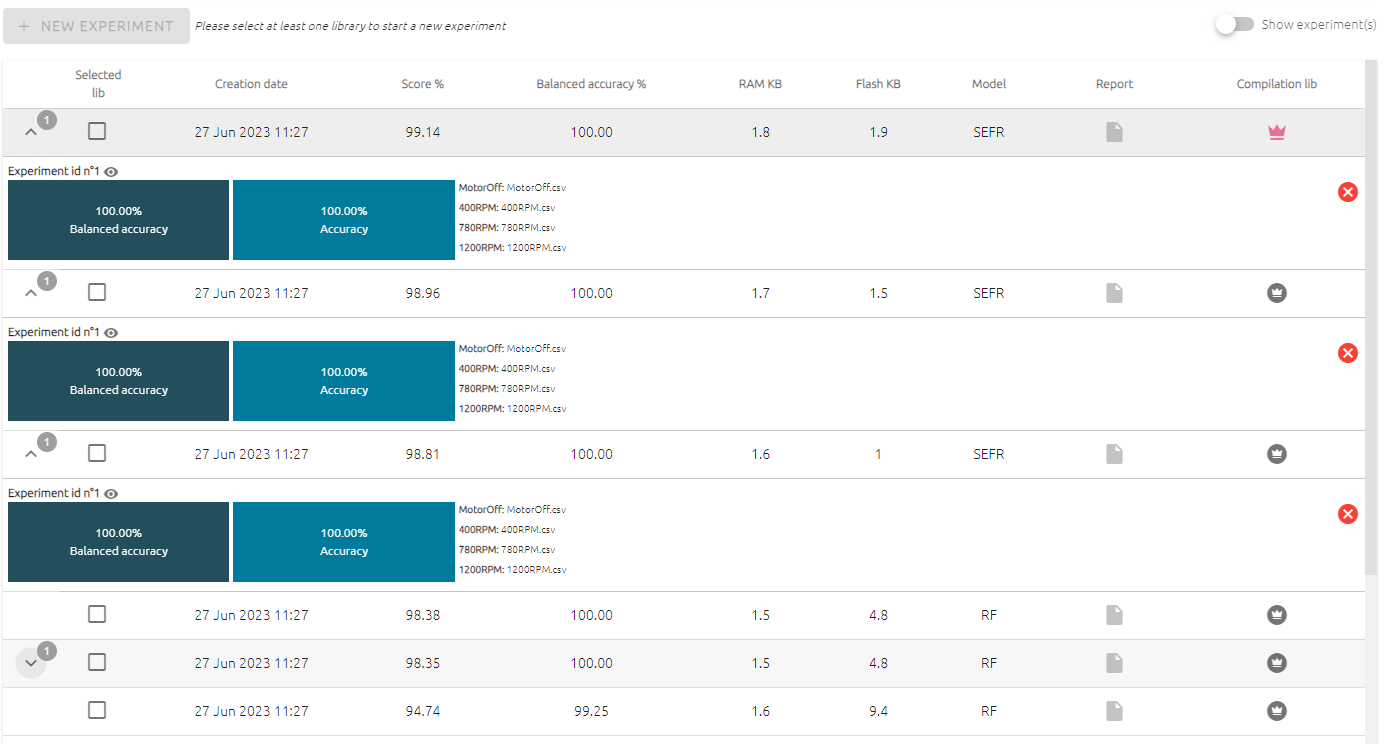

3.5.1. Experiment

The goal of the experiments is to compare libraries with each other to select the one that best corresponds to your needs.

The page lists all the libraries found during the benchmark.

- Select several libraries.

- Click 'new experience'.

- Import the test datasets (preferably dataset not used in the benchmark).

Each time a new experiment is run, an ID is associated with it. This lets you create multiple experiments with different files and libraries, and keep track of all their results thanks to the IDs.

You can filter the results of an experiment by clicking the eye icon next to its ID.

3.5.2. Serial emulator

The serial emulator is made to test a specific library and its behavior using serial. Each project type has its own emulator (see below), but they share some common aspects:

- COM port: You need to select the right COM port (left).

- Serial result: You can afterward download the logged data used for the test (right).

- Executables: You can download the Windows or Linux emulator executables (right).

The emulator can be used within the Studio interface, or downloaded as a standalone .exe (Windows®) or .deb (Linux®) to be used in the terminal through the command-line interface. This is especially useful for automation or scripting purposes.

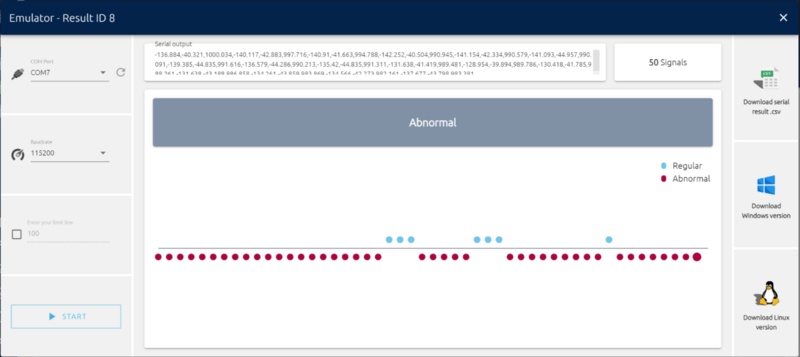

Anomaly detection:

Anomaly detection models are designed to be retrainable directly on the microcontroller. To that end, they are trained using only nominal data, and tested on nominal and abnormal data.

To use the emulator, you first need to retrain the model by logging nominal data. A minimum number of learning iterations is displayed; it corresponds to the minimum number of learning iterations computed during the benchmark to ensure the same level of performance as displayed in the benchmark. Once finished, click on go to detection.

The detection screen displays:

- The last inference similarity: The similarity score is a value between 0 and 100% representing the likelihood that the signal is nominal. The threshold is generally set at 90%; below it, the signal is considered abnormal.

- The similarity plot: A plot representing the similarity score of all the signals of the current simulation. It is possible to change the threshold used to distinguish nominal from abnormal.

- The total repartition pie chart: Another plot displaying the distribution of nominal and abnormal detections based on the set threshold.
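The threshold logic behind the similarity plot and the pie chart can be sketched in a few lines. This is only an illustration of the rule stated above (a similarity below the threshold counts as abnormal); the function name and the sample scores are made up for the example.

```python
from collections import Counter


def summarize_detections(similarities, threshold=90):
    """Label each similarity score (0-100) and count the labels.

    A score below `threshold` is treated as an anomaly, otherwise as nominal.
    """
    labels = ["nominal" if s >= threshold else "anomaly" for s in similarities]
    return labels, Counter(labels)


# Hypothetical similarity scores from one emulation session.
scores = [100, 95, 90, 89, 12]
labels, counts = summarize_detections(scores)
print(labels)
print(dict(counts))
```

Raising the threshold makes detection stricter (more signals flagged as abnormal), while lowering it makes the library more tolerant.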

Outlier detection:

In outlier detection, the emulator displays whether the signal is a regular signal (nominal) or an outlier (abnormal).

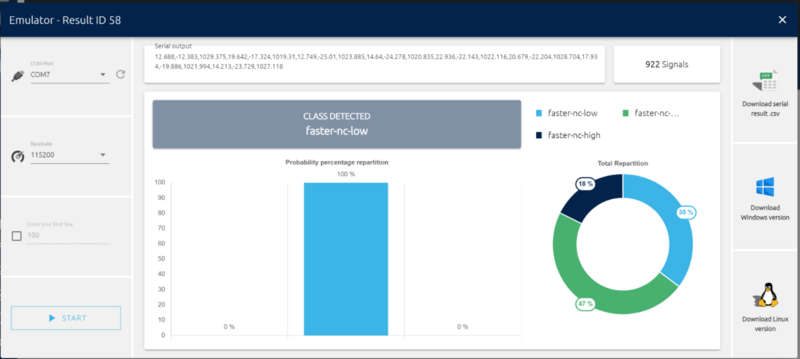

Classification:

In classification, the emulator displays:

- The detected class: The class with the highest probability.

- The probability percentage repartition: A plot displaying the probability that the signal belongs to each class. It gives more detail than the class alone: you can see whether the model is sure about its decision or is hesitating between two or more classes.

- The total repartition pie chart: Another plot to display the total number of detections of each class during the current emulation.

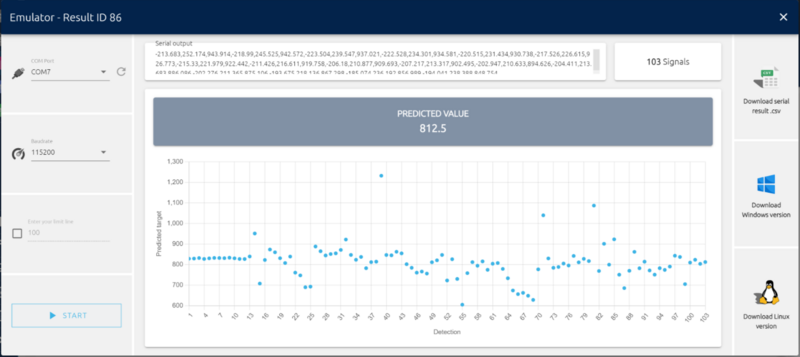
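The way the detected class and the "hesitation" between classes relate to the per-class probabilities can be sketched as follows. The margin of 0.2 between the two most likely classes is an arbitrary value chosen for the example, and the function is hypothetical, not part of the emulator.

```python
def detect_class(probabilities, hesitation_margin=0.2):
    """Return (detected_class, hesitating) from a {class_name: probability} dict.

    The detected class is the one with the highest probability. The result is
    flagged as hesitating when the runner-up is within `hesitation_margin`.
    """
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    best_class, best_p = ranked[0]
    runner_up_p = ranked[1][1] if len(ranked) > 1 else 0.0
    hesitating = (best_p - runner_up_p) < hesitation_margin
    return best_class, hesitating


# Hypothetical outputs for two inferences on a motor monitoring project.
confident = {"normal": 0.90, "misalignment": 0.06, "imbalance": 0.04}
uncertain = {"normal": 0.45, "misalignment": 0.40, "imbalance": 0.15}
print(detect_class(confident))
print(detect_class(uncertain))
```

In an application, a hesitating result could for instance be ignored or confirmed over several consecutive inferences before acting on it.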

Extrapolation:

The emulator in extrapolation displays the current predicted value and plots all the predicted values below.

3.5.3. Possible causes of poor emulator results

Here are possible reasons for poor anomaly detection or classification results:

- The data used for library selection (benchmark) is not coherent with the one you are using for testing via emulator/library. The signals imported in the Studio must correspond to the same machine behaviors, regimes, and physical phenomena as the ones used for testing.

- Your main benchmark metric was well below 90% or your confidence score was too low to provide sufficient data separation.

- The sampling method is inadequate for the physical phenomena studied, in terms of frequency, buffer size, or duration for instance.

- The sampling method has changed between benchmark and emulator tests. The same parameters (frequency, signal lengths, buffer sizes) must be kept constant throughout the whole project.

- The machine status or working conditions might have drifted between benchmark and emulator tests. In that case, update the imported input files, and start a new benchmark.

- [Anomaly detection]: You have not run enough learning iterations (your machine learning model is not rich enough), or this data is not representative of the signal examples used for benchmark. Do not hesitate to run several learning cycles, as long as they all use nominal data as input (only normal, expected behavior must be learned).

3.5.4. Execution time

NanoEdge AI Studio allows for the estimation of execution time for any library encountered during the benchmark with the STM32F411 simulator provided by Arm, utilizing a hardware floating-point unit.

The estimation is an average of multiple calls to the NanoEdge AI library functions tested. The tool does not directly use the user data, but data of a similar range, to make the estimation.

It is important to note that while this estimation mimics real hardware conditions, it must be treated as an estimate only, and variations in the exact signal may impact execution time. Keep in mind that using different hardware can lead to significant changes in execution time.

3.5.5. Validation report

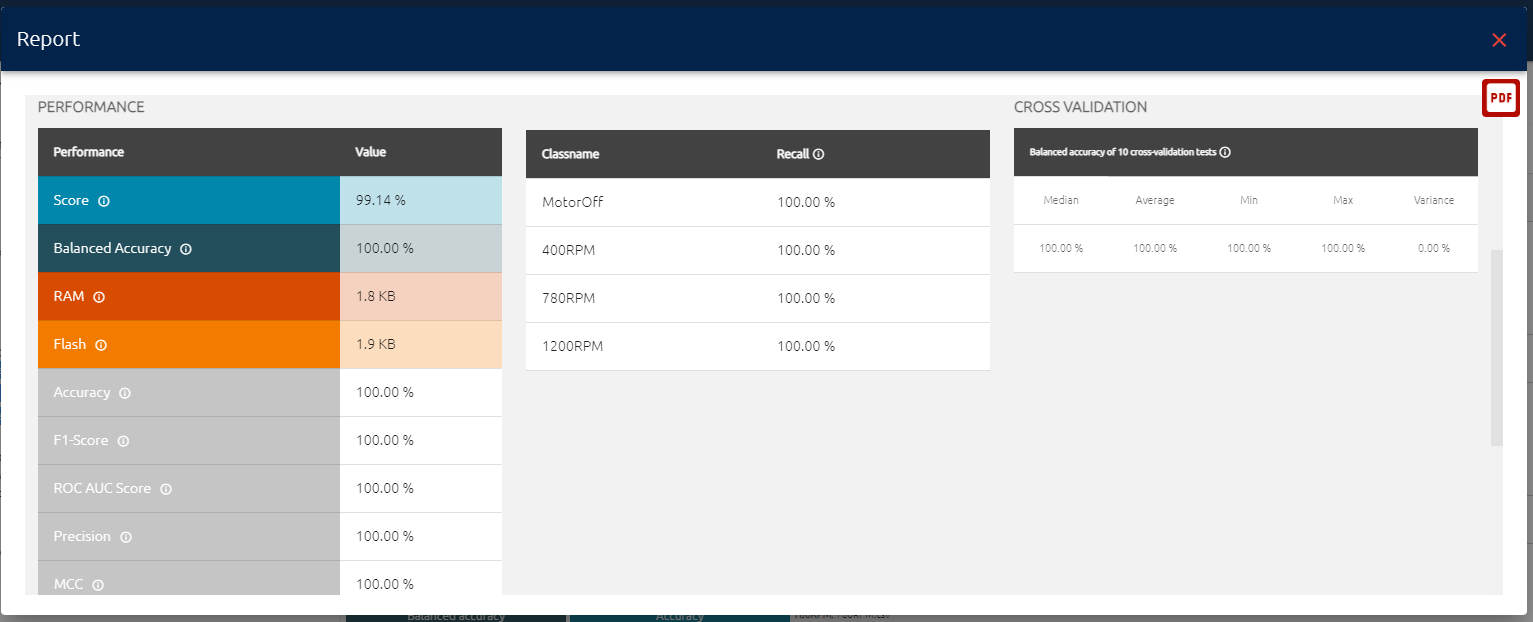

For each library displayed, the user can click on the blank sheet of paper to open the validation report.

The validation report contains information about:

The data:

- The name of each file used for the benchmark

- The data sensor type: sound, vibration, and others

- The signal length (with the total signal length and the signal length per axis)

- The number of signals in each file

- The data repartition score (goes up to five stars)

The performance:

- The main metrics: Score, balanced accuracy, RAM, and flash memory usage

- More specific metrics: Accuracy, f1 score, ROC AUC score, precision, MCC

- The recall per class: To know which class performs well, which class performs badly

- The nested cross validation results (10 tests): Show how the model performed on 10 test datasets (to monitor overfitting)

An algorithm flowchart:

- The entry data shape

- The preprocessing applied to the data

- The model architecture name

The user can export the summary page as a PDF using the top right red PDF button.

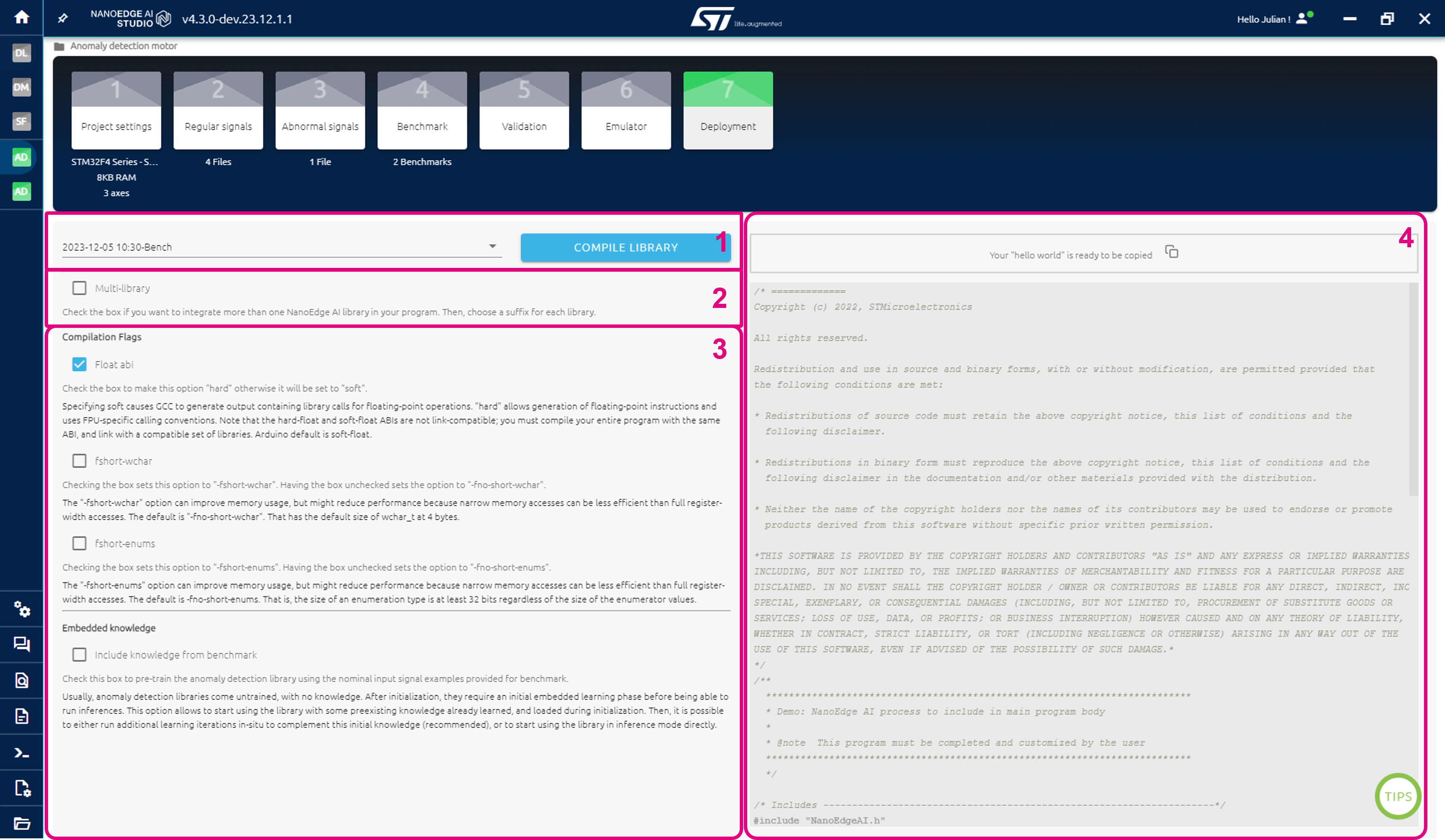

3.6. Deployment

The last step is the Deployment step, where you get everything ready to be deployed to the target microcontroller to build the embedded application.

The deployment screen is intended for four main purposes:

- Select a benchmark and compile the associated library by clicking the COMPILE LIBRARY button.

- Optional: Select multi-library, to deploy multiple libraries to the same MCU. More information below.

- Optional: Select compilation flags to be considered during library compilation.

- Optional: Copy a "Hello, World!" code example, to be used for inspiration.

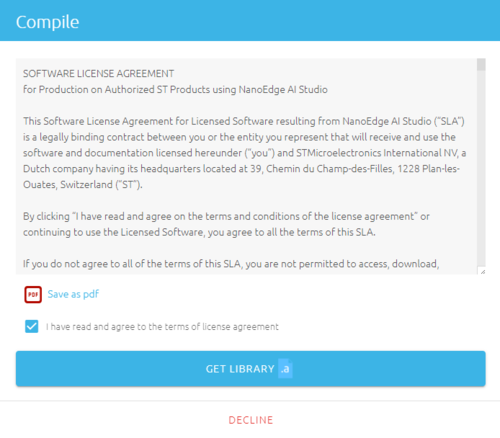

If the target selected at the beginning of the project is a production ready board, you need to agree to the license agreement:

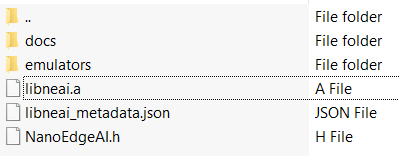

After clicking compile and selecting your library type, a .zip file is downloaded to your computer.

It contains:

- libneai.a: the static precompiled NanoEdge AI library file, in other words, the algorithm found in NanoEdge AI Studio.

- NanoEdgeAI.h: the header containing the NanoEdge AI Studio functions for you to use in your embedded project.

- knowledge.h: the parameters of the model. By default, in Classification, Outlier and Extrapolation, this file is present because they are static models (the weights never change). In Anomaly detection, it is recommended to use the learn function to retrain the model (in effect, recreating a new knowledge). If needed, an option is available to get the knowledge in Anomaly detection.

- The NanoEdge AI emulators (both Windows® and Linux® versions)

- metadata.json: Some library metadata information.

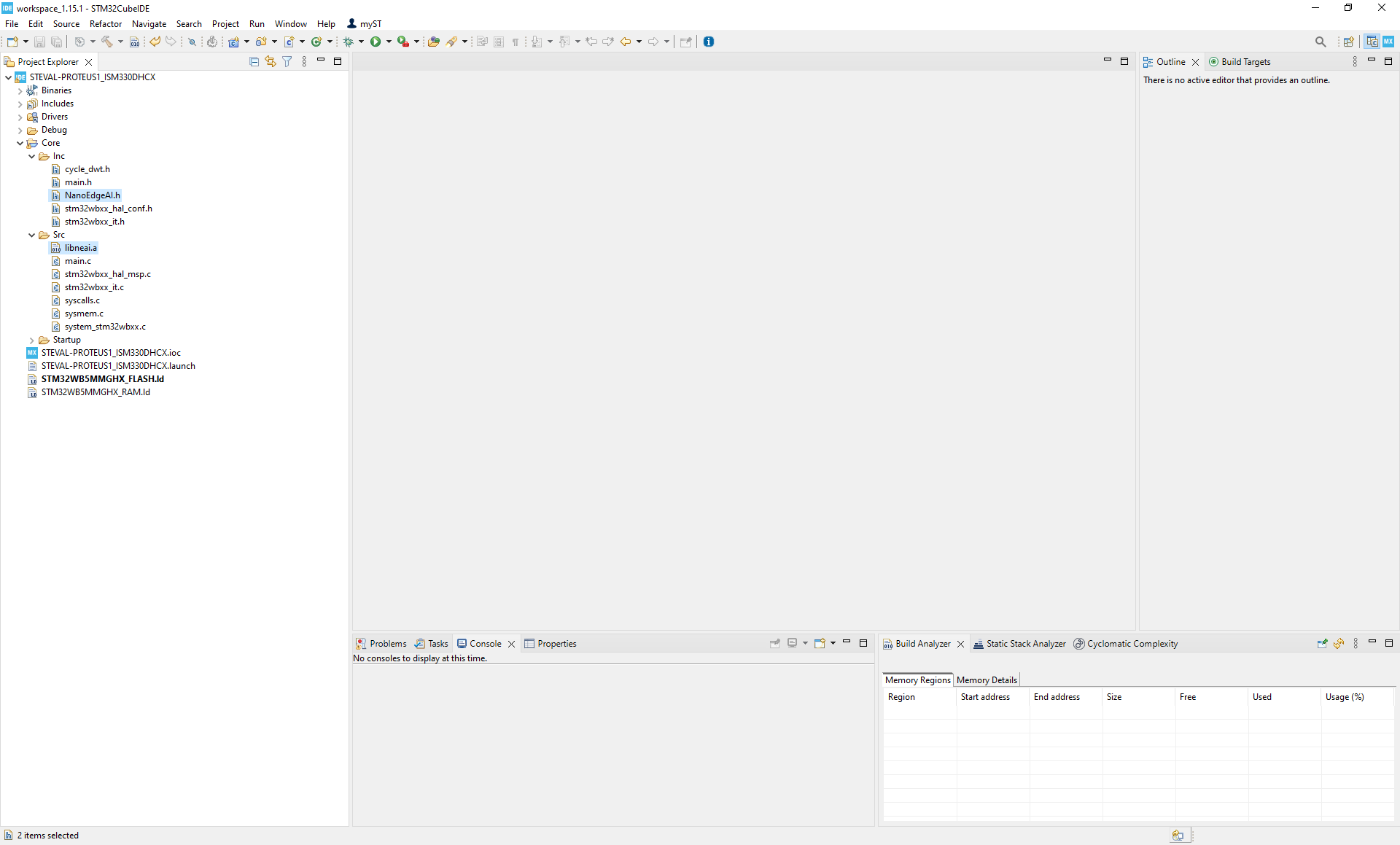

3.6.1. Add NanoEdge AI Library to a STM32CubeIDE project

To use the AI library, you need to:

- Add libneai.a in Core/Src.

- Add NanoEdgeAI.h in Core/Inc/.

- Add knowledge.h in Core/Inc/ (for Anomaly detection, it is recommended to use the learn function instead of the knowledge).

Then you need to add #include "NanoEdgeAI.h" and #include "knowledge.h" in your main.c and link libneai.a. To link the library:

- Right click on your project (in my case STEVAL-PROTEUS1_ISM330DHCX).

- Open the Properties.

- Then go to C/C++ build > Settings > MCU GCC Linker > Libraries.

- Add neai in Libraries (-l).

- Add "${workspace_loc:/${ProjName}/Core/Src}" in Library search path (-L).

Finally, you are ready to use the NanoEdge AI Studio functions. In short, you call an initialization function once and make sure that it returns NEAI_OK, then fill a buffer and call another function to run an inference. It is then up to you to build your application using these blocks.

To learn more about the NanoEdge AI libraries, refer to their documentation:

- Anomaly detection library

- One-class classification library

- N-class classification library

- Extrapolation library

3.6.2. Arduino target compilation

If an Arduino board was selected in project settings, the .zip obtained in compilation contains an additional arduino folder. This folder contains another .zip that can be imported directly in the Arduino IDE to use NanoEdge.

In the Arduino IDE:

- Click sketch > include library > add .zip library....

- Select the .zip in the arduino folder.

If you already used a NanoEdge AI library in the past, you might get an error saying that you already have a library with the same name. To solve it, go to Document/arduino/libraries and delete the nanoedge folder. Then try importing your library again in the Arduino IDE.

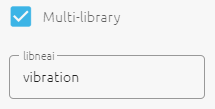

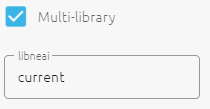

3.6.3. Multi-library

The multi-library feature can be activated on the deployment screen just before compiling a library.

It is used to integrate multiple libraries into the same device / code, when there is a need to:

- Monitor several signal sources coming from different sensor types, concurrently, independently.

- Train the machine learning models and gather knowledge from these different input sources.

- Decide based on the outputs of the machine learning algorithms for each signal type.

For instance, one library can be created for 3-axis vibration analysis, and suffixed vibration:

Later on, a second library can be created for 1-axis electric current analysis, and suffixed current:

All the NanoEdge AI functions in the corresponding libraries (as well as the header files, variables, and knowledge files if any) are suffixed appropriately, and are usable independently in your code. See below the header files and the suffixed functions and variables corresponding to this example:

3.6.4. Offline Compilation

To compile a library, you must have an internet connection to reach the compilation server. If you are on a PC that has no access to the internet, NanoEdge gives you a compilation string in the deployment step:

You need to transfer this compilation string to a PC with an internet connection. You can then get your library from our compilation website: paste the string, build the library, and download it.

4. Integrated NanoEdge AI Studio tools

This section presents the tools integrated in NanoEdge AI Studio to help you carry out a project. They are:

- The datalogger generator, to create code to collect data on a development board in a few clicks.

- The sampling finder, to estimate the data rate and signal length to use for a project in a few seconds.

- The data manipulation tool, to reshape the dataset easily.

These tools can be accessed:

- In the main NanoEdge AI Studio screen.

- At any time, in the left vertical bar.

4.1. Datalogger

Importing signals from serial (USB) is available when creating a project in the NanoEdge AI Studio provided the user has created a datalogger in the code beforehand (specific to the board and the sensor used).

The datalogger screen automatically generates that part for the user. The user only needs to select a board among the ones available and choose which sensor to use and its parameters.

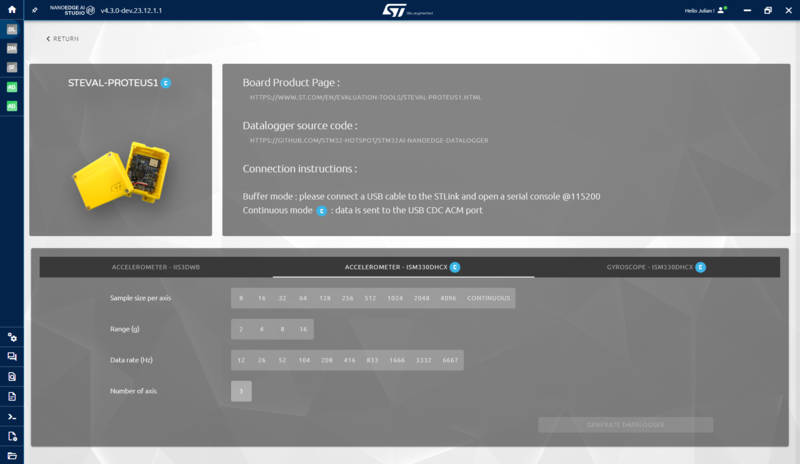

This is the datalogger screen. It contains all the compatible boards that the Studio can generate a datalogger for:

Click on a board to access the page to choose a sensor and its parameters.

The parameterization screen contains:

- The selected board

- The list of sensors available on the board

- The list of parameters specific to the sensor selected

- A button to generate the datalogger

The user must select the options corresponding to the data to be used in a project and click generate datalogger. The Studio generates a zip file containing a binary file. The user only needs to load the binary file on the microcontroller to be able to import signals for a project directly from serial (USB).

4.1.1. Continuous datalogger

For some sensors on some development boards, the "continuous" option is available as a parameter for the data rate (Hz). If selected, the datalogger records data continuously at the maximum data rate available on the sensor.

When the continuous data rate is selected, the "sampling size per axis" parameter disappears, as the new signal size is simply the number of axes. For example, if you have a 3-axis accelerometer, each line of your file contains three values.

For speed reasons, continuous dataloggers use CDC USB instead of UART. In practice, this means that the board's USB port is used to send data to the PC instead of the ST-Link port. Make sure you connect the board to the PC using its USB port, otherwise you will not get any data from the datalogger. This also means that once you have flashed the datalogger, you no longer need the ST-Link to record data.

4.1.2. Arduino data logger

The NanoEdge datalogger can also generate code for Arduino boards. Because of how Arduino works, there is no need to select a specific board, only the sensor to use.

To be able to use the code generated, here are the steps to follow:

- Select Arduino in the data logger generator.

- Select the sensor and set its parameters.

- Click generate and get the .zip containing the code from NanoEdge.

- Extract the .ino file from the NanoEdge .zip.

- Open it with Arduino IDE.

- Select the board that you are using: Tools > board.

- Include all the libraries called in the C code (generally the sensor and wire.h): Sketch > include library > manage library...

- Flash the code.

You are now ready to log data via serial.

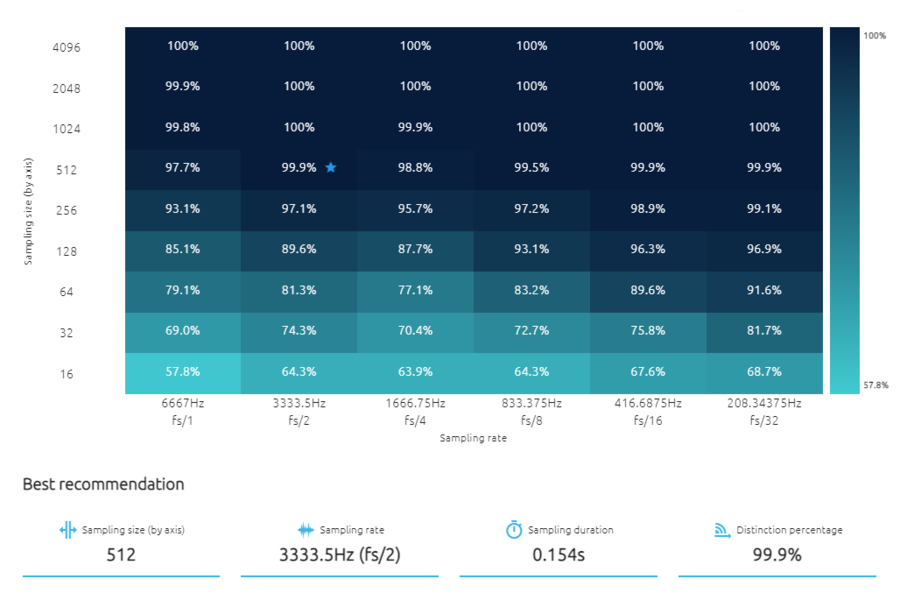

4.2. Sampling finder

The sampling rate and sampling size are two parameters that have a considerable impact on the final results given by NanoEdge. A wrong choice can lead to very poor results, and it is difficult to know which sampling rate and sampling size to use.

The tool is designed to help users make informed decisions regarding the sampling rate and data length, leading to accurate and efficient analysis of time-series signals in IoT applications.

How the sampling finder works:

The sampling finder needs continuous datasets logged with the highest data rate possible. The signals in a dataset need to have only one sample per line, meaning, for example, three values per line if working with a 3-axis accelerometer.

The tool reshapes these data to create buffers of multiple sizes (from 16 to 4096 values per axis).

When creating the buffers, the sampling finder also skips values to simulate data logged with a lower sampling rate. The range of frequencies tested goes from the base frequency to the frequency divided by 32. For example, to create a buffer with half the initial data rate, the sampling finder only uses one value out of every two.
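The reshaping and value skipping described above can be illustrated with a short sketch. It is not the sampling finder's actual code: the function names are hypothetical, and it assumes a continuous recording stored as one (x, y, z) sample per line and buffers built from consecutive, non-overlapping samples.

```python
def subsample(samples, factor):
    """Keep one sample out of every `factor` to simulate a lower data rate."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    return samples[::factor]


def make_buffers(samples, size_per_axis):
    """Cut consecutive samples into flat buffers of `size_per_axis` samples each.

    Leftover samples that do not fill a complete buffer are dropped.
    """
    buffers = []
    for start in range(0, len(samples) - size_per_axis + 1, size_per_axis):
        chunk = samples[start:start + size_per_axis]
        buffers.append([value for sample in chunk for value in sample])
    return buffers


# 64 continuous 3-axis samples, assumed to be logged at 1600 Hz.
base_rate_hz = 1600
samples = [(i, 10 * i, 100 * i) for i in range(64)]

factor = 2                           # simulate 1600 / 2 = 800 Hz
halved = subsample(samples, factor)
buffers = make_buffers(halved, size_per_axis=16)
print(base_rate_hz / factor, len(buffers), len(buffers[0]))
```

Each resulting buffer contains 16 samples per axis, that is 48 values for three axes, which corresponds to one candidate combination of sampling rate and sampling size.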

With all the combinations of sampling sizes and sampling rates, the tool then applies feature extraction algorithms to extract the most meaningful information from the buffers. Working with features instead of the whole buffers makes it much faster.

The tool tries to distinguish all the imported files using fast machine learning algorithms and estimates a score. The final recommended combination is the one that worked best, with both a good score and a small sampling duration.

How to use the sampling finder:

To use the sampling finder tool, first import continuous datasets with the highest data rate possible (see the file format above):

- If working with anomaly detection, import one file of nominal signals and one file of abnormal signals.

- If working with Classification, import one file of each class.

The steps to use the sampling finder are the following:

- Import the files to distinguish.

- Enter the number of axes in the files.

- Enter the sampling frequency used.

- Choose the minimum frequency to test (the maximum number of subdivisions of the base sampling frequency).

- Start the research.

The sampling finder fills the matrix with the results, giving an estimation for each combination of sampling rate and sampling size, and makes a recommendation.

After that, the next step is to log data at the sampling rate and sampling size recommended by the tool and create a new project in NanoEdge using these data.

4.3. Feature Importance

Some input features (columns) have little impact on a machine learning algorithm’s output. This tool helps identify the most valuable features in your dataset and the most useful sensors based on your setup.

The idea is to find which features are useful, then modify your data accordingly and create new projects to get better results. Benefits include reduced memory usage, lower computational requirements, and prevention of bandwidth saturation during data logging, which lowers production costs. Keeping only the relevant features maintains the algorithm’s performance while improving memory efficiency when benchmarking your NanoEdge AI libraries.

How to use the Feature Importance tool:

Because this tool computes the importance of each feature in a signal, it is intended for cross-sectional projects, where one column corresponds to one feature.

To compute how much each feature contributes to identifying a class, the tool needs datasets representing at least two different classes:

- Import a dataset for a first class (or more)

- Import a dataset for a second class (or more)

- Continue if you have more classes

- Click Start Feature Importance

The tool works as follows:

- Computes new metrics based on your signals

- Studies the correlation between your features

- Trains a model to study the importance of the features

After a short time, the tool outputs the importance percentage of every feature in your signals and plots the result.

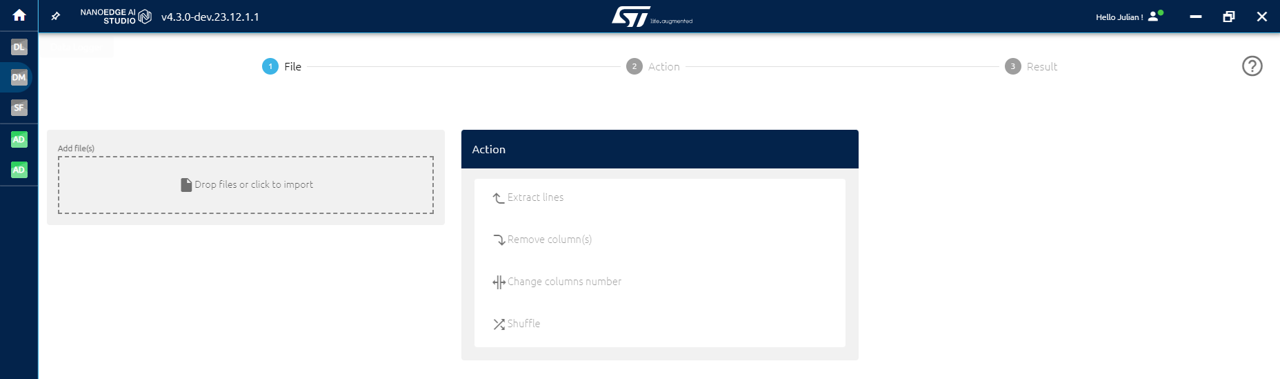
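The idea of ranking features by how well they separate classes can be illustrated with a small sketch. The tool's actual metrics and model are not described here, so this example swaps in a simple Fisher-style score (between-class variance divided by within-class variance), normalized to percentages. The function name `fisher_importance` and the sample data are hypothetical.

```python
from statistics import mean, pvariance


def fisher_importance(classes):
    """Return one importance percentage per feature (column).

    `classes` is a list of datasets, one per class. Each dataset is a list
    of rows, and each row is a list of feature values (1 column = 1 feature).
    This Fisher-style score is a stand-in, not the tool's own method.
    """
    n_features = len(classes[0][0])
    scores = []
    for j in range(n_features):
        # Values of feature j, grouped by class.
        columns = [[row[j] for row in rows] for rows in classes]
        overall = mean(v for col in columns for v in col)
        between = sum(len(col) * (mean(col) - overall) ** 2 for col in columns)
        within = sum(len(col) * pvariance(col) for col in columns)
        # Small constant avoids division by zero for constant features.
        scores.append(between / (within + 1e-12))
    total = sum(scores)
    return [100.0 * s / total if total > 0 else 0.0 for s in scores]


# Feature 0 differs between the two classes; feature 1 does not.
class_a = [[1.0, 5.0], [1.2, 3.0], [0.8, 4.0]]
class_b = [[3.0, 4.5], [3.2, 3.5], [2.8, 4.0]]
importance = fisher_importance([class_a, class_b])
```

In this example, the first feature receives almost all of the importance because its mean differs between the classes, while the second feature has the same mean in both classes.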

4.4. Data manipulation

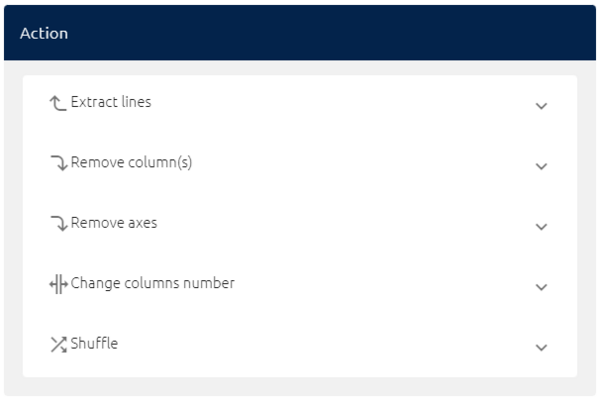

NanoEdge AI Studio provides a screen for manipulating data. This page is composed of:

- The file section: This part is used to manage your imported files.

- The actions section: This part is used to choose a modification to apply to your imported files.

- The result section: This column displays your files after they have been modified.

File section:

The file section contains all the imported files, displayed in a column, and includes:

- A "drop files or click to import" button to import one or multiple files at once.

- The list of imported files. The name of each file and its number of lines and columns are displayed by default. Additionally, you can preview an entire file by clicking the arrow in the bottom-right corner.

Concatenate: If you import multiple files, a concatenate option appears at the top of the file column. You can concatenate files in two ways:

- Row-Wise: If you have a file A with 100 lines and a file B with 50 lines, the concatenated file has 150 lines. Both files must have the same number of columns.

- Column-Wise: If you have a file A with 30 columns and a file B with 50 columns, the concatenated file has 80 columns. If the files do not have the same number of lines, the tool concatenates what it can, meaning that you risk having lines with different numbers of columns.

To concatenate, you need to run an action with the concatenate option active. It is recommended to use the extract lines action to perform the concatenation.
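The two concatenation modes can be sketched as follows, with each file represented as a list of rows. This is an illustration only: the function names are hypothetical, and the handling of files with different numbers of lines (extra lines are kept unchanged) is an assumption based on the description above.

```python
from itertools import zip_longest


def concat_rows(a, b):
    """Row-wise: append the lines of b after the lines of a."""
    if a and b and len(a[0]) != len(b[0]):
        raise ValueError("row-wise concatenation needs the same number of columns")
    return a + b


def concat_columns(a, b):
    """Column-wise: join line i of a with line i of b.

    Assumption: when one file has more lines, its extra lines are kept
    as they are, so the result can contain lines of different lengths.
    """
    return [row_a + row_b for row_a, row_b in zip_longest(a, b, fillvalue=[])]


file_a = [[0.0] * 3 for _ in range(100)]   # 100 lines, 3 columns
file_b = [[1.0] * 3 for _ in range(50)]    # 50 lines, 3 columns
by_rows = concat_rows(file_a, file_b)      # 150 lines, 3 columns
by_columns = concat_columns(file_a, file_b)
```

Here `by_columns` has 100 lines: the first 50 have 6 columns and the remaining 50 have only 3, which shows why column-wise concatenation is safest when both files have the same number of lines.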

Action section:

The action section contains all the actions that can be used to modify your files. The following actions are available:

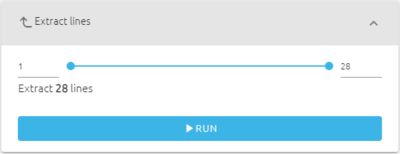

- Extract lines: Truncate lines at the beginning and at the end of a file. Specify the lines to extract in the fields or with the blue bar.

- Remove columns: Delete the selected columns. Select a single column, a range of columns (example 10-20) or multiple columns (example 10,23,32) to delete them.

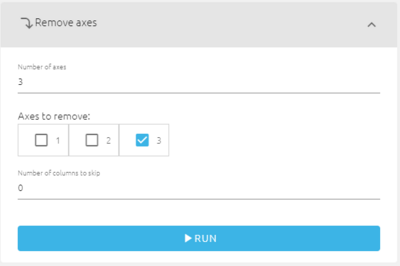

- Remove axes: Enter the number of axes in the file, then select the axes to delete. You can optionally enter an offset.

The difference between remove columns and remove axes is that with remove axes, you can easily delete the third axis of 3-axis accelerometer data just by entering 3 as the number of axes and checking the third box. To do the same with remove columns, you need to manually select every column corresponding to the third axis.
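The effect of remove axes on a single line can be sketched as follows. This is not the tool's implementation: the function name is hypothetical, the sketch assumes that axes are interleaved within each line (x1, y1, z1, x2, y2, z2, ...), and the optional offset is not modeled.

```python
def remove_axes(row, n_axes, axes_to_remove):
    """Drop all values belonging to the given axes (numbered from 1).

    Assumption: values are interleaved, one value per axis for each sample.
    Hypothetical helper, for illustration only.
    """
    drop = {axis - 1 for axis in axes_to_remove}
    return [value for i, value in enumerate(row) if i % n_axes not in drop]


# Two samples of 3-axis data: (x1, y1, z1, x2, y2, z2).
line = [0.1, 0.2, 9.8, 0.3, 0.4, 9.7]
without_z = remove_axes(line, n_axes=3, axes_to_remove=[3])
```

Removing the third axis this way is equivalent to deleting columns 3, 6, 9, and so on with remove columns.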

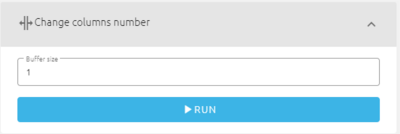

- Change columns number: Reshape the signals in a file by changing their size (the buffer size). Enter the signal length to which the data must be reshaped.

For example, the user can modify signals of size 4096 to smaller signals of size 1024. After applying the data manipulation, all signals of size 4096 are divided into four signals of size 1024 (meaning that the user has four times more signals of size 1024 than of size 4096 previously).

- Shuffle: Randomly mix all the lines of the imported files. Just click Run.
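The change columns number and shuffle actions can be sketched as follows. This is a minimal illustration with hypothetical function names. It assumes that each signal is split independently and that the original length is a multiple of the new length; how the tool handles other cases is not specified here.

```python
import random


def change_columns_number(rows, new_length):
    """Split every signal (line) into consecutive signals of `new_length`.

    Assumption: each line length is a multiple of `new_length`.
    Hypothetical helper, for illustration only.
    """
    reshaped = []
    for row in rows:
        if len(row) % new_length != 0:
            raise ValueError("signal length must be a multiple of new_length")
        reshaped.extend(row[i:i + new_length]
                        for i in range(0, len(row), new_length))
    return reshaped


def shuffle_lines(rows, seed=None):
    """Return a copy of the lines in random order."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    return shuffled


signals = [list(range(4096)) for _ in range(10)]    # 10 signals of size 4096
smaller = change_columns_number(signals, 1024)      # 40 signals of size 1024
mixed = shuffle_lines(smaller, seed=0)
```

As in the example above, the 10 signals of size 4096 become 40 signals of size 1024. Shuffling changes only the order of the lines, not their content.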

Result section:

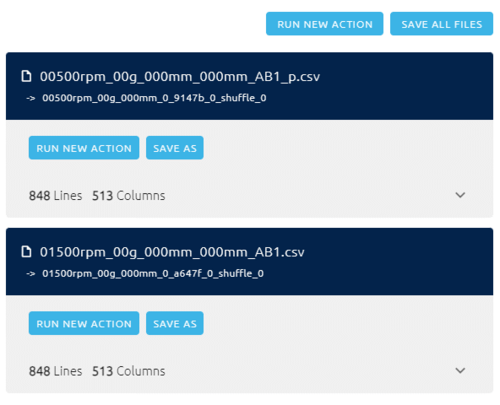

The result section contains the files that went through a data manipulation. For each imported file, a new result file is generated after performing any action. All the generated result files are displayed in a column:

- For each result file, a name containing information about the action performed is generated. The name of the original file is also shown in parentheses, along with the number of lines and columns. You can click the arrow in the bottom-left corner to show more information about the file.

- You can save any file in the result column by clicking the save as button. Additionally, you can choose to perform new actions on a result file by clicking the run new action button. This results in moving the file from the result column to the file column (the imported files).

- The save all files button appears if there are at least two result files. You can use it to save all the result files at once.

5. Resources

Recommended: NanoEdge AI Datalogging MOOC.

Documentation

All NanoEdge AI Studio documentation is available here.

Tutorials

Step-by-step tutorials to use NanoEdge AI Studio to build a smart device from A to Z.