1. Introduction

Bluetooth® Low Energy audio is a new feature that enables audio streaming over Bluetooth® Low Energy.

Almost every Bluetooth® device now uses Bluetooth® Low Energy, except for audio streaming devices, which require a dual-mode controller to stream audio over classic Bluetooth®. The Bluetooth® Special Interest Group (Bluetooth® SIG) therefore defined a solution to enable and enhance audio streaming over Bluetooth® Low Energy.

Classic Bluetooth® (BR/EDR) audio is already the most widely used wireless audio system in the world. Bluetooth® Low Energy audio is its next generation. Compared with classic Bluetooth®, Bluetooth® Low Energy reduces power consumption and opens up new possibilities for audio streaming.

One of them is Auracast™ broadcast audio, a new Bluetooth® capability that lets one audio source share its audio with multiple listeners. It is built on three fundamentals:

- Share your audio: Auracast™ broadcast audio lets you invite others to share in your audio experience, bringing us closer together.

- Unmute the world: Auracast™ broadcast audio lets you fully enjoy television in public spaces, unmuting what was once silent and creating a more complete watching experience.

- Hear your best: Auracast™ broadcast audio allows you to hear your best in the places you go.

More information about Bluetooth® Low Energy audio and Auracast™ is available in [1] [2] [3] [4].

The STM32WBA from STMicroelectronics brings Bluetooth® Low Energy audio to the STM32 family. The STM32WBA55G-DK development kit gives access to the embedded Bluetooth® Low Energy audio software so that users can test and work with it. Four demonstrations are available; they are listed in Use case profile. These applications are included in the STM32CubeWBA MCU package.

Figure 1.2 STM32WBA55G-DK1

2. LC3 codec

Bluetooth® Low Energy audio is based on a new audio codec: LC3, the Low Complexity Communication Codec, designed by Fraunhofer. This codec is mandatory and free to use in Bluetooth® Low Energy audio products, so Bluetooth® Low Energy audio is fully interoperable and does not depend on vendor-specific codecs.

LC3 delivers higher quality than the mandatory codec of classic Bluetooth® audio. It was designed mainly for embedded products: it combines low complexity and a small memory footprint with high audio quality.

The LC3:

- is channel independent: can be configured/used for mono or stereo streams

- is suitable for both music and voice (from narrow band, 8 kHz, to full band, 48 kHz)

- interworks well with mobile network codecs, which reduces audio quality losses

- allows very low latency (the latency is defined in the audio profiles)
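The relation between sampling frequency, frame size, and bitrate can be illustrated with a minimal sketch. It assumes LC3 frame durations of 7.5 ms or 10 ms and uses two example frame sizes (40 and 120 octets); these figures and the `Lc3Setting` name are assumptions made for illustration and are not taken from this article, so they should be checked against the BAP specification [7].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lc3Setting:
    """One LC3 operating point (illustrative names, not an ST API)."""
    sampling_hz: int        # audio sampling frequency
    frame_us: int           # frame duration in microseconds (7500 or 10000)
    octets_per_frame: int   # size of one encoded frame, per audio channel

    def samples_per_frame(self) -> int:
        # Number of PCM samples consumed per channel for each encoded frame.
        return self.sampling_hz * self.frame_us // 1_000_000

    def bitrate_bps(self) -> int:
        # Encoded bits per frame divided by the frame duration.
        return self.octets_per_frame * 8 * 1_000_000 // self.frame_us


# Assumed example settings: wideband voice and full-band music.
voice = Lc3Setting(sampling_hz=16_000, frame_us=10_000, octets_per_frame=40)
music = Lc3Setting(sampling_hz=48_000, frame_us=10_000, octets_per_frame=120)

for label, setting in (("voice", voice), ("music", music)):
    print(label, setting.samples_per_frame(), "samples/frame,",
          setting.bitrate_bps(), "bit/s per channel")
```

Because LC3 is channel independent, a stereo stream carries one such frame per channel, so its total bitrate is twice the per-channel value computed here.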

Compared with the codecs typically used for classic Bluetooth® audio:

- LC3 has a much higher quality than SBC, at the cost of somewhat higher complexity

- LC3 has a better quality than OPUS, with less complexity

- LC3 has a lower quality than LDAC or aptX (and their lossless versions), whose complexity is much higher.

Relevant information about LC3 and its integration in our solution can be found in LC3 codec.

For more information, see the studies on LC3 published by the Bluetooth® SIG: [5] [6]

3. Link Layer and isochronous channels

Bluetooth® Low Energy audio also relies on an optional Bluetooth® 5.2 link layer feature: isochronous channels. This feature enables the transmission of a stream over a new kind of link: the isochronous stream.

There are two types of isochronous streams:

- Connected isochronous stream (CIS): carries an audio stream over a Bluetooth® connection. This is the classic way of doing Bluetooth® Low Energy audio (one connection for one device, like a headset). With two CIS, the user can send synchronized audio streams to two different devices (for earbuds or hearing aids).

- Broadcast isochronous stream (BIS): broadcasts one or two audio streams to any receiver able to receive them. It does not need a connection, so the number of receivers is unlimited.

These two types of isochronous streams add new features to Bluetooth® Low Energy:

- The latency of the system is defined for the whole streaming time (without any drift). This latency is lower than with classic Bluetooth® audio.

- The retransmissions are defined at the same time. The application can request a system that prioritizes the lowest possible latency (which may result in missing some packets due to minimal retransmissions), or a system that prioritizes better quality (more retransmissions make packet loss less likely, but may result in higher latency).
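The trade-off between latency and retransmissions can be sketched with a toy model. This is not the link layer scheduling itself: the `StreamRequest` type, its fields, and the 2.5 ms attempt duration are hypothetical and chosen only for illustration. Each packet gets a fixed number of attempts; once they are used up, the packet is obsolete and is dropped.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Iterator, List


@dataclass(frozen=True)
class StreamRequest:
    """Hypothetical application request; field names are illustrative."""
    retransmissions: int  # extra attempts allowed for each packet
    slot_ms: float        # assumed duration of one transmission attempt

    def worst_case_delay_ms(self) -> float:
        # The receiver must allow for every permitted attempt, so this
        # budget is fixed for the whole stream.
        return (1 + self.retransmissions) * self.slot_ms


def send_stream(packets: int, request: StreamRequest,
                channel: Iterator[bool]) -> List[bool]:
    """Return one flag per packet: True if it arrived within its attempts.

    `channel` yields True when a transmission attempt is received.
    """
    delivered = []
    for _ in range(packets):
        ok = False
        for _attempt in range(1 + request.retransmissions):
            if next(channel):
                ok = True
                break  # stop retransmitting once the packet is received
        delivered.append(ok)  # after the last attempt the packet is obsolete
    return delivered


low_latency = StreamRequest(retransmissions=0, slot_ms=2.5)
high_reliability = StreamRequest(retransmissions=2, slot_ms=2.5)

# Deterministic toy channel: every other attempt is lost.
for request in (low_latency, high_reliability):
    result = send_stream(4, request, cycle([False, True]))
    print(request.worst_case_delay_ms(), "ms budget ->", result)
```

With this toy channel, the low-latency request loses every other packet, while the high-reliability request delivers all of them at the price of a delay budget three times larger.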

Comparing an isochronous stream to the classic Bluetooth® audio, some important differences can be noted:

| HFP/HSP | A2DP | Bluetooth® LE Audio with BAP |
|---|---|---|
| Data is sent over SCO (Synchronous Connection-Oriented) | Data is sent over ACL (Asynchronous Connection-Less) | Data is sent over an isochronous data stream (CIS or BIS) |
| Data is continuous | Data is continuous | Data is synchronized on a timestamp |
| There is no retransmission in SCO | All packets are transmitted, which can lead to an unbounded number of retransmissions if the link quality is bad | Data can be retransmitted, but has a limited validity in time and becomes obsolete after a certain time |
| Latency is constant but is not defined | Latency is not defined and can show lags or drifts | Latency is always defined in the system and cannot change during the stream |

An isochronous stream enables the sender to "stream" audio like a radio.

Apart from the isochronous channels, Bluetooth® Low Energy audio needs two more features:

- Extended advertising: carries more advertising data, which is needed, for example, to expose audio capabilities.

- Periodic advertising: sends advertising at a fixed period rather than at random times. An observer can synchronize to it to get information and follow any changes over time.

4. Audio profiles

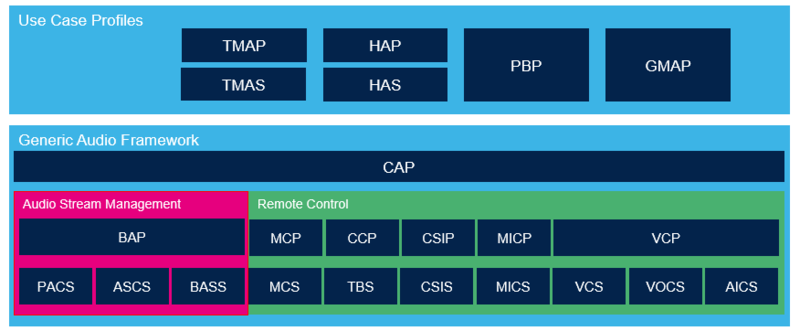

On top of this base (LC3 and isochronous channels), the Bluetooth® SIG developed a whole new framework to configure all types of audio streams.

The base of the audio framework is the audio stream management, defined by the basic audio profile (BAP)[7]. It is the mandatory profile for Bluetooth® Low Energy audio. It defines the stream types, the configurations, the capabilities, the quality, the latency, etc.

BAP is used with three services:

- Published audio capabilities service (PACS): exposes the audio capabilities of the device.

- Audio stream control service (ASCS): configures the Unicast stream.

- Broadcast audio scan service (BASS): lets a server ask clients to scan for broadcast audio streams on its behalf.

BAP defines two types of streams, Unicast and Broadcast, described below.

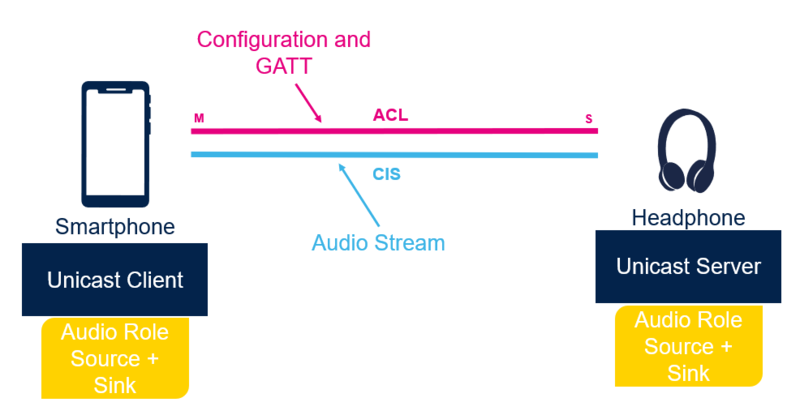

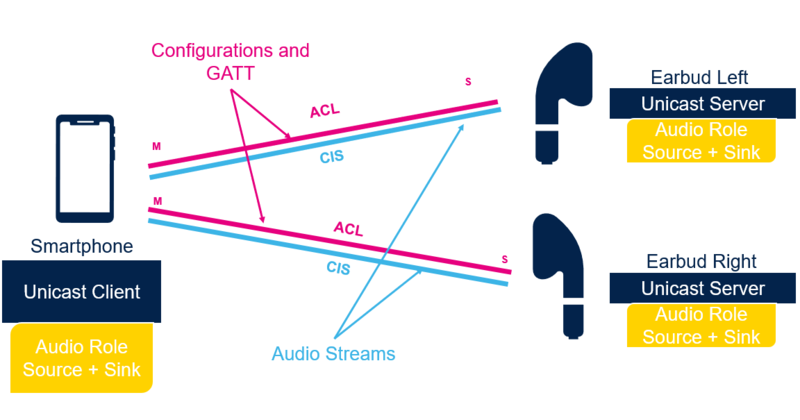

5. Unicast

Unicast is the connected audio stream, based on the connected isochronous stream (CIS). To create a Unicast stream, an ACL connection is first needed to exchange all the useful information over GATT and LLCP. Unicast is divided into two roles:

- Unicast client: establishes a connection to the Unicast server, discovers its capabilities, and configures the audio stream. This role is used by a smartphone, a laptop, a television, etc.

- Unicast server: advertises its role, exposes its capabilities, and accepts the audio stream configuration from the Unicast client. This role can be used by headphones, a speaker, hearing aids, earbuds, or even a microphone.

Moreover, the Unicast client can stream to two Unicast servers synchronously.

To initiate a Unicast audio stream over the ACL connection, the Unicast client and server exchange information regarding the stream quality, retransmissions, number of audio channels (mono/stereo), and latency.

The stream can be:

- Unidirectional (for music) or bidirectional (for calls). This is called the topology of the audio stream. It is selected by the Unicast client from what the Unicast server exposes. All the possible topologies are listed in the BAP specification.[7]

- Low quality or high quality. The quality is also selected by the Unicast client from what the Unicast server exposes. All these possibilities are also listed in the BAP specification.[7]

- Low latency or high reliability. This is negotiated between the Unicast client and the Unicast server, as described in the BAP specification.[7]
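The selection made by the Unicast client can be sketched as follows. This is a simplified model under stated assumptions, not the BAP procedure: the `ServerCapabilities` type, the `plan_stream` function, and the direction labels are hypothetical, and the capabilities are reduced to supported sampling rates per direction.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Optional, Sequence


@dataclass(frozen=True)
class ServerCapabilities:
    """Simplified view of what a Unicast server exposes (illustrative)."""
    sink_rates_hz: FrozenSet[int]    # rates the server can receive and play
    source_rates_hz: FrozenSet[int]  # rates the server can capture and send


def pick_rate(supported: FrozenSet[int], preferred: Sequence[int]) -> Optional[int]:
    # Return the first client preference that the server also supports.
    for rate in preferred:
        if rate in supported:
            return rate
    return None


def plan_stream(caps: ServerCapabilities, use_case: str,
                preferred: Sequence[int] = (48_000, 32_000, 24_000, 16_000),
                ) -> Optional[Dict[str, int]]:
    """Choose a topology and a rate per direction, or None if impossible."""
    to_server = pick_rate(caps.sink_rates_hz, preferred)
    if to_server is None:
        return None
    if use_case == "media":   # unidirectional: client to server only
        return {"client_to_server": to_server}
    if use_case == "call":    # bidirectional: the server also sends audio
        to_client = pick_rate(caps.source_rates_hz, preferred)
        if to_client is None:
            return None
        return {"client_to_server": to_server, "server_to_client": to_client}
    raise ValueError(f"unknown use case: {use_case}")


headset = ServerCapabilities(frozenset({16_000, 48_000}), frozenset({16_000}))
print(plan_stream(headset, "media"))
print(plan_stream(headset, "call"))
```

The client walks its preference list from highest to lowest quality, so the outcome always stays within what the server exposes. A server without any source capability (a speaker, for example) cannot be given a bidirectional topology in this model.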

The principal use cases are:

- 1 phone connected to 1 headset/speaker (see figure 5.1)

- 1 phone connected to 2 earbuds/hearing aids (see figure 5.2)

6. Broadcast

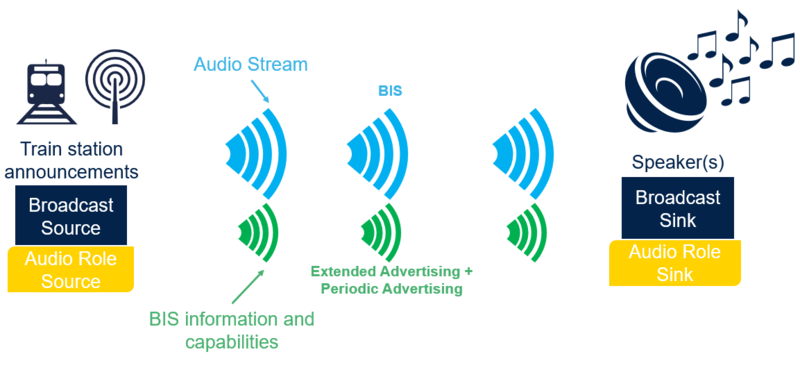

Broadcast is a nonconnected audio stream, based on the broadcast isochronous stream (BIS). The sender broadcasts audio data over BIS and exposes its broadcast information through extended advertising and periodic advertising. Any receiver capable of scanning and synchronizing to this audio stream can play it. This new use case allows an unlimited number of users to listen to the same audio stream.

It can be music on a phone shared with friends, an announcement such as a conference translated live into another language, or a train announcement received directly in the earbuds.

This stream is public, but it can be encrypted, in which case a password is needed to receive it. As the stream is broadcast, it can only be unidirectional.

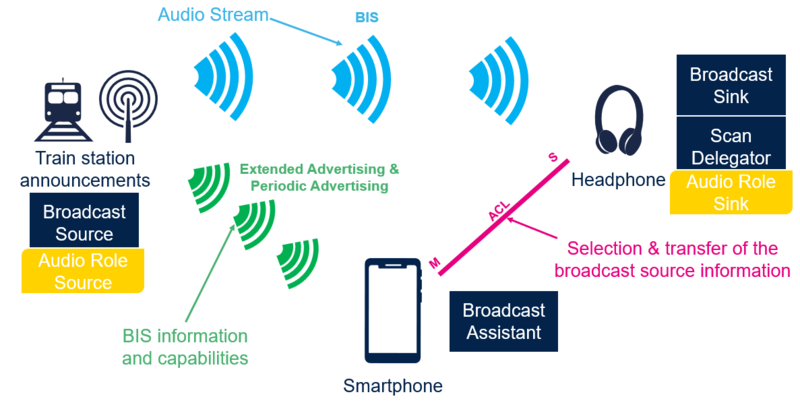

BAP also adds another feature. As the broadcast is not connected, the user needs a way to select the stream and synchronize to it on the receiver. However, most receivers have no screen and too few buttons to select an audio stream efficiently. BAP therefore defines the broadcast assistant role: a second device, for example a phone controlling a hearing device, scans for broadcast streams and sends the selected stream's information to the hearing device.

Broadcast defines four roles:

- Broadcast source: establishes a BIS and advertises all the information over extended advertising and periodic advertising.

- Broadcast sink: scans advertising to find the broadcast source, and synchronizes to it to receive the audio stream.

- Scan delegator: exposes its capabilities and waits for a broadcast assistant to send it commands and information about a broadcast source. The scan delegator also has the broadcast sink role, so that it can synchronize to the selected broadcast source.

- Broadcast assistant: scans advertising to find broadcast sources, and discovers the scan delegator capabilities. It sends the information of the broadcast source to the scan delegator.
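The interaction between these roles can be sketched as a small model. All class, method, and field names below are hypothetical and chosen for illustration only; the list of sources stands in for real advertising reports, and the password check is a simplification of how an encrypted broadcast is handled.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class BroadcastInfo:
    """What a broadcast source advertises (simplified, illustrative)."""
    name: str
    broadcast_id: int
    encrypted: bool = False


class ScanDelegator:
    """Receiver without a screen; it also acts as broadcast sink."""

    def __init__(self) -> None:
        self.synced_to: Optional[int] = None

    def add_source(self, info: BroadcastInfo, password: Optional[str] = None) -> bool:
        # An encrypted stream cannot be received without its password.
        if info.encrypted and password is None:
            return False
        self.synced_to = info.broadcast_id  # sink role: synchronize to the stream
        return True


class BroadcastAssistant:
    """Phone-like device that scans on behalf of the scan delegator."""

    def __init__(self, on_air: List[BroadcastInfo]) -> None:
        self._on_air = on_air  # stands in for real advertising reports

    def scan(self) -> List[BroadcastInfo]:
        return list(self._on_air)

    def select(self, delegator: ScanDelegator, name: str,
               password: Optional[str] = None) -> bool:
        # Forward the chosen source's information to the scan delegator.
        for info in self.scan():
            if info.name == name:
                return delegator.add_source(info, password)
        return False


on_air = [BroadcastInfo("Platform 3", 0x1001),
          BroadcastInfo("Lounge TV", 0x1002, encrypted=True)]
phone = BroadcastAssistant(on_air)
hearing_aid = ScanDelegator()
print(phone.select(hearing_aid, "Platform 3"), hearing_aid.synced_to)
```

The receiver never scans by itself in this sketch: the assistant does the scanning and the user interaction, and the scan delegator only acts on the information it is given.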

The principal use cases are:

- 1 phone/laptop/television sending the same audio to multiple audio devices.

- 1 announcement in a public space for anyone wanting to receive it through Bluetooth®.

- 1 video or conference broadcast for people with hearing loss, or translated into other languages.

Below are examples of an announcement in a train station broadcast to anyone. The speaker (or any other audio device) can synchronize to the broadcast source by itself (see figure 6.1), or with the help of a smartphone in the broadcast assistant role (see figure 6.2).

7. Remote control profiles

Now that the audio stream is up, the device needs other profiles to manage what is being listened to: music, a call, and so on. This is the role of the following profiles, which add even more interoperability between devices.

The generic audio framework defines these profiles to control audio-related processes:

- Media control profile (MCP) and its services ((Generic) media control service - GMCS & MCS): The peripheral device can control the media on the central (start, pause, play, stop, next track) and read information such as the track name and the track position.[8]

- Call control profile (CCP) and its services ((Generic) telephone bearer service - GTBS & TBS): The peripheral can perform the call actions available on the phone (answer, decline, hold, terminate the call) and read information such as the caller name, the signal strength, the provider, etc.[9]

- Coordinated set identification profile (CSIP) and its service (Coordinated set identification service - CSIS): It enables multiple devices to identify themselves as a coordinated set (earbuds or a sound system, for example). With this information, the central is able to connect to all the devices of the set and configure them together, for audio streams and controls.[10]

- Microphone control profile (MICP) and its service (Microphone control service - MICS): It allows the user to control the audio input selection and its properties, such as the volume offset, mute, and unmute.[11]

- Volume control profile (VCP) and its services (Volume control service - VCS, volume offset control service - VOCS, audio input control service - AICS): It allows the user to control the volume of the audio stream, including the offset, the current volume position, mute, and unmute. It also allows selecting the audio output of the audio stream.[12]

The common audio profile (CAP) ties BAP together with all the profiles above, creating a full framework to handle the audio streams. It links call or media commands with the start and stop of the audio stream, and with reading the related information (music title, caller name, and so on). It also links commands together when they need to be sent to multiple peripherals inside one coordinated set (like two earbuds).[13]
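Sending one command to every member of a coordinated set can be sketched as follows. This is a minimal model under stated assumptions: `Peripheral`, `set_id`, and `set_volume_on_set` are hypothetical names, and refusing the command when a member is missing is a design choice of this sketch rather than a rule taken from the CAP specification [13].

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass
class Peripheral:
    name: str
    set_id: str     # stand-in for the identity shared by a coordinated set
    set_size: int   # number of members the set announces
    volume: int = 0


def group_by_set(peripherals: Iterable[Peripheral]) -> Dict[str, List[Peripheral]]:
    # Gather the discovered peripherals by the set they belong to.
    sets: Dict[str, List[Peripheral]] = {}
    for peripheral in peripherals:
        sets.setdefault(peripheral.set_id, []).append(peripheral)
    return sets


def set_volume_on_set(peripherals: Iterable[Peripheral], set_id: str, volume: int) -> bool:
    """Apply one volume command to every member of a complete set."""
    members = group_by_set(peripherals).get(set_id, [])
    # Sketch-level choice: act only when all announced members were found.
    if not members or len(members) != members[0].set_size:
        return False
    for member in members:
        member.volume = volume
    return True


left = Peripheral("left earbud", set_id="A", set_size=2)
right = Peripheral("right earbud", set_id="A", set_size=2)
lone_speaker = Peripheral("rear speaker", set_id="B", set_size=2)
found = [left, right, lone_speaker]

print(set_volume_on_set(found, "A", 40), left.volume, right.volume)
print(set_volume_on_set(found, "B", 40), lone_speaker.volume)
```

Grouping first and commanding second keeps both earbuds at the same volume: either every member of the set receives the command, or none does.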

More details of the profile and its parameters/possibilities are listed inside Architecture and integration.

8. Use case profile

Above the CAP are the use case profiles. They define capabilities and procedures that allow a device to interoperate with as many devices as possible.

- Public broadcast profile (PBP): Made for public broadcast (such as announcements) and receivers (any user). It uses only the broadcast streams.[14]

  - Two applications made with PBP are already available: STM32WBA public broadcast profile

- Telephony and media audio profile (TMAP): Made for call and media use cases, it can use broadcast and unicast.[15]

  - Two applications made with TMAP are already available: STM32WBA telephony & media audio profile

- Hearing aid profile (HAP): Made for hearing aids use cases.[16]

- Gaming audio profile (GMAP): Made for gaming use cases.[17]

9. References

- [1] Auracast™ | Bluetooth®

- [2] Auracast™ Broadcast audio: The next-generation ALS

- [3] How Auracast™ broadcast audio sparks a new wave of audio innovation

- [4] LE audio: The future of Bluetooth® audio

- [5] Performance characterization of the Low complexity communication codec

- [6] The LC3 difference

- [7] BAP specification

- [8] MCP specification

- [9] CCP specification

- [10] CSIP specification

- [11] MICP specification

- [12] VCP specification

- [13] CAP specification

- [14] PBP specification

- [15] TMAP specification

- [16] HAP specification

- [17] GMAP specification