This article describes how X-CUBE-AI supports quantized models.

1. Quantization overview

The X-CUBE-AI code generator can be used to deploy a quantized model. In this article, “Quantization” refers to the 8-bit linear quantization of an NN model. (Note that X-CUBE-AI also supports pretrained Deep Quantized Neural Network (DQNN) models; see the Deep Quantized Neural Network (DQNN) support article.)

Quantization is an optimization technique [ST 4] that compresses a 32-bit floating-point model: it reduces the model size (smaller storage size and lower peak memory usage at runtime) and improves CPU/MCU usage and latency (including power consumption), at the cost of a small degradation in accuracy. A quantized model executes some or all of its tensor operations with integers rather than floating-point values. Quantization is an important part of the optimization techniques that can be applied to address resource-constrained runtime environments, alongside topology-oriented techniques, feature-map reduction, pruning, weight compression, and others.

There are two classical quantization methods: post-training quantization (PTQ) and quantization-aware training (QAT). PTQ is relatively easy to use: it quantizes a pretrained model using a limited, representative data set (also called the calibration data set). QAT is performed during the training process and often gives better model accuracy.
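
In PTQ, the calibration step essentially observes the range of values that each tensor takes over the representative data set. The following NumPy sketch mimics a simple MinMax calibrator for a single activation tensor. The MinMaxObserver class and the random batches are hypothetical stand-ins: the TensorFlow™ Lite converter and ONNX Runtime perform this step internally.

```python
import numpy as np


class MinMaxObserver:
    """Hypothetical helper: tracks the global min/max of a tensor over calibration batches."""

    def __init__(self):
        self.min_val = float("inf")
        self.max_val = float("-inf")

    def update(self, activations: np.ndarray) -> None:
        # Widen the observed range with the values of the current batch.
        self.min_val = min(self.min_val, float(activations.min()))
        self.max_val = max(self.max_val, float(activations.max()))

    def calibrated_range(self):
        # Extend the range to include 0.0 so that zero stays exactly representable.
        return min(self.min_val, 0.0), max(self.max_val, 0.0)


rng = np.random.default_rng(0)
observer = MinMaxObserver()
for _ in range(10):  # 10 hypothetical calibration batches
    batch = rng.normal(loc=1.0, scale=2.0, size=(8, 16)).astype(np.float32)
    observer.update(batch)

rmin, rmax = observer.calibrated_range()
print(f"calibrated range: [{rmin:.3f}, {rmax:.3f}]")
```

The scale and zero_point of the tensor are then derived from such a range, which is why the calibration data must be representative of the deployed data.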

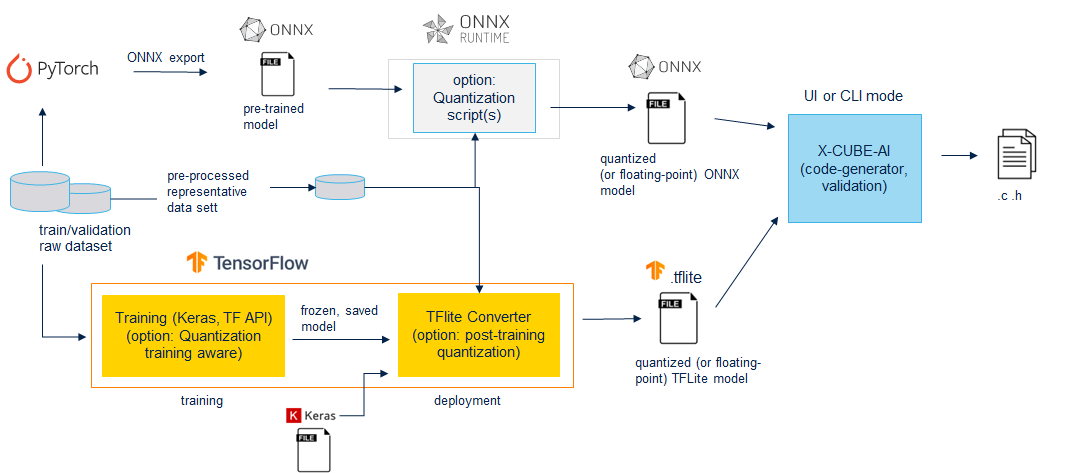

X-CUBE-AI can import different types of quantized models:

- a quantized TensorFlow™ Lite model generated by a post-training quantization or a quantization-aware training process. The calibration is performed by the TensorFlow™ Lite framework, mainly through the “TFLite converter” utility, which exports a TensorFlow™ Lite file.

- a quantized ONNX model based on the operator-oriented (QOperator) or the tensor-oriented (QDQ; Quantize and DeQuantize) format. The first format depends on the supported QOperators (also called QLinearXXX operators), while the second one is more generic: DeQuantizeLinear(QuantizeLinear(tensor)) operators are inserted between the original (float) operators to simulate the quantization and dequantization process. Both formats can be generated with the ONNX Runtime services.
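
To make the QDQ idea concrete, here is a NumPy sketch of what a DeQuantizeLinear(QuantizeLinear(x)) pair computes for an int8 tensor, following the ONNX operator definitions: division by the scale, round-half-to-even, addition of the zero point, saturation to [-128, 127], then the inverse affine mapping. The scale and zero-point values are arbitrary examples.

```python
import numpy as np


def quantize_linear(x, scale, zero_point):
    # ONNX QuantizeLinear with int8 output: round half to even, add zero point, saturate.
    q = np.rint(x / scale) + zero_point
    return np.clip(q, -128, 127).astype(np.int8)


def dequantize_linear(q, scale, zero_point):
    # ONNX DequantizeLinear: (q - zero_point) * scale
    return (q.astype(np.int32) - zero_point).astype(np.float32) * scale


x = np.array([-1.0, -0.26, 0.0, 0.5, 3.0], dtype=np.float32)
scale, zero_point = 0.02, -10
x_qdq = dequantize_linear(quantize_linear(x, scale, zero_point), scale, zero_point)
print(x_qdq)
```

With these parameters, every value except 3.0 is recovered; 3.0 lies outside the representable range and saturates to (127 - (-10)) × 0.02 = 2.74. This rounding and saturation error is exactly what the simulated (fake) quantization exposes to the float graph.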

Figure 1: quantization flow

For optimization and performance reasons, the deployed C-kernels (specialized C implementations of the deployed operators) support only operators whose weights and activations are both quantized. If this pattern is not inferred by the optimizing and rendering passes of the code generator, a floating-point version of the operator is deployed (fallback to 32-bit floating point with inserted QUANTIZE/DEQUANTIZE operators, also known as fake or simulated quantization). Consequently:

- the dynamic quantization approach, which computes the quantization parameters during execution, is NOT supported, to limit the inference overhead in terms of computation and peak memory usage.

- mixed models (with both quantized and floating-point operators) are supported.

The “analyze”, “validate” and “generate” commands can be used without limitations.

```shell
stm32ai analyze -m <quantized_model_file>.tflite
stm32ai validate -m <quantized_model>.tflite -vi test_data.npz
stm32ai analyze -m <quantized_model_file>.onnx
```

2. Quantized tensors

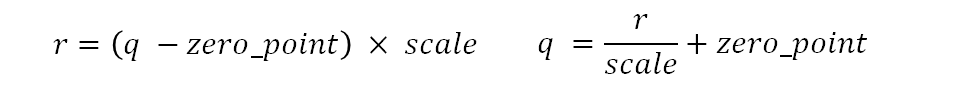

X-CUBE-AI supports 8-bit integer arithmetic (int8 or uint8 data type) for quantized tensors, based on the representation convention used by Google® for quantized models [ST 5]. Each real number r is expressed as a function of the quantized value q, a scale factor (an arbitrary positive real number) and a zero_point parameter: r = scale × (q - zero_point). The quantization scheme is thus an affine mapping of the integers q to real numbers r. zero_point has the same integer C type as the q data.

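The precision depends on the scale factor, and the quantized values are linearly distributed around the zero_point value. For both int8 and uint8, the resolution is therefore constant over the whole range (one step equals scale), unlike the floating-point representation, whose resolution varies with the magnitude of the value.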
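
The following NumPy sketch enumerates all the real values that an 8-bit quantized tensor can represent for a given scale and zero_point, using r = scale × (q - zero_point). It shows that the step between consecutive values is constant and equal to scale, and that an int8 tensor and a uint8 tensor whose zero_point is shifted by 128 cover exactly the same grid. The parameter values are arbitrary.

```python
import numpy as np


def representable_values(scale, zero_point, dtype):
    # r = scale * (q - zero_point) for every integer q of the 8-bit type
    info = np.iinfo(dtype)
    q = np.arange(info.min, info.max + 1, dtype=np.int32)
    return scale * (q - zero_point)


scale = 0.05
r_int8 = representable_values(scale, zero_point=-20, dtype=np.int8)
r_uint8 = representable_values(scale, zero_point=108, dtype=np.uint8)  # -20 + 128

print("range:", r_int8.min(), r_int8.max())
print("constant step:", np.allclose(np.diff(r_int8), scale))
print("same grid for int8 and uint8:", np.allclose(r_int8, r_uint8))
```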

Figure 2: integer precision

2.1. Per-axis (or per-channel or channelwise) vs. per-tensor (or layerwise)

Per-tensor means that the same format (scale/zero_point) is used for the entire tensor (weights or activations). Per-axis, for conv-based operators, means that there is one scale and/or zero_point per filter, independent of the other channels, which gives a better quantization (from an accuracy point of view) with negligible computation overhead.

The per-axis approach is currently the standard method for quantized convolutional kernels (weight tensors). Activation tensors are always quantized per-tensor. The design of the optimized C-kernels is mainly driven by this approach.
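
The benefit of the per-axis approach for weights can be sketched with NumPy. The hypothetical convolution weights below have filters of very different magnitudes: with a single per-tensor scale, the small filters are quantized very coarsely (the smallest one typically collapses to zero), whereas one scale per output filter keeps the error of each filter below half of its own step. Symmetric int8 quantization (zero_point = 0) and an (out_channels, kh, kw, in_channels) weight layout are assumed.

```python
import numpy as np


def symmetric_scale(w, axis=None):
    # Symmetric int8: zero_point = 0 and max |w| is mapped to 127.
    max_abs = np.max(np.abs(w), axis=axis, keepdims=axis is not None)
    return np.maximum(max_abs, 1e-12) / 127.0  # guard against all-zero filters


def fake_quant_symmetric(w, scale):
    # Quantize to int8 codes in [-127, 127], then map back to float.
    return np.clip(np.rint(w / scale), -127, 127) * scale


rng = np.random.default_rng(0)
# Hypothetical conv weights (out_channels, kh, kw, in_channels), filter magnitudes from 0.01 to 10.
magnitudes = np.array([0.01, 0.1, 1.0, 10.0]).reshape(4, 1, 1, 1)
w = rng.normal(size=(4, 3, 3, 8)) * magnitudes

per_tensor_scale = symmetric_scale(w)                   # a single scale
per_channel_scale = symmetric_scale(w, axis=(1, 2, 3))  # one scale per filter, shape (4, 1, 1, 1)

err_tensor = np.abs(fake_quant_symmetric(w, per_tensor_scale) - w).max(axis=(1, 2, 3))
err_channel = np.abs(fake_quant_symmetric(w, per_channel_scale) - w).max(axis=(1, 2, 3))
print("max error per filter (per-tensor): ", err_tensor)
print("max error per filter (per-channel):", err_channel)
```

The extra cost of the per-axis format is only one scale (and zero_point) value per filter, which is why the overhead is negligible compared with the accuracy gain.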

2.2. Symmetric vs. asymmetric

Asymmetric means that the tensor can have its zero_point anywhere within the signed 8-bit range [-128, 127] or the unsigned 8-bit range [0, 255]. Symmetric means that the tensor is forced to have a zero_point equal to zero. Forcing zero_point to zero enables some kernel optimizations that limit the cost of the operations (such as offline pre-calculation). Activations are asymmetric by nature; consequently, the symmetric format is not supported for activations. For weights/bias, both the asymmetric and symmetric formats are supported.
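
The following sketch shows how the quantization parameters are commonly derived from a calibrated range [rmin, rmax] in both formats. These are the usual min/max formulas of the affine scheme; the exact rounding and nudging used by the TensorFlow™ Lite converter or ONNX Runtime may differ. For a ReLU-like activation in [0, 6], the asymmetric format uses all 256 int8 codes, while a symmetric format would leave the negative half unused.

```python
def asymmetric_params(rmin, rmax, qmin=-128, qmax=127):
    # Asymmetric: [rmin, rmax] (extended to contain 0.0) is mapped onto [qmin, qmax].
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)
    if rmax == rmin:  # degenerate all-zero range
        return 1.0, 0
    scale = (rmax - rmin) / (qmax - qmin)
    zero_point = round(qmin - rmin / scale)
    return scale, max(qmin, min(qmax, zero_point))


def symmetric_params(rmin, rmax, qmax=127):
    # Symmetric: zero_point is forced to 0 and the range becomes [-max|r|, +max|r|].
    max_abs = max(abs(rmin), abs(rmax))
    return (max_abs / qmax if max_abs > 0 else 1.0), 0


print(asymmetric_params(0.0, 6.0))  # ReLU-like activation range
print(symmetric_params(0.0, 6.0))
print(symmetric_params(-0.5, 0.8))  # typical weight range
```

With zero_point = 0, the term that involves the zero point disappears from the integer dot products, which is the kind of offline simplification mentioned above.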

2.3. Signed integer vs. unsigned integer - supported schemes

Signed or unsigned integer types can be defined for the weights, for the activations, or for both. However, not all the kernels required by the different optimized combinations of symmetric and asymmetric formats are implemented or relevant. Consequently, only the following integer schemes (combinations) are supported:

| scheme | weights | activations |
|---|---|---|
| ua/ua | unsigned and asymmetric | unsigned and asymmetric |
| ss/sa | signed and symmetric | signed and asymmetric |
| ss/ua | signed and symmetric | unsigned and asymmetric |
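
As an illustration of the table, the hypothetical helper below derives the scheme label of a quantized layer from the integer type and zero points of its weights and from the integer type of its activations, then checks the label against the three supported combinations. It is not an X-CUBE-AI API. For a TensorFlow™ Lite model, this information can, for example, be read from the dtype and quantization_parameters entries returned by tf.lite.Interpreter.get_tensor_details().

```python
import numpy as np

SUPPORTED_SCHEMES = {"ua/ua", "ss/sa", "ss/ua"}


def quantization_scheme(weight_dtype, weight_zero_points, activation_dtype):
    # Weights: sign from the integer type, symmetric only if every zero_point is 0.
    # (Simplification: uint8 weights whose zero points all happen to be 0 are labeled "us".)
    w_sign = "s" if np.dtype(weight_dtype) == np.int8 else "u"
    w_sym = "s" if np.all(np.asarray(weight_zero_points) == 0) else "a"
    # Activations: sign from the integer type; always treated as asymmetric.
    a_sign = "s" if np.dtype(activation_dtype) == np.int8 else "u"
    return f"{w_sign}{w_sym}/{a_sign}a"


for args in [(np.int8, [0, 0, 0], np.int8),  # TFLite post-training quantization
             (np.uint8, [128], np.uint8),    # older quantization-aware training
             (np.int8, [3, -2], np.int8)]:   # asymmetric int8 weights
    scheme = quantization_scheme(*args)
    print(scheme, "supported" if scheme in SUPPORTED_SCHEMES else "not supported")
```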

3. Quantize TensorFlow™ models

X-CUBE-AI can import TensorFlow™ Lite models quantized with quantization-aware training or with post-training quantization. Post-training quantized models (TensorFlow™ v1.15 or v2.x) are based on the “ss/sa” per-channel scheme: activations are asymmetric and signed (int8), and weights/bias are symmetric and signed (int8). Older quantization-aware trained models are based on the “ua/ua” scheme. The “ss/sa” per-channel scheme is now also the preferred scheme for efficiently targeting the Coral Edge TPU™ or the TensorFlow Lite for Microcontrollers runtime.

3.1. Supported/recommended methods

- Post-training quantization: https://www.tensorflow.org/lite/performance/post_training_quantization

- Quantization aware training: https://www.tensorflow.org/model_optimization/guide/quantization/training

| method/option | supported/recommended |
|---|---|
| Dynamic range quantization | not supported, only the static approach is considered |
| Full integer quantization | supported, a representative dataset must be used for the calibration |
| Integer with float fallback (using default float input/output) | supported (mixed model), a representative dataset must be used for the calibration |
| Integer only | recommended, a representative dataset must be used for the calibration |
| Weight-only quantization | not supported |
| Input/output data type | 'uint8', 'int8' and 'float32' can be used |
| Float16 quantization | not supported, only float32 models are supported |
| Per channel | default behavior, cannot be modified |
| Activation type | 'int8', “ss/sa” scheme |
| Weight type | 'int8', “ss/sa” scheme |

3.2. “Integer only” method

The following code snippet illustrates the recommended TFLiteConverter options to enforce the full integer scheme (post-training quantization of all operators, including the input/output tensors).

```python
import tensorflow as tf


def representative_dataset_gen():
    data = tload(...)  # placeholder: load the calibration data set
    for _ in range(num_calibration_steps):
        # Get sample input data as a numpy array in a method of your choosing.
        sample = get_sample(data)
        yield [sample]


converter = tf.lite.TFLiteConverter.from_saved_model(<saved_model_dir>)
# converter = tf.lite.TFLiteConverter.from_keras_model(model)
converter.representative_dataset = representative_dataset_gen
# This enables quantization
converter.optimizations = [tf.lite.Optimize.DEFAULT]
# This ensures that if any ops can't be quantized, the converter throws an error
converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
# These set the input and output tensors to int8
converter.inference_input_type = tf.int8  # or tf.uint8
converter.inference_output_type = tf.int8  # or tf.uint8
quant_model = converter.convert()

# Save the quantized file
with open(<tflite_quant_model_path>, "wb") as f:
    f.write(quant_model)
...
```
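
The placeholders of the snippet above (tload, get_sample, num_calibration_steps) depend on the application. As a sketch, assuming that the calibration samples are already preprocessed and held in a NumPy array of shape (N, H, W, C), a representative dataset generator can be built as follows: each step yields a list with one float32 array per model input, each keeping a batch dimension of 1. The make_representative_dataset function and the random data are hypothetical.

```python
import numpy as np


def make_representative_dataset(samples, num_calibration_steps=100):
    """Hypothetical factory returning a generator function for converter.representative_dataset."""

    def representative_dataset_gen():
        for i in range(min(num_calibration_steps, len(samples))):
            # One list entry per model input; slicing keeps the batch dimension (size 1).
            yield [samples[i:i + 1].astype(np.float32)]

    return representative_dataset_gen


# Hypothetical calibration data: 200 preprocessed 32x32 RGB images.
calibration_samples = np.random.default_rng(0).random((200, 32, 32, 3))
representative_dataset_gen = make_representative_dataset(calibration_samples, num_calibration_steps=50)
# converter.representative_dataset = representative_dataset_gen
```

The samples should be preprocessed exactly as at inference time; otherwise, the calibrated ranges do not match the data seen by the deployed model.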

3.3. “Full integer quantization” method

Because X-CUBE-AI supports mixed models, the full integer quantization method (integer with float fallback) can also be used. In this case, it is preferable to enable the TensorFlow™ Lite built-in operators (TFLITE_BUILTINS), as only a limited number of TensorFlow™ operators are supported.

```python
...
converter = tf.lite.TFLiteConverter.from_saved_model(<saved_model_dir>)
# converter = tf.lite.TFLiteConverter.from_keras_model(model)
converter.representative_dataset = representative_dataset_gen
# This enables quantization
converter.optimizations = [tf.lite.Optimize.DEFAULT]
# This optional setting ensures that TF Lite built-in operators are used.
converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS]
# These set the input and output tensors to float32
converter.inference_input_type = tf.float32  # or tf.uint8, tf.int8
converter.inference_output_type = tf.float32  # or tf.uint8, tf.int8
quant_model = converter.convert()
...
```

3.4. Warning - Usage of the tf.lite.Optimize.DEFAULT

This option enables the quantization process. However, to ensure that both the weights and the activations are quantized, the representative_dataset attribute must always be set. Otherwise, only the weights/parameters are quantized (dynamic range quantization), which reduces the size of the generated file by a factor of about 4. In that case, as with the deprecated OPTIMIZE_FOR_SIZE option, the TFLite file is deployed as a fully floating-point C model with the weights in float (dequantized values).

Note that this option must not be used to convert a TensorFlow™ model.
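
To check that a converted file does not fall into this case, the tensor types of the model can be inspected. The hypothetical helper below lists the tensors that are still float32; it relies only on the name and dtype entries of the dictionaries returned by tf.lite.Interpreter.get_tensor_details(). Without a representative dataset, the activation tensors are expected to remain float32, whereas after integer-only quantization no float32 tensor should remain (with float input/output, only the IO tensors should).

```python
import numpy as np


def float32_tensors(tensor_details):
    """Hypothetical helper: names of the tensors whose type is still float32.

    tensor_details: list of dicts such as those returned by
    tf.lite.Interpreter.get_tensor_details() (only 'name' and 'dtype' are used).
    """
    return [t["name"] for t in tensor_details if np.dtype(t["dtype"]) == np.float32]


# Usage with TensorFlow (the model path is a placeholder):
# interpreter = tf.lite.Interpreter(model_path="quant_model.tflite")
# print(float32_tensors(interpreter.get_tensor_details()))

# Self-contained example with hand-written tensor details:
details = [{"name": "input", "dtype": np.float32},
           {"name": "conv/weights", "dtype": np.int8},
           {"name": "conv/output", "dtype": np.float32}]
print(float32_tensors(details))
```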

4. Quantize ONNX models

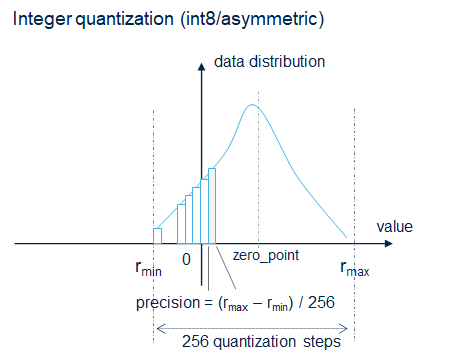

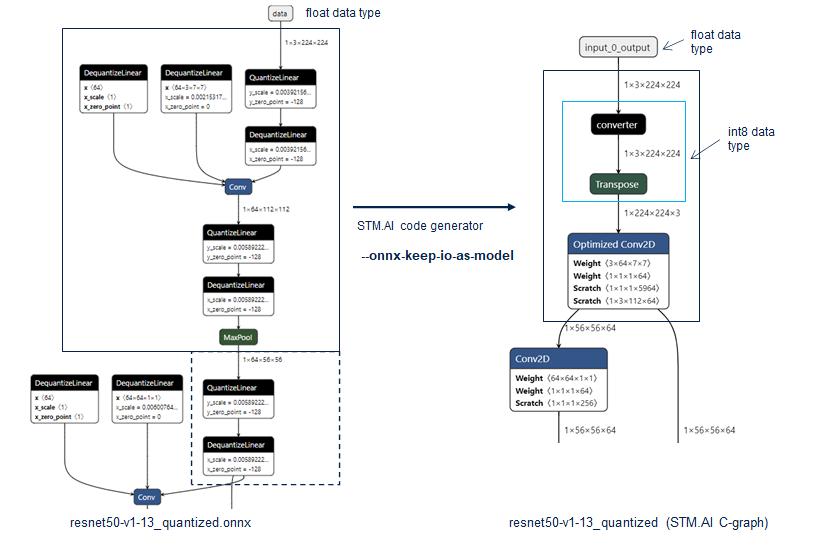

To quantize an ONNX model, it is recommended to use the services of the ONNX Runtime module. The tensor-oriented (QDQ; Quantize and DeQuantize) format is preferred. As illustrated by the following figure, the additional DeQuantizeLinear(QuantizeLinear(tensor)) pairs between the original operators are automatically detected and removed so that the associated optimized C-kernels can be deployed.

Figure 3: resnet 50 example

Comments about the illustrated example:

- the Batch Normalization operators are automatically merged by the quantize function. The model can also be optimized for inference before its quantization (the ONNX Simplifier can also be used):

```shell
python -m onnxruntime.quantization.preprocess --input model.onnx --output model-infer.onnx
```

- as the deployed C-kernels are channel-last (“hwc” data format), a "Transpose" operator has been added to respect the original input data representation. Note that this operation is done in software and its cost can be significant. To avoid it, the option '--no-onnx-io-transpose' can be used, allowing direct processing without a transpose operation.

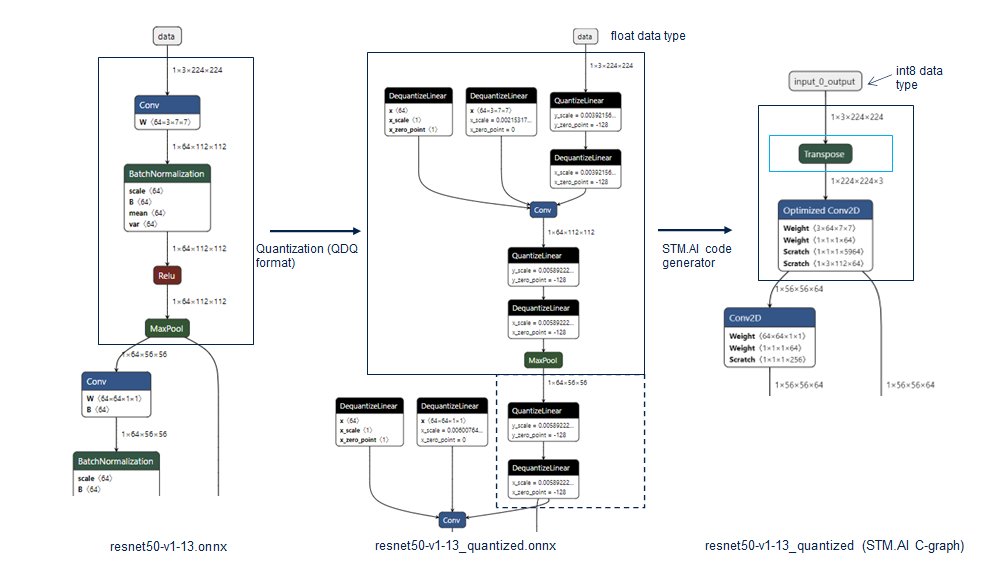

- by default, the IO data type (float32) of the original ONNX model is not preserved if the input is quantized by a first QuantizeLinear operator (respectively, if the output is dequantized by a last DeQuantizeLinear operator). As illustrated in the following figure, the option '--onnx-keep-io-as-model' can be used to keep the IO in float.

Figure 4: resnet 50 example with option '--onnx-keep-io-as-model'

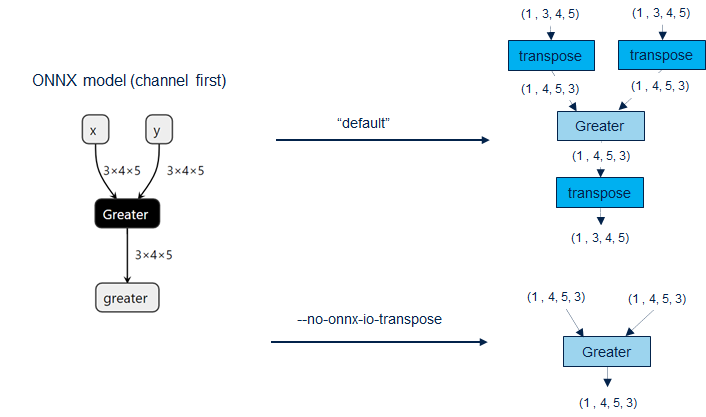

4.1. Channel first support for ONNX model

The data format of the generated C model is always channel-last (NHWC). To preserve the original data arrangement, Transpose operators are added by default if:

- the model is channel-first

- the number of input channels is greater than 1

This default behavior can be disabled with the option: --no-onnx-io-transpose

Figure 5: ONNX channel first support
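
The following NumPy sketch mirrors this default rule and the reordering involved. It assumes an NCHW input shape for the ONNX model; needs_io_transpose is a hypothetical helper, not an X-CUBE-AI API. When '--no-onnx-io-transpose' is used, a reordering such as nchw_to_nhwc is presumably left to the application, which then has to supply channel-last data to the generated C model.

```python
import numpy as np


def needs_io_transpose(nchw_input_shape):
    # Default rule described above, for a channel-first (N, C, H, W) input:
    # a Transpose is added only when there is more than one channel.
    _, channels, _, _ = nchw_input_shape
    return channels > 1


def nchw_to_nhwc(x):
    # Reorder (N, C, H, W) -> (N, H, W, C), the channel-last layout of the generated C model.
    return np.transpose(x, (0, 2, 3, 1))


x_nchw = np.arange(2 * 3 * 4 * 5, dtype=np.float32).reshape(2, 3, 4, 5)
x_nhwc = nchw_to_nhwc(x_nchw)
print(x_nhwc.shape, needs_io_transpose(x_nchw.shape), needs_io_transpose((1, 1, 28, 28)))
```

With a single channel, (N, 1, H, W) and (N, H, W, 1) have the same memory order, which explains why no Transpose is needed in that case.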

4.2. Supported/recommended methods/options

| method/option | supported/recommended |
|---|---|
| Dynamic quantization | not supported, only the static approach is considered |
| Static quantization | recommended, a representative dataset must be used for the calibration |
| Quant format | 'QuantFormat.QDQ' is recommended; 'QuantFormat.QOperator' is not recommended (not tested, and only a limited set of QLinearXXX operators is supported [ONNX]) |
| Activation type | 'QuantType.QInt8' is recommended (default); 'QuantType.QUInt8' is not recommended and not tested |
| Weight type | 'QuantType.QInt8' is recommended (default); 'QuantType.QUInt8' is not recommended and not tested |
| Calibration method | 'CalibrationMethod.MinMax', 'CalibrationMethod.Entropy' and 'CalibrationMethod.Percentile' can be used |
| Per channel | recommended: True; per tensor (= False) is also supported |
| nodes_to_exclude/nodes_to_quantize | supported; mixed models can be deployed by X-CUBE-AI |

4.3. “Static Quantization” method

The following code snippet illustrates a typical Python™ script (post-training quantization) to quantize an NN model that processes images (classifier or object-detector applications). It is based on the end-to-end example from the “Quantize ONNX Models” article. Note that only the inputs (images used for the calibration) are required, and the associated data can be transposed (HWC to CHW) to match the expected input data representation.

```python
import os

import numpy
import onnxruntime
from onnxruntime.quantization import QuantFormat, QuantType, StaticQuantConfig, quantize, CalibrationMethod
from onnxruntime.quantization import CalibrationDataReader
from PIL import Image

input_model_path = 'my_model.onnx'
output_model_path = 'my_model_quantized.onnx'
calibration_dataset_path = '/path/to/data/for/calibration'


def _preprocess_images(images_folder: str, height: int, width: int):
    """
    Load a batch of images and preprocess them.
    """
    image_names = os.listdir(images_folder)
    batch_filenames = image_names
    unconcatenated_batch_data = []

    for image_name in batch_filenames:
        image_filepath = images_folder + "/" + image_name
        pillow_img = Image.new("RGB", (width, height))
        pillow_img.paste(Image.open(image_filepath).resize((width, height)))
        # Subtract the ImageNet per-channel RGB means (adapt to the model preprocessing)
        input_data = numpy.float32(pillow_img) - numpy.array(
            [123.68, 116.78, 103.94], dtype=numpy.float32
        )
        nhwc_data = numpy.expand_dims(input_data, axis=0)
        nchw_data = nhwc_data.transpose(0, 3, 1, 2)  # ONNX Runtime standard
        unconcatenated_batch_data.append(nchw_data)
    batch_data = numpy.concatenate(
        numpy.expand_dims(unconcatenated_batch_data, axis=0), axis=0
    )
    return batch_data


class XXXDataReader(CalibrationDataReader):
    def __init__(self, calibration_image_folder: str, model_path: str):
        self.enum_data = None
        # Use inference session to get input shape.
        session = onnxruntime.InferenceSession(model_path, None)
        (_, _, height, width) = session.get_inputs()[0].shape
        # Convert image to input data (NCHW)
        self.nchw_data_list = _preprocess_images(
            calibration_image_folder, height, width)
        self.input_name = session.get_inputs()[0].name
        self.datasize = len(self.nchw_data_list)

    def get_next(self):
        if self.enum_data is None:
            self.enum_data = iter(
                [{self.input_name: nchw_data} for nchw_data in self.nchw_data_list]
            )
        return next(self.enum_data, None)

    def rewind(self):
        self.enum_data = None


dr = XXXDataReader(
    calibration_dataset_path, input_model_path
)

conf = StaticQuantConfig(
    calibration_data_reader=dr,
    quant_format=QuantFormat.QDQ,
    calibrate_method=CalibrationMethod.MinMax,
    optimize_model=True,  # not accepted by all onnxruntime versions; remove it if rejected
    activation_type=QuantType.QInt8,
    weight_type=QuantType.QInt8,
    # nodes_to_exclude=['resnetv17_dense0_fwd', ..],
    # nodes_to_quantize=['resnetv17_dense0_fwd', ..],
    per_channel=True)

# input_model_path can also point to the pre-processed model (e.g. model-infer.onnx)
quantize(input_model_path,
         output_model_path, conf)
```

Note that, for a quick evaluation of the inference time and memory footprint, the XXXDataReader object can be modified to generate fake images from random data.

```python
import numpy as np
import onnxruntime
from onnxruntime.quantization import CalibrationDataReader


class XXXDataReader(CalibrationDataReader):
    def __init__(self, calibration_image_folder: str, model_path: str):
        # calibration_image_folder is unused here (same constructor signature as above)
        self.enum_data = None
        # Use inference session to get input shape.
        session = onnxruntime.InferenceSession(model_path, None)
        (_, channel, height, width) = session.get_inputs()[0].shape
        # Generate 20 random samples in the half-open interval [0.0, 1.0).
        self.nchw_data_list = [np.random.random_sample((1, channel, height, width)).astype(np.float32)
                               for _ in range(20)]
        self.input_name = session.get_inputs()[0].name
        self.datasize = len(self.nchw_data_list)

    def get_next(self):
        if self.enum_data is None:
            self.enum_data = iter(
                [{self.input_name: nchw_data} for nchw_data in self.nchw_data_list]
            )
        return next(self.enum_data, None)

    def rewind(self):
        self.enum_data = None
```

4.4. Required ONNX opset

Models must use opset 10 or higher to be quantized. Models with an opset lower than 10 must be reconverted to ONNX from their original framework using a later opset. To perform some advanced optimizations (such as BN folding), opset 13 is recommended.

```python
import onnx
from onnx import version_converter

original_model_path = 'original_model.onnx'
new_model_path = 'new_model.onnx'
new_opset = 13

onnx_model = onnx.load(original_model_path)
converted_model = version_converter.convert_version(onnx_model, new_opset)
onnx.save(converted_model, new_model_path)
```

4.5. ONNX Simplifier

Before quantization or deployment, the ONNX model can be simplified with the ONNX Simplifier (onnxsim) to obtain a more efficient ONNX inference model:

```shell
onnxsim my_model.onnx my_simplified_model.onnx --overwrite-input-shape 1,3,224,224
```

4.6. Known limitation

- ONNX QuantizeLinear and ONNX DequantizeLinear are supported only when the axis attribute corresponds to the channel dimension. Otherwise, the generated code can be incorrect without this being explicitly reported by STM.AI. If the following assertion is triggered during the validation of the model, a possible workaround is to quantize the ONNX model per tensor (per_channel=False).

```
Assertion failed: n_channel_in == ( (ai_shape_dimension*)((((&((p_tensor_weights)->shape))))->data) )[(0x0)],
file <root_file_location>\layers_conv2d_stm32_integer.c, line 3628
```

5. Quantize PyTorch models

PyTorch models (for example, .pth files) are not natively supported by X-CUBE-AI. To deploy them, the models must be converted to the ONNX format (with fixed dimensions and batch size = 1; see the following code snippet). To quantize a PyTorch model, it is currently recommended to use the ONNX Runtime services. PyTorch provides an initial beta version of a quantization API, but the generated quantized model cannot currently be exported to a “standard” ONNX format importable by X-CUBE-AI.

```python
import torch
import torch.onnx
from torch import nn

torch_model = MyPytorchModel(...)  # placeholder: your model, with its trained weights
torch_model.eval()  # export in inference mode (dropout/batch-norm behavior)

dummy_input = torch.randn(1, 3, 224, 224)  # fixed dimension, batch size = 1
input_names = ["actual_input"]
output_names = ["output"]

torch.onnx.export(
    torch_model,                # pytorch model (with the weights)
    dummy_input,                # model input (or a tuple for multiple inputs)
    "my_model.onnx",            # where to save the model
    do_constant_folding=True,   # whether to execute constant folding for optimization
    input_names=input_names,    # the model's input names
    output_names=output_names,  # the model's output names
    opset_version=13,           # the ONNX opset version to export the model to
    export_params=True,         # store the trained parameter weights
    verbose=False
)
```

6. STMicroelectronics references

See also: