| Coming Soon! |

FP-AI-MONITOR1 runs learning and inference sessions in real time on the SensorTile Wireless Industrial Node development kit (STEVAL-STWINKT1B), taking data from the onboard sensors as input. FP-AI-MONITOR1 also implements a wired interactive CLI to configure the node.

The article discusses the following topics:

- Prerequisites and setup,

- Presentation of the Human Activity Recognition (HAR) application,

- Integrating a new HAR model,

- Checking the new HAR model with FP-AI-MONITOR1.

This article explains the very few steps needed to replace the existing HAR AI Model with a completely different one.

For completeness, readers are invited to refer to the FP-AI-MONITOR1 User Manual.

1. Prerequisites and setup

1.1. Hardware

To use the FP-AI-MONITOR1 function pack on STEVAL-STWINKT1B, the following hardware items are required:

- STEVAL-STWINKT1B development kit board,

- Windows® powered laptop/PC (Windows® 7, 8, or 10),

- Two Micro-USB cables, one to connect the sensor-board to the PC, and another one for the STLINK-V3MINI, and

- an STLINK-V3MINI.

1.2. Software

1.2.1. FP-AI-MONITOR1

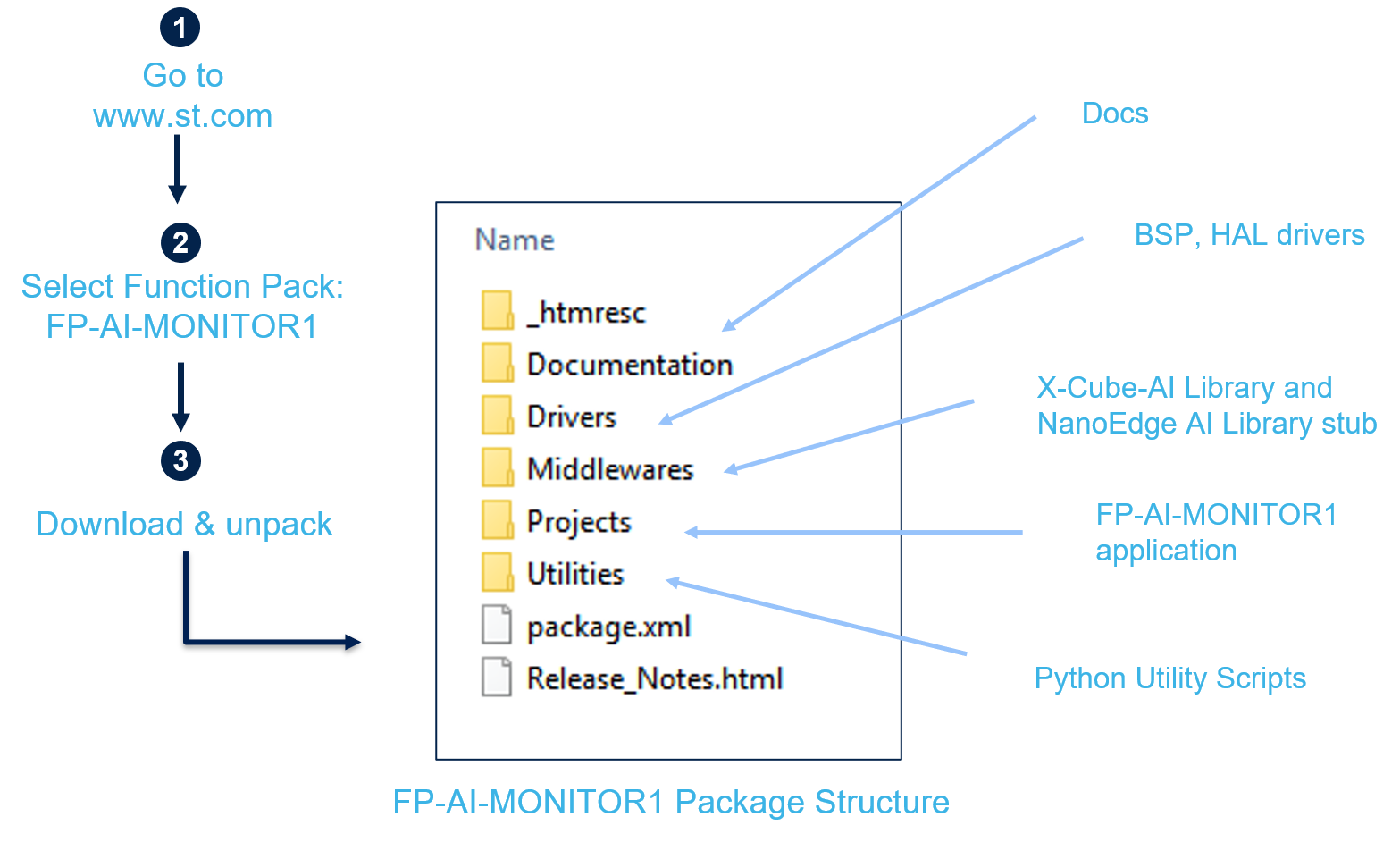

Download the FP-AI-MONITOR1 package from the ST website, then extract the .zip file and copy its contents into a folder on your PC.

The steps of the process along with the content of the folder are shown in the following image.

1.2.2. IDE

Install one of the following IDEs:

- STMicroelectronics STM32CubeIDE version 1.9.0,

- IAR Embedded Workbench for Arm (EWARM) toolchain version 9.20.1 or later, or

- RealView Microcontroller Development Kit (MDK-ARM) toolchain version 5.32.

1.2.3. TeraTerm

- TeraTerm is an open-source and freely available software terminal emulator, which is used to host the CLI of the FP-AI-MONITOR1 through a serial connection.

- Download and install the latest available version of TeraTerm.

2. Human Activity Recognition (HAR) application

The CLI application comes with a prebuilt Human Activity Recognition model.

The $ start ai command starts inference on the accelerometer data and reports the predicted activity along with its confidence. The supported activities are:

- Stationary,

- Walking,

- Jogging, and

- Biking.

Note that the provided HAR model was built with a dataset acquired using the ISM330DHCX_ACC accelerometer with the following parameters:

- Output Data Rate (ODR) set to 26 Hz,

- Full Scale (FS) set to 4 g.
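To make these two parameters concrete, the following sketch converts a raw accelerometer reading to g for a given full scale, and computes how long a window of samples lasts at a given ODR. The helper names `example_raw_to_g` and `example_window_duration_s` are hypothetical and are not part of FP-AI-MONITOR1. The sketch assumes a signed 16-bit sample format whose full range maps onto the selected full scale.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper (not part of FP-AI-MONITOR1): convert a signed 16-bit
 * raw sample to g. ASSUMPTION: the 16-bit range maps onto +/- full_scale_g. */
float example_raw_to_g(int16_t raw, float full_scale_g)
{
  assert(full_scale_g > 0.0f);
  return ((float)raw * full_scale_g) / 32768.0f;
}

/* Hypothetical helper: duration in seconds of nb_sample samples acquired
 * at odr_hz samples per second. */
float example_window_duration_s(size_t nb_sample, float odr_hz)
{
  assert(odr_hz > 0.0f);
  return (float)nb_sample / odr_hz;
}
```

With FS set to 4 g, one LSB corresponds to roughly 0.122 mg under this assumption, and 26 samples at an ODR of 26 Hz span one second.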

3. Integrating a new HAR model

FP-AI-MONITOR1 provides a pre-integrated HAR model along with its preprocessing chain. This model is only an example and is not intended to meet the performance or use-case requirements of a given application. It is provided to show how easily the model can be replaced by another one that may differ in many ways:

- input sensor,

- window size,

- preprocessing,

- feature extraction step,

- topology,

- number of classes

It is assumed that a new model candidate is available and has been pre-integrated as described in the user manual.

The following sections guide the reader through the small modifications required to adapt the code to the specifics of the new model.

3.1. New model differences

3.2. Code Adaptation

3.2.1. Change sensor and settings

    /**
     ******************************************************************************
     * @file AppController.c
     ... */

    sys_error_code_t AppController_vtblOnEnterTaskControlLoop(AManagedTask *_this)
    {
      /* ... */

      /* Enable only the accelerometer of the combo sensor "ism330dhcx". */
      /* This is the default configuration for FP-AI-MONITOR1. */
      SQuery_t query;
      SQInit(&query, SMGetSensorManager());
      sensor_id = SQNextByNameAndType(&query, "ism330dhcx", COM_TYPE_ACC);
      if (sensor_id != SI_NULL_SENSOR_ID)
      {
        SMSensorEnable(sensor_id);
        SMSensorSetODR(sensor_id, 1666);
        SMSensorSetFS(sensor_id, 4.0);
        p_obj->p_ai_sensor_obs = SMGetSensorObserver(sensor_id);
        p_obj->p_neai_sensor_obs = SMGetSensorObserver(sensor_id);
      }
      else
      {
        res = SYS_CTRL_WRONG_CONF_ERROR_CODE;
      }

      /* ... */

3.2.2. Adapt input shape

Align the data shape definitions in all software modules. The window length and the number of axes must match in both of the following files:

    /**
     ******************************************************************************
     * @file AITask.c
     ...
     */

    /* ... */

    #define AI_AXIS_NUMBER 3
    #define AI_DATA_INPUT_USER 48

    /* ... */

and in

    /**
     ******************************************************************************
     * @file AiDPU.h
     ...
     */

    #define AIDPU_NB_AXIS (3)
    #define AIDPU_NB_SAMPLE (48)
    #define AIDPU_AI_PROC_IN_SIZE (AI_HAR_NETWORK_IN_1_SIZE)
    #define AIDPU_NAME "har_network"

    /* ... */
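Because the same shape is defined in two files, a mismatch is easy to introduce when only one of them is edited. As a minimal sketch, a compile-time check can catch this before the firmware runs. The macro `EXAMPLE_NETWORK_IN_SIZE` is a hypothetical stand-in for `AI_HAR_NETWORK_IN_1_SIZE`, whose real value comes from the generated network code and is not shown in this article; the value 144 is an assumption derived from 48 samples times 3 axes. The function `example_window_len` is also hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Values copied from the AITask.c excerpt. */
#define AI_AXIS_NUMBER 3
#define AI_DATA_INPUT_USER 48

/* Values copied from the AiDPU.h excerpt. */
#define AIDPU_NB_AXIS (3)
#define AIDPU_NB_SAMPLE (48)

/* ASSUMPTION: stand-in for AI_HAR_NETWORK_IN_1_SIZE (generated code).
 * 144 is assumed here as 48 samples x 3 axes. */
#define EXAMPLE_NETWORK_IN_SIZE (144)

/* Both modules must agree on the window shape. */
static_assert(AI_AXIS_NUMBER == AIDPU_NB_AXIS,
              "AITask.c and AiDPU.h disagree on the number of axes");
static_assert(AI_DATA_INPUT_USER == AIDPU_NB_SAMPLE,
              "AITask.c and AiDPU.h disagree on the window length");

/* The flattened window must match the network input size. */
static_assert(AIDPU_NB_SAMPLE * AIDPU_NB_AXIS == EXAMPLE_NETWORK_IN_SIZE,
              "window shape does not match the network input size");

/* Hypothetical helper: number of floats in one input window. */
size_t example_window_len(void)
{
  return (size_t)AIDPU_NB_SAMPLE * (size_t)AIDPU_NB_AXIS;
}
```

The last check mirrors at compile time a condition that the `AiDPU_vtblProcess` excerpt in section 3.2.3 verifies at run time with `assert_param`.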

3.2.3. Change preprocessing

    /**
     ******************************************************************************
     * @file AiDPU.c
     ...
     */

    sys_error_code_t AiDPU_vtblProcess(IDPU *_this)
    {
      /* ... */

      if ((*p_consumer_buff) != NULL)
      {
        GRAV_input_t gravIn[AIDPU_NB_SAMPLE];
        /* GRAV_input_t gravOut[AIDPU_NB_SAMPLE]; */

        assert_param(p_obj->scale != 0.0F);
        assert_param(AIDPU_AI_PROC_IN_SIZE == AIDPU_NB_SAMPLE*AIDPU_NB_AXIS);
        assert_param(AIDPU_NB_AXIS == p_obj->super.dpuWorkingStream.packet.shape.shapes[AI_LOGGING_SHAPES_WIDTH]);
        assert_param(AIDPU_NB_SAMPLE == p_obj->super.dpuWorkingStream.packet.shape.shapes[AI_LOGGING_SHAPES_HEIGHT]);

        float *p_in = (float *)CB_GetItemData((*p_consumer_buff));
        float scale = p_obj->scale;

        for (int i = 0; i < AIDPU_NB_SAMPLE; i++)
        {
          gravIn[i].AccX = *p_in++ * scale;
          gravIn[i].AccY = *p_in++ * scale;
          gravIn[i].AccZ = *p_in++ * scale;
          /* gravOut[i] = gravity_suppress_rotate (&gravIn[i]); */
        }

        /* call Ai library. */
        p_obj->ai_processing_f(AIDPU_NAME, (float *)/*gravOut*/ gravIn, p_obj->ai_out);

        /* ... */
      }
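In the excerpt above, the gravity rotation step is commented out, so the only preprocessing left is the per-axis scaling of the interleaved X, Y, Z samples. The following host-side sketch isolates that loop so it can be checked on a PC. The type `example_sample_t` is a hypothetical stand-in for `GRAV_input_t`, assumed to hold three floats, and `example_scale_window` is a hypothetical function name.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for GRAV_input_t; ASSUMPTION: three float fields. */
typedef struct
{
  float AccX;
  float AccY;
  float AccZ;
} example_sample_t;

/* The excerpt passes the sample array to the network as a plain float
 * pointer, which only works if the struct has no padding. */
static_assert(sizeof(example_sample_t) == 3 * sizeof(float),
              "sample struct must be exactly three packed floats");

/* Hypothetical helper: scale nb_sample interleaved X, Y, Z triplets from
 * p_in into p_out, as the loop in the excerpt does. */
void example_scale_window(const float *p_in, float scale,
                          example_sample_t *p_out, size_t nb_sample)
{
  assert(p_in != NULL);
  assert(p_out != NULL);
  assert(scale != 0.0f);

  for (size_t i = 0; i < nb_sample; i++)
  {
    p_out[i].AccX = *p_in++ * scale;
    p_out[i].AccY = *p_in++ * scale;
    p_out[i].AccZ = *p_in++ * scale;
  }
}
```

If the new model expects a different preprocessing step, this loop is the place where it would be applied before the buffer is handed to the network.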

3.2.4. Change the number of classes

    /**
     ******************************************************************************
     * @file AppController.c
     ...
     */

    #define CTRL_HAR_CLASSES 6

    /* ... */

    /**
     * Specifies the labels for the six classes of the HAR demo.
     */
    static const char* sHarClassLabels[] = {
      "Downstairs",
      "Jogging",
      "Sitting",
      "Standing",
      "Upstairs",
      "Walking",
      "Unknown"
    };
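The order of the labels must match the order of the classes in the network output, and the table holds one extra "Unknown" entry after the real classes. As a minimal sketch of how such a table can be used, the hypothetical helper `example_label_for_output` below picks the class with the highest score and falls back to "Unknown" on invalid input. It assumes that the network output is an array with one score per class, which this article does not specify.

```c
#include <assert.h>
#include <stddef.h>

#define CTRL_HAR_CLASSES 6

/* Label table copied from the AppController.c excerpt. */
static const char *sHarClassLabels[] = {
  "Downstairs",
  "Jogging",
  "Sitting",
  "Standing",
  "Upstairs",
  "Walking",
  "Unknown"
};

/* The table must hold one label per class plus the "Unknown" entry. */
static_assert(sizeof(sHarClassLabels) / sizeof(sHarClassLabels[0])
                  == CTRL_HAR_CLASSES + 1,
              "label table size does not match CTRL_HAR_CLASSES");

/* Hypothetical helper: return the label of the highest score.
 * ASSUMPTION: p_scores holds one score per class, in label order. */
const char *example_label_for_output(const float *p_scores, size_t nb_scores)
{
  if ((p_scores == NULL) || (nb_scores != CTRL_HAR_CLASSES))
  {
    return sHarClassLabels[CTRL_HAR_CLASSES]; /* "Unknown" */
  }

  size_t best = 0;
  for (size_t i = 1; i < nb_scores; i++)
  {
    if (p_scores[i] > p_scores[best])
    {
      best = i;
    }
  }
  return sHarClassLabels[best];
}
```

The compile-time check fails the build if a label is added or removed without updating `CTRL_HAR_CLASSES`.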