| The delivery of this wiki page is under construction |

This article provides a step-by-step guide to setting up a vibration-based condition monitoring solution for a USB fan using FP-AI-MONITOR1 and an STEVAL-STWINKT1B board. The anomaly detection AI libraries used in this tutorial are generated with NanoEdge™ AI Studio, and the software used to program the sensor board is provided as a function pack that can be downloaded from the ST website.

In this article, you learn how to:

- use the high-speed datalogger binary to log vibration data on a microSD™ card on the STEVAL-STWINKT1B,

- generate the AI library for condition monitoring using NanoEdge™ AI Studio,

- integrate the generated libraries into FP-AI-MONITOR1 and program the sensor node for condition monitoring, and

- perform condition monitoring on the USB fan setup using live vibration data.

1. Requirements

To follow and reproduce the steps in this article, the following hardware and software are required.

1.1. Hardware

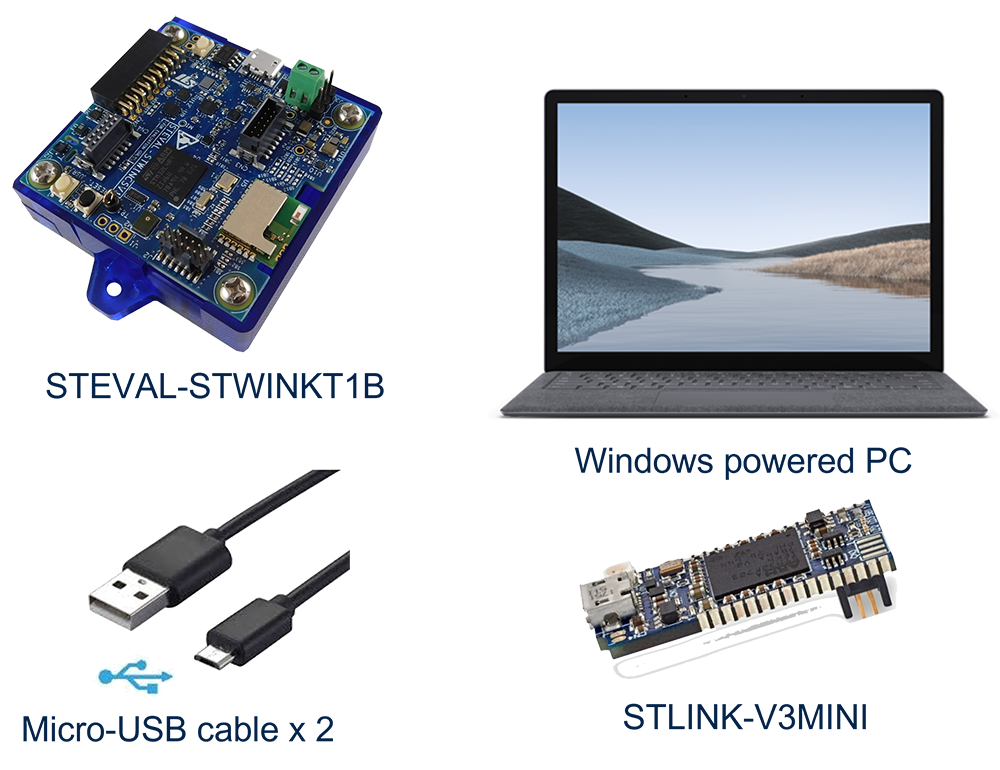

The hardware required to reproduce this tutorial includes:

- STEVAL-STWINKT1B

  - The STWIN SensorTile wireless industrial node (STEVAL-STWINKT1B) is a development kit and reference design that simplifies prototyping and testing of advanced industrial IoT applications such as condition monitoring and predictive maintenance. For details, see the STEVAL-STWINKT1B product page on www.st.com.

- Miscellaneous

  - STLINK-V3MINI to program the STWIN,

  - microSD™ card formatted as FAT32-FS for the data logging operation,

  - a USB microSD™ card reader to read the data from the microSD™ card on the computer, and

  - 2 x Micro USB cables to connect the STWIN and the STLINK-V3MINI to the PC.

1.2. Software

- FP-AI-MONITOR1

  - Download the FP-AI-MONITOR1 package from the ST website, extract the .zip file, and copy its contents into a folder on the PC. The package contains binaries and source code for the STEVAL-STWINKT1B sensor board.

- One of the following three IDEs:

  - STMicroelectronics STM32CubeIDE version 1.5.1

  - IAR Embedded Workbench for Arm (EWARM) toolchain V8.50.5 or later

  - RealView Microcontroller Development Kit (MDK-ARM) toolchain V5.31

- Tera Term

  - Tera Term is an open-source, freely available terminal emulator, used here to host the CLI of FP-AI-MONITOR1 through a serial connection. Download and install the latest version from the Tera Term website.

- NanoEdge™ AI Studio 2.1.*

  - The anomaly detection libraries used in this demo are generated by NanoEdge™ AI Studio. For this, the user creates a project in the Studio and generates the libraries from it. These libraries can then be embedded in FP-AI-MONITOR1. More information on the Studio is available on www.st.com.

2. Hardware and firmware setup

2.1. HW prerequisites and setup

To use the FP-AI-MONITOR1 function pack on STEVAL-STWINKT1B, the following hardware items are required:

- STEVAL-STWINKT1B development kit board,

- Windows® powered laptop/PC (Windows® 7, 8, or 10),

- Two MicroUSB cables, one to connect the sensor-board to the PC, and another one for the STLINK-V3MINI, and

- an STLINK-V3MINI.

2.2. Software requirements

2.2.1. X-CUBE-AI

X-CUBE-AI is an STM32Cube Expansion Package that is part of the STM32Cube.AI ecosystem. It extends STM32CubeMX capabilities with automatic conversion of pre-trained artificial intelligence models and integration of the generated optimized library into the user project. The easiest way to use it is to download it inside the STM32CubeMX tool (version 7.0.0 or newer), as described in the user manual Getting started with X-CUBE-AI Expansion Package for Artificial Intelligence (AI) (UM2526). The X-CUBE-AI Expansion Package also offers several means to validate AI models (both neural network and scikit-learn models) on both desktop PC and STM32, and to measure performance on STM32 devices (computational and memory footprints) without ad-hoc handmade user C code.

2.2.2. NanoEdge™ AI Studio

The function pack supports the AI libraries generated by NanoEdge™ AI Studio. It helps users log data, prepare and condition it for library generation in NanoEdge™ AI Studio, and then embed the generated libraries in FP-AI-MONITOR1. NanoEdge™ AI Studio is available from www.st.com/stm32nanoedgeai under several options, such as sprints with personalized support, and trial or full versions.

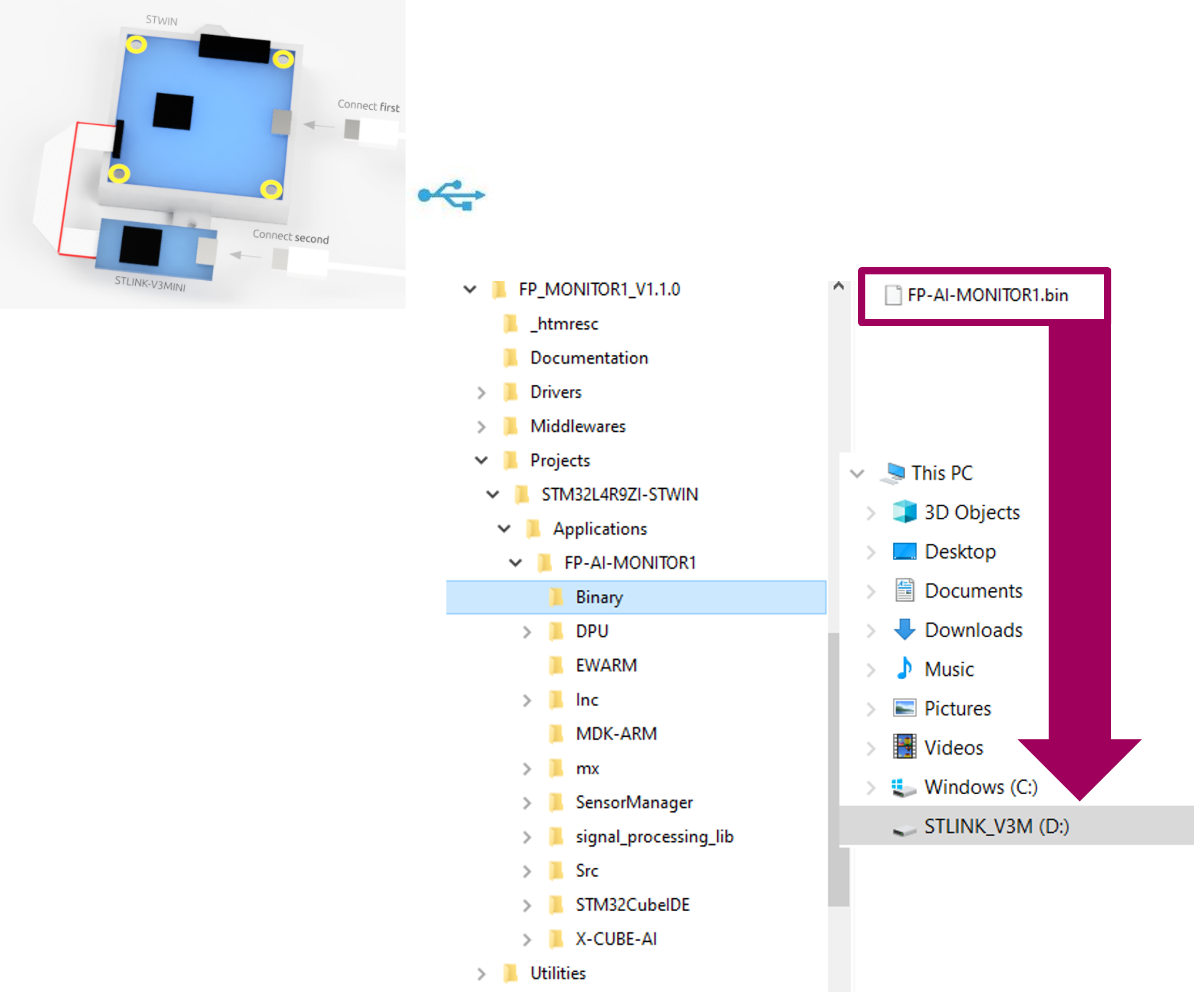

2.3. Program firmware into the STM32 microcontroller

This section explains how to select a binary firmware and program it into the STM32 microcontroller. The binary firmware is delivered as part of the FP-AI-MONITOR1 function pack and is located in the FP-AI-MONITOR1_V1.0.0/Projects/STM32L4R9ZI-STWIN/Applications/FP-AI-MONITOR1/Binary/ folder. When the STM32 board and the PC are connected through the USB cable on the STLINK-V3MINI, the corresponding drive appears on the PC. Drag and drop the chosen firmware onto that drive. Wait a few seconds for the firmware file to disappear from the file manager: this indicates that the firmware is programmed in the STM32 microcontroller.

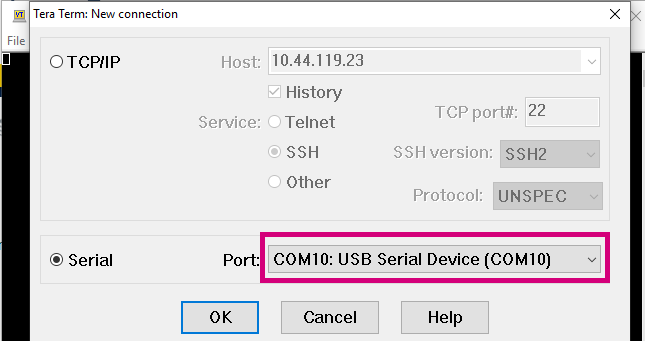
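The drag-and-drop step can also be scripted. The following Python sketch is only an illustration and is not part of FP-AI-MONITOR1: it copies a binary onto the mounted STLINK drive and polls until the file disappears, which the procedure above treats as the sign that programming has finished. The function name, the drive path, the binary name, and the timeout values are all assumptions to adapt to your setup.

```python
import shutil
import time
from pathlib import Path


def flash_by_drag_and_drop(binary: Path, drive: Path,
                           timeout_s: float = 30.0,
                           poll_s: float = 0.5) -> bool:
    """Copy `binary` onto the STLINK drive, then wait for the file to vanish.

    Returns True if the copied file disappeared within `timeout_s`, which the
    procedure above treats as "firmware programmed"; False on timeout.
    """
    target = drive / binary.name
    shutil.copyfile(binary, target)  # equivalent to a manual drag and drop
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        if not target.exists():
            return True
        time.sleep(poll_s)
    return False


# Example call (hypothetical binary name and drive letter):
# flash_by_drag_and_drop(
#     Path("FP-AI-MONITOR1_V1.0.0/Projects/STM32L4R9ZI-STWIN/Applications/"
#          "FP-AI-MONITOR1/Binary/<firmware>.bin"),
#     Path("E:/"))
```

A False return only means the file did not disappear in time; check the STLINK drive manually in that case.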

2.4. Using the serial console

A serial console is used to interact with the host board (Virtual COM port over USB). On the Windows® operating system, the Tera Term software is recommended. The following steps configure the Tera Term console for the CLI over a serial connection.

2.4.1. Set the serial terminal configuration

Start Tera Term and select the proper connection (featuring the STMicroelectronics name).

Set the parameters:

- Terminal

  - [New line]:

    - [Receive]: CR

    - [Transmit]: CR

- Serial

  - The interactive console can be used with the default values.

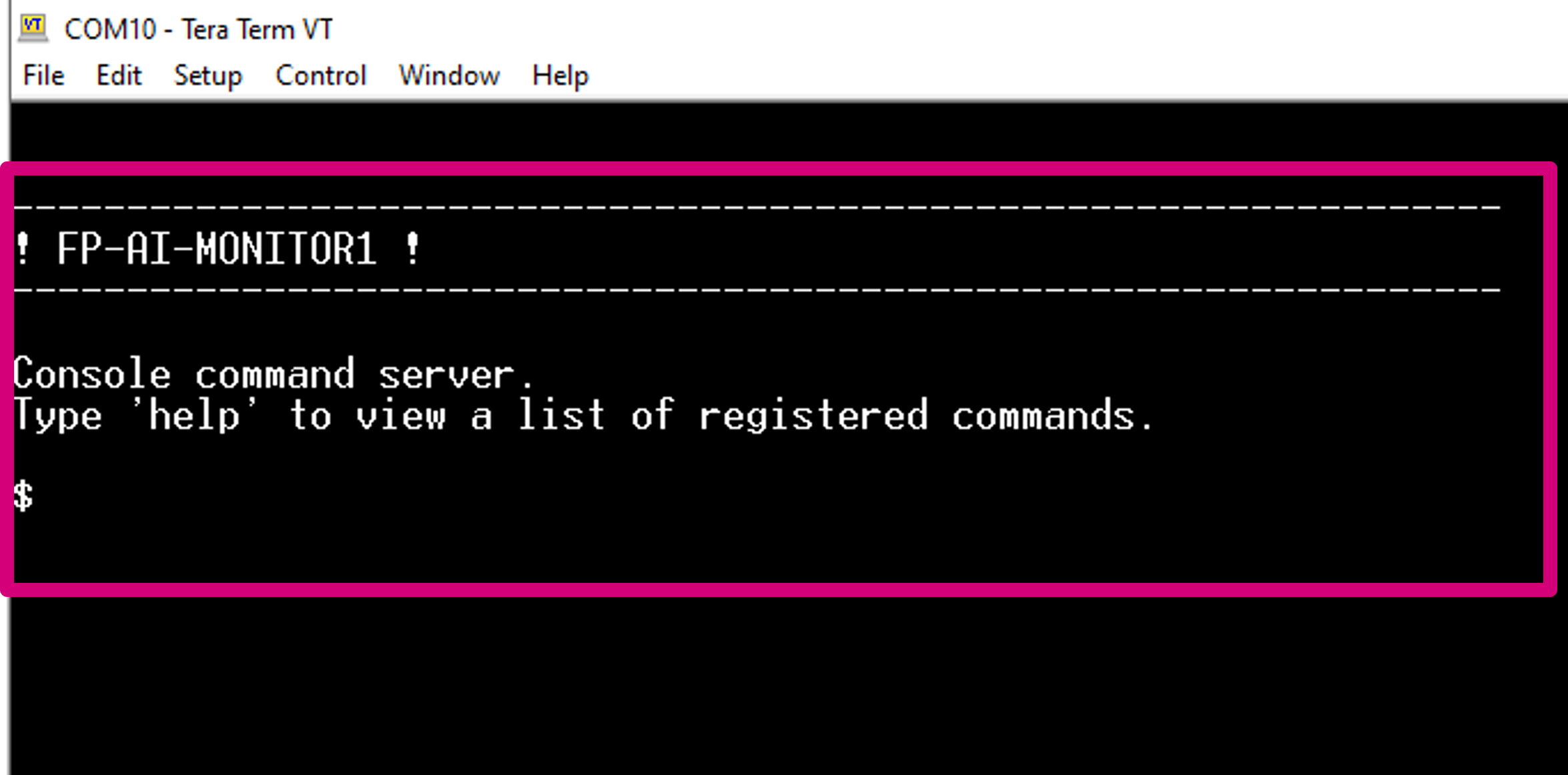

2.4.2. Start FP-AI-MONITOR1 firmware

Restart the board by pressing the RESET button. The following welcome screen is displayed on the terminal.

From this point, start entering the commands directly or type help to get the list of available commands along with their usage guidelines.

Note: The provided firmware is generated with a human activity recognition (HAR) model based on a support vector classifier (SVC), which users can test straight out of the box. However, for the NanoEdge™ AI library, a stub is provided in place of the library. The user must generate the library with NanoEdge™ AI Studio, replace the stub with this library, and rebuild the firmware. These steps are described in detail later in this article.

3. Button-operated modes

This section provides details of the button-operated mode of FP-AI-MONITOR1. The purpose of this mode is to let users operate FP-AI-MONITOR1 on the STWIN even when the CLI console is not available.

In button-operated mode, the sensor node can be controlled through the user button instead of the interactive CLI console. Default values for the node parameters and operation settings in this mode are provided in the firmware. Based on these configurations, modes such as ai, neai_detect, and neai_learn can be started and stopped through the user button on the node.

3.1. Interaction with user

The button operated mode can work with or without the CLI and is fully compatible and consistent with the current definition of the serial console and its command line interface (CLI).

The supporting hardware for this version of the function-pack (STEVAL-STWINKT1B) is fitted with three buttons:

- User Button, the only button usable by the SW,

- Reset Button, connected to STM32 MCU reset pin,

- Power Button, connected to power management,

and three LEDs:

- LED_1 (green), controlled by Software,

- LED_2 (orange), controlled by Software,

- LED_C (red), controlled by Hardware, indicates charging status when powered through a USB cable.

The basic user interaction in button-operated mode is therefore done through two buttons (user and reset) and two LEDs (green and orange). The following sections describe how these resources are allocated to show which execution phase is active and to report the status of the sensor node.

3.1.1. LED Allocation

The function pack has four execution phases:

- idle: the system waits for user input.

- ai detect: all data coming from the sensors is passed to the X-CUBE-AI library to classify the user activity (HAR).

- neai learn: all data coming from the sensors is passed to the NanoEdge™ AI library to train the model.

- neai detect: all data coming from the sensors is passed to the NanoEdge™ AI library to detect anomalies.

At any given time, the user needs to know which execution phase is active. The outcome of the running phase must also be reported: whether an anomaly has been detected when the NanoEdge™ AI detection phase is active, or which activity the user is performing when the HAR application runs in the "ai" context.

The onboard LEDs indicate the status of the current execution phase by showing which context is running and also by showing the output of the context (anomaly or one of the four activities in HAR case).

The green LED is used to show the user which execution context is being run.

| Pattern | Task |
|---|---|
| OFF | - |
| ON | IDLE |
| BLINK_SHORT | X-CUBE-AI Running |
| BLINK_NORMAL | NanoEdge™ AI learn |
| BLINK_LONG | NanoEdge™ AI detection |

The Orange LED is used to indicate the output of the running context as shown in the table below:

| Pattern | Reporting |
|---|---|
| OFF | Stationary if in X-CUBE-AI mode, Normal Behavior when in NEAI mode |
| ON | Biking |
| BLINK_SHORT | Jogging |
| BLINK_LONG | Walking |

With these LED patterns, users know the state of the sensor node even when the CLI is not connected.
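To make the two tables concrete, the following Python sketch decodes an observed pair of LED patterns into the running context and its reported output. It is an illustration only: the pattern names come from the tables, while the function and the context names it returns are hypothetical. The tables only document the OFF orange pattern for NanoEdge™ AI mode, so reading any other orange pattern as an anomaly is an assumption.

```python
from typing import Optional, Tuple

# Green LED pattern -> running execution context (first table above).
GREEN_LED = {
    "OFF": None,
    "ON": "idle",
    "BLINK_SHORT": "ai",            # X-CUBE-AI running
    "BLINK_NORMAL": "neai_learn",   # NanoEdge AI learn
    "BLINK_LONG": "neai_detect",    # NanoEdge AI detection
}

# Orange LED pattern -> HAR output while the "ai" context runs (second table).
ORANGE_LED_HAR = {
    "OFF": "Stationary",
    "ON": "Biking",
    "BLINK_SHORT": "Jogging",
    "BLINK_LONG": "Walking",
}


def decode_leds(green: str, orange: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (context, reported output) for the observed LED patterns."""
    context = GREEN_LED[green]
    if context == "ai":
        return context, ORANGE_LED_HAR[orange]
    if context == "neai_detect":
        # Only OFF (normal behavior) is documented for NanoEdge AI mode;
        # treating any other orange pattern as an anomaly is an assumption.
        return context, "Normal" if orange == "OFF" else "Anomaly"
    return context, None
```

For example, a short-blinking green LED with a long-blinking orange LED decodes to the "ai" context reporting Walking.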

3.1.2. Button Allocation

In the extended autonomous mode, the user can trigger any of the four execution phases. The available modes are:

- idle: the system is waiting for a command.

- ai: run the X-CUBE-AI library and print the results of the live inference on the CLI (if CLI is available).

- neai_learn: all data coming from the sensor is passed to the NanoEdge™ AI library to train the model.

- neai_detect: all data coming from the sensor is passed to the NanoEdge™ AI library to detect anomalies.

To trigger these phases, FP-AI-MONITOR1 supports the user button. On the STEVAL-STWINKT1B sensor node, two buttons are used for this interaction:

- The user button: this button is fully programmable and is under the control of the application developer.

- The reset button: this button is connected to the hardware reset pin of the STM32 MCU and resets the sensor node. A reset erases the knowledge of the NanoEdge™ AI libraries and restores all context variables and sensor configurations to their default values.

To control the execution phases, at least three different press modes of the user button must be defined and detected.

The following table lists the press types available for the user button and the operation assigned to each:

| Button Press | Description | Action |
|---|---|---|
| SHORT_PRESS | The button is pressed for less than 200 ms and released | Starts AI inferences for the X-CUBE-AI model. |
| LONG_PRESS | The button is pressed for more than 200 ms and released | Starts learning for the NanoEdge™ AI library. |
| DOUBLE_PRESS | A succession of two SHORT_PRESS events in less than 500 ms | Starts inference for the NanoEdge™ AI library. |
| ANY_PRESS | The button is pressed and released (overlaps with the three other modes) | Stops the currently running execution phase. |
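The press types in the table can be illustrated with a small classifier. The following Python sketch is hypothetical and is not the firmware's implementation: it works offline on a list of recorded press/release times, treats exactly 200 ms as a long press, and assumes that the 500 ms DOUBLE_PRESS window is measured from the first press to the second release. The action strings reuse the CLI phase names for readability.

```python
from dataclasses import dataclass
from typing import List

SHORT_MAX_MS = 200   # SHORT_PRESS: held for less than 200 ms
DOUBLE_MAX_MS = 500  # DOUBLE_PRESS: two short presses within 500 ms (assumed window)


@dataclass
class Press:
    down_ms: int  # time the button was pressed
    up_ms: int    # time the button was released

    @property
    def duration(self) -> int:
        return self.up_ms - self.down_ms


# Action assigned to each gesture when no phase is running (table above).
ACTIONS = {
    "SHORT_PRESS": "start ai",
    "LONG_PRESS": "start neai_learn",
    "DOUBLE_PRESS": "start neai_detect",
}


def classify(presses: List[Press]) -> List[str]:
    """Group raw presses into SHORT_PRESS, LONG_PRESS and DOUBLE_PRESS gestures."""
    gestures = []
    i = 0
    while i < len(presses):
        p = presses[i]
        if p.duration >= SHORT_MAX_MS:
            gestures.append("LONG_PRESS")
            i += 1
            continue
        nxt = presses[i + 1] if i + 1 < len(presses) else None
        if (nxt is not None and nxt.duration < SHORT_MAX_MS
                and nxt.up_ms - p.down_ms < DOUBLE_MAX_MS):
            gestures.append("DOUBLE_PRESS")
            i += 2
        else:
            gestures.append("SHORT_PRESS")
            i += 1
    return gestures


def next_action(gesture: str, running: bool) -> str:
    """ANY_PRESS stops a running phase; otherwise map the gesture to a start."""
    return "stop" if running else ACTIONS[gesture]
```

A real firmware has to wait up to the double-press window before committing to a SHORT_PRESS; processing a complete event list, as here, sidesteps that timing issue.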

4. Command line interface

The command-line interface (CLI) is a simple method for the user to control the application by sending command line inputs to be processed on the device.

4.1. Command execution model

The commands are grouped into three main sets:

- (CS1) Generic commands

  - This command set allows the user to get generic information from the device, such as the firmware version, UID, and compilation date and time, and to start and stop an execution phase.

- (CS2) AI commands

  - This command set contains AI-specific commands. These commands enable users to work with the X-CUBE-AI and NanoEdge™ AI libraries.

- (CS3) Sensor configuration commands

  - This command set allows the user to configure the supported sensors and to get their current configurations.

4.2. Execution phases and execution context

Besides idle, the system has three execution phases:

- X-CUBE-AI: data coming from the sensors is passed through the X-CUBE-AI model to run the HAR classification inference.

- NanoEdge™ AI learning: data coming from the sensors is passed to the NanoEdge™ AI library to train the model.

- NanoEdge™ AI detection: data coming from the sensors is passed to the NanoEdge™ AI library to detect anomalies.

Each execution phase is started with the command start <execution phase> issued through the CLI and is stopped by pressing the ESC key.

An execution context, which is a set of parameters controlling execution, is associated with each execution phase. One single parameter can belong to more than one execution context.

The CLI provides commands to set and get execution context parameters. The execution context cannot be changed while an execution phase is active. If the user attempts to set a parameter belonging to any active execution context, the requested parameter is not modified.
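The rule above can be modeled in a few lines. The following Python sketch is a hypothetical host-side model, not the firmware code: each parameter maps to the set of phases whose context uses it (one parameter can belong to several contexts), and a set request is rejected, leaving the value unchanged, when the parameter belongs to the active context. The membership used in the usage note is invented for illustration.

```python
from typing import Dict, Optional, Set


class ExecutionContexts:
    """Hypothetical model of the set/get rule for execution context parameters."""

    def __init__(self, membership: Dict[str, Set[str]]):
        # membership maps a parameter name to the phases whose context uses it.
        self.membership = membership
        self.values: Dict[str, object] = {}
        self.active_phase: Optional[str] = None

    def start(self, phase: str) -> None:
        self.active_phase = phase

    def stop(self) -> None:
        self.active_phase = None

    def set(self, param: str, value: object) -> bool:
        """Update `param` unless it belongs to the active context; report success."""
        if self.active_phase in self.membership.get(param, set()):
            return False  # requested parameter is left unmodified
        self.values[param] = value
        return True

    def get(self, param: str) -> object:
        return self.values.get(param)
```

For example, with a hypothetical membership `{"signals": {"neai_learn", "neai_detect"}}`, setting "signals" succeeds while idle and is rejected once neai_learn is running.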

4.3. Command summary

| Command name | Command string | Note |
|---|---|---|
| CS1 - Generic commands | | |
| help | help | Lists all registered commands with brief usage guidelines, including the list of applicable parameters. |
| info | info | Shows firmware details and version. |
| uid | uid | Shows the STM32 UID. |
| reset | reset | Resets the MCU system. |
| start | start <"ai", "neai_learn", or "neai_detect"> | Starts an execution phase according to its execution context, i.e. ai, neai_learn, or neai_detect. |
| CS2 - AI specific commands | | |
| neai_init | neai_init | (Re)initializes the NanoEdge™ AI model by forgetting any learning. Used at the beginning and/or to create a new NanoEdge™ AI model. |
| neai_set | neai_set <param> <val> | Sets a PdM-specific parameter in an execution context. |
| neai_get | neai_get <param> | Displays the value of the parameters in the execution context. The parameters are "sensitivity", "threshold", "signals", "timer", and "all". |
| ai_get | ai_get info | Displays information about the embedded AI model, such as "weights", "MACCs", "RAM", "version of X-CUBE-AI", and so on. |
| CS3 - Sensor configuration commands | | |
| sensor_set | sensor_set <sensorID> <param> <val> | Sets the ‘value’ of a ‘parameter’ for the sensor identified by ‘sensorID’. The settable params are "FS", "ODR", and "enable". |
| sensor_get | sensor_get <sensorID>.<subsensorID> <param> | Gets the ‘value’ of a ‘parameter’ for the sensor identified by ‘sensorID’. The params are "FS", "ODR", "enable", "FS_List", "ODR_List", and "all". |
| sensor_info | sensor_info | Lists the type and ID of all supported sensors. |
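Commands can also be sent from a host script instead of Tera Term. The following Python sketch is an assumption-based helper, not part of the function pack: it checks a command against the summary table, formats it as one line terminated by CR (matching the [Transmit]: CR terminal setting), and writes it to the virtual COM port with pyserial. The argument counts are read from the "Command string" column, and the port name in the usage note is hypothetical.

```python
from typing import Iterable

# Number of arguments per command, read from the "Command string" column.
ARITY = {
    "help": 0, "info": 0, "uid": 0, "reset": 0, "start": 1,
    "neai_init": 0, "neai_set": 2, "neai_get": 1, "ai_get": 1,
    "sensor_set": 3, "sensor_get": 2, "sensor_info": 0,
}
PHASES = {"ai", "neai_learn", "neai_detect"}


def build_command(name: str, *args: object) -> bytes:
    """Format one CLI line terminated by CR, matching the [Transmit]: CR setting."""
    if name not in ARITY:
        raise ValueError(f"unknown command: {name}")
    if len(args) != ARITY[name]:
        raise ValueError(f"{name} expects {ARITY[name]} argument(s), got {len(args)}")
    if name == "start" and args[0] not in PHASES:
        raise ValueError(f"unknown execution phase: {args[0]}")
    return (" ".join([name, *map(str, args)]) + "\r").encode("ascii")


def send_commands(port: str, lines: Iterable[bytes]) -> None:
    """Write pre-built command lines to the STWIN virtual COM port.

    Requires pyserial; the import is local so build_command works without it.
    """
    import serial

    with serial.Serial(port, timeout=1) as ser:
        for line in lines:
            ser.write(line)
```

For example, `send_commands("COM5", [build_command("neai_set", "signals", 10), build_command("start", "neai_learn")])` configures and starts a learning run on a hypothetical COM5 port. The baud rate is left at the pyserial default because the port is a USB virtual COM port.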

5. Condition monitoring using NanoEdge™ AI Machine Learning library

This section provides a complete anomaly detection method using the NanoEdge™ AI library. FP-AI-MONITOR1 includes a pre-integrated stub that can easily be replaced by an AI condition monitoring library generated by NanoEdge™ AI Studio. This stub simulates the NanoEdge™ AI related functionalities, such as running learning and detection phases on the edge.

The learning phase is started by issuing the command $ start neai_learn from the CLI console. This command starts the learning process and displays a message on the console every time learning is performed on a new signal (see the snippet below):

$ CTRL: ! This is a stubbed version, please install NanoEdge AI library !

NanoEdge AI: learn

{"signal": 1, "status": success}

{"signal": 2, "status": success}

{"signal": 3, "status": success}

:

:

:

End of execution phase

The process is stopped by pressing the ESC key on the keyboard.

Learning can also be started with a long press of the user button. Pressing the user button again stops the learning process.

Once the normal conditions are learned, the user starts the condition monitoring process by issuing the $ start neai_detect command. This command starts the inference phase and checks the similarity of the presented signal with the learned normal signals. If the similarity is less than the set threshold (default: 90%), the similarity value is printed, reporting the occurrence of an anomaly. This behavior is shown in the snippet below:

$ start neai_detect

NanoEdge AI: starting detect phase...

$ CTRL: ! This is a stubbed version, please install NanoEdge AI library !

NanoEdge AI: detect

{"signal": 1, "similarity": 0, "status": anomaly}

{"signal": 2, "similarity": 1, "status": anomaly}

{"signal": 3, "similarity": 2, "status": anomaly}

:

:

{"signal": 90, "similarity": 89, "status": anomaly}

{"signal": 91, "similarity": 90, "status": anomaly}

{"signal": 102, "similarity": 0, "status": anomaly}

{"signal": 103, "similarity": 1, "status": anomaly}

{"signal": 104, "similarity": 2, "status": anomaly}

End of execution phase

The detection phase can also be started by double-pressing the user button on the sensor board.

The process is stopped by pressing the ESC key on the keyboard or, alternatively, by pressing the user button.

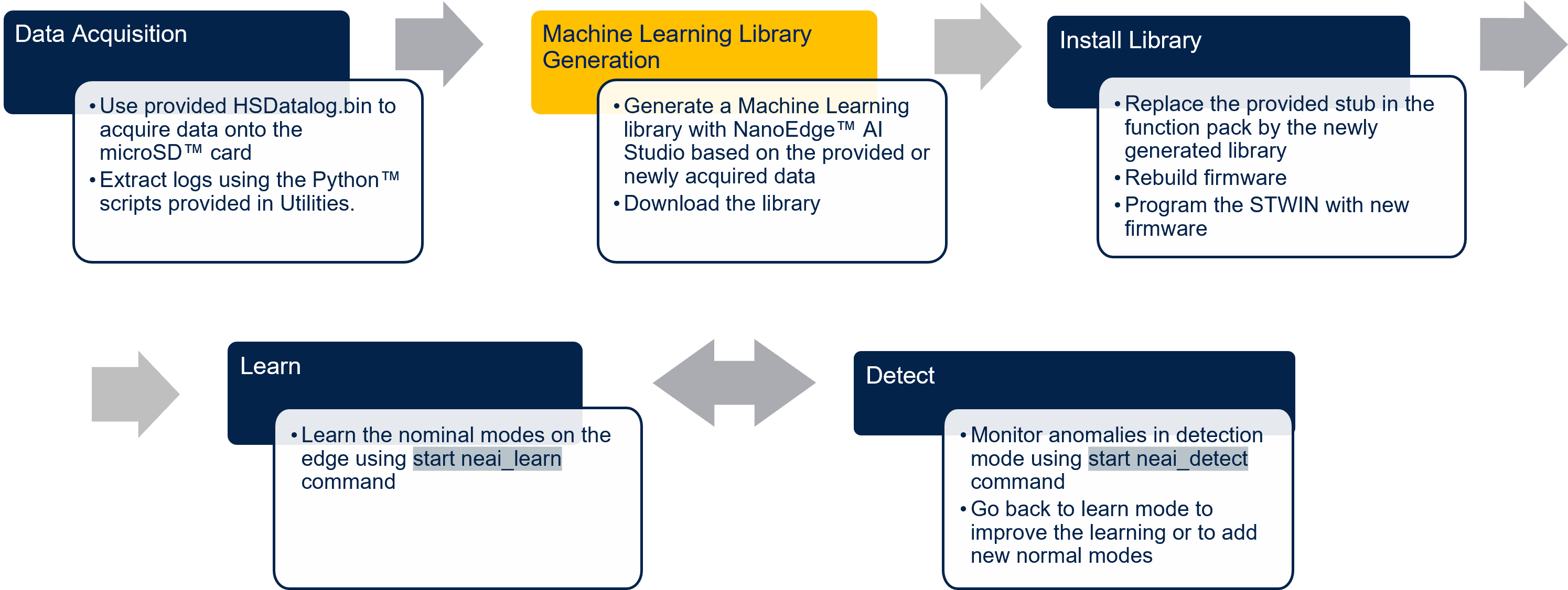

The following section shows how to generate a NanoEdge™ AI library for anomaly detection using NanoEdge™ AI Studio and FP-AI-MONITOR1, and how to set up an application for condition monitoring. The steps are provided with brief details in the following figure.

5.1. Generating a condition monitoring library

This section provides a step-by-step guide on how to generate and install NanoEdge™ AI libraries by linking them to the provided project in FP-AI-MONITOR1, and then how to use them to perform condition monitoring on the edge, first in learning mode and then in detection mode.

5.1.1. Data logging for normal and abnormal conditions

The details on how to acquire the data are provided in the section Datalogging using HSDatalog.

5.1.2. Data preparation for library generation with NanoEdge™ AI Studio

The data logged through the datalogger is in binary format; it is neither human-readable nor compliant with the NanoEdge™ AI Studio format. To convert this data into a usable form, FP-AI-MONITOR1 provides Python™ utility scripts in /FP-AI-MONITOR1_V1.0.0/Utilities/AI-resources/NanoEdgeAi/.

The Jupyter Notebook NanoEdgeAI_Utilities.ipynb provides a complete example of data preparation for generating a library for a three-speed fan running in normal and clogged conditions. In addition, the HSD_2_NEAISegments.py script is provided for users who want to prepare segments from a given data acquisition. For example, for a data acquisition with the ISM330DHCX_ACC sensor, issue the following command:

python HSD_2_NEAISegments.py ../Datasets/Fan12CM/ISM330DHCX/normal/1000RPM/

This command generates a file named ISM330DHCX_Cartesiam_segments_0.csv using the default parameter set: segments of length 1024 with a stride of 1024, after skipping the first 512 samples of the file.
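The segmentation described above can be sketched in plain Python. This is an illustrative re-implementation of the default parameters (length 1024, stride 1024, first 512 samples skipped), not the code of HSD_2_NEAISegments.py. It assumes the samples are already decoded into (x, y, z) rows, and that NanoEdge™ AI Studio expects one segment per line with the axes interleaved (x0, y0, z0, x1, ...); check the Studio documentation for the exact input format.

```python
import csv
from typing import List, Sequence


def make_segments(samples: Sequence[Sequence[float]], length: int = 1024,
                  stride: int = 1024, skip: int = 512) -> List[List[float]]:
    """Cut (x, y, z) samples into flattened fixed-length segments."""
    data = samples[skip:]
    segments = []
    for start in range(0, len(data) - length + 1, stride):
        window = data[start:start + length]
        # Flatten sample by sample so the axes are interleaved: x0, y0, z0, x1, ...
        segments.append([value for sample in window for value in sample])
    return segments


def write_segments(segments: List[List[float]], path: str) -> None:
    """Write one segment per line as comma-separated values."""
    with open(path, "w", newline="") as f:
        csv.writer(f).writerows(segments)
```

With these defaults, an acquisition of N samples yields floor((N - 512 - 1024) / 1024) + 1 segments when N is at least 1536, and none otherwise; a partial window at the end is dropped.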

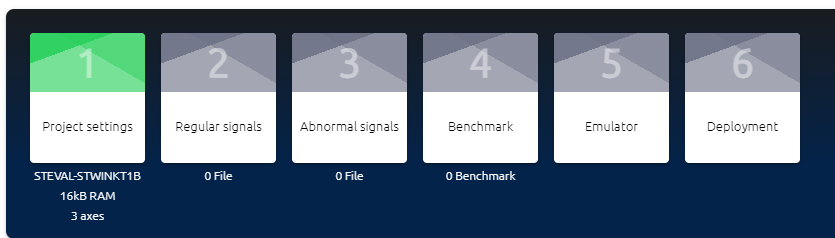

5.1.3. Library generation using NanoEdge™ AI Studio

Running the Jupyter Notebook generates the normal_WL1024_segments.csv and clogged_WL1024_segments.csv files. This section describes how to generate the libraries from these normal and abnormal segment files.

The process of generating the libraries with NanoEdge™ AI Studio consists of six steps, shown in the figure below:

- Hardware description:

  - Choosing the target platform or microcontroller type: STEVAL-STWINKT1B Cortex-M4.

  - Maximum amount of RAM to be allocated for the library: usually a few Kbytes is enough, but it depends on the data frame length used during data preparation; 32 Kbytes is a good starting point.

  - Sensor type: 3-axis accelerometer.

- Providing sample contextual data for normal segments (normal_WL1024_segments.csv) to adjust and gauge the performance of the chosen model.

- Providing sample contextual data for abnormal segments (clogged_WL1024_segments.csv) to adjust and gauge the performance of the chosen model.

- Benchmarking the available models and choosing the one that complies with the requirements.

- Validating the model for learning and testing through the provided emulator, which emulates the behavior of the library on the edge.

- Compiling and downloading the libraries. In this step, the "-mfloat-abi" flag must be checked to use the libraries with the hardware FPU. All other flags can be left in their default state.

  - Note: to use the library with Keil (MDK-ARM), also check "-fshort-wchar" in addition to "-mfloat-abi".

Detailed information is available in the NanoEdge™ AI Studio documentation.

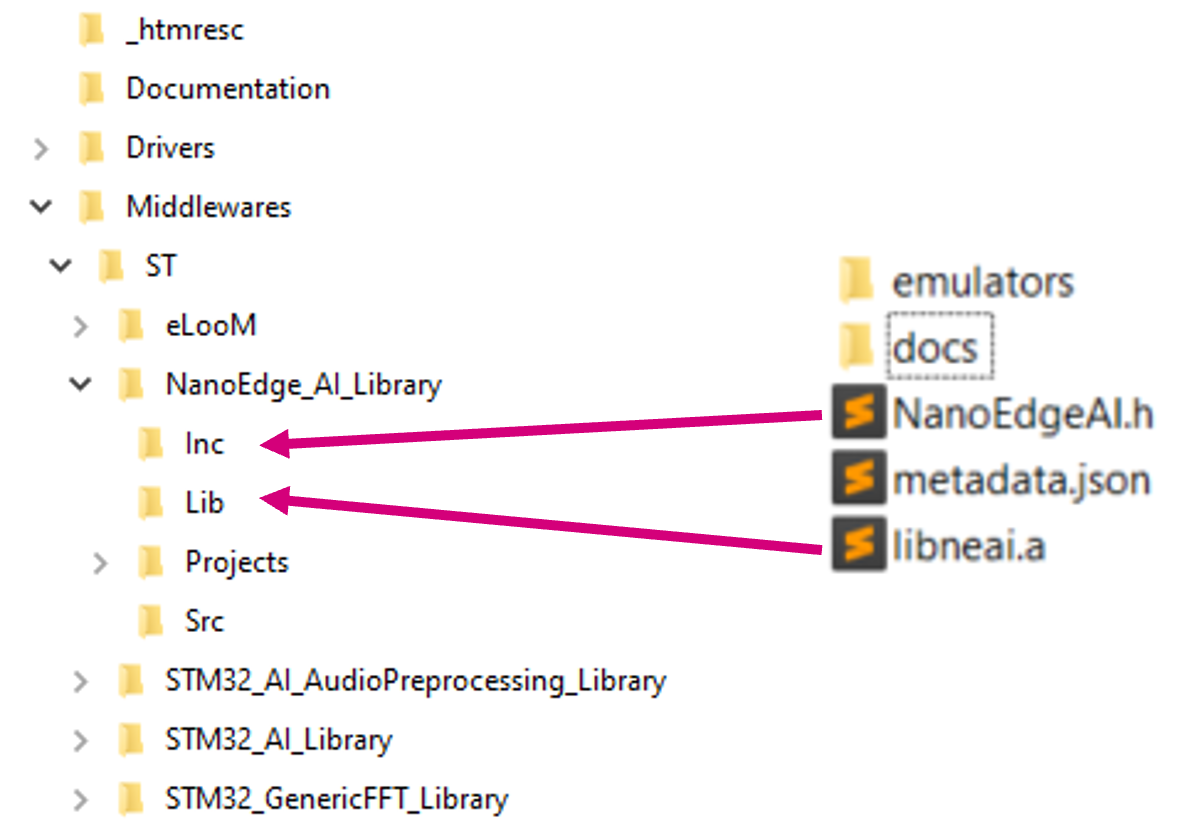
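The RAM guidance in the hardware description step depends on the data frame length. As an order-of-magnitude check, the following Python sketch computes the size of one input frame, assuming each 3-axis sample is passed to the library as three 32-bit floats. Whether this buffer counts against the RAM setting in the Studio depends on the generated library, so treat the numbers only as a rough sanity check, not as the library's actual footprint.

```python
KIB = 1024


def frame_bytes(length: int, axes: int = 3, bytes_per_value: int = 4) -> int:
    """Size of one input frame: `length` samples x `axes` 32-bit float values."""
    return length * axes * bytes_per_value


for length in (256, 512, 1024, 2048):
    size = frame_bytes(length)
    print(f"{length:5d} samples x 3 axes -> {size:6d} B ({size / KIB:.0f} KiB)")
```

For the WL1024 segments used here, one frame is 1024 x 3 x 4 = 12288 bytes (12 KiB), which fits comfortably within the suggested 32 Kbytes starting point; doubling the window length doubles this figure.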

5.2. Installing the NanoEdge™ Machine Learning library

Once the libraries are generated and downloaded from NanoEdge™ AI Studio, the next step is to link them to FP-AI-MONITOR1 and run them on the STWIN. To simplify the linking of the generated libraries, FP-AI-MONITOR1 comes with library stubs in place of the actual libraries generated by NanoEdge™ AI Studio. To link the actual libraries, the user copies the generated header file NanoEdgeAI.h and library file libneai.a over the existing stub files in the Inc and lib folders, respectively. These folders are located under /FP-AI-MONITOR1_V1.0.0/Middlewares/ST/NanoEdge_AI_Library/, as shown in the figure below.

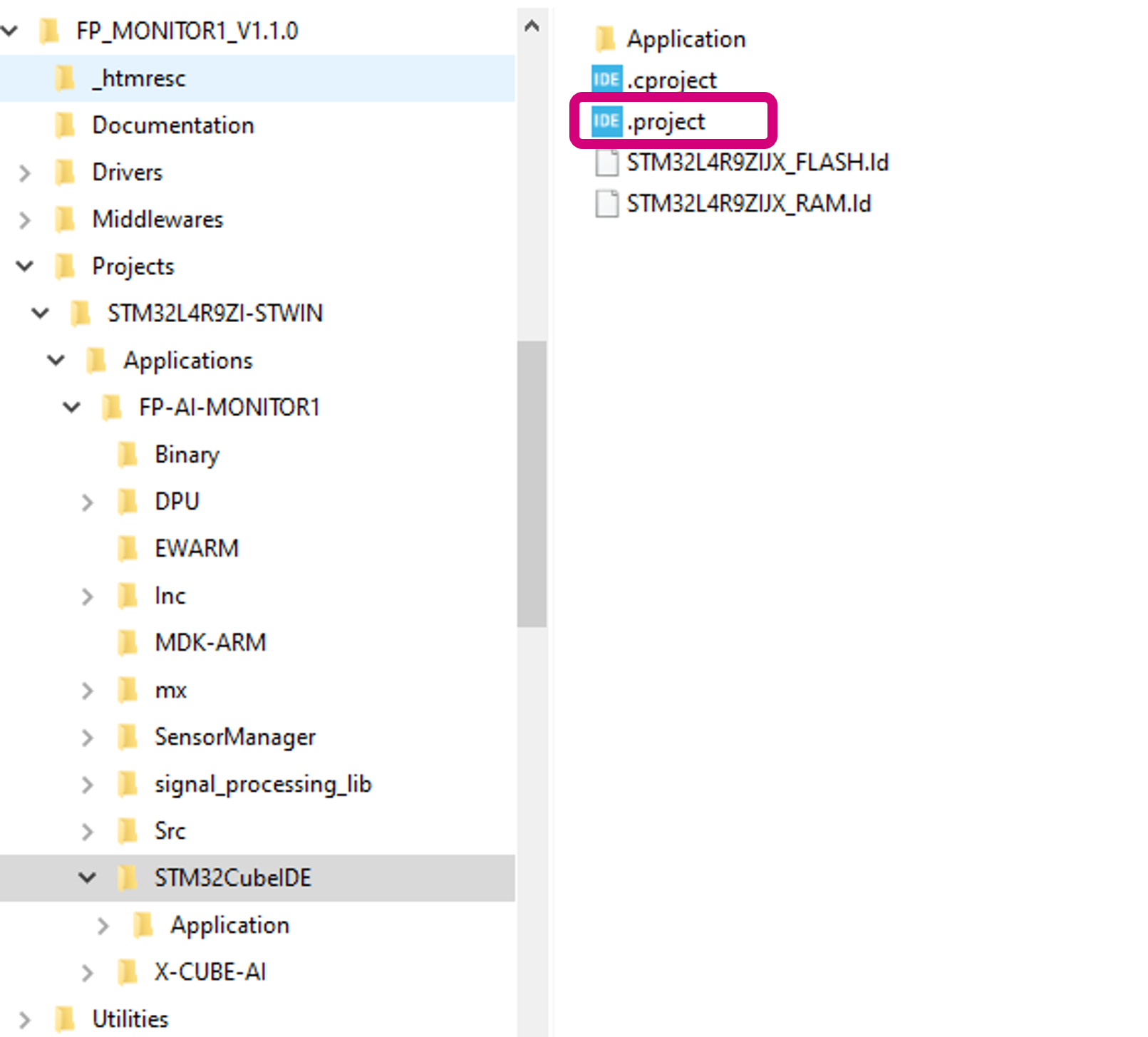
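The copy step can be scripted to avoid placing a file in the wrong folder. The following Python sketch is a hypothetical helper, not a tool shipped with FP-AI-MONITOR1: the file names and destination folders come from the text above, while the function name and the assumption that both files can be found anywhere inside the extracted NanoEdge™ AI Studio download are illustrative. It refuses to create missing folders, since a missing Inc or lib folder usually means the pack root is wrong.

```python
import shutil
from pathlib import Path
from typing import List

# Destination folders relative to the FP-AI-MONITOR1 root, as described above.
LIB_ROOT = Path("Middlewares/ST/NanoEdge_AI_Library")
TARGETS = {"NanoEdgeAI.h": LIB_ROOT / "Inc", "libneai.a": LIB_ROOT / "lib"}


def install_neai_library(download_dir: Path, pack_root: Path) -> List[Path]:
    """Overwrite the stub header and archive with the generated ones."""
    installed = []
    for name, rel_dir in TARGETS.items():
        src = next(download_dir.rglob(name), None)  # search the extracted download
        if src is None:
            raise FileNotFoundError(f"{name} not found under {download_dir}")
        dst_dir = pack_root / rel_dir
        if not dst_dir.is_dir():
            raise FileNotFoundError(f"{dst_dir} does not exist; check pack_root")
        installed.append(Path(shutil.copy2(src, dst_dir / name)))
    return installed
```

Here `pack_root` would point to the FP-AI-MONITOR1_V1.0.0 folder. After copying, the project still has to be rebuilt as described below.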

Once these files are copied, the project must be rebuilt and programmed on the sensor board to link the libraries correctly. To do so, open the project in STM32CubeIDE by double-clicking the .project file located in the /FP-AI-MONITOR1_V1.0.0/Projects/STM32L4R9ZI-STWIN/Applications/FP-AI-MONITOR1/STM32CubeIDE/ folder, as shown in the figure below.

To build and install the project, click the play button and wait for the successful download message, as shown in the section Build and Install Project.

Once the sensor board is successfully programmed, the welcome message appears in the CLI (Tera Term terminal). If the message does not appear, reset the board by pressing the RESET button.

5.3. Testing the NanoEdge™ AI Machine Learning library

Once the STWIN is programmed with firmware containing a valid library, the condition monitoring libraries are ready to be tested on the sensor board. The learning and detection commands can now be issued, and the stub warning no longer appears.

To achieve the best performance, the user must perform the learning using the same sensor configurations which were used during the contextual data acquisition.

5.4. Additional parameters in condition monitoring

For user convenience, the CLI application also provides handy options to easily fine-tune the inference and learning processes. The list of all the configurable variables is available by issuing the following command:

$ neai_get all

NanoEdgeAI: signals = 0

NanoEdgeAI: sensitivity = 1.000000

NanoEdgeAI: threshold = 95

NanoEdgeAI: timer = 0

Each of these parameters is configurable using the neai_set <param> <val> command.

This section explains how to use these parameters to control the learning and detection phases. By setting the "signals" and "timer" parameters, the user controls how many signals are processed, or for how long learning and detection are performed. If both parameters are set, the learning or detection phase stops as soon as the first condition is met. For example, to learn 10 signals, issue the following command before starting the learning phase:

$ neai_set signals 10

NanoEdge AI: signals set to 10

$ start neai_learn

NanoEdgeAI: starting

$ {"signal": 1, "status": success}

{"signal": 2, "status": success}

{"signal": 3, "status": success}

:

:

{"signal": 10, "status": success}

NanoEdge AI: stopped

If both of these parameters are set to "0" (default value), the learning and detection phases will run indefinitely.
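The stopping rule for "signals" and "timer" can be expressed as a small predicate. The following Python sketch is an illustration of the behavior described above, not the firmware's code: a limit of 0 disables it, and the phase ends as soon as either enabled limit is reached. The unit of "timer" is not stated here, so `elapsed` and `timer` are simply assumed to share the same unit; `run_phase` and its callbacks are hypothetical.

```python
import time
from typing import Callable


def should_stop(processed: int, elapsed: float, signals: int = 0, timer: float = 0) -> bool:
    """Return True once either configured limit is reached; 0 disables a limit."""
    reached_count = signals > 0 and processed >= signals
    reached_time = timer > 0 and elapsed >= timer
    return reached_count or reached_time


def run_phase(process: Callable[[int], None], signals: int = 0, timer: float = 0,
              user_stop: Callable[[], bool] = lambda: False,
              clock: Callable[[], float] = time.monotonic) -> int:
    """Run a learn/detect loop until a limit is hit or the user stops it."""
    start = clock()
    processed = 0
    while not user_stop() and not should_stop(processed, clock() - start, signals, timer):
        process(processed + 1)  # learn or detect on signal number processed + 1
        processed += 1
    return processed
```

With both limits at 0, only `user_stop` (the ESC key or the user button on the real device) ends the loop, which matches the indefinite run described above.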

The threshold parameter is used to report anomalies: an anomaly is reported for any signal whose similarity is below the threshold value. The default threshold value used in the CLI application is 90. Users can change this value with the neai_set threshold <val> command.
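When detection output is captured from the serial console, the threshold rule can be re-applied on the host. The printed lines look like JSON, but the status value is unquoted (for example `"status": anomaly`), so `json.loads` rejects them; the following Python sketch uses a regular expression instead. It is a hypothetical log parser based only on the snippets shown in this article, and the default threshold of 90 follows the text above.

```python
import re
from typing import Optional

# Matches lines such as {"signal": 3, "similarity": 2, "status": anomaly}
DETECT_LINE = re.compile(
    r'\{"signal":\s*(\d+),\s*"similarity":\s*(\d+),\s*"status":\s*"?(\w+)"?\}'
)


def parse_detect_line(line: str) -> Optional[dict]:
    """Extract signal number, similarity and status, or None for other lines."""
    m = DETECT_LINE.search(line)
    if m is None:
        return None
    return {"signal": int(m.group(1)), "similarity": int(m.group(2)),
            "status": m.group(3)}


def is_anomaly(similarity: int, threshold: int = 90) -> bool:
    """An anomaly is reported when the similarity is below the threshold."""
    return similarity < threshold
```

Learning lines, which carry no similarity field, return None and can simply be skipped.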

Finally, the sensitivity parameter is used as an emphasis parameter. Its default value is 1. Increasing the sensitivity makes signal matching stricter, while reducing it relaxes the similarity calculation, resulting in higher matching values.

For further details on how NanoEdge™ AI libraries work users are invited to read the detailed documentation of NanoEdge™ AI Studio.

6. Data collection

The data collection functionality is out of the scope of this function pack. However, to make it easier for users to perform a datalog, the precompiled HSDatalog.bin file for FP-SNS-DATALOG1 is provided in the Utilities folder, under /FP-AI-MONITOR1_V1.0.0/Utilities/Datalog/. To acquire data from any of the available sensors on the sensor board, program the sensor board with this binary using the drag-and-drop method shown in section 2.3.

Once the sensor board is programmed, log the data using the following instructions:

- Place a DeviceConfig.json file with the sensor configurations to be used for datalogging in the root folder of the microSD™ card. Sample .json files are provided in the package in FP-AI-MONITOR1_V1.0.0/Utilities/Datalog/Sample_STWIN_Config_Files/. The file must be named exactly DeviceConfig.json.

- Insert the SD card into the STWIN board.

- Reset the board. The orange LED blinks once per second. The custom sensor configurations provided in DeviceConfig.json are loaded from the file.

- Press the [USR] button to start data acquisition on the SD card. The orange LED turns off and the green LED starts blinking to signal that sensor data is being written to the SD card.

- Press the [USR] button again to stop data acquisition. Do not unplug the SD card or turn the board off before stopping the acquisition; otherwise, the data on the SD card is corrupted.

- Remove the SD card and insert it into an appropriate SD card slot on the PC. The log files are stored in STWIN_### folders for every acquisition, where ### is a sequential number determined by the application to ensure log file names are unique. Each folder contains a file called SensorName_subSensorName.dat for each active sub-sensor, containing raw sensor data coupled with timestamps; a DeviceConfig.json file with specific information about the device configuration, necessary for correct data interpretation; and an AcquisitionInfo.json file with information about the acquisition.
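Before converting the logs, it can help to check that each acquisition folder is complete. The following Python sketch is a hypothetical helper built only on the folder and file naming described above: it lists the STWIN_### folders on the card, verifies that DeviceConfig.json and AcquisitionInfo.json are present, and returns the sub-sensor names of the .dat files. It does not decode the .dat contents, because their layout depends on the configuration stored in DeviceConfig.json.

```python
import re
from pathlib import Path
from typing import Dict, List

ACQ_DIR = re.compile(r"STWIN_\d+$")
REQUIRED = ("DeviceConfig.json", "AcquisitionInfo.json")


def list_acquisitions(sd_root: Path) -> Dict[str, List[str]]:
    """Map each STWIN_### acquisition folder to its SensorName_subSensorName files."""
    result = {}
    folders = sorted(p for p in sd_root.iterdir() if p.is_dir() and ACQ_DIR.match(p.name))
    for folder in folders:
        missing = [n for n in REQUIRED if not (folder / n).is_file()]
        if missing:
            raise FileNotFoundError(f"{folder.name}: missing {', '.join(missing)}")
        result[folder.name] = sorted(f.stem for f in folder.glob("*.dat"))
    return result
```

For example, pointing `sd_root` at the mounted card returns something like `{"STWIN_001": ["ISM330DHCX_ACC", ...]}`, which tells you which sub-sensor files are available for the preparation scripts.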

7. References

- FP-AI-MONITOR1: Multi-sensor AI data monitoring framework on wireless industrial node, function pack for STM32Cube

- User manual: user manual for FP-AI-MONITOR1

- STEVAL-STWINKT1B: STWIN SensorTile wireless industrial node development kit and reference design

- STM32CubeMX: STM32Cube initialization code generator

- X-CUBE-AI: AI expansion pack for STM32CubeMX

- NanoEdge™ AI Studio: NanoEdge™ AI, the first machine learning software specifically developed to run entirely on microcontrollers

- DB4345: Data brief for STEVAL-STWINKT1B

- UM2777: How to use the STEVAL-STWINKT1B SensorTile Wireless Industrial Node for condition monitoring and predictive maintenance applications