| Coming soon |

1. LC3 Codec

The LC3 codec is an algorithm that compresses audio data for transmission over the air.

This codec must be supported by any application built on top of the Generic Audio Framework and the BLE 5.2 isochronous feature. It processes each channel independently, has a complexity similar to Opus, and can be used for both voice and music with better quality.

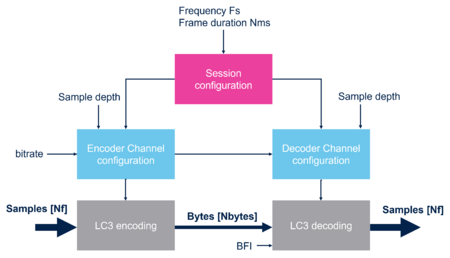

1.1. LC3 configuration

A BLE profile configures an LC3 session defined by:

- The sampling frequency : 8, 16, 24, 32, 44.1 or 48 kHz

- The frame duration : 7.5 or 10 ms

The frame duration varies slightly at 44.1 kHz; refer to the LC3 specification [1] for details.

It then configures each channel attached to the session with additional information:

- The mode : encoding or decoding

- The PCM sample width : 16, 24 or 32 bits

- The bitrate within a list of recommended bitrates

LC3 supports bitrate updates, but BLE profiles do not use this feature.

The ST LC3 codec also embeds a Packet Loss Concealment (PLC) algorithm based on Annex B of the LC3 specification. This algorithm ensures signal continuity and reduces glitches when a packet is corrupted or missing. It is triggered either by an external bad frame indicator (BFI) or by an internal frame analysis.

At the encoder side, the data flow is:

- Data Input

  - PCM signal buffered into Nf samples per channel; this size depends on the frame duration and the sampling frequency.

    - For example, a 10 ms frame at 32 kHz leads to 320 samples.

- Data Output

  - An encoded buffer per frame per channel of size Nbytes; this size is directly linked to the bitrate and lies within the range of 20 to 400 bytes.

    - In our example, 64 kbps leads to 80 bytes, i.e. a compression factor of 8 for 16 bits/sample.
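The arithmetic behind this example can be sketched as follows. This is only an illustrative calculation, not part of the ST library: the function name `lc3_frame_sizes` and its parameters are hypothetical, and the sketch ignores the 44.1 kHz special case mentioned above.

```python
def lc3_frame_sizes(sample_rate_hz, frame_duration_us, bitrate_bps, pcm_bits=16):
    """Return (Nf samples per frame, Nbytes per encoded frame, compression factor).

    Hypothetical helper for illustration; it does not handle 44.1 kHz,
    where the effective frame duration differs slightly.
    """
    # Nf: PCM samples buffered per channel for one frame
    nf = sample_rate_hz * frame_duration_us // 1_000_000
    # Nbytes: encoded payload produced for one frame at the given bitrate
    nbytes = bitrate_bps * frame_duration_us // (8 * 1_000_000)
    # Size of the raw PCM frame, used to derive the compression factor
    pcm_bytes = nf * pcm_bits // 8
    return nf, nbytes, pcm_bytes / nbytes


nf, nbytes, factor = lc3_frame_sizes(32_000, 10_000, 64_000)
print(nf, nbytes, factor)  # 320 80 8.0
```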

1.2. LC3 requirement

Our LC3 implementation is based on floating-point arithmetic and requires an FPU for fast computation, as well as support for DSP instructions. Because the codec is computationally heavy, it is recommended to run the core at a high clock frequency with the instruction cache enabled. The provided library is compiled for the Cortex-M33 with speed optimization.

The following RAM must be allocated by the upper layer to run the full-featured LC3:

- 604 bytes per session

- 4820 bytes per encoding channel

- 8340 bytes per decoding channel

In addition, the encoder uses at least 7000 bytes of stack, or 4700 bytes if only the decoder is used.

The flash footprint of the full codec is around 120 Kbytes and can be reduced at link time if only one mode is used.

Finally, here is an overview of the required CPU load: (picture of CPU load)
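As a rough sizing aid, the figures above can be combined into a small budget calculation. This is a sketch under assumptions: the function `lc3_ram_budget` is hypothetical, and it assumes the stack figure is counted once, using the encoder figure as soon as at least one encoding channel exists.

```python
# Figures taken from the requirements listed above (bytes)
SESSION_BYTES = 604
ENCODER_CHANNEL_BYTES = 4820
DECODER_CHANNEL_BYTES = 8340
ENCODER_STACK_BYTES = 7000       # minimum stack when the encoder is used
DECODER_ONLY_STACK_BYTES = 4700  # minimum stack when only the decoder is used


def lc3_ram_budget(sessions, enc_channels, dec_channels):
    """Return (RAM to allocate by the upper layer, minimum stack), in bytes."""
    allocated = (sessions * SESSION_BYTES
                 + enc_channels * ENCODER_CHANNEL_BYTES
                 + dec_channels * DECODER_CHANNEL_BYTES)
    if enc_channels > 0:
        stack = ENCODER_STACK_BYTES
    elif dec_channels > 0:
        stack = DECODER_ONLY_STACK_BYTES
    else:
        stack = 0
    return allocated, stack


# One session with one encoding channel and two decoding channels
print(lc3_ram_budget(1, 1, 2))  # (22104, 7000)
```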

This LC3 implementation is SIG certified with the QDID : XXXXX

2. Audio path architecture

2.1. Overview

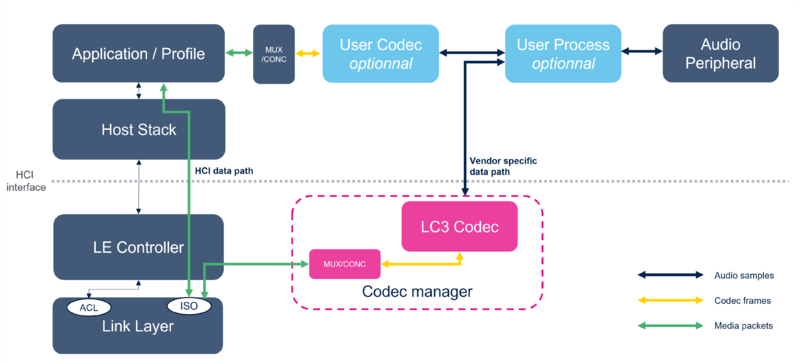

The Bluetooth specification allows various ISO data paths. While the legacy path uses the HCI interface, a vendor-specific data path allows better handling of processing and latencies. This architecture puts the audio codec below the HCI interface, where it can be configured using standard HCI commands.

2.2. Clock synchronization

2.2.1. Generalities

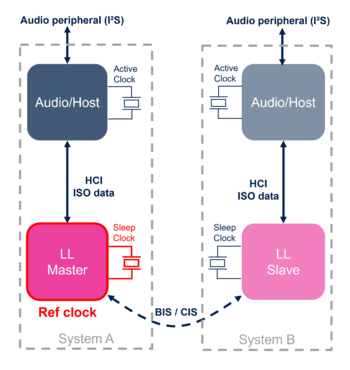

The Bluetooth specification states that, when using unframed PDUs, the upper layer must synchronize the generation of its data to the effective transport timings. In BLE, transport timings are defined by the sleep clock of the link layer master, and the upper layers (including the audio peripheral) on both the slave and master sides have to synchronize to that clock. This also makes sense when using framed PDUs, to avoid generating glitches and to keep the same data rate from the audio source to the audio sink.

The most constraining role is BLE slave: the device has to synchronize its audio peripheral clock to the remote link layer.

Being BLE master is less constraining, since we only need to synchronize to a local clock. If power consumption is not a concern, the sleep clock can be derived directly from the active clock.

However, being slave on the audio peripheral brings a constraint that is impossible to meet.

| RF Role | Audio Role | Sleep Clock Source | Synchronization method |
|---|---|---|---|
| Master | Master | LSE | PLL Loop control |
| Master | Master | HSE/1000 | Direct synchronization |
| Master | Master | LSI | PLL Loop control (not recommended) |
| Master | Slave | Any | None |
| Slave | Master | Any | PLL Loop control |
| Slave | Slave | Any | None |

2.2.2. Implementation

Drift measurement can be done through the link layer, and the frequency can be adjusted on the fly by updating the fractional-N value of the PLL. For that, the provided PLL corrector needs a free-running timer of approximately 1 MHz, clocked by the PLL output, to measure drifts.
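The principle of such a correction can be sketched as below. This is not the ST PLL corrector: it is a minimal illustration that assumes the PLL output frequency is proportional to `N + FRACN / 8192` (a 13-bit fractional divider, which is an assumption to check against the reference manual of the target device). The function name and parameters are hypothetical. `expected_ticks` is the number of timer ticks that should elapse over a reference interval given by the link layer, and `measured_ticks` is what the free-running timer actually counted.

```python
FRAC_RANGE = 8192  # assumed 13-bit fractional divider (hypothetical value)


def corrected_fracn(pll_n, fracn, expected_ticks, measured_ticks):
    """Return a new fractional value that cancels the measured drift.

    If the timer counted more ticks than expected, the local PLL runs
    too fast relative to the reference, so the multiplier is scaled down
    by expected_ticks / measured_ticks (and scaled up in the opposite case).
    """
    # Current multiplier expressed in fractional steps
    total = pll_n * FRAC_RANGE + fracn
    # Multiplier that would have produced exactly expected_ticks
    target = round(total * expected_ticks / measured_ticks)
    new_fracn = target - pll_n * FRAC_RANGE
    # Keep the result inside the range of the fractional field
    return max(0, min(FRAC_RANGE - 1, new_fracn))


# Local clock measured 20 ppm fast over a 1 s interval of the 1 MHz timer
print(corrected_fracn(24, 4096, 1_000_000, 1_000_020))  # 4092
```

A real corrector would typically filter successive measurements rather than apply each raw correction directly, to avoid audible frequency jumps.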

2.3. Audio latency

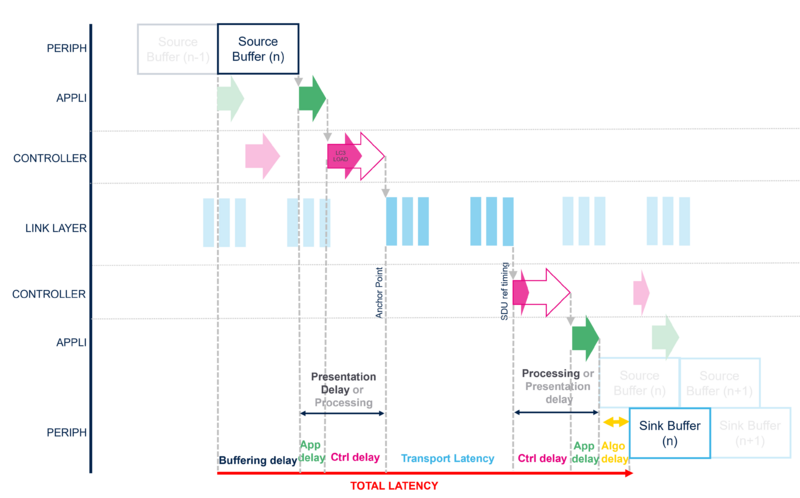

BLE audio provides a way to control the audio latency from the source to the sink. The total audio latency is the sum of the following sub-latencies:

- Buffering delay is the time needed to buffer a frame and mostly depends on the codec used.

- Algorithmic delay may be introduced by the codec for ensuring signal continuity.

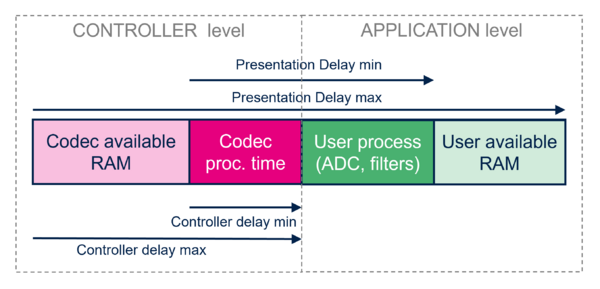

- Presentation delay is a delay managed by the upper layer. This delay may be negotiated within a provided range to ensure synchronization of several devices. It can be split into two sub-delays:

  - Application delay is outside the controller; its minimum is related to the audio processing speed, while its maximum is linked to the resources allocated for buffering.

  - Controller delay is inside the controller; its minimum is related to the codec processing speed, while its maximum is linked to the resources allocated for buffering.

- Transport latency is introduced by the link layer and corresponds to the maximum time for transmitting a packet over the isochronous link. A higher transport latency means more opportunities to retransmit packets and a higher quality of service.

As introduced, the Presentation delay is negotiated by the BLE audio profiles and is useful for synchronization when several Servers or Broadcast Sinks are involved. In Unicast, this delay, on the Server side, is decided by the Client using the ranges provided by all Servers, while in Broadcast this value is imposed on the sink. On the Client side, there is no synchronization constraint, so we only speak of a Processing delay.

Locally, the device has to split this delay between the Controller delay and the Application delay. This decision is made at the application level based on the ranges of both delays.
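One possible way to make this split is sketched below. The policy shown (keep the Controller delay at its minimum and give the remainder to the application, within its range) is only an assumption chosen for illustration. The function name and the example ranges are hypothetical and do not come from the ST stack.

```python
def split_presentation_delay(presentation_us, controller_range, application_range):
    """Split a presentation delay into (controller_us, application_us).

    Each range is a (min_us, max_us) tuple. Raises ValueError when the
    requested delay cannot be covered by the two ranges together.
    """
    ctrl_min, ctrl_max = controller_range
    app_min, app_max = application_range
    if not (ctrl_min + app_min <= presentation_us <= ctrl_max + app_max):
        raise ValueError("presentation delay outside the supported range")
    # Assumed policy: controller stays at its minimum when possible,
    # the application absorbs the rest up to its own maximum.
    application_us = min(app_max, presentation_us - ctrl_min)
    controller_us = presentation_us - application_us
    return controller_us, application_us


# 40 ms to split, controller supports 5..20 ms, application 10..30 ms
print(split_presentation_delay(40_000, (5_000, 20_000), (10_000, 30_000)))
# (10000, 30000)
```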