This article describes how X-CUBE-AI supports quantized models.

{{Info|

- X-CUBE-AI[ST 1] is an expansion software package for STM32CubeMX that generates optimized C code for neural network inference on STM32 microcontrollers. It is delivered under the Mix Ultimate Liberty+OSS+3rd-party V1 software license agreement[ST 2], with the additional component license schemes listed in the product data brief[ST 3].

1. Quantization overview

The X-CUBE-AI code generator can be used to deploy a quantized model. In this article, “quantization” refers to 8-bit linear quantization of a neural network (NN) model. X-CUBE-AI also supports pre-trained Deep Quantized Neural Network (DQNN) models; see the Deep Quantized Neural Network (DQNN) support article (https://wiki.st.com/stm32mcu/wiki/AI:Deep_Quantized_Neural_Network_support).

Quantization is an optimization technique[ST 4] that compresses a 32-bit floating-point model. It reduces the model size (smaller storage footprint and lower peak memory usage at runtime) and improves CPU/MCU usage and latency (and therefore power consumption), at the cost of a small degradation in accuracy. A quantized model executes some or all of its tensor operations with integers rather than floating-point values. Quantization is one of several optimization techniques (topology-oriented optimization, feature-map reduction, pruning, weight compression, and so on) that can be applied to fit a resource-constrained runtime environment.
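To make the size reduction concrete, here is a minimal sketch of 8-bit linear quantization applied to a handful of weights. It assumes a symmetric scheme (zero point fixed at 0) and uses hypothetical weight values; it illustrates the arithmetic only and is not the X-CUBE-AI implementation.

```python
import struct

def quantize_symmetric_int8(values):
    """Map floats to int8 using one scale; the zero point is fixed at 0."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp to the int8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float values from the int8 codes."""
    return [qi * scale for qi in q]

weights = [0.5, -1.27, 0.0, 0.8]  # hypothetical float32 weights
q_weights, scale = quantize_symmetric_int8(weights)
restored = dequantize(q_weights, scale)

# Storage: 4 bytes per float32 value versus 1 byte per int8 value.
float_bytes = len(struct.pack(f"{len(weights)}f", *weights))
int8_bytes = len(struct.pack(f"{len(q_weights)}b", *q_weights))

# The rounding error per value is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q_weights, float_bytes, int8_bytes, max_error)
```

The 4x storage reduction comes directly from the narrower data type; the accuracy cost shows up as the rounding error, which is bounded by half of `scale` for values inside the representable range.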

There are two classical quantization methods: post-training quantization (PTQ) and quantization-aware training (QAT). PTQ is relatively easy to use: it quantizes a pre-trained model using a small, representative data set (also called the calibration data set). QAT is performed during the training process and often yields better model accuracy.
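The role of the calibration data set in post-training quantization can be sketched as follows. This example assumes a simple min/max range observer and an asymmetric int8 mapping; the function names and activation values are hypothetical, and real frameworks may use more elaborate range estimators.

```python
def calibrate_min_max(batches):
    """Track the global min/max of a tensor over the calibration batches."""
    lo = min(min(b) for b in batches)
    hi = max(max(b) for b in batches)
    # Extend the range to include 0.0 so that zero is exactly representable.
    return min(lo, 0.0), max(hi, 0.0)

def affine_params(lo, hi, qmin=-128, qmax=127):
    """Derive the scale and zero point that map [lo, hi] onto [qmin, qmax]."""
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for an empty range
    zero_point = round(qmin - lo / scale)
    return scale, max(qmin, min(qmax, zero_point))

def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Quantize one float value, saturating outside the calibrated range."""
    return max(qmin, min(qmax, round(x / scale) + zero_point))

# Hypothetical activation samples collected while running calibration inputs.
calibration_batches = [[0.0, 1.2, 3.0], [0.4, 5.1, 2.2]]
lo, hi = calibrate_min_max(calibration_batches)
scale, zero_point = affine_params(lo, hi)

print(scale, zero_point)
print(quantize(0.0, scale, zero_point), quantize(5.1, scale, zero_point))
```

Values outside the range seen during calibration saturate at the int8 limits, which is why the calibration data set needs to be representative of the inputs seen at inference time.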

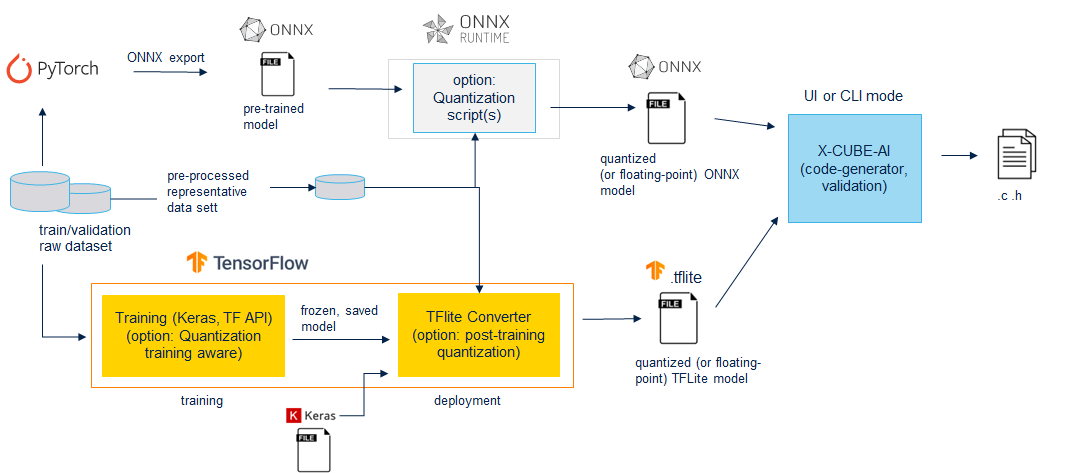

X-CUBE-AI can import different types of quantized models:

- a quantized TensorFlow Lite model generated by a post-training or quantization-aware training process. The calibration is performed by the TensorFlow Lite framework, principally through the “TFLite converter” utility, which exports a TensorFlow Lite file.

- a quantized ONNX model based on the operator-oriented (QOperator) or the tensor-oriented (QDQ; Quantize and DeQuantize) format. The first format depends on the supported QOperators (see the QLinearXXX operators, [ONNX]), whereas the second is more generic: DeQuantizeLinear(QuantizeLinear(tensor)) operator pairs are inserted between the original (float) operators to simulate the quantization and dequantization process. Both formats can be generated with the ONNX Runtime services.

Figure 1: quantization flow
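The effect of a quantize/dequantize pair in the tensor-oriented (QDQ) format can be illustrated with a small pure-Python sketch. It assumes int8 saturation and round-half-to-even rounding; the helper names, the scale, and the input values are hypothetical, and the ReLU stands in for any original float operator.

```python
def quantize_linear(x, scale, zero_point, qmin=-128, qmax=127):
    """Quantize step: saturate(round(x / scale) + zero_point)."""
    # Python's round() rounds halves to the nearest even integer.
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in x]

def dequantize_linear(q, scale, zero_point):
    """Dequantize step: (q - zero_point) * scale."""
    return [(v - zero_point) * scale for v in q]

def qdq(x, scale, zero_point):
    """Quantize then dequantize: the tensor stays float but carries int8 rounding."""
    return dequantize_linear(quantize_linear(x, scale, zero_point), scale, zero_point)

def relu(x):
    """Stand-in for an original float operator in the graph."""
    return [max(0.0, v) for v in x]

activations = [-0.30, 0.26, 0.74, 100.0]  # hypothetical float tensor
scale, zero_point = 0.5, 0                # hypothetical quantization parameters

# The float operator now sees values snapped to the int8 grid (and saturated).
simulated = relu(qdq(activations, scale, zero_point))
print(simulated)
```

Because the graph still contains the original float operators, a QDQ model runs on any float runtime while exposing the scale and zero point that an integer implementation needs.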

2. STMicroelectronics references

See also: