This article describes how to automate machine learning model code generation and validation through the X-CUBE-AI Command Line Interface (CLI). The example is provided for Windows using a batch script; however, it can easily be adapted to other operating systems or to Python. More information on the CLI can be found in the embedded documentation. For Python, refer to the section How to run locally a c-model.

1. Requirements & installations

- X-CUBE-AI latest version (latest tested v7.2)

- STM32CubeIDE latest version (latest tested v1.10.1)

- STM32CubeProgrammer latest version (latest tested v1.10.1)

- An STM32 evaluation board, for this example: NUCLEO-H723ZG

- A model (Keras .h5, TensorFlow Lite .tflite or ONNX). For this example we used a quantized MobileNet v1 0.25 with an image input of 128x128x3.

You can download the model: mobilenet_v1_0.25_128_quantized.tflite.

Once X-CUBE-AI and STM32CubeIDE are installed, note the installation paths. The defaults on Windows are usually the following (replace username with your Windows user account name and adapt the version numbers to your tools):

- For X-CUBE-AI: C:\Users\username\STM32Cube\Repository\Packs\STMicroelectronics\X-CUBE-AI\7.2.0

- For STM32CubeIDE: C:\ST\STM32CubeIDE_1.10.1\STM32CubeIDE\

- For arm gcc installed through STM32CubeIDE: C:\ST\STM32CubeIDE_1.10.1\STM32CubeIDE\plugins\com.st.stm32cube.ide.mcu.externaltools.gnu-tools-for-stm32.10.3-2021.10.win32_1.0.0.202111181127\tools\bin

- For STM32CubeProgrammer: C:\Program Files\STMicroelectronics\STM32Cube\STM32CubeProgrammer\bin
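Since the rest of the flow depends on these locations, it can help to check them before running anything. The snippet below is a minimal sketch, assuming the default paths listed above; the `USERNAME` placeholder, the `TOOL_PATHS` dictionary and the `missing_tools` helper are illustrative names, not part of any ST tool.

```python
from pathlib import Path

# Placeholder: replace with your Windows user account name.
USERNAME = "username"

# Default installation paths quoted in this article; adapt them to your tool versions.
TOOL_PATHS = {
    "X-CUBE-AI": rf"C:\Users\{USERNAME}\STM32Cube\Repository\Packs\STMicroelectronics\X-CUBE-AI\7.2.0",
    "STM32CubeIDE": r"C:\ST\STM32CubeIDE_1.10.1\STM32CubeIDE",
    "arm gcc": r"C:\ST\STM32CubeIDE_1.10.1\STM32CubeIDE\plugins\com.st.stm32cube.ide.mcu.externaltools.gnu-tools-for-stm32.10.3-2021.10.win32_1.0.0.202111181127\tools\bin",
    "STM32CubeProgrammer": r"C:\Program Files\STMicroelectronics\STM32Cube\STM32CubeProgrammer\bin",
}


def missing_tools(paths):
    """Return the names of the tools whose directory does not exist."""
    return [name for name, path in paths.items() if not Path(path).is_dir()]


if __name__ == "__main__":
    for name in missing_tools(TOOL_PATHS):
        print(f"{name}: not found at {TOOL_PATHS[name]}")
```

If the script prints nothing, all four directories were found at the expected locations.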

2. Validation application generation

To generate the initial validation project for the targeted board, we use STM32CubeMX with the X-CUBE-AI plugin.

2.1. Create a new project

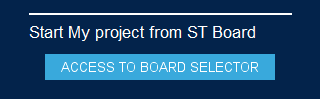

Open STM32CubeMX and start the project using the board selector:

Select the board to use, in our case the NUCLEO-H723ZG, and create a project without initializing all peripherals with their default Mode:

2.2. Add X-CUBE-AI software pack

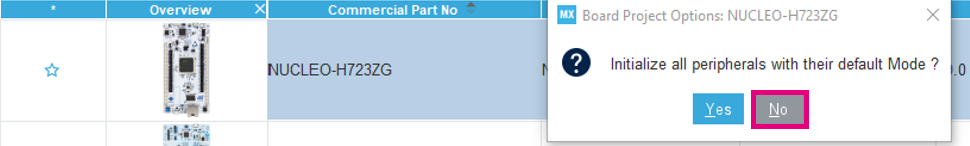

Select the X-CUBE-AI software pack with Core and the Validation application:

Click on the X-CUBE-AI software pack:

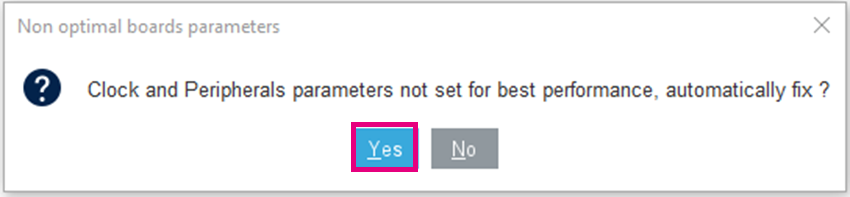

If the default peripheral parameters are not set for the best performance, the system warns you. Select 'yes' to make sure that the maximum frequency is used.

X-CUBE-AI sets default parameters for the best performance and configures the UART used to report performance.

2.3. Generate the validation application

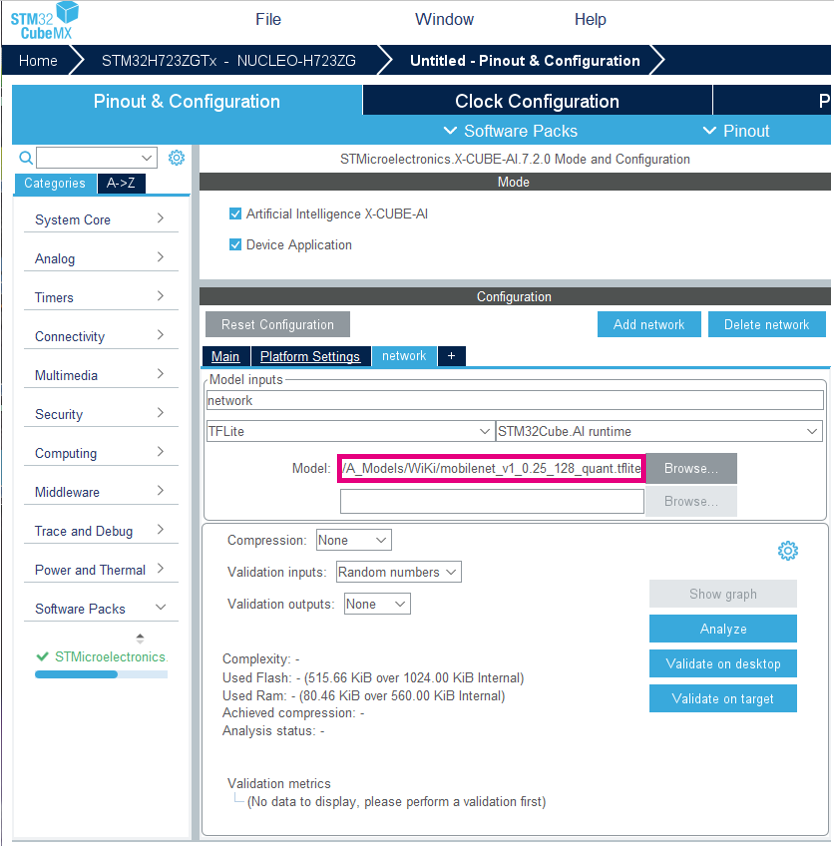

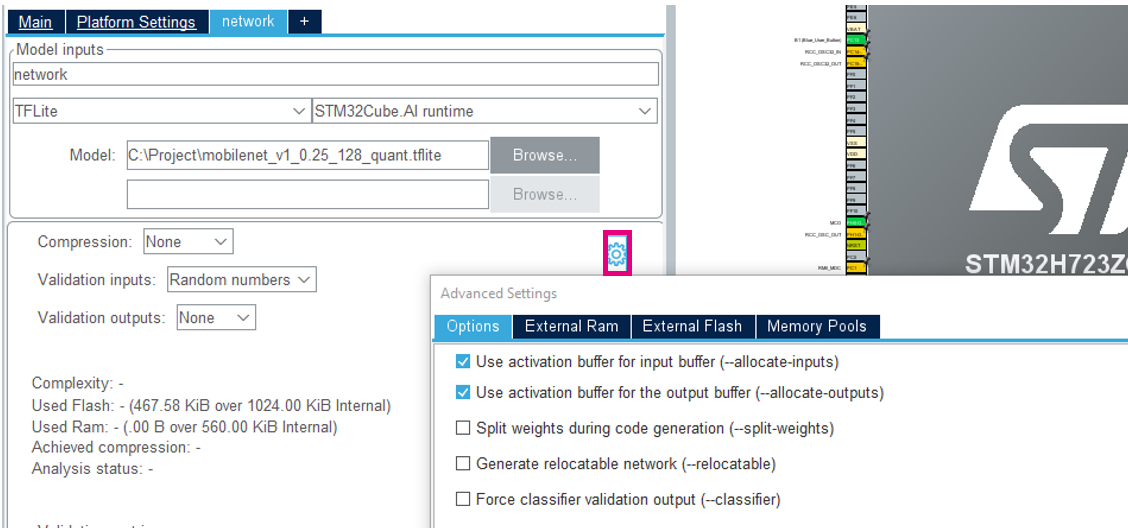

Upload a model, in our example mobilenet_v1_0.25_128_quantized.tflite.

For our example, we keep the default memory options for input / output allocation (the defaults as of version v7.2 of X-CUBE-AI).

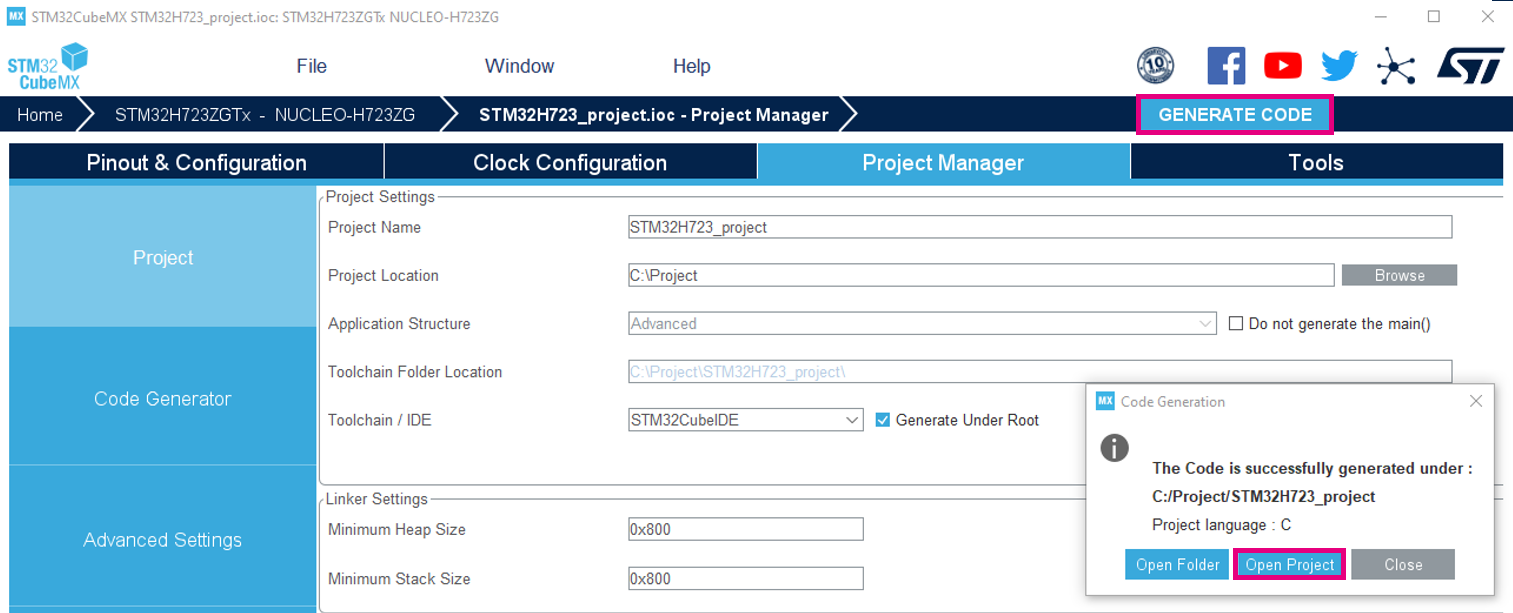

You can then generate the corresponding validation application as an STM32CubeIDE project in a dedicated directory.

Open the project in STM32CubeIDE.

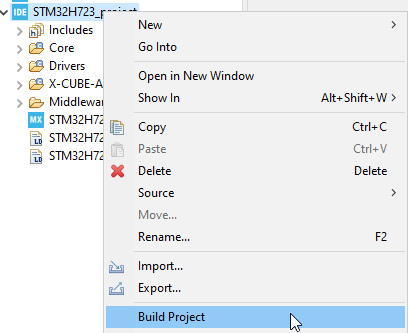

Then build the project.

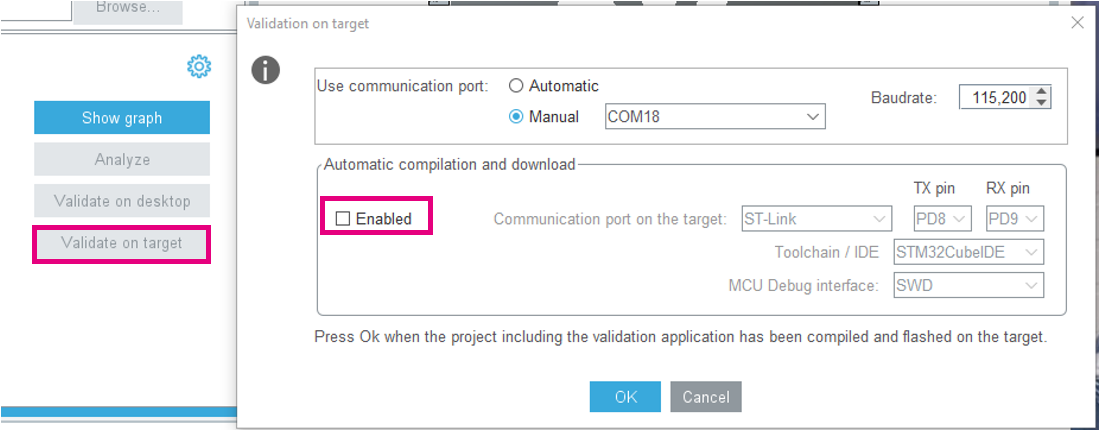

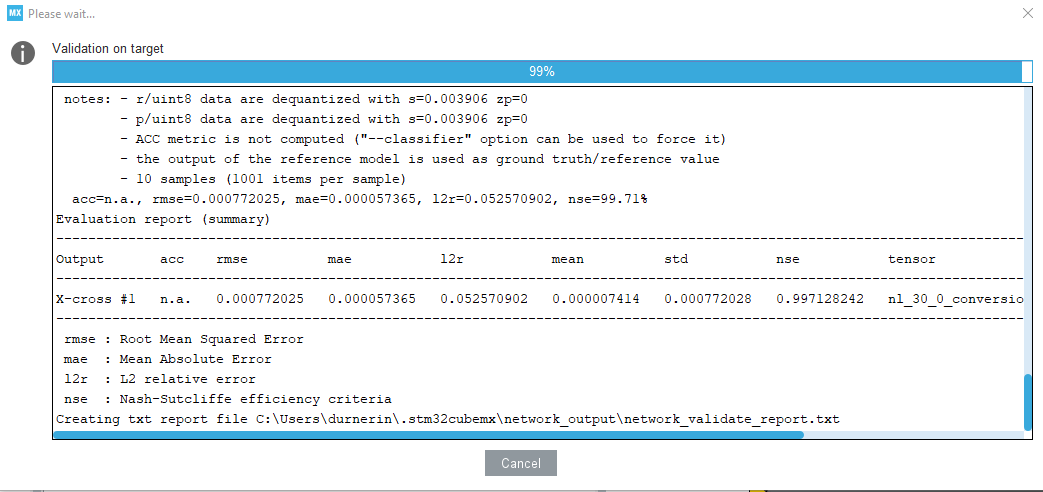

To check that the validation application works as expected, first program the board (either through STM32CubeIDE or STM32CubeProgrammer), then reset it. Open the validation on target panel and either select the board COM port or leave the automatic detection enabled. Do not select the automatic compilation and download option, as the board is already programmed with the right firmware.

Press OK and check that the validation runs correctly.
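When the board is programmed with STM32CubeProgrammer, the same step can be run from the command line. The following is a minimal sketch, assuming the default STM32CubeProgrammer location given in section 1 and an ST-LINK connection over SWD. The `flash_command` helper and the firmware path in the usage comment are hypothetical; use the ELF file actually produced by your STM32CubeIDE build.

```python
import subprocess
from pathlib import PureWindowsPath

# Default STM32CubeProgrammer location from section 1; adapt if needed.
PROGRAMMER_DIR = r"C:\Program Files\STMicroelectronics\STM32Cube\STM32CubeProgrammer\bin"


def flash_command(firmware, programmer_dir=PROGRAMMER_DIR):
    """Build the STM32_Programmer_CLI command that writes and verifies a firmware."""
    exe = str(PureWindowsPath(programmer_dir) / "STM32_Programmer_CLI.exe")
    return [
        exe,
        "-c", "port=SWD",      # connect to the board through ST-LINK (SWD)
        "-w", str(firmware),   # write the firmware file (ELF or HEX)
        "-v",                  # verify after programming
        "-rst",                # reset the MCU so the new firmware starts
    ]


def flash(firmware):
    """Program the board; raises CalledProcessError if the tool reports a failure."""
    subprocess.run(flash_command(firmware), check=True)


# Hypothetical usage (the ELF path depends on your project name and build configuration):
# flash(r"C:\Project\STM32CubeIDE\Debug\Project.elf")
```

Building the argument list separately from running it keeps the command easy to print and inspect before touching the board.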

3. Automatic validation

The batch script below uses the ST tools through their command line interfaces to automatically:

- get the full memory footprint of a given model

- get the inference time

- get the validation metrics (validation done on random data)

In the script below, the local working directory is "C:\Project", which must correspond to the directory of the initial validation application project. There are two modes, selected by the variable "mode":

- The analyze mode, mode=analyze, only runs an analysis to get the main memory footprint contributors (i.e. the weights and the activations buffer). In this mode, no validation application needs to be generated (section 2 can be skipped) and only X-CUBE-AI needs to be installed.

- The validation mode, mode=validate (default), runs a validation to get the full memory footprint (weights, activations and runtime) as well as the inference time and the validation metrics.

The script creates a temporary directory "tmp" with two subdirectories, "stm32ai_ws" and "stm32ai_output". These directories are used by the X-CUBE-AI stm32ai CLI executable as workspace and output directory. The main outputs and reports are copied from these directories to a result directory, for our example C:\Project\Results\STM32H723\mobilenet_v1_0.25_128_quant.tflite.

The total memory footprint for a given model must account not only for the size of the model weights (stored in Flash) and the size of the activation, input and output buffers, but also for the size of the runtime code (Flash and RAM). The runtime memory footprint can only be measured by building the code with a toolchain. A convenient way to measure the runtime footprint is to generate the code using the relocatable option.

The Flash column reports the Flash occupancy, including the model weights, the runtime code generated by X-CUBE-AI to run the neural network and its constants (including the initialized tables). The RAM column reports the RAM occupancy: the buffers used to store the model activations as well as the input and output buffers, plus the RAM required by the runtime to run inference on the model. Note that to save RAM, the "Use activation buffer for input buffer" and "Use activation buffer for the output buffer" options are selected (through the X-CUBE-AI Advanced Settings panel).
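The composition of the two columns can be written down as a small calculation. The sketch below only illustrates the sums described above; the `Footprint` class and its field names are hypothetical, and the sizes must come from your own reports.

```python
from dataclasses import dataclass


@dataclass
class Footprint:
    """Memory contributors of a deployed model, all sizes in bytes."""
    weights: int        # model weights, stored in Flash
    runtime_flash: int  # runtime code and its constants, stored in Flash
    activations: int    # activations buffer, in RAM
    io_buffers: int     # input/output buffers in RAM; 0 when they reuse the activations buffer
    runtime_ram: int    # RAM required by the runtime itself

    @property
    def flash(self):
        """Total Flash occupancy: weights plus runtime code and constants."""
        return self.weights + self.runtime_flash

    @property
    def ram(self):
        """Total RAM occupancy: activations, I/O buffers and runtime RAM."""
        return self.activations + self.io_buffers + self.runtime_ram
```

With the "Use activation buffer for input buffer" and "Use activation buffer for the output buffer" options selected, `io_buffers` would be set to 0, since the input and output then live inside the activations buffer.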