1. Introduction

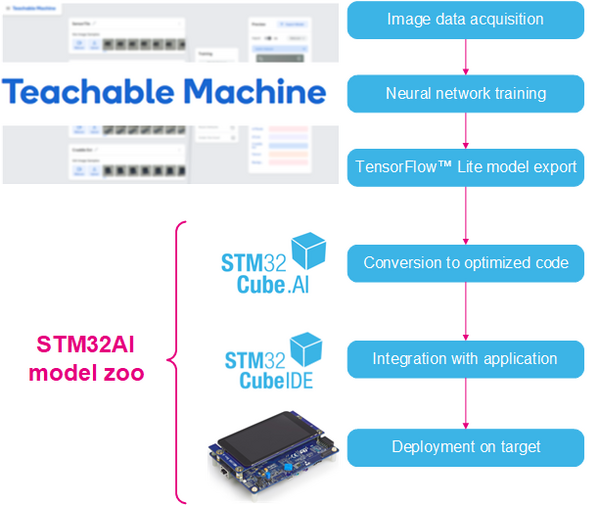

In this article, we will see how to use Teachable Machine, an online tool, together with STM32Cube.AI and the FP-AI-VISION1 function pack to create an image classifier running on the STM32H747I-DISCO board.

- Teachable Machine is an online tool that lets you quickly train a deep learning model for various tasks, including image classification.

- STM32Cube.AI is a software tool that generates optimized C code for neural network inference on STM32.

- The Function Pack FP-AI-VISION1 is a software example of an image classifier running on the STM32H747I Discovery board.

The following figure presents the steps covered in this article:

2. Prerequisites

2.1. Hardware

- STM32H747I-DISCO board

- STM32F4DIS-CAM Camera module

- A micro-USB to USB Cable

- (Optional) A webcam

2.2. Software

- STM32Cube IDE

- X-Cube-AI command line tool.

- FP-AI-VISION1

3. Part 1 - Train a model using Teachable Machine

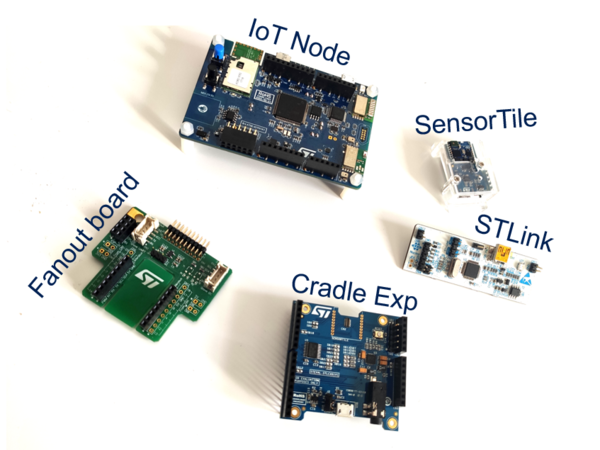

In this section, we will train a deep neural network in the browser using Teachable Machine. We first need to choose something to classify. In this example, we will classify some ST boards and modules. The chosen boards are shown in the figure below:

You can choose whatever you want: fruits, pasta, animals, people, etc.

Note: If you don't have a webcam, Teachable Machine lets you import images from your computer.

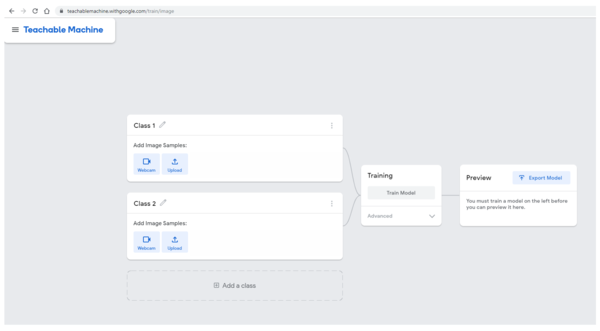

Let's get started. Open https://teachablemachine.withgoogle.com/, preferably in the Chrome browser.

Click on Get started, then select Image Project. You will be presented with the following interface.

3.1. Add training data

You can skip this part by importing the dataset for this example provided here: click on "Teachable Machine" on the top-left side, then on "Open Project file".

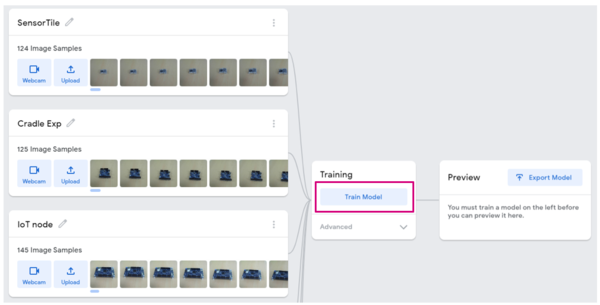

For each category you want to classify, edit the class name by clicking on (1). In my example, I chose to start with SensorTile.

To add images with your webcam, click on webcam and record some images. If you have images on your computer, click upload and select a directory containing your images.

Tips: Don't capture too many images; about 100 per class is usually enough. Try to vary the camera angle and subject pose as much as possible. To ensure the best results, a uniform background is recommended.

Once you have enough images for this class, repeat the process for the next one.

Note: It can be nice to have a "Background/Nothing" class, so the model will be able to tell when nothing is presented to the camera.

3.2. Train the model

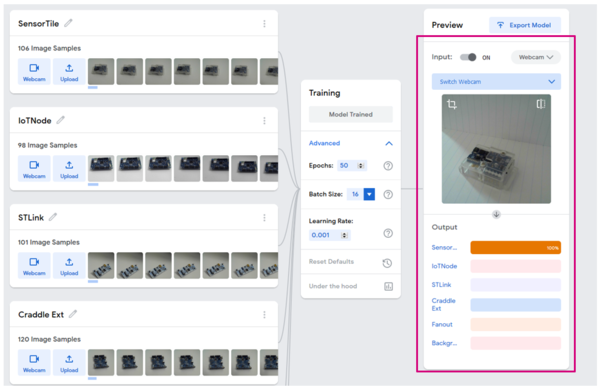

Now that we have a good amount of data, we are going to train a deep learning model to classify these different objects. To do this, click the "Train Model" button, as shown below:

Depending on the amount of data you have, this process can take a while. If you are curious, you can select "Advanced" and click on "Under the hood". A side panel should pop up and you'll be able to see training metrics.

At the end of training you can see the predictions of your network on the "Preview" pane. You can either choose a webcam input or an imported file.

Note: If, at this point, your results are not good enough, here are a few tips: TODO

3.2.1. Under the hood

TODO

3.3. Export the model

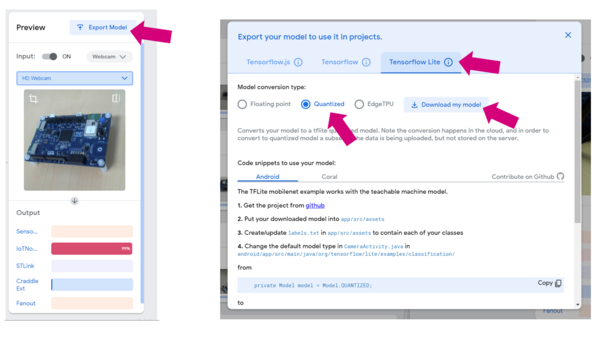

If you are happy with your model, it is time to export it. To do so, click the "Export Model" button. In the pop-up window, select "Tensorflow Lite", check "Quantized" and click "Download my model".

The model conversion is done in the cloud, so this step can take a few minutes.

Your browser will download a zip file containing the model as a .tflite file and a .txt file containing your labels. Extract these two files into a clean directory that we will call the workspace.

3.3.1. Optional: Inspect the model using Netron

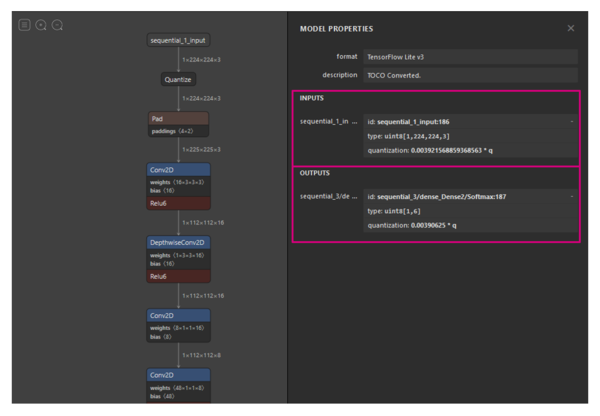

It's always interesting to take a look at a model's architecture and at the format and shape of its inputs and outputs. To do this, we will use the Netron webapp.

Visit https://lutzroeder.github.io/netron/ and select Open model, then choose the model.tflite file from Teachable Machine. Click on sequential_1_input: the input is of type uint8 and of shape [1, 224, 224, 3]. Now let's look at the output. In my case I have 6 classes, so the output has shape [1, 6]. The quantization parameters are also reported; we will see how to use them in Part 3.
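The shapes seen in Netron translate directly into buffer sizes. As a minimal sketch (the helper names `tensor_element_count` and `tensor_size_bytes` are hypothetical and not part of any ST library), the following C functions compute the size of a tensor from its shape. For a uint8 input of 224 × 224 × 3, which is the shape listed in the stm32ai report in Part 2, this gives 150,528 bytes, that is 147 KiB.

```c
#include <stddef.h>
#include <stdint.h>

/* Number of elements in a tensor, given its shape (e.g. {1, 224, 224, 3}). */
static size_t tensor_element_count(const size_t *shape, size_t rank)
{
    size_t count = 1;
    for (size_t i = 0; i < rank; i++) {
        count *= shape[i];
    }
    return count;
}

/* Size in bytes of a tensor whose elements are each elem_size bytes wide. */
static size_t tensor_size_bytes(const size_t *shape, size_t rank, size_t elem_size)
{
    return tensor_element_count(shape, rank) * elem_size;
}
```

Calling `tensor_size_bytes` with the shape {1, 6} and an element size of 1 byte gives the 6-byte output buffer of a 6-class model.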

4. Part 2 - Convert the model

In this part, we will use the stm32ai command-line tool to convert the TensorFlow Lite model to optimized C code for STM32.

For instructions on how to set up the X-Cube-AI command-line tool, please refer to [TODO]

Warning: FP-AI-VISION1 uses X-Cube-AI version 5.0.0. You can check your version of Cube.AI by running:

stm32ai --version

Start by opening a shell in your workspace directory, then execute the following command:

cd <path to your workspace>

stm32ai generate -m model.tflite -v 2

The expected output is:

Neural Network Tools for STM32 v1.2.0 (AI tools v5.0.0)

Running "generate" cmd...

-- Importing model

model files : /path/to/workspace/model.tflite

model type : tflite (tflite)

-- Importing model - done (elapsed time 0.531s)

-- Rendering model

-- Rendering model - done (elapsed time 0.184s)

-- Generating C-code

Creating /path/to/workspace/stm32ai_output/network.c

Creating /path/to/workspace/stm32ai_output/network_data.c

Creating /path/to/workspace/stm32ai_output/network.h

Creating /path/to/workspace/stm32ai_output/network_data.h

-- Generating C-code - done (elapsed time 0.782s)

Creating report file /path/to/workspace/stm32ai_output/network_generate_report.txt

Exec/report summary (generate dur=1.500s err=0)

-----------------------------------------------------------------------------------------------------------------

model file : /path/to/workspace/model.tflite

type : tflite (tflite)

c_name : network

compression : None

quantize : None

L2r error : NOT EVALUATED

workspace dir : /path/to/workspace/stm32ai_ws

output dir : /path/to/workspace/stm32ai_output

model_name : model

model_hash : 2d2102c4ee97adb672ca9932853941b6

input : input_0 [150,528 items, 147.00 KiB, ai_u8, scale=0.003921568859368563, zero=0, (224, 224, 3)]

input (total) : 147.00 KiB

output : nl_71 [6 items, 6 B, ai_i8, scale=0.00390625, zero=-128, (6,)]

output (total) : 6 B

params # : 517,794 items (526.59 KiB)

macc : 63,758,922

weights (ro) : 539,232 (526.59 KiB)

activations (rw) : 853,648 (833.64 KiB)

ram (total) : 1,004,182 (980.65 KiB) = 853,648 + 150,528 + 6

------------------------------------------------------------------------------------------------------------------

id layer (type) output shape param # connected to macc rom

------------------------------------------------------------------------------------------------------------------

0 input_0 (Input) (224, 224, 3)

conversion_0 (Conversion) (224, 224, 3) input_0 301,056

------------------------------------------------------------------------------------------------------------------

1 pad_1 (Pad) (225, 225, 3) conversion_0

------------------------------------------------------------------------------------------------------------------

2 conv2d_2 (Conv2D) (112, 112, 16) 448 pad_1 5,820,432 496

nl_2 (Nonlinearity) (112, 112, 16) conv2d_2

------------------------------------------------------------------------------------------------------------------

( ... )

------------------------------------------------------------------------------------------------------------------

71 nl_71 (Nonlinearity) (1, 1, 6) dense_70 102

------------------------------------------------------------------------------------------------------------------

72 conversion_72 (Conversion) (1, 1, 6) nl_71

------------------------------------------------------------------------------------------------------------------

model p=517794(526.59 KBytes) macc=63758922 rom=526.59 KBytes ram=833.64 KiB io_ram=147.01 KiB

Complexity per-layer - macc=63,758,922 rom=539,232

------------------------------------------------------------------------------------------------------------------

id layer (type) macc rom

------------------------------------------------------------------------------------------------------------------

0 conversion_0 (Conversion) || 0.5% | 0.0%

2 conv2d_2 (Conv2D) ||||||||||||||||||||||||| 9.1% | 0.1%

3 conv2d_3 (Conv2D) |||||||||| 3.5% | 0.0%

4 conv2d_4 (Conv2D) ||||||| 2.5% | 0.0%

5 conv2d_5 (Conv2D) |||||||||||||||||||||||||| 9.4% | 0.1%

7 conv2d_7 (Conv2D) ||||||| 2.6% | 0.1%

( ... )

64 conv2d_64 (Conv2D) |||| 1.5% ||||| 3.7%

65 conv2d_65 (Conv2D) | 0.3% | 0.8%

66 conv2d_66 (Conv2D) |||||||| 2.9% |||||||| 7.1%

67 conv2d_67 (Conv2D) ||||||||||||||||||||||||||||||| 11.3% ||||||||||||||||||||||||||||||| 27.5%

69 dense_69 (Dense) | 0.2% |||||||||||||||||||||||||| 23.8%

70 dense_70 (Dense) | 0.0% | 0.1%

71 nl_71 (Nonlinearity) | 0.0% | 0.0%

------------------------------------------------------------------------------------------------------------------

This command will generate 4 files:

- network.c

- network_data.c

- network.h

- network_data.h

under workspace/stm32ai_output/.

Looking at the highlighted lines, we learn that the model uses 526.59 KiB of weights (read-only memory) and 833.64 KiB of activations. As the STM32H747 doesn't have 833 KiB of contiguous RAM, we need to use the external SDRAM present on the STM32H747I-DISCO board (please refer to UM-XXXX).
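The figures in the report can be cross-checked with a few lines of C. The sketch below copies the byte counts from the stm32ai report and tests them against a RAM region size. The 512 KiB region size is an assumption used for illustration only (check the reference manual for the actual memory map), and the macro and function names are hypothetical.

```c
#include <stdbool.h>
#include <stddef.h>

/* Values copied from the stm32ai report, in bytes. */
#define ACTIVATIONS_BYTES 853648u
#define INPUT_BYTES       150528u
#define OUTPUT_BYTES      6u

/* Assumption: size of the largest contiguous internal RAM region.
 * 512 KiB is an illustrative value, not taken from the report. */
#define ASSUMED_CONTIGUOUS_RAM_BYTES (512u * 1024u)

/* Total RAM reported as "ram (total)": activations + input + output. */
static size_t total_ram_bytes(void)
{
    return (size_t)ACTIVATIONS_BYTES + INPUT_BYTES + OUTPUT_BYTES;
}

/* Convert a byte count to KiB, the unit used in the report. */
static double bytes_to_kib(size_t bytes)
{
    return (double)bytes / 1024.0;
}

/* True if a buffer of `needed` bytes fits in a region of `region` bytes. */
static bool fits_in_region(size_t needed, size_t region)
{
    return needed <= region;
}
```

With these values, `total_ram_bytes()` matches the 1,004,182 bytes of the report, and `fits_in_region(ACTIVATIONS_BYTES, ASSUMED_CONTIGUOUS_RAM_BYTES)` is false, which is why the activation buffer has to go to external SDRAM.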

5. Part 3 - Integration with FP-AI-VISION1

In this part, we will import our brand new model into the FP-AI-VISION1 function pack. This function pack provides a software example of a food classification application. Find out more about FP-AI-VISION1 here.

The main idea of this section is to replace the network and network_data files in FP-AI-VISION1 with the newly generated files and to make a few adjustments to the code.

5.1. Load the project

If you have not already done so, download the zip file from the ST website and extract its content to your workspace. It should now look like this:

TODO

If we take a look at the files of the function pack, we see 2 configurations, as shown below.

Our model is a quantized one, so we choose the Quantized_Model directory.

Go into workspace/FP_AI_VISION1/Projects/STM32H747I-DISCO/Applications/FoodReco_MobileNetDerivative/Quantized_Model/STM32CubeIDE/STM32H747I_DISCO and double-click on .project. STM32CubeIDE starts with the project loaded.

5.2. Replace the network files

The model files are located in the Src and Inc directories under workspace/FP_AI_VISION1/Projects/STM32H747I-DISCO/Applications/FoodReco_MobileNetDerivative/Quantized_Model/CM7/.

Delete the following files and replace them with the ones from workspace/stm32ai_output:

In Src:

- network.c

- network_data.c

In Inc:

- network.h

- network_data.h

5.3. Update the labels

In this step, we will update the labels for the output of the network. The label.txt file downloaded from Teachable Machine can help with this. In this example, the content of this file looks like this:

0 SensorTile

1 IoTNode

2 STLink

3 Craddle Ext

4 Fanout

5 Background
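If you have many classes, copying the names by hand becomes tedious. As a rough sketch, assuming each line of the label file holds an index followed by the class name (for example "3 Craddle Ext"), the hypothetical helper below splits such a line into its index and name. It is plain C for the PC side and is not part of the function pack.

```c
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

/* Parse one line of the form "<index> <name>", e.g. "3 Craddle Ext".
 * On success, stores the index and a NUL-terminated copy of the name
 * (trailing whitespace removed) and returns 0. Returns -1 if the line
 * has no leading index, no name, or a name too long for the buffer. */
static int parse_label_line(const char *line, int *index,
                            char *name, size_t name_size)
{
    char *end = NULL;
    long value = strtol(line, &end, 10);
    if (end == line || value < 0) {
        return -1; /* no digits at the start of the line */
    }

    /* Skip the whitespace between the index and the name. */
    while (*end != '\0' && isspace((unsigned char)*end)) {
        end++;
    }

    /* Drop the trailing newline or spaces. */
    size_t len = strlen(end);
    while (len > 0 && isspace((unsigned char)end[len - 1])) {
        len--;
    }
    if (len == 0 || len >= name_size) {
        return -1;
    }

    memcpy(name, end, len);
    name[len] = '\0';
    *index = (int)value;
    return 0;
}
```

The names recovered this way must be placed in the array in index order, since the network output at position i corresponds to the label with index i.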

From STM32CubeIDE, open fp_vision_app.c. Go to line 129, where g_food_classes is defined. We need to update this variable with our label names:

// fp_vision_app.c line 129

const char* g_food_classes[AI_NET_OUTPUT_SIZE] = {

"SensorTile", "IoTNode", "STLink", "Craddle Ext", "Fanout", "Background"};

5.4. Update the output format

In the original code, the neural network output is in floating point (ai_float). With Teachable Machine, the output is an 8-bit integer (ai_i8). We need to update the code accordingly.

In fp_vision_app.c, modify line 97 as follows:

// fp_vision_app.c line 97

// ai_float nn_output_buff[AI_NET_OUTPUT_SIZE] = {0}; // Old code

ai_i8 nn_output_buff[AI_NET_OUTPUT_SIZE] = {0}; // New code

Apply this change to fp_vision_app.h as well. You can open the file from the Include directory in the Project Explorer, as shown below.

// fp_vision_app.h line 48

//extern ai_float nn_output_buff[]; // Old code

extern ai_i8 nn_output_buff[]; // New code

5.5. Dequantize the output

We're almost done. The last thing we need to do is dequantize the output of the model. As we can see from the stm32ai report, the output is quantized as 8-bit signed integers with the following parameters:

scale = 0.00390625

zero_point = -128

The formula for converting quantized to real is the following:

𝑅𝑒𝑎𝑙 = 𝑆𝑐𝑎𝑙𝑒 × (𝑄𝑢𝑎𝑛𝑡𝑖𝑧𝑒𝑑 − 𝑍𝑒𝑟𝑜𝑃𝑜𝑖𝑛𝑡)
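As a minimal, standalone sketch of this formula (the helper name `dequantize_i8` is hypothetical and belongs neither to X-Cube-AI nor to the function pack), the conversion for a signed 8-bit value can be written as:

```c
#include <stdint.h>

/* Real = Scale * (Quantized - ZeroPoint) for a signed 8-bit value.
 * The subtraction is done on 32-bit integers so it cannot overflow
 * the 8-bit range. */
static float dequantize_i8(int8_t quantized, float scale, int32_t zero_point)
{
    return scale * (float)((int32_t)quantized - zero_point);
}
```

With scale = 0.00390625 (that is, 1/256) and zero_point = -128, the raw value -128 maps to 0.0, 0 maps to 0.5, and 127 maps to 0.99609375, so the dequantized outputs can be read as probabilities between 0 and 1.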

Let's apply this transformation to the code. Open main.c and go to the AI_Output_Display() function, located at line 562.

Just before the Bubblesort() call, we need to perform the dequantization. Add the following lines and update the Bubblesort() call as follows:

/* Added lines */

const ai_i32 zero_point = -128;

const ai_float scale = 0.00390625f;

ai_float float_output[NN_OUPUT_CLASS_NUMBER]; /* same macro spelling as in the rest of main.c */

for(int i = 0; i < NN_OUPUT_CLASS_NUMBER; i++){

float_output[i] = scale * ( (ai_float) (NN_OUTPUT_BUFFER[i]) - zero_point);

}

/* End added lines */

Bubblesort(float_output, ranking, NN_OUPUT_CLASS_NUMBER); /* Updated line */

We still need to make two modifications to this function:

- First, update the display mode by setting display_mode to 1 in order to see the image and label on the LCD display.

- Then use float_output in the display function (see below).

All the modifications are highlighted in the snippet below.

// main.c line 562

static void AI_Output_Display(void)

{

static uint32_t occurrence_number = NN_OUTPUT_DISPLAY_REFRESH_RATE;

static uint32_t display_mode=1; /* Updated line */

occurrence_number--;

if (occurrence_number == 0)

{

char msg[70];

int ranking[NN_OUPUT_CLASS_NUMBER];

occurrence_number = NN_OUTPUT_DISPLAY_REFRESH_RATE;

for (int i = 0; i < NN_OUPUT_CLASS_NUMBER; i++)

{

ranking[i] = i;

}

/* Added lines */

const ai_i32 zero_point = -128;

const ai_float scale = 0.00390625f;

ai_float float_output[NN_OUPUT_CLASS_NUMBER]; /* same macro spelling as in the rest of main.c */

for(int i = 0; i < NN_OUPUT_CLASS_NUMBER; i++){

float_output[i] = scale * ( (ai_float) (NN_OUTPUT_BUFFER[i]) - zero_point);

}

/* End added lines */

Bubblesort(float_output, ranking, NN_OUPUT_CLASS_NUMBER); /* Updated line */

/*Check if PB is pressed*/

if (BSP_PB_GetState(BUTTON_WAKEUP) != RESET)

{

display_mode = !display_mode;

BSP_LCD_Clear(LCD_COLOR_BLACK);

if (display_mode == 1)

{

sprintf(msg, "Entering CAMERA PREVIEW mode");

}

else if (display_mode == 0)

{

sprintf(msg, "Exiting CAMERA PREVIEW mode");

}

BSP_LCD_DisplayStringAt(0, LINE(9), (uint8_t*)msg, CENTER_MODE);

sprintf(msg, "Please release button");

BSP_LCD_DisplayStringAt(0, LINE(11), (uint8_t*)msg, CENTER_MODE);

LCD_Refresh();

/*Wait for PB release*/

while (BSP_PB_GetState(BUTTON_WAKEUP) != RESET);

HAL_Delay(200);

BSP_LCD_Clear(LCD_COLOR_BLACK);

}

if (display_mode == 0)

{

BSP_LCD_Clear(LCD_COLOR_BLACK);/*To clear the camera capture*/

DisplayFoodLogo(LCD_RES_WIDTH / 2 - 64, LCD_RES_HEIGHT / 2 -100, ranking[0]);

}

else if (display_mode == 1)

{

sprintf(msg, "CAMERA PREVIEW MODE");

BSP_LCD_DisplayStringAt(0, LINE(DISPLAY_ACQU_MODE_LINE), (uint8_t*)msg, CENTER_MODE);

}

for (int i = 0; i < NN_TOP_N_DISPLAY; i++)

{

sprintf(msg, "%s %.0f%%", NN_OUTPUT_CLASS_LIST[ranking[i]], float_output[i] * 100); /* Updated Line */

BSP_LCD_DisplayStringAt(0, LINE(DISPLAY_TOP_N_LAST_LINE - NN_TOP_N_DISPLAY + i), (uint8_t *)msg, CENTER_MODE);

}

sprintf(msg, "Inference: %ldms", *(NN_INFERENCE_TIME));

BSP_LCD_DisplayStringAt(0, LINE(DISPLAY_INFER_TIME_LINE), (uint8_t *)msg, CENTER_MODE);

sprintf(msg, "Fps: %.1f", 1000.0F / (float)(Tfps));

BSP_LCD_DisplayStringAt(0, LINE(DISPLAY_FPS_LINE), (uint8_t *)msg, CENTER_MODE);

LCD_Refresh();

/* (...) */
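The display loop prints NN_OUTPUT_CLASS_LIST[ranking[i]] next to float_output[i], which only makes sense if the sort reorders both arrays together. The sketch below illustrates that idea with a hypothetical function, `sort_descending_with_ranking`. It is not the function pack's own Bubblesort() implementation, only an assumption about the behavior the calling code relies on.

```c
#include <stddef.h>

/* Sort `prob` in descending order and apply the same swaps to `classes`,
 * so that classes[i] always holds the class index whose probability is
 * now stored in prob[i]. Plain bubble sort: fine for a handful of classes. */
static void sort_descending_with_ranking(float *prob, int *classes, size_t size)
{
    for (size_t pass = 0; pass + 1 < size; pass++) {
        for (size_t i = 0; i + 1 < size - pass; i++) {
            if (prob[i] < prob[i + 1]) {
                float p = prob[i];
                prob[i] = prob[i + 1];
                prob[i + 1] = p;

                int c = classes[i];
                classes[i] = classes[i + 1];
                classes[i + 1] = c;
            }
        }
    }
}
```

For example, sorting the probabilities {0.1, 0.7, 0.2} with the initial ranking {0, 1, 2} leaves the probabilities as {0.7, 0.2, 0.1} and the ranking as {1, 2, 0}, so the first displayed line is class 1 with 70%.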

5.6. Compile the project

The function pack for quantized models comes in 4 memory configurations:

- Quantized_Ext

- Quantized_Int_Fps

- Quantized_Int_Mem

- Quantized_Int_Split

As we saw in section X, the activation buffer takes more than 800 KB of RAM. For this reason, we can only use the Quantized_Ext configuration, which places the activation buffer in external memory. For more details on the memory configurations, refer to UM3232.

To compile only the Quantized_Ext configuration, select Project > Properties from the top bar. Then select C/C++ Build from the left pane. Click on manage configuration and delete all the configurations other than Quantized_Ext. You should be left with only one configuration.

Clean the project by selecting Project > Clean... and clicking Clean.

Finally, build the project by clicking on Project > Build All.

At the end of compilation, a file named STM32H747I_DISCO_CM7.hex is generated in

workspace > FP_AI_VISION1 > Projects > STM32H747I-DISCO > Applications > FoodReco_MobileNetDerivative > Quantized_Model > STM32CubeIDE > STM32H747I_DISCO > Quantized_Ext

5.7. Flash the board

Connect the STM32H747I-DISCO to your PC via a micro-USB to USB cable. Open STM32CubeProgrammer and connect to ST-LINK. Then flash the board with the hex file.