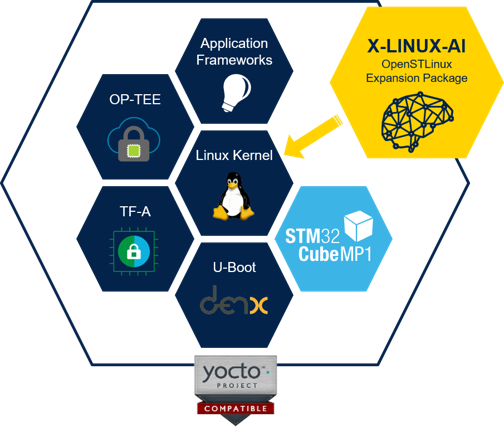

X-LINUX-AI is an STM32 MPU OpenSTLinux Expansion Package that targets artificial intelligence for STM32MP1 Series devices.

It contains Linux AI frameworks, as well as application examples to get started with some basic use cases such as computer vision (CV).

It is composed of an OpenEmbedded meta layer, named meta-st-stm32mpu-ai, to be added on top of the STM32MP1 Distribution Package.

It provides a complete and coherent environment, easy to build and install, to take advantage of AI on STM32MP1 Series devices.

1. Versions

1.1. X-LINUX-AI v2.1.0

1.1.1. Contents

- TensorFlow Lite[2] 2.4.1 (new)

- Coral Edge TPU[3] accelerator support

- libedgetpu 2.4.1, aligned with TensorFlow Lite 2.4.1 and built from source (new)

- armNN[4] 20.11 (new)

- OpenCV[5] 4.1.x (new)

- Python[6] 3.8.x, with the Pillow module enabled

- Support for STM32MP15xF[7] devices operating at up to 800 MHz

- Application samples:

  - C++ / Python image classification using TensorFlow Lite, based on the MobileNet v1 quantized model

  - C++ / Python object detection using TensorFlow Lite, based on the COCO SSD MobileNet v1 quantized model

  - C++ / Python image classification using the Coral Edge TPU, based on the MobileNet v1 quantized model compiled for the Coral Edge TPU

  - C++ / Python object detection using the Coral Edge TPU, based on the COCO SSD MobileNet v1 quantized model compiled for the Coral Edge TPU

  - C++ image classification using the armNN TensorFlow Lite parser, based on the MobileNet v1 float model

  - C++ object detection using the armNN TensorFlow Lite parser, based on the COCO SSD MobileNet v1 quantized model

  - C++ face recognition using TensorFlow Lite models, capable of recognizing the face of a known (enrolled) user (new, available on demand)

1.1.2. Validated hardware

Like any software expansion package, X-LINUX-AI is supported on all STM32MP1 Series devices, and it has been validated on the following boards:

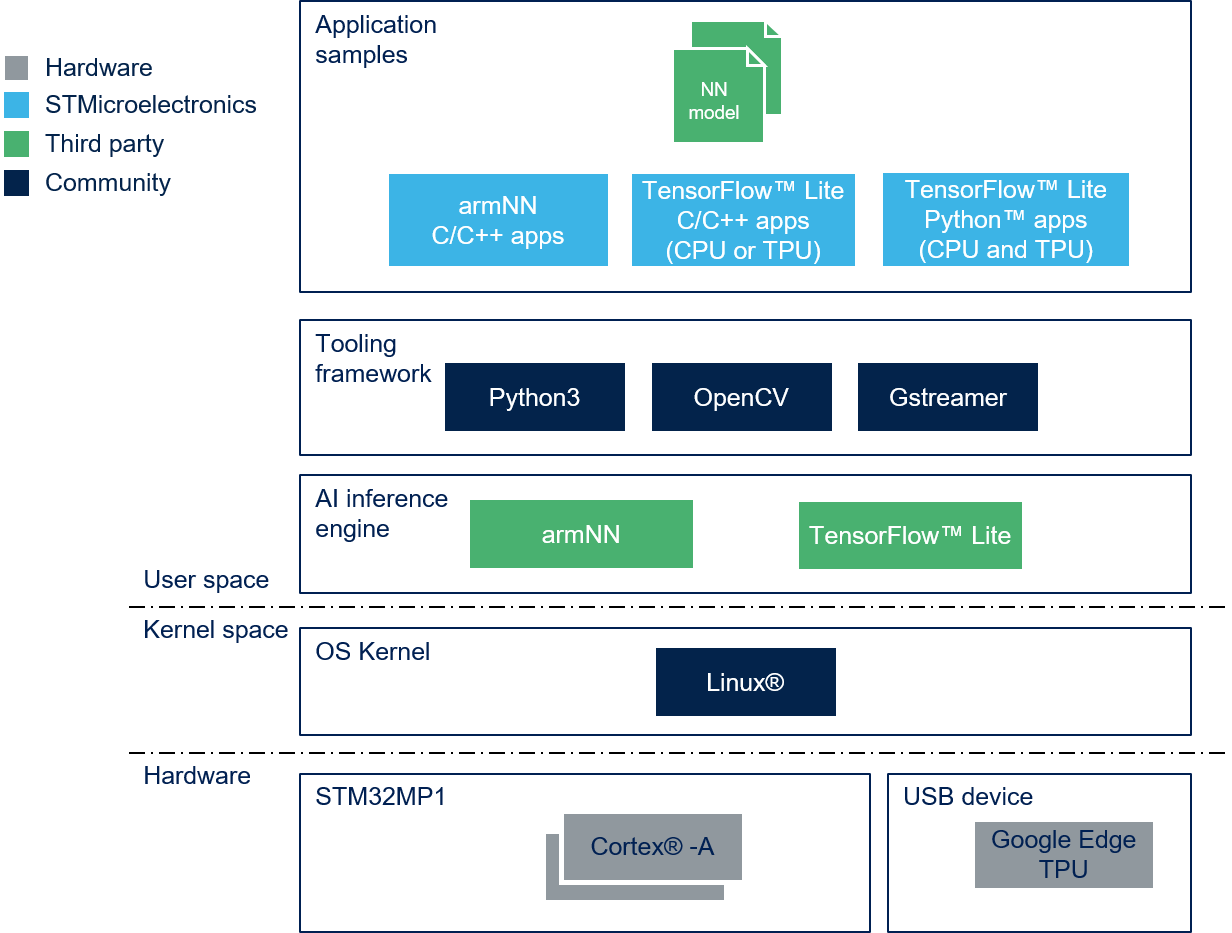

1.1.3. Software structure

1.2. X-LINUX-AI v2.0.0

2. Install from the OpenSTLinux AI package repository

All the generated X-LINUX-AI packages are available from the OpenSTLinux AI package repository service hosted at the non-browsable URL http://extra.packages.openstlinux.st.com/AI.

This repository contains AI packages that can be installed with the apt-* utilities, the same tools used on a Debian system. The packages are organized in two groups:

- the main group contains the selection of AI packages whose installation is automatically tested by STMicroelectronics;

- the updates group is reserved for future use, such as package revision updates.

You can install them individually or by package group.

2.1. Prerequisites

ST boards prerequisites:

Avenger96 board prerequisites:

2.2. Configure the AI OpenSTLinux package repository

Once the board has booted, run the following commands in the console to configure the AI OpenSTLinux package repository:

For ecosystem release v2.1.0:

wget http://extra.packages.openstlinux.st.com/AI/2.1/pool/config/a/apt-openstlinux-ai/apt-openstlinux-ai_1.0_armhf.deb

dpkg -i apt-openstlinux-ai_1.0_armhf.deb

For ecosystem release v2.0.0:

For ecosystem release v1.2.0:

Then synchronize the AI OpenSTLinux package repository.

apt-get update
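The repository configuration steps above can be grouped into a single script. The sketch below assumes a root shell on the board with wget, dpkg and apt-get available, and it only covers the v2.1.0 URL. The run helper and the APPLY variable are illustrative additions, not part of X-LINUX-AI: by default the script only prints each command, and it executes them when started with APPLY=1.

```shell
#!/bin/sh
# Sketch: configure the X-LINUX-AI v2.1.0 package repository on the board.
# Assumptions: root shell on the target; wget, dpkg and apt-get installed.
# Illustrative behavior: commands are printed only; set APPLY=1 to run them.
set -eu

AI_REPO_DEB="apt-openstlinux-ai_1.0_armhf.deb"
AI_REPO_URL="http://extra.packages.openstlinux.st.com/AI/2.1/pool/config/a/apt-openstlinux-ai/${AI_REPO_DEB}"

# Print a command, and execute it only when APPLY=1.
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

configure_ai_repo() {
    run wget "$AI_REPO_URL"      # download the repository configuration package
    run dpkg -i "$AI_REPO_DEB"   # install it to configure the AI package repository
    run apt-get update           # synchronize the package index
}

configure_ai_repo
```

Printing by default makes it easy to review the commands before anything on the board is modified; for example, APPLY=1 sh configure-ai-repo.sh (hypothetical file name) performs the actual configuration.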

2.3. Install AI packages

2.3.1. Install all X-LINUX-AI packages

| Command | Description |
|---|---|
| apt-get install packagegroup-x-linux-ai | Install all the X-LINUX-AI packages (TensorFlow Lite, Edge TPU, armNN, application samples and tools) |

2.3.3. Install individual packages

3. Re-generate X-LINUX-AI OpenSTLinux distribution

With the following procedure, you can re-generate the complete distribution enabling the X-LINUX-AI expansion package.

This procedure is required if you want to update the frameworks yourself or modify the application samples.
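As a rough, generic illustration only (not ST's documented procedure), enabling an OpenEmbedded layer such as meta-st-stm32mpu-ai in a Yocto build usually comes down to registering it with bitbake-layers add-layer and rebuilding an image. The sketch assumes an already initialized STM32MP1 Distribution Package build environment. The LAYER_PATH and IMAGE defaults are hypothetical placeholders, and the real procedure may need additional layers or settings. The run helper and APPLY variable are illustrative: commands are only printed unless APPLY=1 is set.

```shell
#!/bin/sh
# Generic sketch: add the X-LINUX-AI meta layer to a Yocto build and rebuild.
# Assumption: run from a build directory whose environment is already sourced.
# HYPOTHETICAL defaults: override LAYER_PATH and IMAGE with the real values.
set -eu

LAYER_PATH="${LAYER_PATH:-../layers/meta-st/meta-st-stm32mpu-ai}"  # hypothetical path
IMAGE="${IMAGE:-st-image-weston}"                                  # hypothetical image name

# Print a command, and execute it only when APPLY=1.
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

regenerate_distribution() {
    run bitbake-layers add-layer "$LAYER_PATH"   # register the layer in conf/bblayers.conf
    run bitbake "$IMAGE"                         # rebuild the image with the layer enabled
}

regenerate_distribution
```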


4. How to use the X-LINUX-AI Expansion Package

4.1. Material needed

To use the X-LINUX-AI OpenSTLinux Expansion Package, choose one of the following hardware setups:

- STM32MP157C-DK2[8] + a UVC USB webcam

- STM32MP157C-EV1[9] with the built-in OV5640 parallel camera

- STM32MP157A-EV1[10] with the built-in OV5640 parallel camera

- STM32MP157 Avenger96 board[1] + a UVC USB webcam or the OV5640 CSI Camera mezzanine board[11]

Optional:

- Coral USB Edge TPU[3] accelerator

4.2. Boot the OpenSTLinux Starter Package

At the end of the boot sequence, the demo launcher application appears on the screen.

4.3. Install the X-LINUX-AI

After configuring the AI OpenSTLinux package repository, you can install the X-LINUX-AI components:

apt-get install packagegroup-x-linux-ai

Then restart the demo launcher:

systemctl restart weston@root
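The install and restart steps can be combined into a small idempotent script. The sketch below assumes a root shell on the board and an already configured AI package repository. It uses dpkg-query to skip apt-get install when packagegroup-x-linux-ai is already installed, and adds -y so that apt-get does not prompt for confirmation. The run helper and APPLY variable are illustrative additions: commands are only printed unless APPLY=1 is set.

```shell
#!/bin/sh
# Sketch: install the X-LINUX-AI package group if missing, then restart the
# demo launcher. Assumptions: root shell; AI package repository configured.
# Illustrative behavior: commands are printed only; set APPLY=1 to run them.
set -eu

PKG="packagegroup-x-linux-ai"

# Print a command, and execute it only when APPLY=1.
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

# Succeed only when dpkg reports the package as fully installed.
is_installed() {
    dpkg-query -W -f='${Status}' "$1" 2>/dev/null | grep -q "install ok installed"
}

install_and_restart() {
    if is_installed "$PKG"; then
        echo "$PKG already installed"
    else
        run apt-get install -y "$PKG"
    fi
    run systemctl restart weston@root   # reload the launcher so new samples appear
}

install_and_restart
```

Checking the package status first lets the script be re-run safely: only the launcher restart is repeated.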

4.4. Launch an AI application sample

Once the demo launcher has restarted, it looks slightly different because new AI application samples have been installed.

In the demo launcher, use the NEXT and BACK buttons to navigate between the different screens.

Screens 2, 3 and 4 contain AI application samples, which are described in dedicated articles available from the X-LINUX-AI application samples zoo page.

4.5. Enjoy running your own NN models

The examples above are application samples that demonstrate how to easily execute models on the STM32MP1.

You are free to update the C/C++ applications or Python scripts for your own purposes, using your own NN models.

Source code locations are provided in the application sample pages.

5. References

- [1] Avenger96

- [2] TensorFlow Lite

- [3] Coral Edge TPU

- [4] armNN

- [5] OpenCV

- [6] Python

- [7] STM32MP1 Series

- [8] STM32MP157C-DK2

- [9] STM32MP157C-EV1

- [10] STM32MP157A-EV1

- [11] OV5640 CSI D3Camera board