This article explains how to get started with the TensorFlow Lite[1] face recognition application.

1. Description

The face recognition application is capable of recognizing the face of a known (i.e. enrolled) user.

The application demonstrates a computer vision use case for face recognition, where frames are grabbed from a camera input (/dev/videox) and processed by two neural network models (face detection and face recognition) interpreted by the TensorFlow Lite[1] framework.

A GStreamer pipeline is used to stream camera frames (using v4l2src), to display a preview (using waylandsink) and to execute neural network inference (using appsink).
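As a rough illustration of how these three elements can fit together, the sketch below builds a gst-launch style pipeline description as a string. Only v4l2src, waylandsink and appsink come from the description above. The tee, queue and videoconvert elements, the caps values, the appsink name nn_sink and the helper name build_pipeline are assumptions made for this example, and the pipeline actually used by the application may differ.

```python
def build_pipeline(device="/dev/video0", width=320, height=240, fps=15):
    """Return a gst-launch style description with one preview branch and
    one inference branch. Illustrative layout only, not the application's."""
    # RGB16 is the GStreamer format name for RGB565.
    caps = (
        f"video/x-raw,format=RGB16,width={width},"
        f"height={height},framerate={fps}/1"
    )
    return (
        f"v4l2src device={device} ! {caps} ! tee name=t "
        # Preview branch: frames go straight to the Wayland display sink.
        "t. ! queue ! waylandsink "
        # Inference branch: convert to RGB888 and hand frames to the application.
        "t. ! queue ! videoconvert ! video/x-raw,format=RGB ! "
        "appsink name=nn_sink max-buffers=1 drop=true"
    )


print(build_pipeline())
```

Setting max-buffers=1 and drop=true on the appsink is one way to keep the preview fluid when inference is slower than the camera, since stale frames are dropped instead of queued.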

The result of the inference is displayed in the preview. The overlay is done using GtkWidget with cairo.

This combination is quite simple and efficient in terms of CPU overhead.

1.1. Frame processing flow

The figure below shows the different frame processing stages involved in the face recognition application:

1.1.1. Camera capture

The camera frame capture has the following characteristics:

- Resolution is set to QVGA (320 x 240)

- Pixel color format is set to RGB565

1.1.2. Frame Preprocessing

The main preprocessing stage in the face recognition application is the pixel color format conversion, which converts the captured RGB565 frame into an RGB888 frame.
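The conversion itself is a bit-level expansion of each 16-bit pixel into three 8-bit channels. The following is a minimal sketch of that idea in plain Python. The function names and the byte-order parameter are assumptions for illustration, and the application may well perform this step differently (for example inside the GStreamer pipeline).

```python
def rgb565_to_rgb888(pixel):
    """Expand one 16-bit RGB565 pixel into an (r, g, b) tuple of 8-bit values."""
    r5 = (pixel >> 11) & 0x1F  # top 5 bits
    g6 = (pixel >> 5) & 0x3F   # middle 6 bits
    b5 = pixel & 0x1F          # bottom 5 bits
    # Replicate the high bits into the low bits so full scale maps to 255.
    r8 = (r5 << 3) | (r5 >> 2)
    g8 = (g6 << 2) | (g6 >> 4)
    b8 = (b5 << 3) | (b5 >> 2)
    return r8, g8, b8


def convert_frame(raw, little_endian=True):
    """Convert a bytes buffer of RGB565 pixels into packed RGB888 bytes."""
    order = "little" if little_endian else "big"
    out = bytearray()
    for i in range(0, len(raw), 2):
        pixel = int.from_bytes(raw[i:i + 2], order)
        out.extend(rgb565_to_rgb888(pixel))
    return bytes(out)


print(rgb565_to_rgb888(0xF800))  # (255, 0, 0)
```

A QVGA RGB565 frame of 320 x 240 x 2 bytes therefore becomes an RGB888 buffer of 320 x 240 x 3 bytes.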

1.1.3. Face Detection

The Face Detection block is in charge of finding the faces present in the input frame (QVGA, RGB888). In the current version of the application, the maximum number of faces that can be found is set to one. The output of this block is a frame of resolution 96 x 96 that contains the face found in the input captured frame.
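How the 96 x 96 output frame is produced from the detected face is not detailed here. One plausible approach, shown below purely as an assumption, is to crop the detected bounding box and rescale it with nearest-neighbour sampling. The function name, the (x, y, w, h) box layout and the list-of-rows frame representation are all hypothetical.

```python
def crop_and_resize(frame, box, size=96):
    """Crop box = (x, y, w, h) from frame (a list of rows of pixels) and
    resize it to size x size using nearest-neighbour sampling."""
    x, y, w, h = box
    out = []
    for row in range(size):
        # Map each output row/column back to a source pixel inside the box.
        src_y = y + (row * h) // size
        out.append([frame[src_y][x + (col * w) // size] for col in range(size)])
    return out


# Synthetic QVGA frame where each pixel stores its own (row, column) position.
frame = [[(r, c) for c in range(320)] for r in range(240)]
face = crop_and_resize(frame, (100, 60, 48, 48))
print(len(face), len(face[0]))  # 96 96
```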

1.1.4. Face Recognition

The Face Recognition block is in charge of extracting features from the face and computing a signature (embedding vector) corresponding to the input face.

1.1.5. Face Identification

The Face Identification block is in charge of computing the distance between:

- The vector produced by the Face Recognition block, and

- Each of the vectors stored in memory (and corresponding to the enrolled faces)

The Face Identification block generates the following two outputs:

- a User Face ID corresponding to the minimum distance

- a similarity score
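The identification step can be sketched as a nearest-neighbour search over the enrolled vectors. The distance metric is not specified in this article, so the example below assumes cosine similarity, for which the highest similarity corresponds to the smallest cosine distance. The function names and the dictionary used to hold enrolled faces are hypothetical. The 0.70 threshold matches the default value of the --reco_threshold option.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is null)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(embedding, enrolled, threshold=0.70):
    """Return (user_id, score) for the closest enrolled face, or
    (None, score) when the best score is below the threshold."""
    best_id, best_score = None, 0.0
    for user_id, reference in enrolled.items():
        score = cosine_similarity(embedding, reference)
        if best_id is None or score > best_score:
            best_id, best_score = user_id, score
    if best_id is None or best_score < threshold:
        return None, best_score
    return best_id, best_score


# Toy 3-dimensional vectors; real embeddings are much longer.
enrolled = {"alice": [1.0, 0.0, 0.0], "bob": [0.0, 1.0, 0.0]}
print(identify([0.9, 0.1, 0.0], enrolled))
print(identify([0.0, 0.0, 1.0], enrolled))
```

In this sketch the first query is matched to "alice", while the second is reported as unknown because its best similarity falls below the threshold.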

2. Installation

2.1. Install from the OpenSTLinux AI package repository

After having configured the AI OpenSTLinux package, you can install the X-LINUX-AI components for this application:

apt-get install tflite-cv-apps-face-recognition-c++

Then restart the demo launcher:

systemctl restart weston@root

3. How to use the application

3.1. Launching via the demo launcher

3.2. Executing with the command line

The facereco_tfl_gst_gtk C/C++ application is located in the userfs partition:

/usr/local/demo-ai/computer-vision/tflite-face-recognition/bin/facereco_tfl_gst_gtk

It accepts the following input parameters:

    Usage: ./facereco_tfl_gst_gtk [option]
    --reco_simultaneous_faces <val>: number of faces that could be recognized simultaneously (default is 1)
    --reco_threshold <val>: face recognition threshold for face similarity (default is 0.70 = 70%)
    --max_db_faces <val>: max number of faces to be stored in the data base (default is 200)
    -i --image <directory path>: image directory with image to be classified
    -v --video_device <n>: video device (default /dev/video0)
    --frame_width <val>: width of the camera frame (default is 640)
    --frame_height <val>: height of the camera frame (default is 480)
    --framerate <val>: framerate of the camera (default is 15fps)
    --help: show this help

- To launch face recognition on the pictures located in the /usr/local/demo-ai/computer-vision/tflite-face-recognition/testdata directory, run:

/usr/local/demo-ai/computer-vision/tflite-face-recognition/bin/launch_bin_facereco_tfl_model_testdata.sh

3.3. In practice

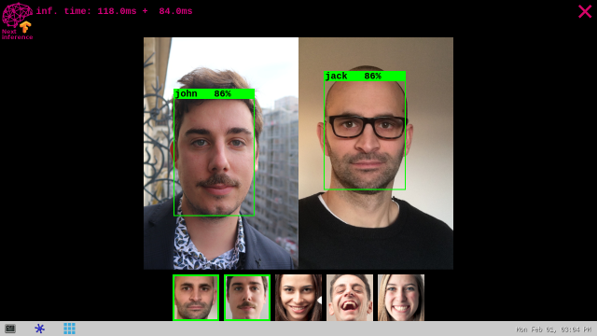

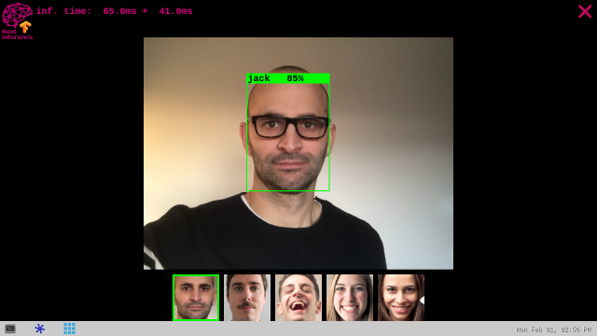

As soon as a face is detected within the camera captured frame, a rectangle box is drawn around it.

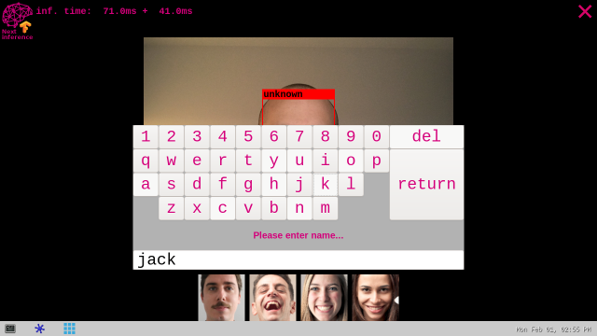

If the system is not able to match the detected face with one of the enrolled faces (either because the user face is not yet enrolled or because the Face Identification similarity score is lower than the default recognition threshold), the rectangle box is drawn in red with the unknown identity.

To enroll a new user, touch (or click) inside the red rectangle. The virtual keyboard is then displayed so that the user name can be entered. To finish the enrollment process, press the return key. Note that the face picture is captured when the red rectangle is touched (or clicked).

If the system is able to match the detected face with one of the enrolled faces, the rectangle box is drawn in green and the registered user name is displayed with the similarity score expressed as a percentage (%). The thumbnail (representing the user's enrolled face matching the detected face) is displayed and highlighted with a green rectangle in the banner located at the bottom of the preview.

The information displayed at the top of the preview provides performance figures:

- disp. fps is the average framerate of the preview expressed in frames per second

- inf. fps is the average framerate for both face detection and face recognition inferences grouped together

- inf. time is the instant measure of inference time for the face detection processing and the face recognition processing.
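The difference between an averaged framerate and an instantaneous time measurement can be sketched as follows. This is only an illustration of the two kinds of figure. The class and function names, and the choice of a sliding window of 30 timestamps, are assumptions and do not reflect how the application computes its counters.

```python
import time
from collections import deque


class FrameRateMeter:
    """Average frames per second over the most recent `window` timestamps."""

    def __init__(self, window=30):
        self.stamps = deque(maxlen=window)

    def tick(self, now):
        """Record one frame event at time `now`, in seconds."""
        self.stamps.append(now)

    def fps(self):
        """Number of frame intervals divided by the time they span."""
        if len(self.stamps) < 2:
            return 0.0
        elapsed = self.stamps[-1] - self.stamps[0]
        return (len(self.stamps) - 1) / elapsed if elapsed > 0 else 0.0


def timed_ms(func, *args):
    """Run func once and return (result, elapsed time in milliseconds)."""
    start = time.perf_counter()
    result = func(*args)
    return result, (time.perf_counter() - start) * 1000.0


meter = FrameRateMeter()
for i in range(6):
    meter.tick(i * 0.2)  # one frame every 200 ms
print(round(meter.fps(), 2))
```

Calling tick() once per displayed frame would give a figure comparable to disp. fps, calling it once per completed inference would give inf. fps, and timed_ms() corresponds to a single inf. time measurement.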

3.4. Performances

The average framerate when running both face detection and face recognition on one face is around 5 FPS:

- face detection execution time ~70 ms

- face recognition execution time ~55 ms

The default recognition threshold is set to 0.70. On the TAR = f(FAR) curve plotted using the LFW dataset, this threshold corresponds to a FAR of ~1%. TAR stands for True Acceptance Rate: it represents the rate at which the system correctly matches biometric information from the same person. FAR stands for False Acceptance Rate: it is the probability that the system incorrectly accepts an unauthorized person.
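To make the TAR and FAR definitions concrete, the sketch below computes both rates for a given threshold from two lists of similarity scores: one for pairs of pictures of the same person (genuine) and one for pairs of different people (impostor). The function name is hypothetical, the scores are made-up toy values rather than LFW results, and accepting a pair when its score is greater than or equal to the threshold is an assumption.

```python
def tar_far(genuine_scores, impostor_scores, threshold=0.70):
    """Return (TAR, FAR) for a similarity threshold.

    TAR is the fraction of genuine pairs accepted.
    FAR is the fraction of impostor pairs accepted.
    """
    accepted_genuine = sum(1 for s in genuine_scores if s >= threshold)
    accepted_impostor = sum(1 for s in impostor_scores if s >= threshold)
    tar = accepted_genuine / len(genuine_scores)
    far = accepted_impostor / len(impostor_scores)
    return tar, far


# Toy scores for illustration only.
genuine = [0.91, 0.85, 0.72, 0.66]
impostor = [0.10, 0.35, 0.55, 0.71]
print(tar_far(genuine, impostor))  # (0.75, 0.25)
```

Sweeping the threshold from 0 to 1 and plotting the resulting (FAR, TAR) pairs gives the TAR = f(FAR) curve. Raising the threshold lowers the FAR at the cost of a lower TAR.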

4. References