This article describes the content of the X-LINUX-AI-CV Expansion Package and explains how to use it.

1. Description

X-LINUX-AI-CV is the STM32 MPU OpenSTLinux Expansion Package that targets artificial intelligence for computer vision.

This package contains AI and computer vision frameworks, as well as application examples to get started with some basic use cases.

1.1. Current version

X-LINUX-AI-CV v1.1.0

1.2. Contents

- TensorFlow Lite[1] 2.0.0

- OpenCV[2] 3.4.x

- Python[3] 3.5.x (enabling Pillow module)

- Python application examples:

  - Image classification example based on the MobileNet v1 model
  - Object detection example based on the COCO SSD MobileNet v1 model

- C/C++ application examples:

  - Image classification example based on the MobileNet v1 model
  - Object detection example based on the COCO SSD MobileNet v1 model

- Support of the STM32MP157 Avenger96 board[4] + OV5640 CSI Camera mezzanine board[5]

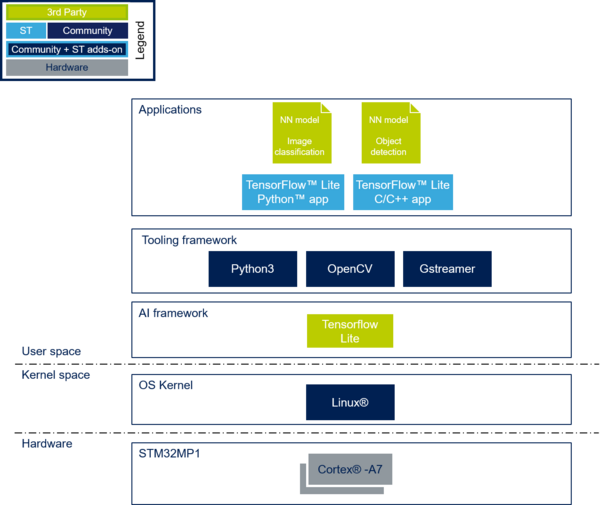

1.3. Software structure

1.4. Supported hardware

Like any software expansion package, X-LINUX-AI-CV is supported on all STM32MP1 Series devices and is compatible with the following boards:

2. How to use the X-LINUX-AI-CV Expansion Package

2.1. Software installation

Refer to the STM32MP1 artificial intelligence expansion packages article to build and install the X-LINUX-AI-CV software.

2.2. Material needed

To use the X-LINUX-AI-CV OpenSTLinux Expansion Package, one of the following hardware setups is needed:

- STM32MP157C-DK2[6] + a UVC USB webcam

- STM32MP157C-EV1[7] with the built-in camera

- STM32MP157A-EV1[8] with the built-in camera

- STM32MP157 Avenger96 board[4] + a UVC USB webcam or the OV5640 CSI Camera mezzanine board[5]

3. AI application examples

Application examples are provided in two flavors:

- C/C++ application

- Python application

3.1. C/C++ TensorFlow Lite applications

This part provides information about the C/C++ application examples based on TensorFlow Lite, GStreamer and OpenCV.

The applications integrate a camera preview and test data pictures, which are fed as inputs to the chosen TensorFlow Lite model.

Two C/C++ application examples are available and are described below:

- Image classification example based on MobileNet v1 model

- Object detection example based on COCO SSD MobileNet v1 model

3.1.1. Image classification application

3.1.1.1. Description

The image classification[9] neural network model identifies the subject represented in an image by assigning the image to one of several classes.

The label_tfl_multiprocessing.py Python script (located in the userfs partition: /usr/local/demo-ai/ai-cv/python/label_tfl_multiprocessing.py) is a multi-process Python application for image classification.

The application handles OpenCV camera streaming (or test data pictures) and runs the TensorFlow Lite interpreter, which performs the NN inference on the camera frames (or test data pictures).

The user interface is implemented using Python GTK.

3.1.1.2. How to use it

The Python script label_tfl_multiprocessing.py accepts the following input parameters:

- `-i`, `--image`: image directory with images to be classified
- `-v`, `--video_device`: video device (default /dev/video0)
- `--frame_width`: width of the camera frame (default is 320)
- `--frame_height`: height of the camera frame (default is 240)
- `--framerate`: framerate of the camera (default is 30fps)
- `-m`, `--model_file`: TFLite model to be executed
- `-l`, `--label_file`: name of the file containing labels
- `--input_mean`: input mean
- `--input_std`: input standard deviation
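The option list above maps naturally onto Python's argparse module. The following is a minimal, hypothetical reconstruction of such a parser, not the actual source of label_tfl_multiprocessing.py: the documented defaults are reproduced, while the empty defaults for -i, -m and -l (camera mode when -i is omitted) and the 127.5 defaults for --input_mean and --input_std are assumptions.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the documented options of the script."""
    parser = argparse.ArgumentParser(
        description="Image classification with TensorFlow Lite (sketch)")
    # Assumption: an empty image directory means "use the camera".
    parser.add_argument("-i", "--image", default="",
                        help="image directory with images to be classified")
    parser.add_argument("-v", "--video_device", default="/dev/video0",
                        help="video device (default /dev/video0)")
    parser.add_argument("--frame_width", type=int, default=320,
                        help="width of the camera frame (default is 320)")
    parser.add_argument("--frame_height", type=int, default=240,
                        help="height of the camera frame (default is 240)")
    parser.add_argument("--framerate", type=int, default=30,
                        help="framerate of the camera (default is 30fps)")
    parser.add_argument("-m", "--model_file", default="",
                        help="tflite model to be executed")
    parser.add_argument("-l", "--label_file", default="",
                        help="name of file containing labels")
    # Assumption: 127.5 maps 0..255 pixel values to -1..1 for float models.
    parser.add_argument("--input_mean", type=float, default=127.5,
                        help="input mean")
    parser.add_argument("--input_std", type=float, default=127.5,
                        help="input standard deviation")
    return parser


args = build_parser().parse_args(["-m", "model.tflite", "-l", "labels.txt"])
print(args.video_device, args.frame_width, args.frame_height)
```

Any option left out on the command line falls back to its default, so the call above runs in camera mode with a 320x240 frame.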

3.1.1.3. Testing with MobileNet V1

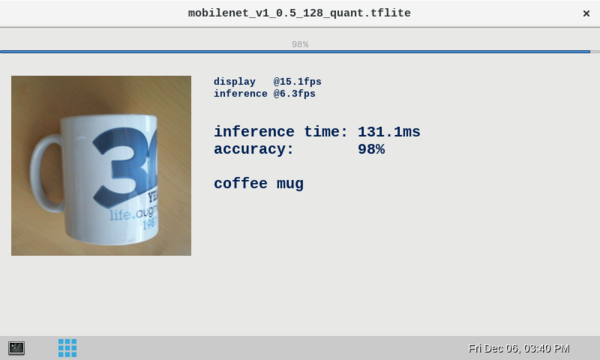

3.1.1.3.1. Default model: MobileNet V1 0.5 128 quant

The default model used for tests is mobilenet_v1_0.5_128_quant.tflite, downloaded from the TensorFlow Lite hosted models[10].

To ease launching of the Python script, two shell scripts are available:

- Launch image classification based on camera frame inputs:

  `/usr/local/demo-ai/ai-cv/python/launch_python_label_tfl_mobilenet.sh`

- Launch image classification based on the pictures located in the /usr/local/demo-ai/ai-cv/models/mobilenet/testdata directory:

  `/usr/local/demo-ai/ai-cv/python/launch_python_label_tfl_mobilenet_testdata.sh`
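The _quant suffix of the default model denotes a quantized model, whose tensors hold uint8 values rather than floats. TensorFlow Lite's quantization scheme maps such a value q back to a real number as real = scale * (q - zero_point); in the TensorFlow Lite Python API the (scale, zero_point) pair of a tensor is exposed in the "quantization" entry of interpreter.get_output_details(). The plain-Python sketch below shows the conversion in both directions; the scale of 1/256 in the usage lines is illustrative and not read from the actual model.

```python
def dequantize(values, scale, zero_point):
    """Convert quantized integers to real values: real = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in values]


def quantize(values, scale, zero_point, qmin=0, qmax=255):
    """Convert real values to quantized integers, clamped to [qmin, qmax].

    The default range matches an 8-bit unsigned (uint8) tensor.
    Note: Python's round() rounds halves to the nearest even integer.
    """
    return [min(qmax, max(qmin, round(v / scale) + zero_point)) for v in values]


# Illustrative parameters only, not read from mobilenet_v1_0.5_128_quant.tflite.
scale, zero_point = 1 / 256, 0
print(dequantize([0, 128, 255], scale, zero_point))  # [0.0, 0.5, 0.99609375]
print(quantize([0.0, 0.5, 2.0], scale, zero_point))  # [0, 128, 255]
```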

3.1.1.3.2. Testing another MobileNet v1 model

You can test other models by downloading them directly to the STM32MP1 board. For example:

Download and extract the model archive:

`curl http://download.tensorflow.org/models/mobilenet_v1_2018_02_22/mobilenet_v1_1.0_224_quant.tgz | tar xzv -C /usr/local/demo-ai/ai-cv/models/mobilenet/`

Then run the classification script with the new model on the test data pictures:

`python3 /usr/local/demo-ai/ai-cv/python/label_tfl_multiprocessing.py -m /usr/local/demo-ai/ai-cv/models/mobilenet/mobilenet_v1_1.0_224_quant.tflite -l /usr/local/demo-ai/ai-cv/models/mobilenet/labels.txt -i /usr/local/demo-ai/ai-cv/models/mobilenet/testdata/`
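Hosted MobileNet v1 files follow the naming pattern mobilenet_v1_<width multiplier>_<input resolution>, with a _quant suffix for quantized variants: mobilenet_v1_1.0_224_quant expects 224x224 inputs, whereas the default mobilenet_v1_0.5_128_quant expects 128x128 inputs. The helper below is a hypothetical utility, not part of the package, that extracts these values from a file name (pass only the base name, not the full path), which can help when switching between models.

```python
import re

# Hypothetical helper for names like "mobilenet_v1_<alpha>_<resolution>[_quant].tflite".
_MOBILENET_V1_NAME = re.compile(
    r"^mobilenet_v1_(?P<alpha>\d+(?:\.\d+)?)_(?P<size>\d+)(?P<quant>_quant)?\.tflite$"
)


def parse_mobilenet_v1_name(filename):
    """Return (width_multiplier, input_size, is_quantized) for a MobileNet v1 file name."""
    match = _MOBILENET_V1_NAME.match(filename)
    if match is None:
        raise ValueError(f"unexpected MobileNet v1 file name: {filename!r}")
    return (
        float(match.group("alpha")),
        int(match.group("size")),
        match.group("quant") is not None,
    )


print(parse_mobilenet_v1_name("mobilenet_v1_1.0_224_quant.tflite"))  # (1.0, 224, True)
print(parse_mobilenet_v1_name("mobilenet_v1_0.5_128_quant.tflite"))  # (0.5, 128, True)
```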

3.1.1.4. Testing with your own model

The label_tfl_multiprocessing.py Python script works with image classification models in the TensorFlow Lite format. Any model with a .tflite extension, together with its label file, can be used with the label_tfl_multiprocessing.py Python script.

You are free to adapt the label_tfl_multiprocessing.py Python script to fit your needs.
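Pairing a model with a label file usually means that line i of the label file names output class i. A minimal sketch of that convention, assuming one label per line in the same order as the model's output scores (an assumption about the file format, not a documented requirement of the script), together with a top-k selection over the scores:

```python
import heapq


def load_labels(path):
    """Read one label per line; the line index is the class index."""
    with open(path, encoding="utf-8") as f:
        # splitlines() keeps empty lines, so indices stay aligned with the model output.
        return [line.strip() for line in f.read().splitlines()]


def top_k(scores, labels, k=5):
    """Return the k best (label, score) pairs, highest score first."""
    best = heapq.nlargest(k, range(len(scores)), key=scores.__getitem__)
    return [(labels[i], scores[i]) for i in best]


scores = [0.05, 0.70, 0.25]            # e.g. dequantized model output
labels = ["background", "cat", "dog"]  # e.g. load_labels("labels.txt")
print(top_k(scores, labels, k=2))      # [('cat', 0.7), ('dog', 0.25)]
```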

3.1.2. Object detection application

3.1.2.1. Description

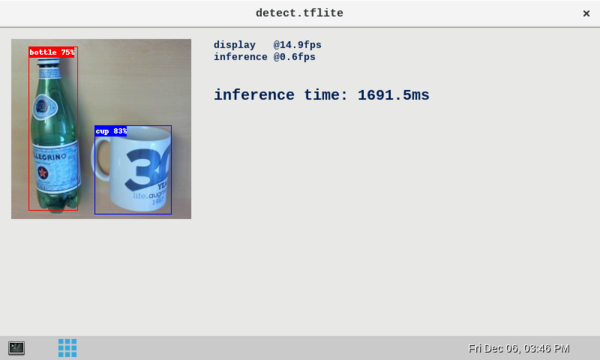

The object detection[11] neural network model identifies and localizes known objects within an image.

The objdetect_tfl_multiprocessing.py Python script (located in the userfs partition: /usr/local/demo-ai/ai-cv/python/objdetect_tfl_multiprocessing.py) is a multi-process Python application for object detection.

The application handles OpenCV camera streaming (or test data pictures) and runs the TensorFlow Lite interpreter, which performs the NN inference on the camera frames (or test data pictures).

The user interface is implemented using Python GTK.
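According to the TensorFlow Lite object detection overview[11], the starter detect.tflite model outputs four tensors: box locations as [top, left, bottom, right] coordinates normalized to the 0 to 1 range, class indices, confidence scores, and the number of detections. The sketch below shows one way to turn these outputs into pixel rectangles for a camera frame; the function name and the 0.5 score threshold are assumptions, not values taken from objdetect_tfl_multiprocessing.py.

```python
def to_pixel_detections(boxes, classes, scores, count,
                        frame_width, frame_height, threshold=0.5):
    """Convert normalized SSD outputs into (class_id, score, (left, top, right, bottom)).

    boxes holds [top, left, bottom, right] values in the 0..1 range.
    threshold=0.5 is an assumed default, not a value from the application.
    """
    def clamp(v):
        return min(1.0, max(0.0, v))

    detections = []
    for i in range(int(count)):
        if scores[i] < threshold:
            continue  # drop low-confidence detections
        top, left, bottom, right = (clamp(v) for v in boxes[i])
        rect = (int(left * frame_width), int(top * frame_height),
                int(right * frame_width), int(bottom * frame_height))
        detections.append((int(classes[i]), scores[i], rect))
    return detections


# Two raw detections for a 320x240 camera frame (the default frame size).
boxes = [[0.1, 0.25, 0.5, 0.75], [0.0, 0.0, 1.0, 1.0]]
print(to_pixel_detections(boxes, [0.0, 2.0], [0.9, 0.3], 2, 320, 240))
# [(0, 0.9, (80, 24, 240, 120))]
```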

3.1.2.2. How to use it

The Python script objdetect_tfl_multiprocessing.py accepts the following input parameters:

- `-i`, `--image`: image directory with images to be classified
- `-v`, `--video_device`: video device (default /dev/video0)
- `--frame_width`: width of the camera frame (default is 320)
- `--frame_height`: height of the camera frame (default is 240)
- `--framerate`: framerate of the camera (default is 30fps)
- `-m`, `--model_file`: TFLite model to be executed
- `-l`, `--label_file`: name of the file containing labels
- `--input_mean`: input mean
- `--input_std`: input standard deviation

3.1.2.3. Testing with COCO SSD MobileNet v1

The model used for tests is detect.tflite, downloaded from the TensorFlow Lite object detection overview[11].

To ease launching of the Python script, two shell scripts are available:

- Launch object detection based on camera frame inputs:

  `/usr/local/demo-ai/ai-cv/python/launch_python_objdetect_tfl_coco_ssd_mobilenet.sh`

- Launch object detection based on the pictures located in the /usr/local/demo-ai/ai-cv/models/coco_ssd_mobilenet/testdata directory:

  `/usr/local/demo-ai/ai-cv/python/launch_python_objdetect_tfl_coco_ssd_mobilenet_testdata.sh`

3.2. Python TensorFlow Lite applications

This part provides information about the Python application examples based on TensorFlow Lite and OpenCV.

The applications integrate a camera preview and test data pictures, which are fed as inputs to the chosen TensorFlow Lite model.
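When a test data directory is passed with -i, the application has to select the pictures to feed to the model. A minimal sketch using pathlib, assuming (hypothetically) that only JPEG, PNG and BMP files are relevant and that they are processed in name order; the accepted suffixes and the function name are illustrative, not taken from the scripts.

```python
from pathlib import Path

# Assumed set of accepted picture formats (compared case-insensitively).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def list_test_images(directory):
    """Return the picture files found directly in `directory`, sorted by name."""
    return sorted(
        path for path in Path(directory).iterdir()
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES
    )
```

For example, list_test_images("/usr/local/demo-ai/ai-cv/models/mobilenet/testdata") would return the pictures of the default test data directory that match the assumed suffixes; subdirectories and other files are skipped.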

Two Python application examples are available and are described below:

- Image classification example based on MobileNet v1 model

- Object detection example based on COCO SSD MobileNet v1 model

3.2.1. Image classification application

3.2.1.1. Description

The image classification[9] neural network model identifies the subject represented in an image by assigning the image to one of several classes.

The label_tfl_multiprocessing.py Python script (located in the userfs partition: /usr/local/demo-ai/ai-cv/python/label_tfl_multiprocessing.py) is a multi-process Python application for image classification.

The application handles OpenCV camera streaming (or test data pictures) and runs the TensorFlow Lite interpreter, which performs the NN inference on the camera frames (or test data pictures).

The user interface is implemented using Python GTK.
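Camera frames (320x240 by default) do not match the input size of the default MobileNet model (128x128), so each frame has to be resized before inference. The application presumably relies on OpenCV for this step; the pure-Python nearest-neighbor version below is not taken from the package and only illustrates the index mapping on an image stored as a list of rows.

```python
def resize_nearest(image, out_width, out_height):
    """Nearest-neighbor resize of an image given as a list of rows.

    Output pixel (x, y) copies input pixel (x * in_w // out_w, y * in_h // out_h).
    Pixels can be scalars or tuples (for example RGB triplets).
    """
    in_height = len(image)
    in_width = len(image[0])
    return [
        [image[y * in_height // out_height][x * in_width // out_width]
         for x in range(out_width)]
        for y in range(out_height)
    ]


# A 4x4 single-channel "frame" whose pixel values encode their position.
frame = [[4 * row + col for col in range(4)] for row in range(4)]
print(resize_nearest(frame, 2, 2))  # [[0, 2], [8, 10]]
```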

3.2.1.2. How to use it

The Python script label_tfl_multiprocessing.py accepts the following input parameters:

- `-i`, `--image`: image directory with images to be classified
- `-v`, `--video_device`: video device (default /dev/video0)
- `--frame_width`: width of the camera frame (default is 320)
- `--frame_height`: height of the camera frame (default is 240)
- `--framerate`: framerate of the camera (default is 30fps)
- `-m`, `--model_file`: TFLite model to be executed
- `-l`, `--label_file`: name of the file containing labels
- `--input_mean`: input mean
- `--input_std`: input standard deviation
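The --input_mean and --input_std options correspond to the normalization commonly applied to floating-point models, as in TensorFlow Lite's own label_image.py example: each pixel value p becomes (p - input_mean) / input_std. Quantized models such as the default mobilenet_v1_0.5_128_quant.tflite typically take raw uint8 pixels instead; in the TensorFlow Lite Python API the expected type can be read from the "dtype" entry of interpreter.get_input_details(). A minimal sketch, where the function name and the 127.5 defaults (mapping 0..255 to -1..1) are assumptions:

```python
def prepare_input(pixels, floating_model, input_mean=127.5, input_std=127.5):
    """Return model-ready values for a flat sequence of 0..255 pixel values."""
    if floating_model:
        # Float models: normalize each value with the mean and standard deviation.
        return [(p - input_mean) / input_std for p in pixels]
    # Quantized (uint8) models: pass raw pixel values through unchanged.
    return [int(p) for p in pixels]


print(prepare_input([0, 127.5, 255], floating_model=True))  # [-1.0, 0.0, 1.0]
print(prepare_input([0, 128, 255], floating_model=False))   # [0, 128, 255]
```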

3.2.1.3. Testing with MobileNet V1

3.2.1.3.1. Default model: MobileNet V1 0.5 128 quant

The default model used for tests is mobilenet_v1_0.5_128_quant.tflite, downloaded from the TensorFlow Lite hosted models[10].

To ease launching of the Python script, two shell scripts are available:

- Launch image classification based on camera frame inputs:

  `/usr/local/demo-ai/ai-cv/python/launch_python_label_tfl_mobilenet.sh`

- Launch image classification based on the pictures located in the /usr/local/demo-ai/ai-cv/models/mobilenet/testdata directory:

  `/usr/local/demo-ai/ai-cv/python/launch_python_label_tfl_mobilenet_testdata.sh`

3.2.1.3.2. Testing another MobileNet v1 model

You can test other models by downloading them directly to the STM32MP1 board. For example:

Download and extract the model archive:

`curl http://download.tensorflow.org/models/mobilenet_v1_2018_02_22/mobilenet_v1_1.0_224_quant.tgz | tar xzv -C /usr/local/demo-ai/ai-cv/models/mobilenet/`

Then run the classification script with the new model on the test data pictures:

`python3 /usr/local/demo-ai/ai-cv/python/label_tfl_multiprocessing.py -m /usr/local/demo-ai/ai-cv/models/mobilenet/mobilenet_v1_1.0_224_quant.tflite -l /usr/local/demo-ai/ai-cv/models/mobilenet/labels.txt -i /usr/local/demo-ai/ai-cv/models/mobilenet/testdata/`

3.2.1.4. Testing with your own model

The label_tfl_multiprocessing.py Python script works with image classification models in the TensorFlow Lite format. Any model with a .tflite extension, together with its label file, can be used with the label_tfl_multiprocessing.py Python script.

You are free to adapt the label_tfl_multiprocessing.py Python script to fit your needs.
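Since any .tflite classification model can be paired with a label file, a quick consistency check before running your own model can save debugging time: with the one-label-per-line convention, the number of labels should match the number of output classes. In the TensorFlow Lite Python API that class count would come from the last dimension of interpreter.get_output_details()[0]["shape"]; the helper below is a hypothetical sketch that takes the count as a plain integer.

```python
from pathlib import Path


def check_model_and_labels(model_path, labels, num_classes):
    """Return a list of problems found (empty when the pair looks consistent)."""
    problems = []
    if Path(model_path).suffix != ".tflite":
        problems.append(f"{model_path}: expected a .tflite file")
    if len(labels) != num_classes:
        problems.append(
            f"label file has {len(labels)} entries "
            f"but the model outputs {num_classes} classes"
        )
    return problems


print(check_model_and_labels("my_model.tflite", ["cat", "dog"], 2))  # []
print(check_model_and_labels("my_model.pb", ["cat", "dog"], 3))
```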

3.2.2. Object detection application

3.2.2.1. Description

The object detection[11] neural network model identifies and localizes known objects within an image.

The objdetect_tfl_multiprocessing.py Python script (located in the userfs partition: /usr/local/demo-ai/ai-cv/python/objdetect_tfl_multiprocessing.py) is a multi-process Python application for object detection.

The application handles OpenCV camera streaming (or test data pictures) and runs the TensorFlow Lite interpreter, which performs the NN inference on the camera frames (or test data pictures).

The user interface is implemented using Python GTK.

3.2.2.2. How to use it

The Python script objdetect_tfl_multiprocessing.py accepts the following input parameters:

- `-i`, `--image`: image directory with images to be classified
- `-v`, `--video_device`: video device (default /dev/video0)
- `--frame_width`: width of the camera frame (default is 320)
- `--frame_height`: height of the camera frame (default is 240)
- `--framerate`: framerate of the camera (default is 30fps)
- `-m`, `--model_file`: TFLite model to be executed
- `-l`, `--label_file`: name of the file containing labels
- `--input_mean`: input mean
- `--input_std`: input standard deviation

3.2.2.3. Testing with COCO SSD MobileNet v1

The model used for tests is detect.tflite, downloaded from the TensorFlow Lite object detection overview[11].

To ease launching of the Python script, two shell scripts are available:

- Launch object detection based on camera frame inputs:

  `/usr/local/demo-ai/ai-cv/python/launch_python_objdetect_tfl_coco_ssd_mobilenet.sh`

- Launch object detection based on the pictures located in the /usr/local/demo-ai/ai-cv/models/coco_ssd_mobilenet/testdata directory:

  `/usr/local/demo-ai/ai-cv/python/launch_python_objdetect_tfl_coco_ssd_mobilenet_testdata.sh`

4. Enjoy running your own CNN

The two examples above are application samples that demonstrate how to easily execute TensorFlow Lite CNNs on the STM32MP1.

You are free to adapt the Python scripts for your own purposes, using your own TensorFlow Lite CNN models.

5. References

1. TensorFlow Lite
2. OpenCV
3. Python
4. Avenger96 board
5. [OV5640 CSI D3Camera board](https://www.96boards.org/product/d3camera/)
6. STM32MP157C-DK2
7. STM32MP157C-EV1
8. STM32MP157A-EV1
9. TFLite image classification overview
10. TFLite hosted models
11. TFLite object detection overview