This article describes the support for Deep Quantized Neural Networks (DQNNs) in X-CUBE-AI. The same documentation is also available in the embedded documentation installed with X-CUBE-AI, which is guaranteed to match the installed version of X-CUBE-AI.

1. Overview

The stm32ai application can be used to deploy a pretrained DQNN model designed and trained with the QKeras or Larq libraries. The purpose of this article is to highlight the supported configurations and limitations, so that an efficient and optimized C-inference model can be deployed on STM32 targets. For detailed explanations and recommendations on designing a DQNN model, refer to the respective user guide or the provided notebook.

2. Deeply quantized neural network?

A quantized model generally refers to a model that uses an 8-bit signed or unsigned integer data format to encode each weight and activation. After an optimization and quantization process (Post-Training Quantization, PTQ, or Quantization-Aware Training, QAT), a floating-point network can be deployed with arithmetic on smaller integers, which is more efficient in terms of computational resources. DQNN denotes models that use a bit width of less than 8 bits to encode some weights, activations, or both. Mixed data types (hybrid layers) can also be considered for a given operator (for example, binary weights with 8-bit signed integer or 32-bit floating-point activations), allowing a trade-off between accuracy and precision on one side and peak memory usage on the other. To ensure performance, the QKeras and Larq libraries train only in a quantization-aware manner (QAT).

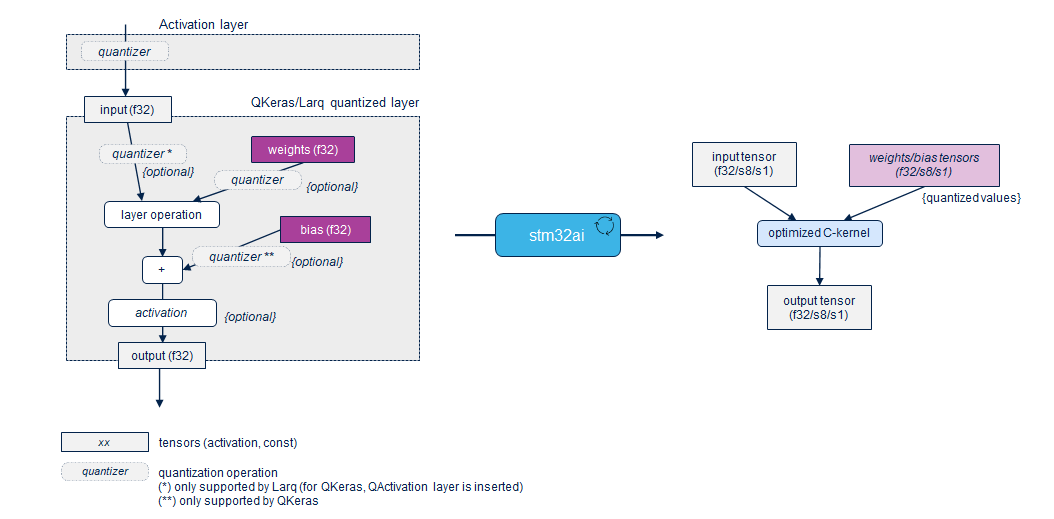

Each library is designed as an extension of the high-level Keras API (custom layers) that provides an easy way to quickly create a deeply quantized version of an original Keras network. As shown in the left part of the following figure, based on the concepts of quantized layers and quantizers, the user can transform a full-precision layer by describing how to quantize its incoming activations and weights. Note that the quantized layers are fully compatible with the Keras API, so they can be used interchangeably with Keras layers. This property allows the design of mixed models in which some layers are kept in float.

Note that, beyond a classical quantized model (8-bit format only), a DQNN model can be considered an advanced optimization approach, or an alternative, to deploy a model in resource-constrained environments such as an STM32 device without significant loss of accuracy. “Advanced” means that designing this type of model is, by construction, not straightforward.

3. 1-bit and 8-bit signed format support

The Arm® Cortex®-M instructions and the required data manipulations (such as the pack and unpack operations during memory transfers) do not allow an efficient implementation for all combinations of data types. The stm32ai application focuses primarily on implementations that improve peak memory usage (flash memory, RAM, or both) and reduce latency (execution time), which means that no size-only optimization option is supported. Therefore, only the 32-bit float, 8-bit signed, and binary signed (1-bit) data types are considered by the code generator to deploy the optimized C-kernels (see the “Optimized C-kernel configurations” section). Otherwise, if possible, a fallback to a 32-bit floating-point C-kernel is used, with quantize or dequantize operations inserted before or after it.

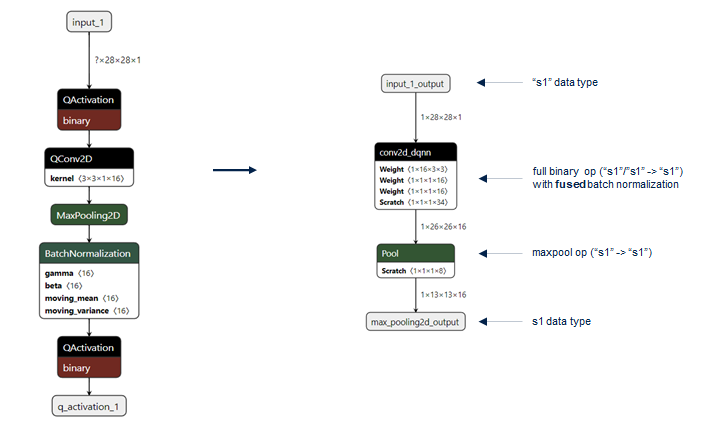

Figure 1 from quantized layer to deployed operator:

- The data type of the input tensors is defined by the 'input_quantizer' argument for Larq, or inferred from the data type of the incoming operators (Larq or QKeras).

- The data type of the output tensors is inferred from the outgoing operator chain.

- When the input, output, and weight tensors are quantized with a classical 8-bit integer scheme (as for TensorFlow™ Lite quantized models), the respective optimized int8 C-kernel implementations are used. The same applies to full floating-point operators.

4. QKeras library

QKeras[Ex 1] is a quantization extension framework developed by Google®. It provides drop-in replacements for some of the Keras layers, especially the ones that create parameters and activation layers, and perform arithmetic operations. With QKeras, it is possible to quickly create a deeply quantized version of a Keras network. QKeras is designed to extend the functionality of Keras following the Keras design principles: it is user friendly, modular, and extensible, and its additions are “minimally intrusive” with respect to the native Keras functionality. It also provides the QTools and AutoQKeras tools, which help the user deploy a quantized model on a specific hardware implementation or treat quantization as a hyperparameter search in a KerasTuner environment.

```python
import tensorflow as tf
import qkeras

...

x = tf.keras.Input(shape=(28, 28, 1))
# 8-bit unsigned input quantization (inputs expected between 0.0 and 1.0)
y = qkeras.QActivation(qkeras.quantized_relu(bits=8, integer=0))(x)
# Convolution with binarized weights, without bias
y = qkeras.QConv2D(16, (3, 3),
                   kernel_quantizer=qkeras.binary(alpha=1),
                   bias_quantizer=qkeras.binary(alpha=1),
                   use_bias=False,
                   name="conv2d_0")(y)
y = tf.keras.layers.MaxPooling2D(pool_size=(2, 2))(y)
y = tf.keras.layers.BatchNormalization()(y)
# Binarized output activations
y = qkeras.QActivation(qkeras.binary(alpha=1))(y)
...
model = tf.keras.Model(inputs=x, outputs=y)
```

4.1. Supported QKeras quantizers and layers

- QActivation

- QBatchNormalization

- QConv2D

- QConv2DTranspose

  - the 'padding' parameter must be 'valid'

  - 'strides' must be '(1, 1)'

- QDense

  - only a 2D input shape is supported: [batch_size, input_dim]. A rank greater than 2 is not supported; a Flatten layer must be added before the QDense operator

- QDepthwiseConv2D

The following quantizers and associated configurations are supported:

| Quantizer | Comments and limitations |
|---|---|
| quantized_bits() | only 8-bit size (bits=8) is supported |
| quantized_relu() | only 8-bit size (bits=8) is supported |
| quantized_tanh() | only 8-bit size (bits=8) is supported |
| binary() | only supported in signed version (use_01=False), without scale (alpha=1) |
| stochastic_binary() | only supported in signed version (use_01=False), without scale (alpha=1) |

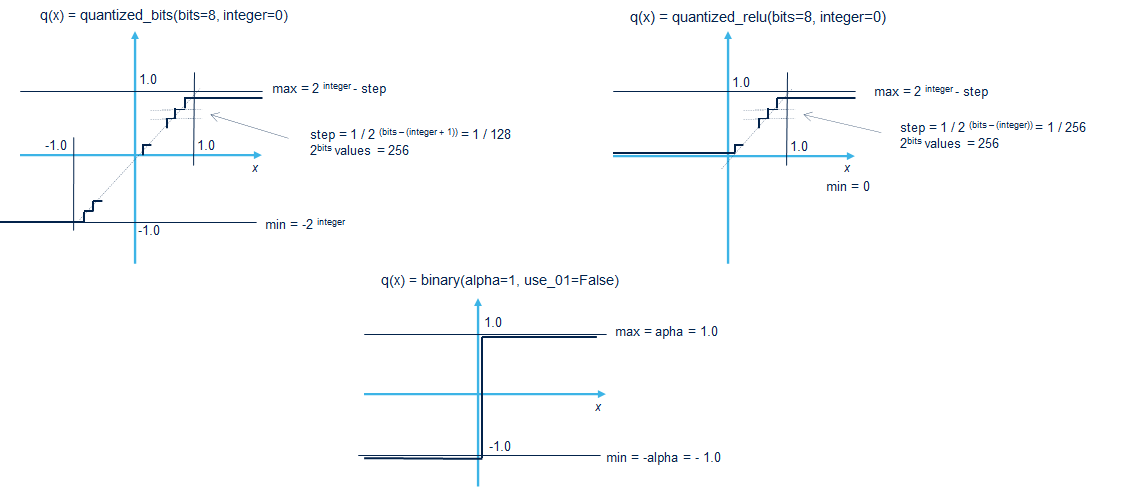

Figure 2 QKeras quantizers:

- Typically, the 'quantized_relu()' quantizer can be used to quantize inputs that are normalized between '0.0' and '1.0'. Note that 'quantized_relu(bits=8, integer=8)' can be considered if the input values range between '0.0' and '256.0'.

```python
x = tf.keras.Input(shape=(..))
y = qkeras.QActivation(qkeras.quantized_relu(bits=8, integer=0))(x)
y = qkeras.QConv2D(..)(y)
...
```

- The 'quantized_bits()' quantizer can be used to quantize inputs that are normalized between '-1.0' and '1.0'. Note that 'quantized_bits(bits=8, integer=7)' can be considered if the input values range between '-128.0' and '127.0'.

```python
x = tf.keras.Input(shape=(..))
y = qkeras.QActivation(qkeras.quantized_bits(bits=8, integer=0, symmetric=0, alpha=1))(x)
y = qkeras.QConv2D(..)(y)
...
```

- To have a fully binarized operation without bias, with a normalized and binarized output:

```python
...
# Binarized input
y = qkeras.QActivation(qkeras.binary(alpha=1))(y)
# Binarized weights, no bias
y = qkeras.QConv2D(..,
                   kernel_quantizer="binary(alpha=1)",
                   bias_quantizer=qkeras.binary(alpha=1),
                   use_bias=False,
                   )(y)
y = tf.keras.layers.MaxPooling2D(...)(y)
y = tf.keras.layers.BatchNormalization()(y)
# Normalized and binarized output
y = qkeras.QActivation(qkeras.binary(alpha=1))(y)
...
```
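The input ranges quoted above for 'quantized_relu()' and 'quantized_bits()' can be related to a plain fixed-point reading of the 'bits' and 'integer' arguments: 'integer' counts the integer bits (the sign bit excluded) and the remaining bits hold the fraction. The following Python sketch assumes this simplified model only; the helper 'fixed_point_range' is hypothetical, and QKeras options such as 'alpha', 'symmetric' or stochastic rounding are ignored.

```python
from typing import Tuple


def fixed_point_range(bits: int, integer: int, signed: bool) -> Tuple[float, float, float]:
    """Return (min, max, step) of a simplified fixed-point grid.

    Assumes `integer` integer bits, one extra sign bit when `signed` is True,
    and all remaining bits used for the fractional part.
    """
    frac_bits = bits - integer - (1 if signed else 0)
    if frac_bits < 0:
        raise ValueError("bits too small for the requested integer part")
    step = 2.0 ** -frac_bits
    codes = 2 ** bits  # number of representable values
    if signed:
        return -(codes // 2) * step, (codes // 2 - 1) * step, step
    return 0.0, (codes - 1) * step, step


for label, args in [
    ("quantized_bits(bits=8, integer=0)", (8, 0, True)),
    ("quantized_bits(bits=8, integer=7)", (8, 7, True)),
    ("quantized_relu(bits=8, integer=0)", (8, 0, False)),
    ("quantized_relu(bits=8, integer=8)", (8, 8, False)),
]:
    print(label, fixed_point_range(*args))
```

Under this model, 'quantized_bits(bits=8, integer=0)' covers -1.0 to 0.9921875 in steps of 1/128, and 'quantized_relu(bits=8, integer=8)' covers 0.0 to 255.0 in unit steps, one step below the 256.0 bound quoted above.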

5. Larq library

Larq[Ex 2] is an open-source Python™ library for training neural networks with extremely low-precision weights and activations, such as Binarized Neural Networks (BNNs). The approach is similar to that of the QKeras library, with a primary focus on BNN models. To deploy the trained model, a specific, highly optimized inference engine (Larq Compute Engine, LCE) is also provided for various mobile platforms.

```python
import tensorflow as tf
import larq as lq

...

x = tf.keras.Input(shape=(28, 28, 1))
y = tf.keras.layers.Flatten()(x)
# First layer: float input, binarized weights
y = lq.layers.QuantDense(
    512,
    kernel_quantizer="ste_sign",
    kernel_constraint="weight_clip")(y)
# Second layer: binarized input and weights
y = lq.layers.QuantDense(
    10,
    input_quantizer="ste_sign",
    kernel_quantizer="ste_sign",
    kernel_constraint="weight_clip")(y)
y = tf.keras.layers.Activation("softmax")(y)
...
model = tf.keras.Model(inputs=x, outputs=y)
```

5.1. Supported Larq layers

- QuantConv2D

  - for binary quantization, 'pad_values=-1 or 1' is required if 'padding="same"'

- QuantDense

  - the 'DoReFa(..)' quantizer is not supported

  - only a 2D input shape is supported: [batch_size, input_dim]. A rank greater than 2 is not supported; a Flatten layer must be added before the QuantDense operator

- QuantDepthwiseConv2D

  - for binary quantization, 'pad_values=-1 or 1' is required if 'padding="same"'

  - the 'DoReFa(..)' quantizer is not supported

Only the following quantizers and associated configurations are supported. Larq quantizers are fully described in the corresponding Larq documentation section[Ex 3]:

| Quantizer | Comments / limitations |
|---|---|
| 'SteSign' | used for binary quantization |
| 'ApproxSign' | used for binary quantization |
| 'SwishSign' | used for binary quantization |
| 'DoReFa' | only 8-bit size (k_bit=8) is supported for the QuantConv2D layer |

- Typically, the 'DoReFa(k_bit=8, mode="activations")' quantizer can be used to quantize inputs that are normalized between '0.0' and '1.0'. Note that 'DoReFa(k_bit=8, mode="weights")' quantizes the weights between '-1.0' and '1.0'.

```python
import tensorflow as tf
import larq

x = tf.keras.Input(shape=(..))
y = larq.layers.QuantConv2D(..,
                            input_quantizer=larq.quantizers.DoReFa(k_bit=8, mode="activations"),
                            kernel_quantizer=larq.quantizers.DoReFa(k_bit=8, mode="weights"),
                            use_bias=False,
                            )(x)
...
```
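In 'activations' mode, DoReFa clips its input to [0.0, 1.0] and rounds it onto 2^k_bit - 1 uniform steps, which is why it fits inputs normalized to that interval. The sketch below reproduces only this forward mapping, as defined in the DoReFa-Net formulation; it is not Larq's implementation (the straight-through gradient and the 'weights' mode are left out), and 'dorefa_activation' is a hypothetical name.

```python
def dorefa_activation(x: float, k_bit: int = 8) -> float:
    """Forward pass of a k-bit DoReFa-style activation quantizer (sketch)."""
    n = 2 ** k_bit - 1                # number of uniform steps in [0, 1]
    clipped = min(max(x, 0.0), 1.0)   # clip to the [0.0, 1.0] input range
    return round(clipped * n) / n     # snap to the nearest quantization level


print([dorefa_activation(v) for v in (-0.3, 0.0, 0.25, 1.0, 1.7)])
```

Values outside [0.0, 1.0] saturate, so inputs that are not normalized lose information before they reach the convolution.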

6. Optimized C-kernel configurations

6.1. Implementation conventions

This section shows the optimized data-type combinations, using the following naming convention:

- f32 identifies the absence of quantization (namely 32-bit floating point)

- s8 refers to the 8-bit signed quantizers

- s1 refers to the binary signed quantizers

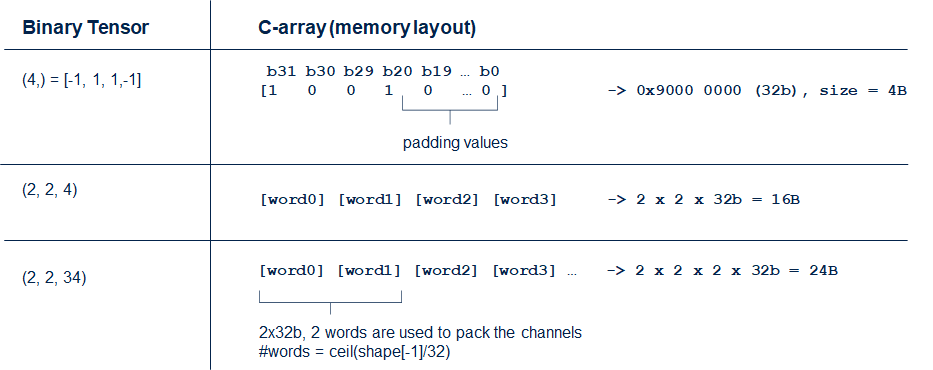

6.2. C-layout of the s1 type

Elements of binary activation tensors are packed into 32-bit words along the last dimension ('axis=-1') with the following rules:

- bit order: little or MSB first

- pad value: '0b'

- a positive value is coded with '0b', while a negative value is coded with '1b'
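The packing rules can be illustrated with the following Python sketch for a single vector taken along the last axis. It assumes that the first element goes to the most significant bit of the first word (one possible reading of “MSB first”); 'pack_s1' and its 'msb_first' flag are hypothetical, and the actual bit order should be checked against the generated C code.

```python
from typing import List, Sequence

WORD_BITS = 32


def pack_s1(values: Sequence[float], msb_first: bool = True) -> List[int]:
    """Pack binary values into 32-bit words following the s1 rules above (sketch).

    A negative value is coded as bit 1 and any other value as bit 0; unused
    bits of the last word keep the pad value 0.
    """
    n_words = (len(values) + WORD_BITS - 1) // WORD_BITS
    words = [0] * n_words
    for i, v in enumerate(values):
        if v < 0:  # only negative values set a bit
            word, pos = divmod(i, WORD_BITS)
            shift = WORD_BITS - 1 - pos if msb_first else pos
            words[word] |= 1 << shift
    return words


vec = [-1, 1, 1, -1] + [1] * 30  # 34 values along the last axis
print([f"0x{w:08X}" for w in pack_s1(vec)])
```

Here 34 values need two 32-bit words, and the 30 unused bits of the second word keep the pad value '0b'.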

6.3. Quantized Dense layers

| input format | output format | weight format | bias format (1) | notes |
|---|---|---|---|---|
| s1 | s1 | s1 | s1 | (2) |
| s1 | s1 | s1 | f32 | (2) |
| s1 | s1 | s8 | s1 | (2) |
| s1 | s8 | s1 | s8 | (2) |
| s1 | s8 | s8 | s8 | (2) |
| s1 | f32 | s1 | s1 | (2) |
| s1 | f32 | s1 | f32 | (2) |
| s1 | f32 | s8 | s8 | (2) |
| s1 | f32 | f32 | f32 | (2) |
| s8 | s1 | s1 | s1 | (2) |
| s8 | s8 | s1 | s1 | (2) |
| s8 | f32 | s1 | s1 | (2) |
| s8 | s8 | s8 | s8 | (2), biases are stored in s32 format |
| s8 | s8 | s8 | s32 | (2), int8-tflite kernels |
| f32 | s1 | s1 | s1 | (2) |
| f32 | s1 | s1 | f32 | (2) |
| f32 | f32 | s1 | s1 | (2) |
| f32 | f32 | s1 | f32 | (2) |

(1) usage of the bias is optional

(2) batch-normalization can be fused
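For the s1 rows, the multiply-accumulate of two ±1 vectors reduces to bitwise logic: with the s1 coding (bit '1b' for a negative value), the product of two elements is -1 exactly when their bits differ, so the dot product equals n - 2 × popcount(a XOR b). The Python sketch below checks this identity; it packs the signs into one arbitrary-precision integer instead of 32-bit words for brevity, the helper names are hypothetical, and it is only an assumption that the 'sxor_s1_s1' operations reported by the analyze command count work of this kind.

```python
import random
from typing import Sequence


def pack_signs(values: Sequence[int]) -> int:
    """Pack +1/-1 values into one integer: bit i is 1 when values[i] is negative."""
    bits = 0
    for i, v in enumerate(values):
        if v < 0:
            bits |= 1 << i
    return bits


def binary_dot(a_bits: int, b_bits: int, n: int) -> int:
    """Dot product of two packed +1/-1 vectors of length n.

    Equal bits contribute +1 and differing bits -1, hence n - 2 * mismatches.
    """
    mismatches = bin(a_bits ^ b_bits).count("1")  # popcount of the XOR
    return n - 2 * mismatches


random.seed(0)
n = 70  # deliberately not a multiple of 32
a = [random.choice((-1, 1)) for _ in range(n)]
b = [random.choice((-1, 1)) for _ in range(n)]
reference = sum(x * y for x, y in zip(a, b))
assert binary_dot(pack_signs(a), pack_signs(b), n) == reference
print("XOR/popcount result:", reference)
```

Because unused bits are '0b' in both operands, they never register as mismatches, which is why n is the true vector length rather than the padded one.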

6.4. Optimized Convolution layers

| input format | output format | weight format | bias format (1) | notes |
|---|---|---|---|---|
| s1 | s1 | s1 | s1 | (2), (3), including pointwise and depthwise versions |
| s1 | s8 | s1 | s8 | (2), including pointwise version |
| s1 | s8 | s1 | f32 | (2), including pointwise version |
| s1 | f32 | s1 | f32 | (2), including pointwise version |
| s8 | s1 | s8 | | |
| s8 (DoReFa) | s1 | s8 (DoReFa) | | (2) |
| s8 | s8 | s8 | s8 | (2), biases are stored in s32 format |
| s8 | s8 | s8 | s32 | (2), int8-tflite kernels |

(1) usage of the bias is optional

(2) batch-normalization can be fused

(3) maxpool can be inserted between the convolution and the batch-normalization operators
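One common way to fuse a batch normalization followed by a binary quantizer (not necessarily the one used by the stm32ai code generator) is to fold it into a per-channel threshold on the integer accumulator of the binary convolution. For a positive scale gamma, sign(gamma × (x - mean) / sqrt(var + eps) + beta) is +1 exactly when x ≥ tau, with tau = mean - beta × sqrt(var + eps) / gamma; for a negative gamma the comparison flips. The sketch below checks this numerically; the helper names are hypothetical, and it assumes that a zero input to the sign maps to +1.

```python
import math
import random
from typing import Tuple


def bn_sign(x: float, gamma: float, beta: float, mean: float, var: float,
            eps: float = 1e-3) -> int:
    """Reference path: batch normalization followed by a sign (0 maps to +1)."""
    y = gamma * (x - mean) / math.sqrt(var + eps) + beta
    return 1 if y >= 0 else -1


def fold_threshold(gamma: float, beta: float, mean: float, var: float,
                   eps: float = 1e-3) -> Tuple[float, bool]:
    """Precompute the threshold tau and the comparison direction."""
    if gamma == 0:
        raise ValueError("gamma == 0 gives a constant sign; handle it separately")
    tau = mean - beta * math.sqrt(var + eps) / gamma
    return tau, gamma > 0


def folded_sign(x: float, tau: float, gamma_positive: bool) -> int:
    """Fused path: one comparison of the accumulator against tau."""
    if gamma_positive:
        return 1 if x >= tau else -1
    return 1 if x <= tau else -1


# Compare both paths on integer accumulators, as produced by a binary convolution.
random.seed(1)
for _ in range(200):
    gamma = random.choice((-1.0, 1.0)) * random.uniform(0.1, 2.0)
    beta = random.uniform(-2.0, 2.0)
    mean = random.uniform(-10.0, 10.0)
    var = random.uniform(0.1, 5.0)
    tau, positive = fold_threshold(gamma, beta, mean, var)
    for acc in range(-20, 21):
        assert folded_sign(acc, tau, positive) == bn_sign(acc, gamma, beta, mean, var)
print("folded threshold matches batch normalization + sign")
```

The fused form replaces a multiply, an add, and a sign per output element with a single comparison against a precomputed constant.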

6.5. Miscellaneous layers

The following layers are also available to support more complex topologies, for example with residual connections.

| layer | input format | output format | notes |
|---|---|---|---|
| maxpool | s1 | s1 | s8/f32 data types are also supported by the “standard” C-kernels |
| concat | s1 | s1 | s8/f32 data types are also supported by the “standard” C-kernels |

7. Evidence of efficient code generation

Similar to the qkeras.print_qstats() function or the extended summary() function in Larq, the analyze command reports, for each generated C-layer, the number of operations according to the data type. The number of operations per type for the entire generated C-model is also reported. This last information shows whether the deployed model is entirely or partially based on the optimized binarized or quantized C-kernels.

The following example shows that about 90% of the operations are binary operations, most of them coming from the "quant_conv2d_1_conv2d" layer.

```
$ stm32ai <model_file.h5>
...
params #          : 93,556 items (365.45 KiB)
macc              : 2,865,718
weights (ro)      : 14,496 B (14.16 KiB) (1 segment) / -359,728(-96.1%) vs float model
activations (rw)  : 86,528 B (84.50 KiB) (1 segment)
ram (total)       : 89,704 B (87.60 KiB) = 86,528 + 3,136 + 40
...

Number of operations and param per C-layer
-------------------------------------------------------------------------------------------
c_id  m_id  name (type)                                  #op (type)
-------------------------------------------------------------------------------------------
0     2     quant_conv2d_conv2d (conv2d)                 194,720 (smul_f32_f32)
1     3     quant_conv2d_1_conv (conv)                   43,264 (conv_f32_s1)
2     1     max_pooling2d (pool)                         21,632 (op_s1_s1)
3     3     quant_conv2d_1_conv2d (conv2d_dqnn)          2,230,272 (sxor_s1_s1)
4     5     max_pooling2d_1 (pool)                       6,400 (op_s1_s1)
5     7     quant_conv2d_2_conv2d (conv2d_dqnn)          331,776 (sxor_s1_s1)
6     10    quant_dense_quantdense (dense_dqnn_dqnn)     36,864 (sxor_s1_s1)
7     13    quant_dense_1_quantdense (dense_dqnn_dqnn)   640 (sxor_s1_s1)
8     15    activation (nl)                              150 (op_f32_f32)
-------------------------------------------------------------------------------------------
total                                                    2,865,718

Number of operation types
---------------------------------------------
smul_f32_f32        194,720      6.8%
conv_f32_s1          43,264      1.5%
op_s1_s1             28,032      1.0%
sxor_s1_s1        2,599,552     90.7%
op_f32_f32              150      0.0%
```
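The “Number of operation types” summary is the per-layer #op column grouped by operation type. The following Python sketch recomputes it from the figures copied from the report above, and also checks the weights line against a float baseline of 4 bytes per parameter; it only reproduces the arithmetic and is not part of the tool.

```python
from collections import Counter

# (layer name, #op, op type) copied from the per-C-layer report above.
layers = [
    ("quant_conv2d_conv2d", 194_720, "smul_f32_f32"),
    ("quant_conv2d_1_conv", 43_264, "conv_f32_s1"),
    ("max_pooling2d", 21_632, "op_s1_s1"),
    ("quant_conv2d_1_conv2d", 2_230_272, "sxor_s1_s1"),
    ("max_pooling2d_1", 6_400, "op_s1_s1"),
    ("quant_conv2d_2_conv2d", 331_776, "sxor_s1_s1"),
    ("quant_dense_quantdense", 36_864, "sxor_s1_s1"),
    ("quant_dense_1_quantdense", 640, "sxor_s1_s1"),
    ("activation", 150, "op_f32_f32"),
]

# Group the per-layer counts by operation type (first-seen order is kept).
per_type = Counter()
for _, ops, op_type in layers:
    per_type[op_type] += ops
total = sum(per_type.values())

for op_type, ops in per_type.items():
    print(f"{op_type:<14}{ops:>12,}{100 * ops / total:6.1f}%")
print(f"{'total':<14}{total:>12,}")

# Weights: the same parameters stored as 32-bit floats vs the reported size.
params = 93_556
float_weights = 4 * params   # 374,224 bytes
packed_weights = 14_496      # "weights (ro)" of the deployed model
saved = float_weights - packed_weights
print(f"saved {saved:,} B ({100 * saved / float_weights:.1f}%)")
```

The printed shares match the report (90.7% for 'sxor_s1_s1'), and the saving comes out at 359,728 B, or 96.1%, as in the 'weights (ro)' line.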

8. Example of supported patterns

As already mentioned, the stm32ai application infers the data type of the input and output tensors from the data type of the incoming and outgoing chains, respectively. This section illustrates the typical patterns considered to deploy an optimized C-kernel.

8.1. Activation layer

The activation layer (including the QActivation layer) is mainly supported by fusing it with the previous or the following layer. The supported arguments are the supported quantizer configurations.

```python
x = tf.keras.Input(shape=(..))
y = qkeras.QActivation(qkeras.binary(alpha=1))(x)
y = qkeras.QConv2D(..)(y)
...
```

is equivalent, from the code-generation point of view, to:

```python
x = tf.keras.Input(shape=(..))
y = larq.layers.QuantConv2D(..,
                            input_quantizer='ste_sign', ..)(x)
...
```

8.2. Quantized dense layer

Dense layer patterns are sequences of layers composed of:

- an optional QActivation to specify the input format. If missing, the input format is f32

- the QDense layer. The use of bias is optional

- an optional additional layer, for instance (Q)BatchNormalization, which can be merged into the previous dense layer

- an optional QActivation to specify the output format. If missing, the output format is f32

The first example illustrates the case where an 8-bit quantized input (s8 with 7 integer bits, that is, values in the [-128, 127] range, as described for 'quantized_bits()' above) is used by a QDense layer with 1-bit quantized (binary) weights and bias. The output is normalized and in f32.

```python
import tensorflow as tf
import qkeras

x = tf.keras.Input(shape=shape_in)
# s8 input
y = qkeras.QActivation(qkeras.quantized_bits(bits=8, integer=7))(x)
y = tf.keras.layers.Flatten()(y)
# Binary weights and bias
y = qkeras.QDense(16,
                  kernel_quantizer=qkeras.binary(alpha=1),
                  bias_quantizer=qkeras.binary(alpha=1),
                  use_bias=True,
                  name="dense_0")(y)
y = tf.keras.layers.BatchNormalization()(y)
# No output QActivation: the output stays in f32
y = tf.keras.layers.Activation("softmax")(y)
model = tf.keras.Model(inputs=x, outputs=y)
```

```
Number of operations and param per C-layer
----------------------------------------------------------------------------
c_id  m_id  name (type)                        #op (type)
----------------------------------------------------------------------------
0     2     conv2d_0_conv2d (conv2d_dqnn)      97,344 (sxor_s1_s1)
1     3     max_pooling2d (pool)               10,816 (op_s1_s1)
----------------------------------------------------------------------------
total                                          108,160

Number of operation types
---------------------------------------------
sxor_s1_s1        97,344     90.0%
op_s1_s1          10,816     10.0%
```

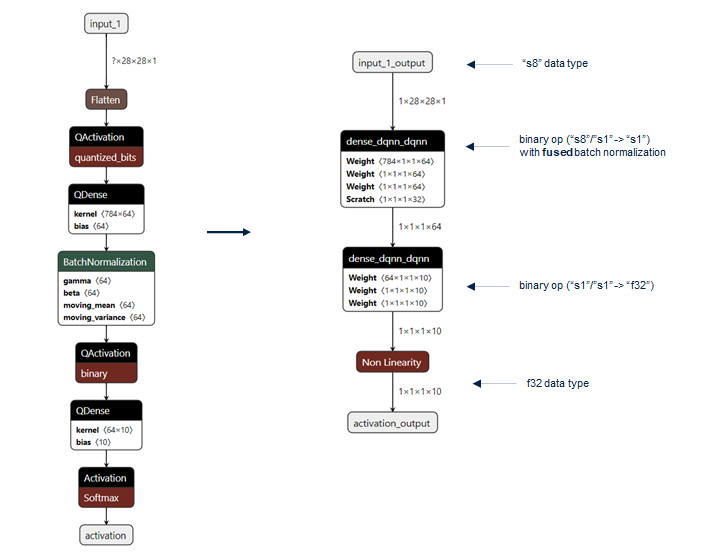

The second example shows the case where two dense layers are used. The first one uses binary weights with an 8-bit quantized input to compute a binary output. The second one uses binary weights and the previous binary output to compute the f32 output.

```python
import tensorflow as tf
import qkeras

x = tf.keras.Input(shape=shape_in)
y = tf.keras.layers.Flatten()(x)
y = qkeras.QActivation(qkeras.quantized_bits(bits=8, integer=7))(y)
# First dense layer: s8 input, binary weights, binary output
y = qkeras.QDense(64,
                  kernel_quantizer=qkeras.binary(alpha=1),
                  bias_quantizer=qkeras.binary(alpha=1),
                  use_bias=True,
                  name="dense_0")(y)
y = tf.keras.layers.BatchNormalization()(y)
y = qkeras.QActivation(qkeras.binary(alpha=1))(y)
# Second dense layer: binary input and weights, f32 output
y = qkeras.QDense(10,
                  kernel_quantizer=qkeras.binary(alpha=1),
                  bias_quantizer=qkeras.binary(alpha=1),
                  use_bias=True,
                  name="dense_1")(y)
y = tf.keras.layers.Activation("softmax")(y)
model = tf.keras.Model(inputs=x, outputs=y)
```

```
Number of operations and param per C-layer
-------------------------------------------------------------------------------
c_id  m_id  name (type)                          #op (type)
-------------------------------------------------------------------------------
0     3     dense_0_qdense (dense_dqnn_dqnn)     50,176 (smul_s8_s1)
1     6     dense_1_qdense (dense_dqnn_dqnn)     650 (sxor_s1_s1)
2     7     activation (nl)                      150 (op_f32_f32)
-------------------------------------------------------------------------------
total                                            50,976

Number of operation types
---------------------------------------------
smul_s8_s1        50,176     98.4%
sxor_s1_s1           650      1.3%
op_f32_f32           150      0.3%
```

Figure 3 quantized dense layer example:

8.3. Quantized Convolution layer

For the quantized convolution layer, the pattern is similar to the quantized dense layer.

The following example shows the case where binary input and weights are used to compute a normalized binary output. The MaxPooling2D layer reduces the size of the activations.

```python
import tensorflow as tf
import qkeras

x = tf.keras.Input(shape=shape_in)
# Binary input
y = qkeras.QActivation(qkeras.binary(alpha=1))(x)
# Binary weights, no bias
y = qkeras.QConv2D(filters=16, kernel_size=(3, 3),
                   kernel_quantizer=qkeras.binary(alpha=1),
                   bias_quantizer=qkeras.binary(alpha=1),
                   use_bias=False,
                   padding="same",
                   name="dense_0")(y)
# MaxPooling2D placed before BatchNormalization
y = tf.keras.layers.MaxPooling2D(pool_size=(2, 2))(y)
y = tf.keras.layers.BatchNormalization()(y)
# Normalized binary output
y = qkeras.QActivation(qkeras.binary(alpha=1))(y)
model = tf.keras.Model(inputs=x, outputs=y)
```

Figure 4 quantized convolution layer example:

As a variation, the 'strides' argument can be used to avoid the MaxPooling2D layer.

```python
import tensorflow as tf
import qkeras

x = tf.keras.Input(shape=shape_in)
y = qkeras.QActivation(qkeras.binary(alpha=1))(x)
# strides=(2, 2) downsamples the activations instead of MaxPooling2D
y = qkeras.QConv2D(filters=16, kernel_size=(3, 3),
                   kernel_quantizer=qkeras.binary(alpha=1),
                   bias_quantizer=qkeras.binary(alpha=1),
                   use_bias=False,
                   strides=(2, 2),
                   padding="same",
                   name="dense_0")(y)
y = tf.keras.layers.BatchNormalization()(y)
y = qkeras.QActivation(qkeras.binary(alpha=1))(y)
model = tf.keras.Model(inputs=x, outputs=y)
```

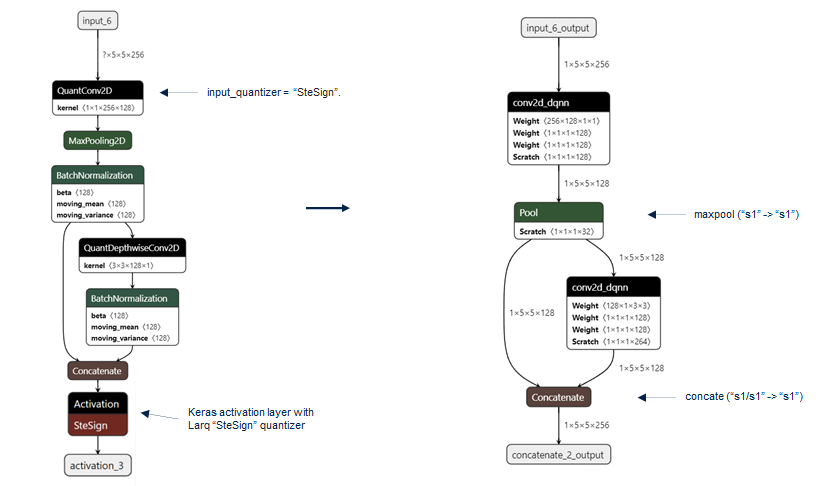
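Whether the strided variant produces the same activation shape as a convolution followed by MaxPooling2D can be checked with the Keras output-size rules: with padding="same" the output length is ceil(n / stride), and with padding="valid" it is floor((n - k) / stride) + 1, where MaxPooling2D uses "valid" padding and a stride equal to 'pool_size' by default. The helper 'out_size' below is a hypothetical illustration of these rules for one spatial axis.

```python
import math


def out_size(n: int, k: int, stride: int, padding: str) -> int:
    """Output length along one spatial axis, following the Keras conventions."""
    if padding == "same":
        return math.ceil(n / stride)
    if padding == "valid":
        return (n - k) // stride + 1
    raise ValueError(f"unsupported padding: {padding}")


for n in (28, 27):
    # 3x3 "same" convolution with stride 1, then MaxPooling2D(pool_size=(2, 2))
    pooled = out_size(out_size(n, 3, 1, "same"), 2, 2, "valid")
    # 3x3 "same" convolution with strides=(2, 2) and no pooling
    strided = out_size(n, 3, 2, "same")
    print(f"input {n}: conv + pool -> {pooled}, strided conv -> {strided}")
```

For an even input size both variants give the same spatial size; for an odd one they differ by one, which matters when activations must be concatenated, as in the residual case.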

8.4. Residual connections case

The following model shows how residual connections can be created to concatenate activations. Particular attention must be paid to the shapes of the tensors to be concatenated, since they must be the same.

Figure 5 residual connections case example:

...

```
Number of operations and param per C-layer
--------------------------------------------------------------------------------------------
c_id  m_id  name (type)                                      #op (type)
--------------------------------------------------------------------------------------------
0     1     quant_conv2d_5_conv2d (conv2d_dqnn)              819,200 (sxor_s1_s1)
1     2     max_pooling2d (pool)                             12,800 (op_s1_s1)
2     4     quant_depthwise_conv2d_2_conv2d (conv2d_dqnn)    28,800 (sxor_s1_s1)
3     6     concatenate_2 (concat)                           0 (op_s1_s1)
--------------------------------------------------------------------------------------------
total                                                        860,800

Number of operation types
---------------------------------------------
sxor_s1_s1       848,000     98.5%
op_s1_s1          12,800      1.5%
```

...

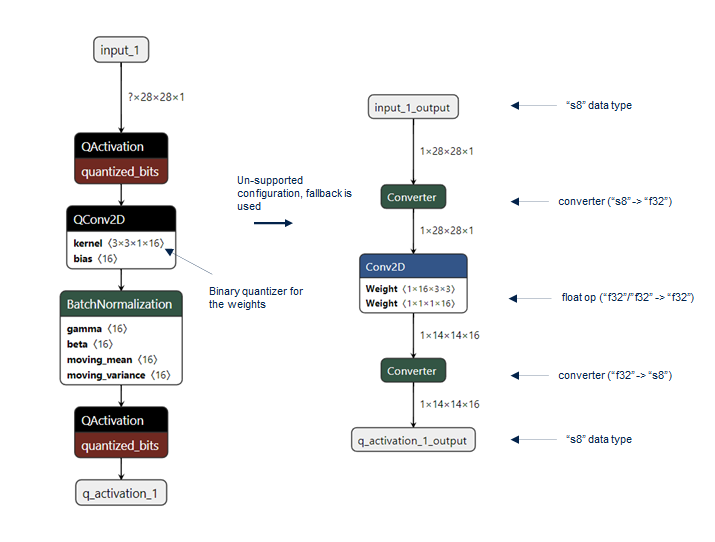

8.5. Fallback to 32-bit floating point kernels

The following code shows the case where the requested configuration 's8xs1->s8' is not supported and the fallback is applied. This is a typical case where the user can modify the model (after a pre-analyze step) to keep this layer in float, limiting the possible loss of precision.

```python
import tensorflow as tf
import qkeras

x = tf.keras.Input(shape=shape_in)
# s8 input
y = qkeras.QActivation(qkeras.quantized_bits(bits=8, integer=7))(x)
# Binary weights and bias: no optimized kernel for s8 x s1 -> s8
y = qkeras.QConv2D(filters=16, kernel_size=(3, 3), strides=(2, 2),
                   kernel_quantizer=qkeras.binary(alpha=1),
                   bias_quantizer=qkeras.binary(alpha=1),
                   use_bias=True,
                   padding="same",
                   name="dense_0")(y)
y = tf.keras.layers.BatchNormalization()(y)
# s8 output
y = qkeras.QActivation(qkeras.quantized_bits(bits=8, integer=7))(y)
model = tf.keras.Model(inputs=x, outputs=y)
```

Figure 6 fallback to 32-bit floating point example:

```
Number of operations and param per C-layer
----------------------------------------------------------------------------
c_id  m_id  name (type)                         #op (type)
----------------------------------------------------------------------------
0     0     input_1_0_conversion (conv)         1,568 (conv_s8_f32)
1     3     dense_0_conv2d (conv2d)             28,240 (smul_f32_f32)
2     4     q_activation_1 (conv)               6,272 (conv_f32_s8)
----------------------------------------------------------------------------
total                                           36,080

Number of operation types
---------------------------------------------
conv_s8_f32        1,568      4.3%
smul_f32_f32      28,240     78.3%
conv_f32_s8        6,272     17.4%
```
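Such a fallback can be anticipated by looking the (input, output, weight) combination up in the “Optimized Convolution layers” table. The sketch below transcribes the rows of that table as data, ignoring the bias format and treating DoReFa-quantized s8 as plain s8; the helper is hypothetical and not an stm32ai API, so the analyze report remains the reference.

```python
from typing import FrozenSet, Tuple

# (input, output, weight) formats of the optimized convolution kernels,
# transcribed from the "Optimized Convolution layers" table.
OPTIMIZED_CONV: FrozenSet[Tuple[str, str, str]] = frozenset({
    ("s1", "s1", "s1"),
    ("s1", "s8", "s1"),
    ("s1", "f32", "s1"),
    ("s8", "s1", "s8"),
    ("s8", "s8", "s8"),
})


def has_optimized_conv_kernel(inp: str, out: str, weight: str) -> bool:
    """True when the (input, output, weight) combination appears in the table."""
    return (inp, out, weight) in OPTIMIZED_CONV


# Configuration of the example above: s8 input, binary (s1) weights, s8 output.
print(has_optimized_conv_kernel("s8", "s8", "s1"))  # not listed: fallback expected
```

Full f32 convolutions do not appear in the table but use the standard floating-point kernels, so a False result is only meaningful for quantized combinations.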

9. Building an efficient DQNN or BNN model for stm32ai

Consider all the recommendations highlighted in the Larq documentation[Ex 4] or in the QKeras notebooks[Ex 5] [Ex 6] to design an efficient DQNN or BNN model for an STM32 device. In particular:

- It is preferable to keep the first and last layers in higher precision: 's8' or 'f32'

- Use the 'BatchNormalization' layer

- Place the 'MaxPool' layer before the 'BatchNormalization' layer

- Due to the way binary tensors are encoded (see the “C-layout of the s1 type” section), it is recommended to use a number of channels that is a multiple of 32 to optimize the flash memory and RAM sizes, and the MAC/cycle ratio. This is not mandatory, however.
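The benefit of a multiple of 32 channels follows from the s1 layout: each spatial position of a binary activation tensor occupies ceil(C / 32) 32-bit words, so any remainder is padding. The sketch below computes the packed size of an H x W x C binary tensor under that rule alone; 's1_tensor_bytes' is a hypothetical helper, and any alignment or other overhead in the generated code is ignored.

```python
import math


def s1_tensor_bytes(h: int, w: int, c: int) -> int:
    """Bytes of an H x W x C binary tensor packed on 32-bit words along C."""
    words_per_position = math.ceil(c / 32)
    return h * w * words_per_position * 4


for c in (32, 33, 64):
    size = s1_tensor_bytes(14, 14, c)
    used = 100 * (14 * 14 * c) / (size * 8)  # share of bits holding real channels
    print(f"C={c}: {size} B, {used:.0f}% of the bits carry data")
```

With 33 channels, the tensor takes as much memory as with 64 channels, and only about half of its bits carry data.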

9.1. Pre-analyze step

It is recommended to run the 'analyze' command regularly during the design of the DQNN/BNN model, before performing a complete training, to check that the model is efficiently deployed (no fallback used). This avoids using a quantized layer that is not supported, or that brings no gain in terms of memory usage when deployed on an STM32 target.
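The operation-type summary of the analyze report lends itself to a quick automated check during this pre-analyze step. The following Python sketch parses lines in the “type count percent” layout shown in the reports of this article and sums the share of operation types whose name mentions f32; the function names, the f32 heuristic, and the 50% threshold are hypothetical choices, not stm32ai features.

```python
import re
from typing import Dict

# One line of the "Number of operation types" section, e.g. "sxor_s1_s1  848,000  98.5%"
LINE = re.compile(r"^\s*(\w+)\s+([\d,]+)\s+([\d.]+)%\s*$")


def op_type_shares(report: str) -> Dict[str, float]:
    """Map each operation type found in the report text to its share in percent."""
    shares: Dict[str, float] = {}
    for line in report.splitlines():
        match = LINE.match(line)
        if match:
            shares[match.group(1)] = float(match.group(3))
    return shares


def f32_share(shares: Dict[str, float]) -> float:
    """Heuristic: total share of operation types whose name mentions f32."""
    return sum(p for name, p in shares.items() if "f32" in name)


# Operation-type summary of the fallback example above.
summary = """
conv_s8_f32        1,568      4.3%
smul_f32_f32      28,240     78.3%
conv_f32_s8        6,272     17.4%
"""
shares = op_type_shares(summary)
if f32_share(shares) > 50.0:  # hypothetical threshold
    print("mostly f32 work: check for a fallback before training further")
```

Applied to the fallback example, the f32-related types account for all the operations, whereas the residual example reports none.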

10. FAQ

10.1. Is it possible to mix the quantized Larq and QKeras layers?

From the stm32ai point of view, yes. When the model is imported, it is translated into an independent internal representation before the different optimization passes and the rendering stage are applied. However, this is not recommended: even though both libraries (Larq and QKeras) are based on the Keras API, they are designed independently, and there is no guarantee that the training converges to a good level of precision.

11. Terms and references

11.1. Terms

| Term | Description |
|---|---|
| API | Embedded inference client API |
| BNN | Binarized neural network |
| CLI | stm32ai - Command-line interface |
| DQNN | Deep quantized neural network |
| PTQ | Post-training quantization |
| QAT | Quantization aware training |
| TFL | TensorFlow™ Lite toolbox |
| TFLM | TensorFlow™ Lite for Microcontrollers |

11.2. STMicroelectronics references

See also:

11.3. Third-party references