1. STM32CUBE.AI enabled OpenMV firmware

This tutorial walks you through the process of integrating your own neural network into the OpenMV environment.

The OpenMV open-source project provides the source code for compiling the OpenMV H7 firmware with STM32Cube.AI enabled. The process for using Cube.AI with OpenMV is described in the following figure.

- Train your neural network using your favorite deep learning framework.

- Convert your trained network to optimized C code using the ST Cube.AI tool

- Download the OpenMV firmware source code, and

- Add the generated files to the firmware source code

- Compile with GCC toolchain

- Flash the board using OpenMV IDE

- Program the board with microPython and perform inference

Licence information:

- X-CUBE-AI is delivered under the Mix Ultimate Liberty+OSS+3rd-party V1 software license agreement SLA0048

- OpenMV is delivered under the MIT licence

1.1. Requirements

- For Windows users only: Windows Subsystem for Linux (WSL) with the Ubuntu 18.04 distribution, which provides a full Linux environment. All following commands should be executed in the Ubuntu WSL shell.

- stm32ai command line to generate the optimized code. Download the latest version from ST website

  - Unzip the archive from the ST website, rename the .pack file to .zip, and extract it somewhere. In the Documentation folder you will find the instructions on how to add stm32ai to your PATH.

- GNU Arm Toolchain Version 7-2018-q2 to compile the firmware. Download from ARM website

  - Extract the archive somewhere and add gcc-arm-none-eabi-7-2018-q2-update/bin/ to your PATH. Make sure the command arm-none-eabi-gcc points to the location of the installation.

- Python 3. Can be installed using Miniconda. Make sure that when you type python in your shell, Python 3.x.x is called. If not, add it manually to your PATH.

- OpenMV IDE to develop microPython and flash the board. Download from the OpenMV website

- git in order to clone the project. Can be installed by running sudo apt install git
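The requirements above can be checked with a short script before going further. This is a minimal sketch, assuming the tools are expected on PATH under the command names used in this tutorial (stm32ai, arm-none-eabi-gcc, git); the helper name missing_tools is hypothetical and not part of any of these projects.

```python
import shutil
import sys

# Command names taken from the requirements list of this tutorial.
REQUIRED_TOOLS = ["stm32ai", "arm-none-eabi-gcc", "git"]


def missing_tools(tools):
    """Return the entries of `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    # The interpreter running this script stands in for the `python` command.
    if sys.version_info.major != 3:
        print("Warning: this interpreter is not Python 3")
    missing = missing_tools(REQUIRED_TOOLS)
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All required tools were found on PATH")
```

The script only reports whether each command can be found; it does not check versions.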

1.2. Step 1 - Clone the OpenMV project

In this section we will clone the OpenMV project, check out a known working version and create a branch. Then we need to initialize the submodules, which will clone the OpenMV dependencies, such as microPython.

git clone https://github.com/openmv/openmv.git

cd openmv

git checkout b4bad33 -b cubeai

git submodule update --init --recursive

Troubleshooting: During the command git submodule update --init --recursive, an error about lwip may appear. In this case, go to src/micropython/, edit the .gitmodules file on line 10 and change the URL to https://github.com/lwip-tcpip/lwip.git, then execute git submodule sync and git submodule update --init --recursive again.
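If you prefer not to edit .gitmodules by hand, the URL change can be scripted. The following is a rough sketch, assuming the file follows the usual .gitmodules layout with one url = ... line per submodule; the function name patch_lwip_url and the sample content are hypothetical, and only the replacement URL comes from this tutorial.

```python
import re

# Replacement URL given in the troubleshooting note.
NEW_LWIP_URL = "https://github.com/lwip-tcpip/lwip.git"


def patch_lwip_url(gitmodules_text, new_url=NEW_LWIP_URL):
    """Point every `url = ...` line that mentions lwip at `new_url`."""
    pattern = re.compile(r"^([ \t]*url[ \t]*=[ \t]*).*lwip.*$", re.MULTILINE)
    return pattern.sub(lambda match: match.group(1) + new_url, gitmodules_text)


# Hypothetical file content, used only to illustrate the substitution.
sample = (
    '[submodule "lib/example"]\n'
    "\tpath = lib/example\n"
    "\turl = https://example.com/example.git\n"
    '[submodule "lib/lwip"]\n'
    "\tpath = lib/lwip\n"
    "\turl = https://example.com/old/lwip.git\n"
)
patched = patch_lwip_url(sample)
print(patched)
```

To apply it for real, read src/micropython/.gitmodules, pass its content through the function, write the result back, and then run the two git commands from the troubleshooting note.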

1.3. Step 2 - Add the Cube.AI library to OpenMV

Now that the OpenMV firmware is downloaded, we need to copy over the Cube.AI runtime library and header files into the project.

Inside src/stm32cubeai directory, run:

$ cd openmv/src/stm32cubeai

$ mkdir -p AI/{Inc,Lib}

$ mkdir data

Then copy the files from Cube.AI to the AI directory:

$ cd openmv/src/stm32cubeai

$ cp <cube-ai-path>/Middlewares/ST/AI/Inc/* ./AI/Inc/

$ cp <cube-ai-path>/Middlewares/ST/AI/Lib/ABI2.1/STM32H7/NetworkRuntime*_CM7_IAR.a ./AI/Lib/NetworkRuntime_CM7_GCC.a

After this operation, the AI directory should look like this

AI/
├── Inc
│   ├── ai_common_config.h
│   ├── ai_datatypes_defines.h
│   ├── ai_datatypes_format.h
│   ├── ai_datatypes_internal.h
│   ├── ai_log.h
│   ├── ai_math_helpers.h
│   ├── ai_network_inspector.h
│   ├── ai_platform.h
│   ├── ...
├── Lib
│   └── NetworkRuntime_CM7_GCC.a
└── LICENSE

1.4. Step 3 - Generate the code for a NN model

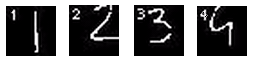

In this section, we will train a convolutional neural network to recognize hand-written digits. We will then generate optimized C code for this network with STM32Cube.AI. These files will be added to the OpenMV firmware source code.

1.4.1. Code generation

The Convolutional Neural Network for digit classification (MNIST) from Keras will be used as an example. If you want to train the network, you need to have Keras installed.

Go to src/stm32cubeai/example.

To train the network and save the model to disk, run

$ cd src/stm32cubeai/example

$ python mnist_cnn.py

Alternatively, you can skip this step and use the pre-trained mnist_cnn.h5 file provided.

Using the stm32ai command line tool, generate the code

$ stm32ai generate -m mnist_cnn.h5 -o ../data/

The following files will be generated in src/stm32cubeai/data:

- network.h

- network.c

- network_data.h

- network_data.c
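Before compiling, it can be worth confirming that all four files are really there. A minimal sketch, assuming the file names listed above; the helper missing_generated_files is hypothetical, and the demonstration runs on a temporary directory rather than on the real data folder.

```python
import os
import tempfile

# File names listed in this tutorial as the output of `stm32ai generate`.
GENERATED_FILES = ("network.h", "network.c", "network_data.h", "network_data.c")


def missing_generated_files(data_dir):
    """Return the expected generated files that are absent from `data_dir`."""
    return [name for name in GENERATED_FILES
            if not os.path.isfile(os.path.join(data_dir, name))]


# Demonstration on a throwaway directory holding only two of the four files.
with tempfile.TemporaryDirectory() as demo_dir:
    for name in ("network.h", "network.c"):
        open(os.path.join(demo_dir, name), "w").close()
    demo_missing = missing_generated_files(demo_dir)

print(demo_missing)  # ['network_data.h', 'network_data.c']
```

In practice you would call missing_generated_files("src/stm32cubeai/data") from the openmv directory and expect an empty list.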

1.4.2. Preprocessing

If you need to do some special preprocessing before running the inference, you should modify the function ai_transform_input located in src/stm32cubeai/nn_st.c. By default, the code does the following:

- Simple resizing (subsampling)

- Conversion from unsigned char to float

- Scaling pixels from [0,255] to [0, 1.0]

The provided example might just work out of the box, but you may want to take a look at this function.
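To make the default behaviour easier to reason about, here is a plain Python sketch of the same three steps. It assumes the 16.16 fixed-point nearest-neighbour subsampling used by ai_transform_input and leaves out the region-of-interest handling; the function name transform_input and the flat row-major pixel list are illustrative choices, not the firmware API.

```python
def transform_input(pixels, width, height, out_w, out_h):
    """Subsample a grayscale image and scale it to floats in [0, 1].

    `pixels` is a row-major list of `width * height` values in 0..255.
    """
    # Source-to-destination step in 16.16 fixed point, as in the C code.
    x_ratio = ((width << 16) // out_w) + 1
    y_ratio = ((height << 16) // out_h) + 1

    out = []
    for y in range(out_h):
        sy = (y * y_ratio) >> 16          # source row for this output row
        for x in range(out_w):
            sx = (x * x_ratio) >> 16      # source column for this output column
            p = pixels[sy * width + sx]   # unsigned 8-bit pixel value
            out.append(p / 255.0)         # conversion to float and scaling
    return out


# 4x4 test image with values 0, 17, 34, ..., 255, reduced to 2x2.
image = [17 * i for i in range(16)]
small = transform_input(image, 4, 4, 2, 2)
print(small)  # samples the pixels with values 0, 34, 136 and 170
```

With a 2x reduction the sketch keeps every second pixel of every second row, which is what "simple resizing (subsampling)" means here: no averaging or interpolation is applied.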

1.5. Step 4 - Compile

Before compiling, please check the version of the GCC Arm toolchain by running:

arm-none-eabi-gcc --version

The output should be:

arm-none-eabi-gcc (GNU Tools for Arm Embedded Processors 7-2018-q2-update)

If it is not, please make sure you installed it and that it is in your PATH.

Check that there are no spaces in the path of the current directory, otherwise make will fail. You can check by running pwd. If there are spaces, move this directory to a path with no spaces.
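Both checks can be automated. The sketch below assumes that the release string 7-2018-q2-update appears in the output of arm-none-eabi-gcc --version, as in the expected output quoted above; the helper names are hypothetical.

```python
import os
import subprocess

# Release string expected in the output of `arm-none-eabi-gcc --version`.
EXPECTED_TOOLCHAIN = "7-2018-q2-update"


def is_expected_toolchain(version_output):
    """True if the version text mentions the expected toolchain release."""
    return EXPECTED_TOOLCHAIN in version_output


def path_has_spaces(path):
    """True if `path` contains a space, which would make the build fail."""
    return " " in path


if __name__ == "__main__":
    print("Spaces in current path:", path_has_spaces(os.getcwd()))
    try:
        result = subprocess.run(["arm-none-eabi-gcc", "--version"],
                                capture_output=True, text=True, check=False)
        print("Expected toolchain:", is_expected_toolchain(result.stdout))
    except OSError:
        # Raised when the command cannot be started, e.g. not on PATH.
        print("arm-none-eabi-gcc could not be executed")
```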

Once this is done, edit omv/boards/OPENMV4/omv_boardconfig.h line 76 and set OMV_HEAP_SIZE to 230K. This will lower the heap section in RAM allowing more space for our neural network activation buffers.

Execute the following command to compile:

$ cd openmv/src

$ make clean

$ make CUBEAI=1

Note: This may take a while. You can speed up the process by adding -j4 or more (depending on your CPU) to the make command, but it can also be the right time to have a coffee.
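One way to choose the -j value is to use the number of CPU cores reported by the operating system. This is only a sketch: the helper build_command is hypothetical, and the only parts taken from this tutorial are make, the -j option and CUBEAI=1.

```python
import os


def build_command(jobs=None):
    """Return the make invocation as an argument list.

    When `jobs` is None, the number of CPU cores is used (at least 1).
    """
    if jobs is None:
        jobs = os.cpu_count() or 1
    return ["make", "-j{}".format(jobs), "CUBEAI=1"]


print(" ".join(build_command(jobs=4)))  # make -j4 CUBEAI=1
```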

Troubleshooting: If the compilation fails with a message saying that the .heap section overflows RAM1, you can edit the file src/omv/boards/OPENMV4/omv_boardconfig.h, lower OMV_HEAP_SIZE by a few kilobytes and try to build again. Run make clean between builds.

1.6. Step 5 - Flash the firmware

Plug the OpenMV camera to the computer using a micro-USB to USB cable.

Open OpenMV IDE. From the toolbar select Tools > Run Bootloader. Select the firmware file, which is located in src/build/bin/firmware.bin, and follow the instructions. Once this is done, you can click the Connect icon at the bottom left of the IDE window.

1.7. Step 6 - Program with microPython

Open OpenMV IDE, and click the Connect button on the bottom-left side.

You can start from this example code, which runs the MNIST example we used above (the code is provided as example_script.py in this directory):

# STM32 CUBE.AI on OpenMV MNIST Example
import sensor, image, time, nn_st

sensor.reset()                          # Reset and initialize the sensor.
sensor.set_contrast(3)
sensor.set_brightness(0)
sensor.set_auto_gain(True)
sensor.set_auto_exposure(True)
sensor.set_pixformat(sensor.GRAYSCALE)  # Set pixel format to Grayscale
sensor.set_framesize(sensor.QQQVGA)     # Set frame size to 80x60
sensor.skip_frames(time = 2000)         # Wait for settings to take effect.
clock = time.clock()                    # Create a clock object to track the FPS.

# [CUBE.AI] Initialize the network
net = nn_st.loadnnst('network')

nn_input_sz = 28 # The NN input is 28x28

while(True):
    clock.tick()                        # Update the FPS clock.
    img = sensor.snapshot()             # Take a picture and return the image.

    # Crop in the middle (avoids vignetting)
    img.crop((img.width()//2-nn_input_sz//2,
              img.height()//2-nn_input_sz//2,
              nn_input_sz,
              nn_input_sz))

    # Binarize the image
    img.midpoint(2, bias=0.5, threshold=True, offset=5, invert=True)

    # [CUBE.AI] Run the inference
    out = net.predict(img)
    print('Network argmax output: {}'.format( out.index(max(out)) ))
    img.draw_string(0, 0, str(out.index(max(out))))
    print('FPS {}'.format(clock.fps())) # Note: OpenMV Cam runs about half as fast when connected
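The crop rectangle in the script is easier to follow with concrete numbers. The sketch below reproduces the same arithmetic in plain Python, with no OpenMV modules, for the 80x60 frame and the 28x28 network input; the helper name center_crop_rect is hypothetical.

```python
def center_crop_rect(width, height, size):
    """Return (x, y, w, h) of a size x size square centred in the frame."""
    return (width // 2 - size // 2, height // 2 - size // 2, size, size)


rect = center_crop_rect(80, 60, 28)  # QQQVGA frame, 28x28 network input
print(rect)  # (26, 16, 28, 28)
```

The digit therefore has to sit near the centre of the frame, inside a 28x28 pixel window, to be seen by the network.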

Take a white sheet of paper and draw numbers with a black pen, point the camera towards the paper. The code should yield the following output:

1.8. (Optional step) Quantize the model

1.8.1. Quantization script with TensorflowLite Converter

Model quantization reduces the weight size by a factor of 4 and can also speed up inference by up to a factor of 3. For more information about quantization, check the Cube.AI documentation on quantization.

In this article we will use TensorFlowLite Converter tool to quantize our mnist network. In the stm32cubeai/example directory, create a new python file named quantize.py and paste the following lines:

'''
Example of quantization for mnist_cnn Keras model
'''

import keras
from keras.datasets import mnist
from keras.models import load_model
import numpy as np
import tensorflow as tf

# input image dimensions
img_rows, img_cols = 28, 28

# load the data, split between train and test sets
(x_train, y_train), (x_test, y_test) = mnist.load_data()

# reshape x_train
x_train = x_train.reshape(x_train.shape[0], img_rows, img_cols, 1)

# This function is needed to give TFLite converter a representative dataset of inputs
def representative_dataset_gen():
    for img in x_train[:512]:
        img = img.astype(np.float32)
        img /= 255.0
        yield [np.expand_dims(img, axis=0)]

converter = tf.lite.TFLiteConverter.from_keras_model_file('./mnist_cnn.h5')
converter.representative_dataset = representative_dataset_gen
converter.target_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8] # Use INT8 builtins operators
converter.inference_input_type = tf.uint8     # Our input image will be in uint8 format
converter.inference_output_type = tf.float32  # The output from softmax will be in floating point
quant_model = converter.convert()             # Call the converter

# Write the quantized model to a file
with open('mnist_cnn_quant.tflite', "wb") as f:
    f.write(quant_model)

1.8.2. Analyse the quantized model

In a shell, run:

$ cd openmv/src/stm32cubeai/example

$ stm32ai analyse -m mnist_cnn_quant.tflite

# You can compare with the floating point model

# stm32ai analyse -m mnist_cnn.h5

A comparison between the floating point and the quantized models yields the following results:

| Model type | ROM usage (weights) | RAM usage (activations and I/Os) |
|---|---|---|
| Floating point | 136 KB | 51 KB |
| Quantized | 34 KB | 14 KB |

We notice a factor of about 1/4 between floating point and integer, due to the move from a 32-bit to an 8-bit representation of weights and tensors.
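The factor can be computed directly from the figures in the table. The short sketch below uses only those four numbers; the function name reduction_factor is illustrative.

```python
def reduction_factor(before_kb, after_kb):
    """How many times smaller the quantized figure is."""
    return before_kb / after_kb


rom_factor = reduction_factor(136, 34)  # weights
ram_factor = reduction_factor(51, 14)   # activations and I/Os

print("ROM: {:.2f}x smaller".format(rom_factor))  # ROM: 4.00x smaller
print("RAM: {:.2f}x smaller".format(ram_factor))  # RAM: 3.64x smaller
```

The weights shrink by exactly 4, while the RAM figure comes out slightly below 4 for this model.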

1.8.3. Generate the code

To generate the C code, run:

stm32ai generate -m mnist_cnn_quant.tflite -o ../data

The generated .c and .h files will be placed in stm32cubeai/data.

1.8.4. Edit the preprocessing function

Now that we are working with uint8 data instead of floating point, we need to update the ai_transform_input function located in nn_st.c.

If we inspect the mnist_cnn_quant.tflite file with Netron, we notice that the input is expected to be quantized with the following scaling parameter: 0.0039. This value is equal to 1/255 and means that the floating point value is equal to the quantized value times 1/255. So in this case we don't need to apply any transformation to the input, as we already have greyscale values ranging from 0 to 255.
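The relationship between the quantized input and the floating point input can be checked numerically. This sketch assumes the usual affine quantization formula, real = scale * (q - zero_point), with a zero point of 0; the zero point is an assumption consistent with the description above, not a value read from the model.

```python
SCALE = 1.0 / 255.0  # shown as 0.0039 when rounded to four decimals
ZERO_POINT = 0       # assumption: not read from the model file


def dequantize(q, scale=SCALE, zero_point=ZERO_POINT):
    """Floating point value represented by the quantized value `q`."""
    return scale * (q - zero_point)


print(round(SCALE, 4))  # 0.0039
print(dequantize(0))    # 0.0

# Every 8-bit pixel value represents what the float preprocessing produced.
max_error = max(abs(dequantize(p) - p / 255.0) for p in range(256))
print(max_error < 1e-12)  # True
```

Under that assumption, passing the raw pixel value p to the quantized network is equivalent to passing p / 255 to the floating point one, which is why the scaling step can be dropped.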

Back to the code, we just need to get rid of the conversion to floating point and copy the greyscale value to the neural network input buffer, as shown in the listing below.

void ai_transform_input(ai_buffer *input_net, image_t *img, ai_u8 *input_data,
                        rectangle_t *roi) {
  // Example for MNIST CNN

  // We don't need this casting to floating point
  // ai_float *_input_data = (ai_float *)input_data;

  int x_ratio = (int)((roi->w << 16) / input_net->width) + 1;
  int y_ratio = (int)((roi->h << 16) / input_net->height) + 1;

  for (int y = 0, i = 0; y < input_net->height; y++) {
    int sy = (y * y_ratio) >> 16;
    for (int x = 0; x < input_net->width; x++, i++) {
      int sx = (x * x_ratio) >> 16;
      uint8_t p = IM_GET_GS_PIXEL(img, sx + roi->x, sy + roi->y);

      // We don't need this conversion
      // _input_data[i] = (float)(p / 255.0f);

      // instead we simply set the input_data to the greyscale value of input
      input_data[i] = p;
    }
  }
}

1.8.5. Compile and run

A few modifications must be made to allow the quantized model to be compiled. First, edit stm32cubeai/cube.mk line 26 to add -lgcc. The line should look like this after the modification:

LIBS += -l:NetworkRuntime_CM7_GCC.a -Lstm32cubeai/AI/Lib -lc -lm -lgcc

Then, edit the file stm32cubeai/Makefile line 20 to add some source files from the CMSIS DSP library. The full block of code should look like this after the modification:

SRCS += $(addprefix ../cmsis/src/dsp/SupportFunctions/,\
    arm_float_to_q7.c\
    arm_float_to_q15.c\
    arm_q7_to_float.c\
    arm_q15_to_float.c\
    arm_q7_to_q15.c\
    arm_q15_to_q7.c\
    arm_fill_q7.c\
    arm_fill_q15.c\
    arm_copy_q15.c\
    arm_copy_q7.c\
    )

Note that 4 files have been added at the end of the list.

The steps to compile the firmware and run the python code are exactly the same as for the floating point case. Consider running make clean between builds.

2. Documentation of microPython CUBE.AI wrapper

2.1. loadnnst

nn_st.loadnnst(network_name)

Initialize the network named network_name

Arguments:

network_name: String, usually 'network'

Returns:

- A network object, used to make predictions

Example:

import nn_st

net = nn_st.loadnnst('network')

2.2. predict

out = net.predict(img)

Runs a network prediction with img as input

Arguments:

img: Image object, from the image module of nn_st. Usually taken from sensor.snapshot()

Returns:

- Network predictions as a Python list

Example:

import sensor, image, nn_st

# Init the sensor

sensor.reset()

sensor.set_pixformat(sensor.RGB565)

sensor.set_framesize(sensor.QVGA)

# Init the network

net = nn_st.loadnnst('network')

# Capture a frame

img = sensor.snapshot()

# Do the prediction

output = net.predict(img)
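Since predict returns a plain list, the result can be post-processed with ordinary list operations. The sketch below ranks the classes by score; the helper top_k is hypothetical and the scores are made-up values for illustration, not real network output.

```python
def top_k(scores, k=3):
    """Return the k best (class_index, score) pairs, highest score first."""
    ranked = sorted(enumerate(scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Made-up scores for the ten MNIST classes, for illustration only.
example_scores = [0.01, 0.02, 0.90, 0.03, 0.01, 0.0, 0.0, 0.02, 0.01, 0.0]

best_two = top_k(example_scores, k=2)
print(best_two)  # [(2, 0.9), (3, 0.03)]
```

The first pair gives the same class index as the out.index(max(out)) expression used in the MNIST script, and the second pair shows how far behind the runner-up is.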